AMD just launched a GPU that's 50 percent bigger than Big Navi

But it's been neutered so it can no longer game.

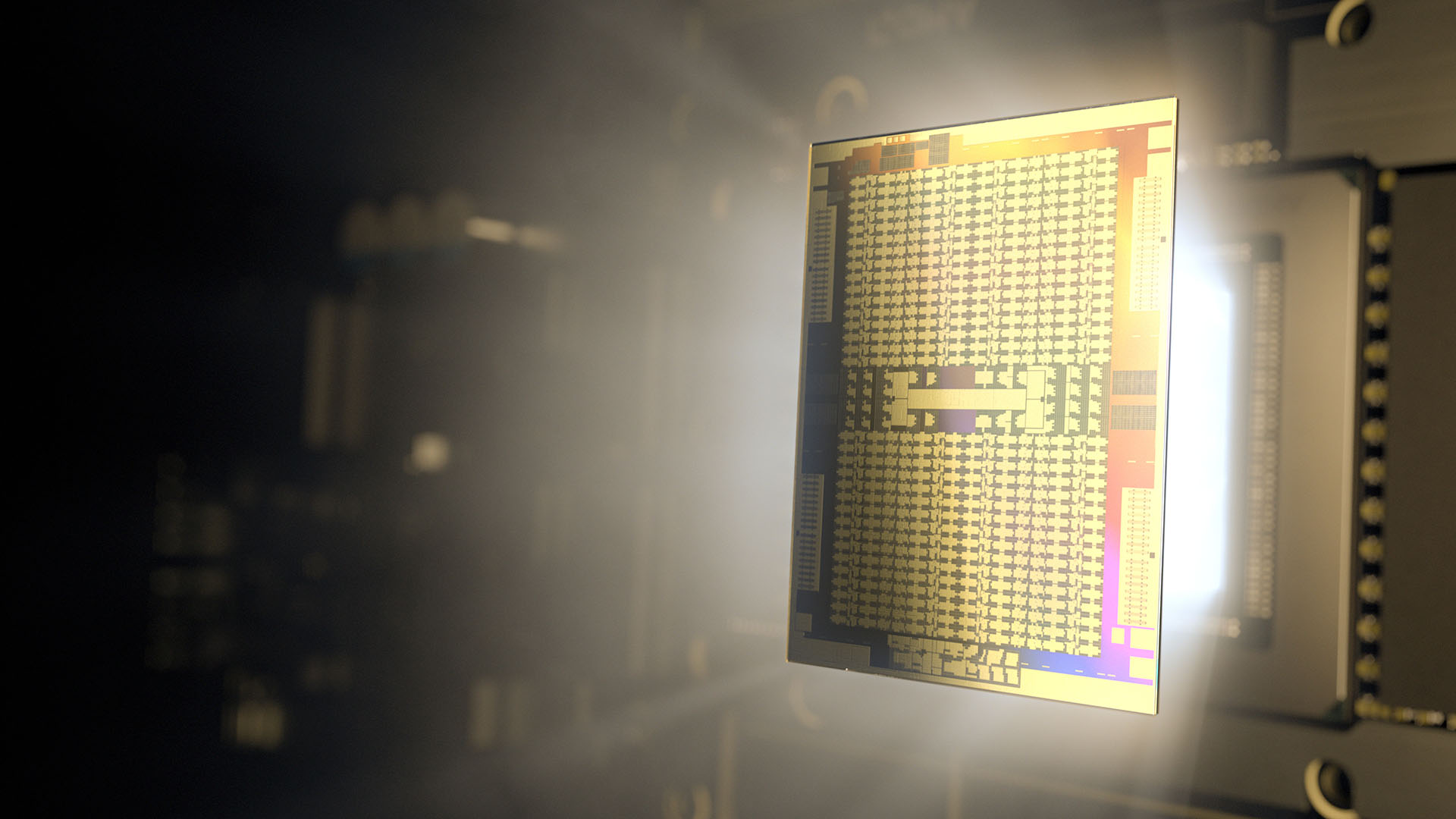

Back at the announcement of RDNA, AMD made it clear that it had reached a fork in the road with GCN, at the time its sole graphics architecture. On the one hand, it had gaming requirements to meet. On the other, datacentre suits were demanding big number crunching. To placate both parties, AMD's Radeon group created two different architectures: RDNA and CDNA. What we're seeing today is the first card to use the latter: the Instinct MI100.

The MI100 is a serious number-crunching GPU, and is intended to be placed amongst the mess of cables found in any good datacentre. Or the lack of mess at the really good ones. The card itself offers no graphics output (or any fixed-function graphics blocks whatsoever), meaning you couldn't hook this card up to your monitor for a little back-of-the-warehouse gaming even if you wanted to. Sorry.

It's a shame, too, because the MI100 houses 120 Compute Units. For comparison (rough comparison, mind), the so-called 'Big Navi' GPU found within the RX 6900 XT comes with 80 CUs. They're completely different architectures, but that doesn't make the MI100 any less of a GPU monster.

The chip is designed to accelerate HPC and AI workloads, and AMD says it's one of the best around at doing so. By its own numbers, AMD puts the MI100 significantly ahead of Nvidia's A100, thanks in part to a new 'Matrix Core' (HPC gets all the cool names) that accelerates certain workloads.

That matrix acceleration takes the card's standard 23.1 TFLOPS of FP32 performance to 46.1 TFLOPS when using MFMA (matrix fused multiply-add) instructions, a new family of wavefront-level instructions from AMD. As such, the uplift isn't automatic: only workloads written to use those instructions will see it.
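Those headline figures fall out of some simple arithmetic. Here's a quick sketch in Python, assuming 64 FP32 lanes per Compute Unit, one fused multiply-add (two FLOPs) per lane per clock, and a peak engine clock of 1,502MHz. None of those three figures come from the announcement above, so treat them as our assumptions; the matrix number is simply taken as double the vector rate, which is what AMD's quoted figures imply.

```python
# Back-of-the-envelope peak FP32 throughput for the Instinct MI100.
# Assumptions (not from the announcement): 64 FP32 lanes per CU,
# an FMA counts as 2 FLOPs, and a 1,502MHz peak engine clock.

COMPUTE_UNITS = 120           # from AMD's spec
LANES_PER_CU = 64             # assumed: FP32 ALUs per Compute Unit
FLOPS_PER_LANE_PER_CLOCK = 2  # one fused multiply-add = a multiply plus an add
PEAK_CLOCK_GHZ = 1.502        # assumed peak engine clock


def peak_tflops(cus, lanes, flops_per_clock, clock_ghz):
    """Peak throughput in TFLOPS: CUs x lanes x FLOPs per clock x clock (GHz)."""
    gflops = cus * lanes * flops_per_clock * clock_ghz
    return gflops / 1000


vector_fp32 = peak_tflops(COMPUTE_UNITS, LANES_PER_CU,
                          FLOPS_PER_LANE_PER_CLOCK, PEAK_CLOCK_GHZ)
# Assumed: MFMA doubles the FP32 rate, matching the quoted 46.1 TFLOPS.
matrix_fp32 = 2 * vector_fp32

print(f"Vector FP32: {vector_fp32:.1f} TFLOPS")  # prints: Vector FP32: 23.1 TFLOPS
print(f"Matrix FP32: {matrix_fp32:.1f} TFLOPS")  # prints: Matrix FP32: 46.1 TFLOPS
```

It's a theoretical peak, mind: real workloads rarely keep every lane busy on every clock.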


The fun doesn't stop there for some lucky datacentre engineer, though. The MI100 comes with 32GB of HBM2 memory (for a whopping 1.23TB/s of memory bandwidth) and PCIe 4.0 support, all within a 300W TDP (the same as the upcoming RX 6800 XT and RX 6900 XT).
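That bandwidth figure can be sanity-checked the same way. This sketch assumes four HBM2 stacks, each with the standard 1,024-bit interface, running at 2.4Gbps per pin; the stack count and data rate are our assumptions rather than figures from AMD's announcement.

```python
# Rough peak memory bandwidth for the MI100's HBM2.
# Assumptions (not from the announcement): four HBM2 stacks with a
# 1,024-bit interface each, running at 2.4Gbps per pin.

STACKS = 4             # assumed number of HBM2 stacks
BITS_PER_STACK = 1024  # standard HBM2 interface width per stack
GBPS_PER_PIN = 2.4     # assumed per-pin data rate (1.2GHz, double data rate)


def peak_bandwidth_tbs(stacks, bits_per_stack, gbps_per_pin):
    """Peak bandwidth in TB/s: bus width (bits) x per-pin rate / 8 bits per byte."""
    bus_width_bits = stacks * bits_per_stack
    gb_per_s = bus_width_bits * gbps_per_pin / 8
    return gb_per_s / 1000


bandwidth = peak_bandwidth_tbs(STACKS, BITS_PER_STACK, GBPS_PER_PIN)
print(f"Peak bandwidth: {bandwidth:.2f} TB/s")  # prints: Peak bandwidth: 1.23 TB/s
```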

One reason for that easy-going TDP, at least for a chip of this size, is that so much of the graphics-specific silicon has been ripped out to make way for more number-crunching kit. Waste not, want not.


There's not long to wait before we get to blow the lid off AMD's RDNA 2 graphics cards (only two more days!), but in the meantime the datacentre world is getting its own taste of AMD's red-tinted version of the good life.

Oh, and Nvidia happened to release an 80GB A100 today, too. That's not due to some clever tactic by either side: all of these announcements were made at SC20, or Supercomputing 2020, an HPC conference currently under way.

Jacob earned his first byline writing for his own tech blog. From there, he graduated to professionally breaking things as hardware writer at PCGamesN, and would go on to run the team as hardware editor. He joined PC Gamer's top staff as senior hardware editor before becoming managing editor of the hardware team, and you'll now find him reporting on the latest developments in the technology and gaming industries and testing the newest PC components.