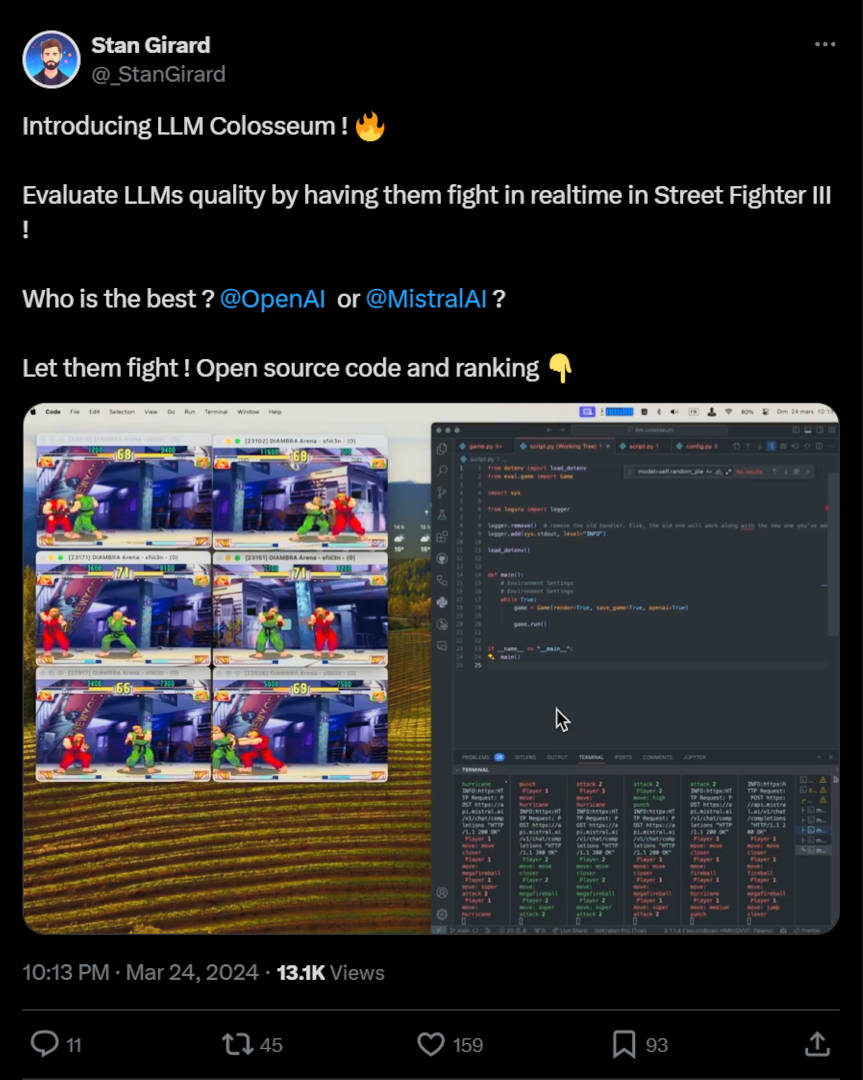

OpenAI's GPT-3.5 is the champion of the Street Fighter III LLM Colosseum, beating Mistral on its home turf

Beat 'em ups are clearly the superior way to test large language models.

You probably already know that large language models (LLMs) power chatbots and generative AI tools for Windows. You probably also know that some are better than others when it comes to getting accurate and reliable responses. But did you know that when it comes to Street Fighter III, there's one that stands above the crowd? The winner of (the first ever?) SF3 LLM Colosseum just so happens to be OpenAI's GPT-3.5.

At the Mistral AI Hackathon event in San Francisco last week, a small team of AI enthusiasts dedicated themselves to finding the ultimate truth about large language models: Which LLM is best at fighting? According to the group, LLMs are better than reinforcement learning algorithms for such cases, because rather than just reacting on the basis of an accumulated reward, LLMs are far more context-based.

Here's how it works: The LLM is given a text description of the screen, and it then decides what move its player will make based on that player's previous moves, what the opponent is doing, and the health bars of both characters. Then it's just a case of sitting back and letting two LLMs have at each other.
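To make that loop a little more concrete, here's a minimal Python sketch of the idea. It is not the project's actual code: the move list, the `FighterState` fields, the prompt wording, and the `llm` callable are all hypothetical stand-ins, and a canned function takes the place of a real model so the example runs on its own.

```python
from dataclasses import dataclass, field
from typing import Callable, List

# Hypothetical move list; the real project defines its own set of actions.
MOVES = ["move closer", "move away", "jump", "low punch", "high kick", "fireball"]


@dataclass
class FighterState:
    """Illustrative snapshot of one character (field names are assumptions)."""
    health: int
    position: int
    recent_moves: List[str] = field(default_factory=list)


def describe_state(me: FighterState, opponent: FighterState) -> str:
    """Turn the game state into the text description the LLM reads."""
    distance = abs(me.position - opponent.position)
    my_recent = ", ".join(me.recent_moves[-3:]) or "none"
    opp_recent = ", ".join(opponent.recent_moves[-3:]) or "none"
    return (
        f"Your health: {me.health}. Opponent health: {opponent.health}.\n"
        f"Distance to opponent: {distance}.\n"
        f"Your last moves: {my_recent}. Opponent's last moves: {opp_recent}.\n"
        f"Reply with one of: {', '.join(MOVES)}."
    )


def choose_move(llm: Callable[[str], str],
                me: FighterState,
                opponent: FighterState) -> str:
    """Ask the model for a move and map its free-text reply to a known move."""
    reply = llm(describe_state(me, opponent)).lower()
    for move in MOVES:
        if move in reply:
            return move
    return "move closer"  # fallback when the reply names no known move


def canned_llm(prompt: str) -> str:
    """Stand-in for a real model call; always answers the same way."""
    return "I'll go with Fireball!"


ken = FighterState(health=80, position=10, recent_moves=["jump", "low punch"])
ryu = FighterState(health=65, position=40, recent_moves=["high kick"])
print(choose_move(canned_llm, ken, ryu))
```

Swapping `canned_llm` for a function that calls a real model is the only change needed to try a different LLM, which is roughly what makes a head-to-head tournament like this easy to set up.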

Given the nature of the event, the first test runs involved pitting different versions of the Mistral LLM against each other in frantic head-to-head battles, but then the group upped the ante by bringing in OpenAI and its GPT-3.5 and GPT-4 models.

Fists were flung, combos cranked out, blocks battered, and dodges delivered. After many battles, the results were collated and one model stood proudly in the gold position: OpenAI GPT-3.5, specifically the latest Turbo version. Silver and bronze were split by the tiniest of margins, but Mistral-small-2402 just pipped a GPT-4 preview model to the post.

You can give all of this a go yourself, as the source code for the project is available on GitHub, and you don't need a supercomputer to handle it all. However, you will need a suitable game ROM file, and it'll need to be one from an old 2D beat 'em up or a 3D one that has limited environment movement.

The potential applications of this are obvious, and I wonder how long it will be before we see games where you'd swear you're playing against another person but it's actually just an LLM in action.

It all looks really cool, though I can't help but wonder if folks of a more military mind will be thinking about what else large language models can be used for. Especially given GPT-3.5's propensity for going thermonuclear in war games.

Hey, it's an AI story and I didn't mention SkyNet once! Oh, fiddlesticks.

Nick, gaming, and computers all first met in 1981, with the love affair starting on a Sinclair ZX81 in kit form and a book on ZX Basic. He ended up becoming a physics and IT teacher, but by the late 1990s decided it was time to cut his teeth writing for a long-defunct UK tech site. He went on to do the same at MadOnion, helping to write the help files for 3DMark and PCMark. After a short stint working at Beyond3D.com, Nick joined Futuremark (MadOnion rebranded) full-time, as editor-in-chief for its gaming and hardware section, YouGamers. After the site shut down, he became an engineering and computing lecturer for many years, but missed the writing bug. Cue four years at TechSpot.com and over 100 long articles on anything and everything. He freely admits to being far too obsessed with GPUs and open-world grindy RPGs, but who isn't these days?