Microsoft's VASA-1 takes AI-generated video one step closer to 'aw hell, we're all doomed'

The researchers are targeting 'positive applications' for their work, so that's alright then.

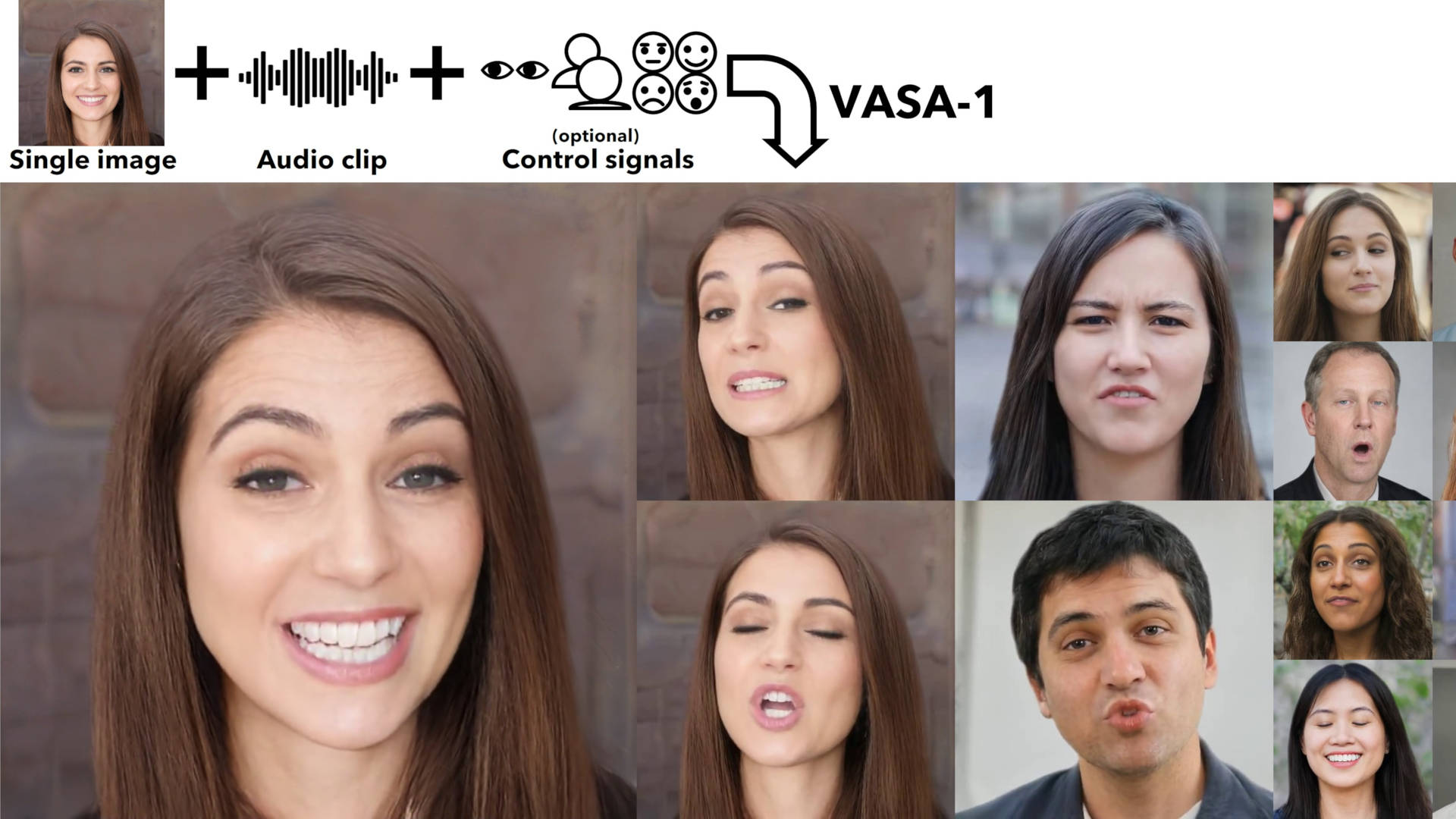

With generative AI being a key feature of all its new software and hardware projects, it should be no surprise that Microsoft has been developing its own machine learning models. VASA-1 is one such example, where a single image of a person and an audio track can be converted into a convincing video clip of said person speaking the recording.

Just a few years ago, anything created via generative AI was instantly identifiable, by several factors. With still images, it would be things like the number of fingers on a person's hand or even just something as simple as having the correct number of legs. AI-generated video was even worse, but at least it was very meme-worthy.

However, a research report from Microsoft shows that the obvious nature of generative AI is rapidly going to disappear. VASA-1 is a machine learning model that turns a single static image of a person's face into a short, realistic video, through the use of a speech audio track. The model examines the sound's changes in tone and pace and then creates a sequence of new images where the face is altered to match the speech.

I'm not doing it any justice with that description, because some of the examples posted by Microsoft are startlingly good. Others aren't so hot, though, and it's clear that the researchers selected the best examples to showcase what they've achieved. In particular, a short video demonstrating the use of the model in real-time highlights that it still has a long way to go before it becomes impossible to distinguish real reality from computer-generated reality.

But even so, the fact that this was all done on a desktop PC, albeit one using an RTX 4090, rather than a massive supercomputer shows that with access to such software, pretty much anyone could use generative AI to create a flawless deepfake. The researchers acknowledge this in the research report.

"It is not intended to create content that is used to mislead or deceive. However, like other related content generation techniques, it could still potentially be misused for impersonating humans. We are opposed to any behavior to create misleading or harmful contents of real persons, and are interested in applying our technique for advancing forgery detection."

This is probably why Microsoft's research remains behind closed doors right now. That said, I can't imagine it will be long before someone manages to not only replicate the work but improve it, and potentially use it for some nefarious purpose. On the other hand, if VASA-1 can be used to detect deepfakes and it could be implemented in the form of a simple desktop application, then this would be a big step forward—or rather, a step away from a world where AI dooms us all. Yay!

Keep up to date with the most important stories and the best deals, as picked by the PC Gamer team.

Best gaming PC: The top pre-built machines.

Best gaming laptop: Great devices for mobile gaming.

Nick, gaming, and computers all first met in 1981, with the love affair starting on a Sinclair ZX81 in kit form and a book on ZX Basic. He ended up becoming a physics and IT teacher, but by the late 1990s decided it was time to cut his teeth writing for a long defunct UK tech site. He went on to do the same at Madonion, helping to write the help files for 3DMark and PCMark. After a short stint working at Beyond3D.com, Nick joined Futuremark (MadOnion rebranded) full-time, as editor-in-chief for its gaming and hardware section, YouGamers. After the site shutdown, he became an engineering and computing lecturer for many years, but missed the writing bug. Cue four years at TechSpot.com and over 100 long articles on anything and everything. He freely admits to being far too obsessed with GPUs and open world grindy RPGs, but who isn't these days?