Our Verdict

The GeForce RTX 2080 is plenty fast and has untapped potential, but it's an expensive card that's ahead of its time.

For

- Second-fastest GPU available

- Ray tracing and deep learning

- New and improved design

Against

- No current ray tracing games

- Barely faster than 1080 Ti

- More expensive than previous gen

The future of graphics cards and gaming is about to experience the next revolution, or at least that's the marketing behind the new Nvidia GeForce RTX 2080 and other Turing-based cards. When Nvidia teamed up with Epic to demonstrate real-time ray tracing at GDC earlier this year, it turned out the demo was running at a 'cinematic' 24fps on a $69,000 DGX Station with four Tesla V100 GPUs. It felt like a lot of hoopla over something more beneficial to Hollywood than to PC gamers. Six months later, we're looking at single graphics cards that can apparently outperform the DGX Station in ray tracing applications, at a comparatively amazing price of just $799. Great news, at least for enthusiast gamers with deep pockets.

The technology crammed into the Nvidia Turing architecture is certainly impressive. The CUDA cores have been enhanced in a variety of ways, promising up to 50 percent higher efficiency per core than on Pascal, and there are more of them to go around. Next, add faster GDDR6 memory, improved caching, and memory subsystem tweaks that together provide up to 75 percent more effective memory bandwidth. Then, for good measure, toss in RT cores to accelerate ray tracing and Tensor cores for deep learning applications.

I've already talked about those areas in depth in separate articles, so the focus today is on the actual real-world performance of the GeForce RTX 2080 Founders Edition. Nvidia has made quite a few design changes with the RTX Founders Editions. Gone are the old blowers, replaced by dual axial fans, dual vapor chambers, and a thick metal backplate. I like the metal backplate, even if it's unnecessary, because it makes the card look cleaner and protects the PCB and components from getting scratched or damaged by careless handling.

The GeForce RTX 2080 Founders Edition runs quieter than the 1080 Founders Edition, though the backplate does get quite hot to the touch. That's by design and temperatures stay in the acceptable range, but fans of small form factor cases might prefer the old blower designs. The GeForce RTX 2080 Founders Edition also comes with a 90MHz factory overclock, with further manual overclocking an option. It's good to see Nvidia's premium branded Founders Edition finally matching the typical factory overclocks on AIB partner cards, since it costs up to $100 more than the baseline cards (which we likely won't see for a couple of months, if history repeats itself).

Architecture: Turing TU104

Lithography: TSMC 12nm FinFET

Transistor Count: 13.6 billion

Die Size: 545mm²

Streaming Multiprocessors: 46

CUDA Cores: 2,944

Tensor Cores: 368

RT Cores: 46

Render Outputs: 64

Texture Units: 184

GDDR6 Capacity: 8GB

Bus Width: 256-bit

Base Clock: 1515MHz

Boost Clock: 1800MHz

Memory Speed: 14 GT/s

Memory Bandwidth: 448GB/s

TDP: 225W
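Several entries in the spec list above follow from a few base numbers. The sketch below re-derives the unit counts from the 46 SMs and the bandwidth from the bus width and data rate. It assumes Turing's published per-SM layout (64 CUDA cores, 8 Tensor cores, 1 RT core, 4 texture units), which this review doesn't state.

```python
# Sketch: re-derive several RTX 2080 spec-sheet numbers from the SM count,
# bus width, and memory data rate listed above.
# ASSUMPTION: the per-SM unit counts below are Turing's published SM layout;
# they are not stated in this review.

SM_COUNT = 46
BUS_WIDTH_BITS = 256
DATA_RATE_GTS = 14.0  # GDDR6 data rate, billions of transfers per second

UNITS_PER_SM = {
    "CUDA cores": 64,
    "Tensor cores": 8,
    "RT cores": 1,
    "Texture units": 4,
}


def unit_totals(sm_count: int) -> dict:
    """Multiply each per-SM unit count by the number of enabled SMs."""
    return {name: per_sm * sm_count for name, per_sm in UNITS_PER_SM.items()}


def memory_bandwidth_gbs(bus_width_bits: int, data_rate_gts: float) -> float:
    """Peak bandwidth in GB/s: bytes per transfer across the bus times transfer rate."""
    return bus_width_bits / 8 * data_rate_gts


if __name__ == "__main__":
    for name, total in unit_totals(SM_COUNT).items():
        print(f"{name}: {total}")
    print(f"Memory bandwidth: {memory_bandwidth_gbs(BUS_WIDTH_BITS, DATA_RATE_GTS):.0f} GB/s")
```

The results (2,944 CUDA cores, 368 Tensor cores, 46 RT cores, 184 texture units, 448GB/s) line up with the listed specs.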

Fans of virtual reality headsets also have something to look forward to, and not just because of the Turing architecture updates that can further improve multi-view rendering. The GeForce RTX 2080 (and every other RTX card I've seen so far) includes the new VirtualLink output, which provides up to 35W power, two USB 3.1 Gen1 data connections, and HBR3 video (up to 8k60) over a single cable. The catch is that we'll need new headsets that support the standard, but once those arrive, it should significantly reduce cable clutter for VR.

When the VirtualLink connector is in use, the GeForce RTX 2080 can draw up to 35W more power, but that's separate from the normal GPU TDP. Performance shouldn't drop as a result, and the Founders Edition includes 8-pin (150W) plus 6-pin (75W) PCIe power connections, so there's ample power on tap (up to 300W, including the 75W from the x16 PCIe slot).
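The power budget above is simple addition, but it helps to see the margin. This sketch uses only figures from the review (the connector ratings, the 225W TDP, and the 35W VirtualLink draw) and treats the connector ratings as hard limits, which is a simplification.

```python
# Sketch of the board power arithmetic above. Connector limits are the
# standard PCIe ratings quoted in the review; the 225W TDP and 35W
# VirtualLink figures come from the review as well.

PCIE_SLOT_W = 75    # x16 slot
SIX_PIN_W = 75      # 6-pin PCIe auxiliary connector
EIGHT_PIN_W = 150   # 8-pin PCIe auxiliary connector


def rated_supply_w() -> int:
    """Total power the slot plus both auxiliary connectors are rated to deliver."""
    return PCIE_SLOT_W + SIX_PIN_W + EIGHT_PIN_W


def headroom_w(tdp_w: int, virtuallink_w: int) -> int:
    """Rated supply left over when the GPU and a VirtualLink headset both draw their maximum."""
    return rated_supply_w() - (tdp_w + virtuallink_w)


if __name__ == "__main__":
    print(f"Rated supply: {rated_supply_w()}W")               # 300W
    print(f"Headroom at full load: {headroom_w(225, 35)}W")   # 40W
```

Even with a headset drawing its full 35W, the card stays roughly 40W under what its connectors are rated to supply.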

All the architectural and physical improvements make the RTX 2080 Founders Edition a recipe for success. What could possibly go wrong? There are several concerns, unfortunately. First, games will need to be coded to use ray tracing and deep learning enhancements. Those are coming, with 11 currently announced games featuring some form of ray tracing, and 25 games that use Nvidia's new DLSS. But at launch, none of those are available, and we haven't had sufficient time to analyze the DLSS demonstrations. But the bigger problems are pricing and performance.

Over the past several generations of GPU architectures, Nvidia's x80 cards have been the initial halo product, followed in most cases by a later x80 Ti variant. The GTX 480 and GTX 580 (Fermi GF100 / GF110) launched at $499 in 2010. Then the GTX 680 (Kepler GK104) in 2012 also stuck with a $500 price point, but the GTX 780 (Kepler GK110) moved pricing north to $649. The 700-series saw the return of the Ti branding, with the GTX 780 Ti in late 2013 unveiled at $699, pushing the old 780 back to the $499 target. The Kepler era also saw the creation of the first Titan cards, with a new $999 target.

The 900-series cards followed a similar pattern to the 700-series, with a few minor changes. GTX 980 (Maxwell GM204) came out in 2014, at a price of $549. Nine months later the GTX 980 Ti (GM200) was revealed, at 'just' $649, a downsized version of the $999 GTX Titan X. Prices crept north again on the 10-series parts, with GTX 1080 launching at $699 for the Founders Edition, and custom cards with a starting price of $599 available a couple of months later. Then the GTX 1080 Ti took over the $699 spot and pushed the 1080 down to the current $499/$549 MSRP.

That's a lengthy discussion of past pricing, but it's important for context because the GeForce RTX 2080 Founders Edition ushers in higher pricing than we've ever seen on a consumer-centric part. $799 is $100 more than the GTX 1080 FE launch price, $250 more than the current $549 1080 FE MSRP, and a whopping $330 more than the current lowest price on a GTX 1080 custom card. It's also $100 more than the current 1080 Ti MSRP and $150 more than the lowest-priced GTX 1080 Ti. Ideally, we would want at least a 50 percent boost to performance relative to the GTX 1080, and 15-20 percent better performance than the GTX 1080 Ti. Cue the drumroll…
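Turning those dollar gaps into percentages makes the premium easier to compare with the performance results. In this sketch the two "cheapest" prices are inferred from the $330 and $150 differences the review gives; they were street prices at the time of writing, not fixed MSRPs.

```python
# Sketch: the RTX 2080 FE price premium over the reference prices quoted above.
# The two "cheapest" prices are inferred from the dollar gaps the review gives
# ($330 and $150 below $799).

RTX_2080_FE_PRICE = 799

REFERENCE_PRICES = {
    "GTX 1080 FE launch price": 699,
    "GTX 1080 FE current MSRP": 549,
    "Cheapest GTX 1080 custom card": 799 - 330,
    "GTX 1080 Ti MSRP": 699,
    "Cheapest GTX 1080 Ti": 799 - 150,
}


def premium(new_price: float, old_price: float) -> tuple:
    """Return (dollar difference, percent increase) of new_price over old_price."""
    diff = new_price - old_price
    return diff, 100 * diff / old_price


if __name__ == "__main__":
    for label, price in REFERENCE_PRICES.items():
        diff, pct = premium(RTX_2080_FE_PRICE, price)
        print(f"{label} (${price}): +${diff}, +{pct:.1f}%")
```

On these inputs the premium is about 45.5 percent over the current GTX 1080 FE MSRP and about 70 percent over the cheapest GTX 1080 custom card.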

GeForce RTX 2080 Founders Edition performance

For the launch of the GeForce RTX 2080 and 2080 Ti, I've gone back and retested every card you see in the charts. All testing was done using the testbed equipment listed in the accompanying boxout. The key point is that the Core i7-8700K is overclocked to 5.0GHz, pushing the already fastest gaming CPU even further in an attempt to minimize CPU bottlenecks on the graphics cards. All existing GPUs were tested with Nvidia's 399.07 and AMD's 18.8.2 drivers (the latest available in late August and early September), while the GeForce RTX 2080 and RTX 2080 Ti were tested with the newer 411.51 drivers released last Friday.

All the recent graphics cards are 'reference' models where possible. That does present a small disparity as the GeForce RTX 2080 and 2080 Ti Founders Editions are factory overclocked by around six percent. Similar overclocks are possible and available on virtually every GPU, so keep that in mind when looking at the charts.
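To put a number on that factory overclock, the sketch below backs out the reference boost clock from the Founders Edition's 1800MHz boost and its 90MHz factory overclock. It assumes the overclock applies to the boost clock.

```python
# Sketch: back out the reference boost clock from the FE boost clock and its
# 90MHz factory overclock, then express the overclock as a percentage.
# ASSUMPTION: the 90MHz overclock is applied to the boost clock.

FE_BOOST_MHZ = 1800
FACTORY_OC_MHZ = 90


def overclock_percent(fe_boost_mhz: float, oc_mhz: float) -> float:
    """Percent increase of the FE boost clock over the implied reference boost clock."""
    reference_boost = fe_boost_mhz - oc_mhz
    return 100 * oc_mhz / reference_boost


if __name__ == "__main__":
    print(f"Implied reference boost: {FE_BOOST_MHZ - FACTORY_OC_MHZ}MHz")
    print(f"Factory overclock: {overclock_percent(FE_BOOST_MHZ, FACTORY_OC_MHZ):.1f}%")
```

For the RTX 2080 this gives a 1710MHz reference boost and an overclock of about 5.3 percent. The same formula works for the 2080 Ti FE once you plug in its own clocks.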

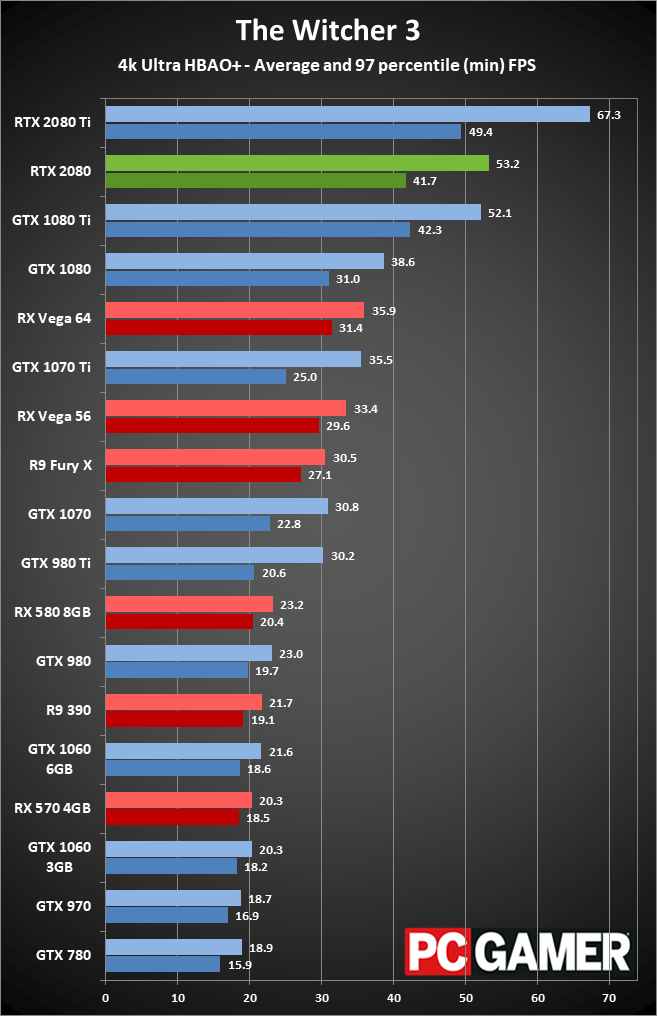

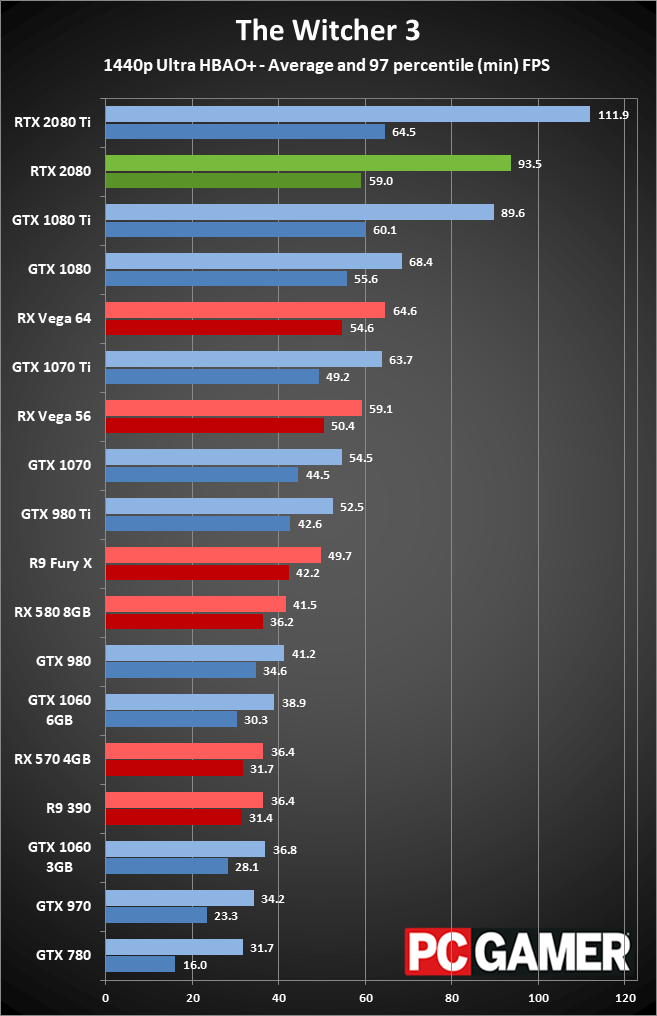

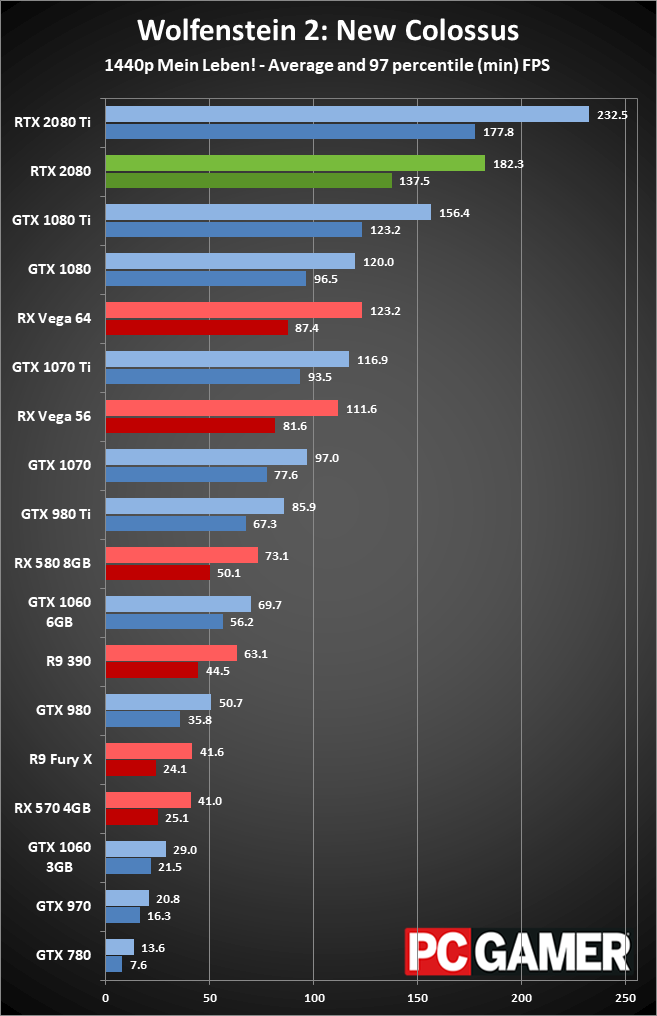

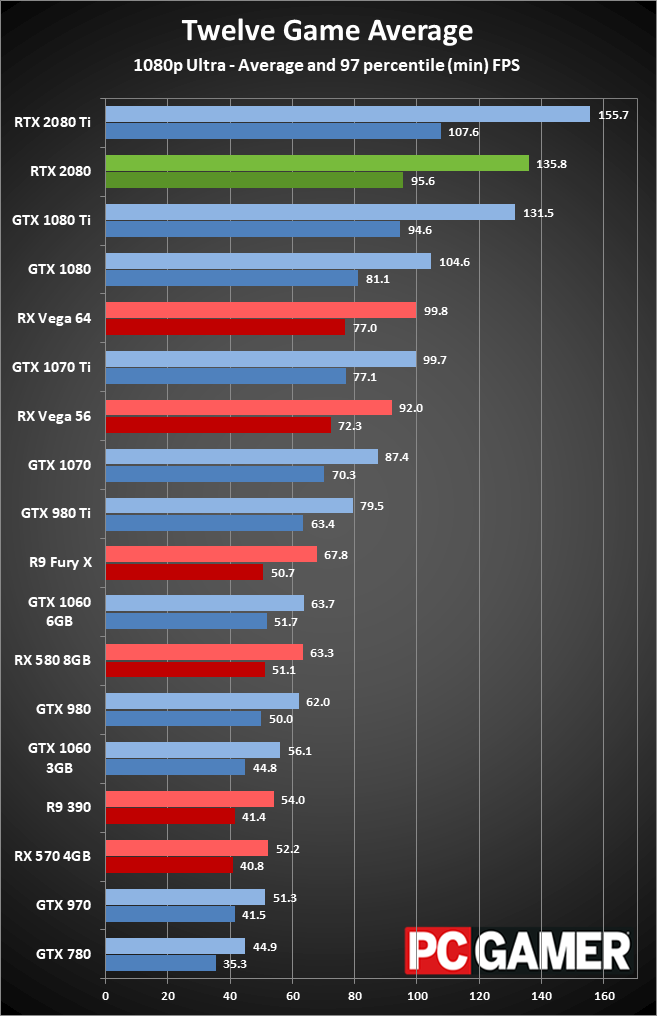

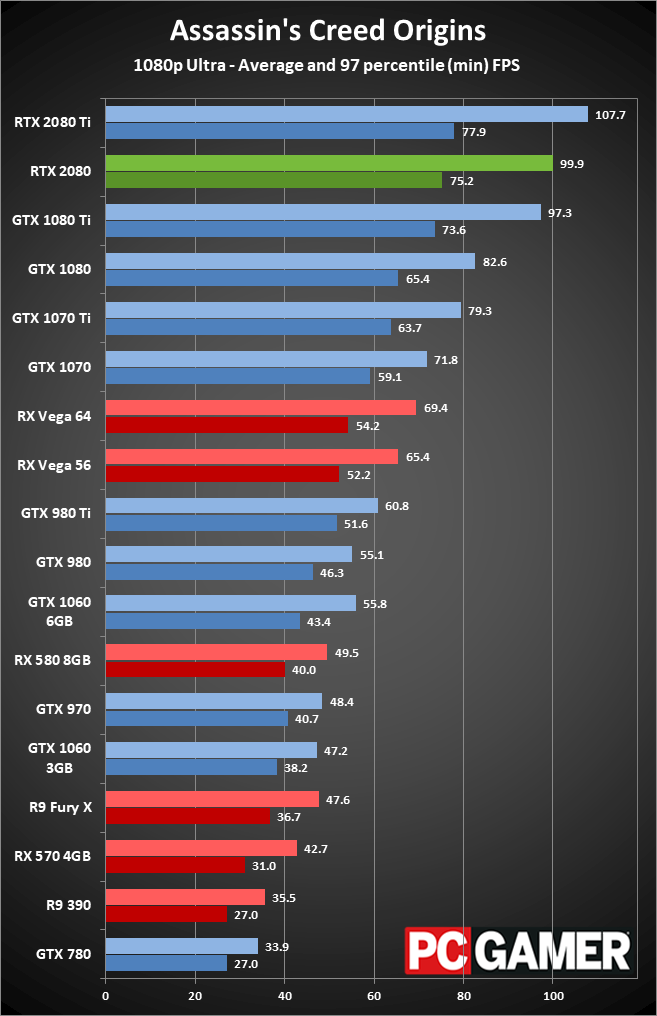

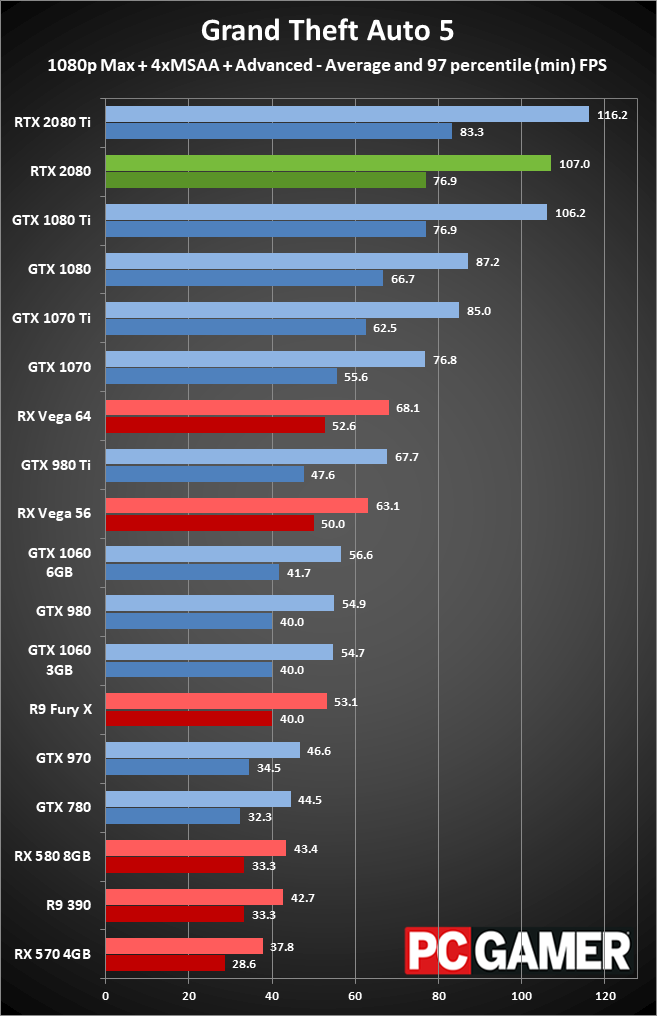

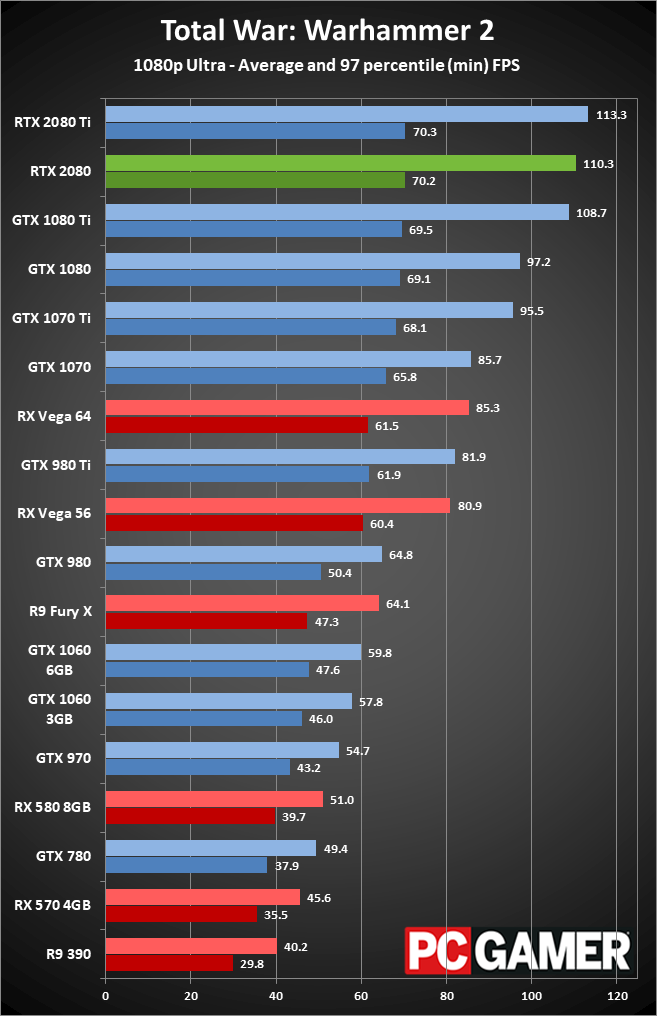

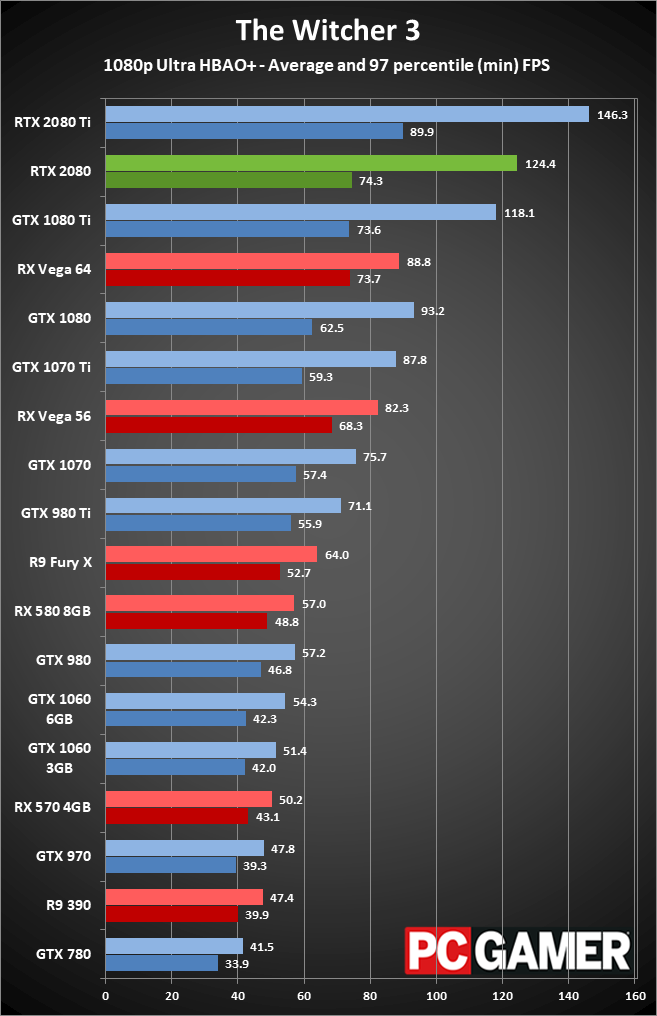

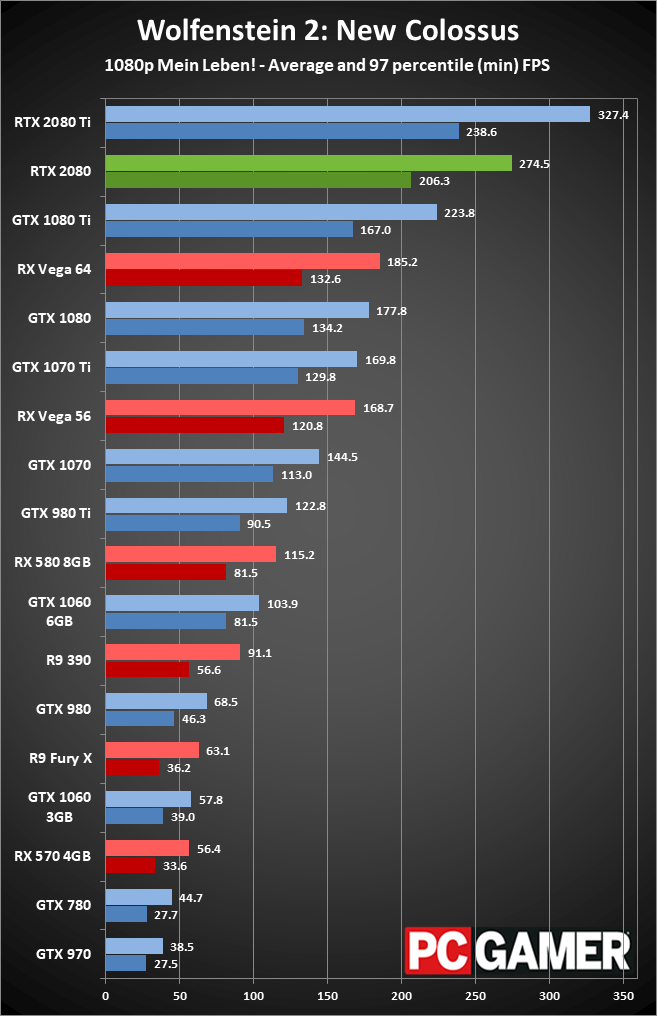

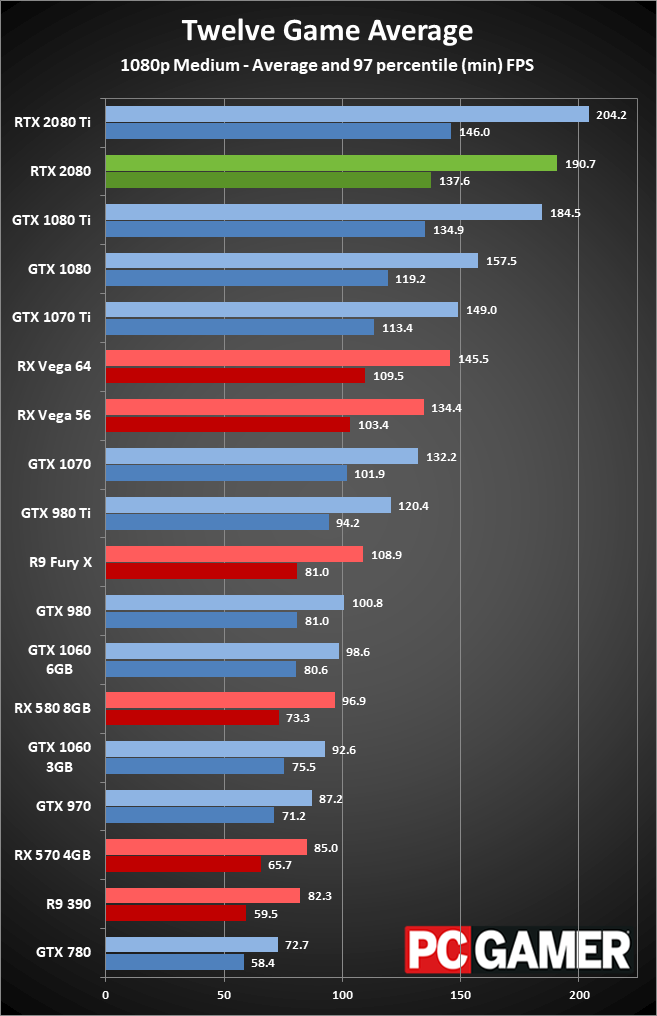

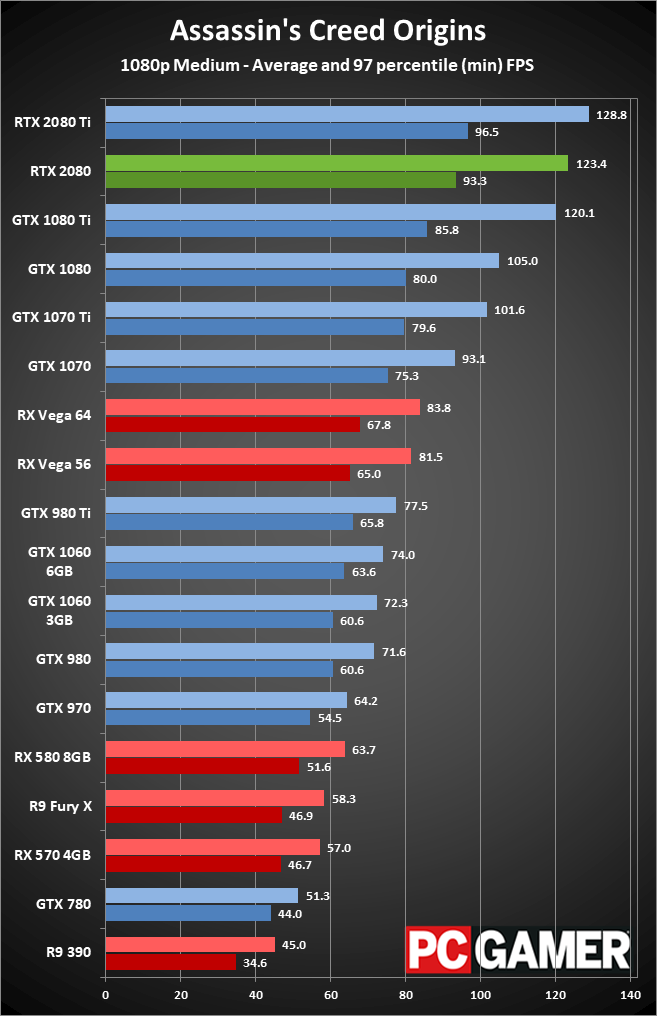

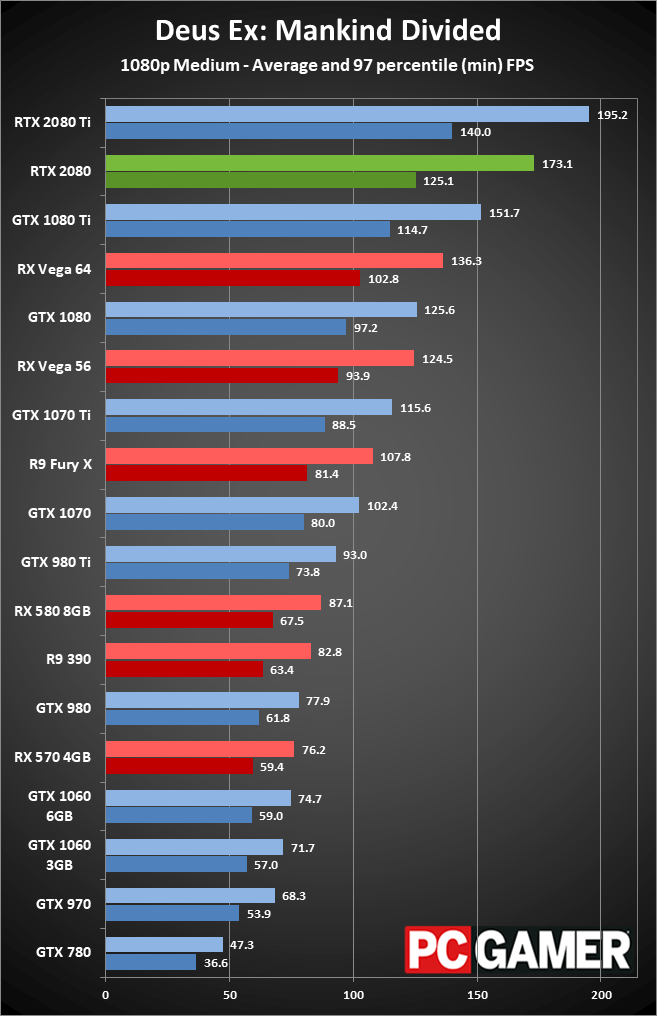

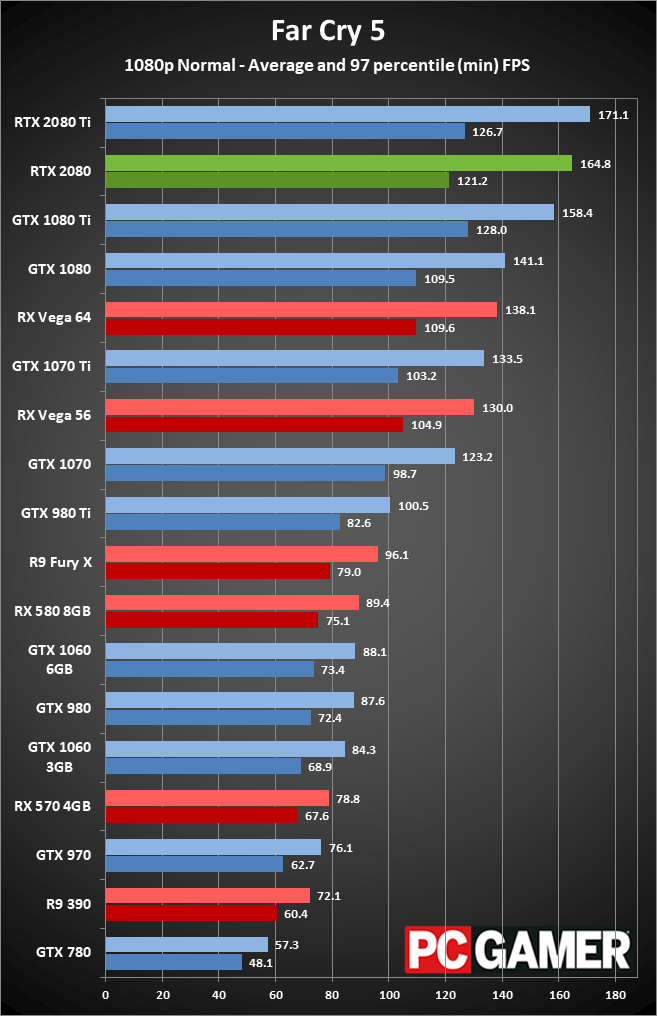

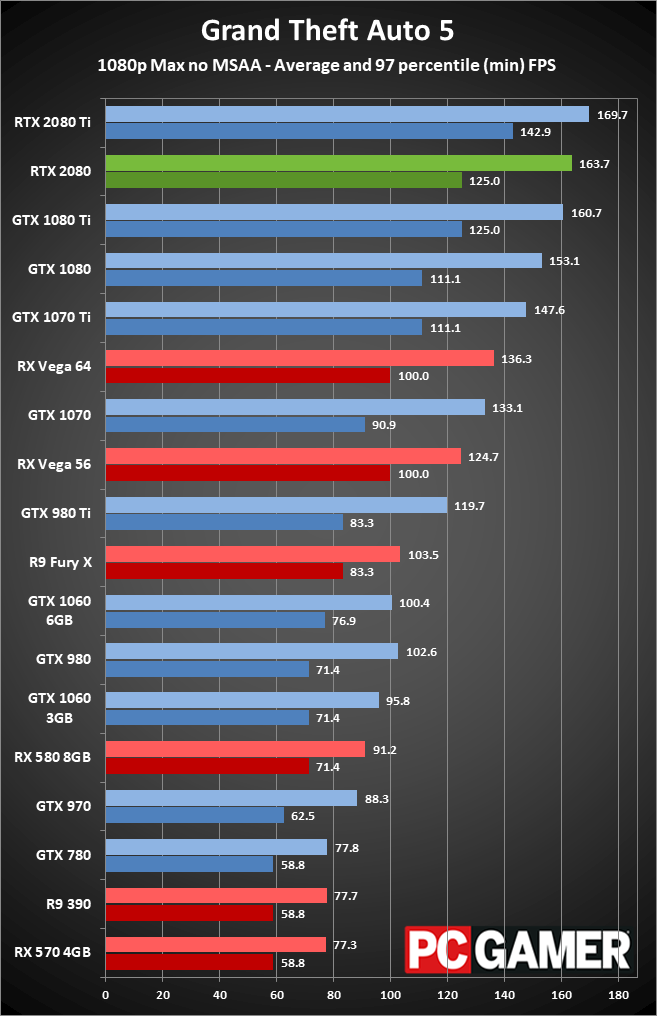

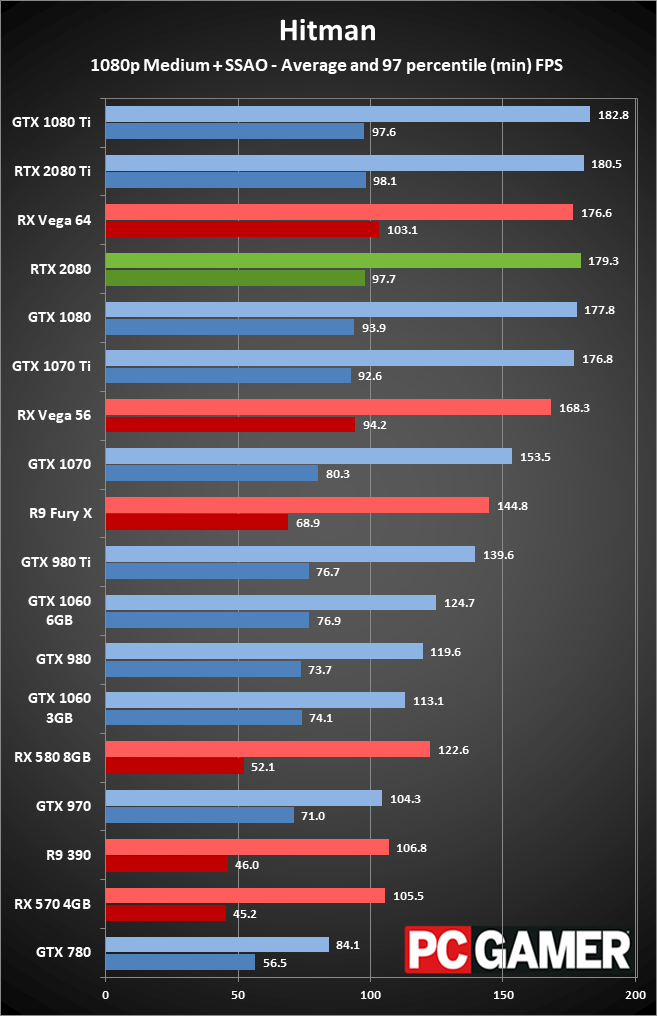

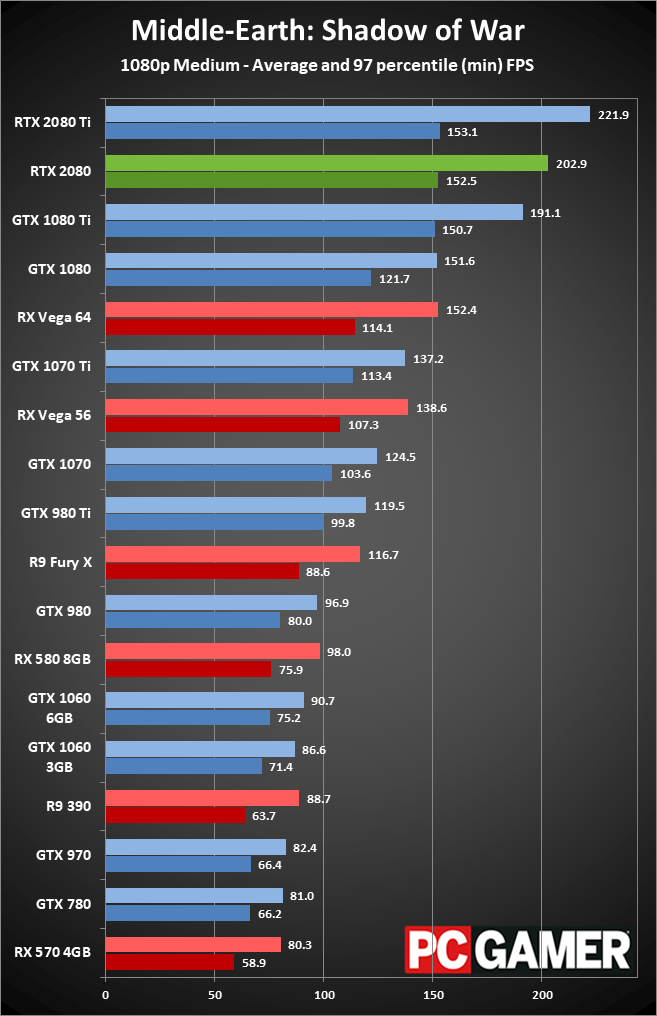

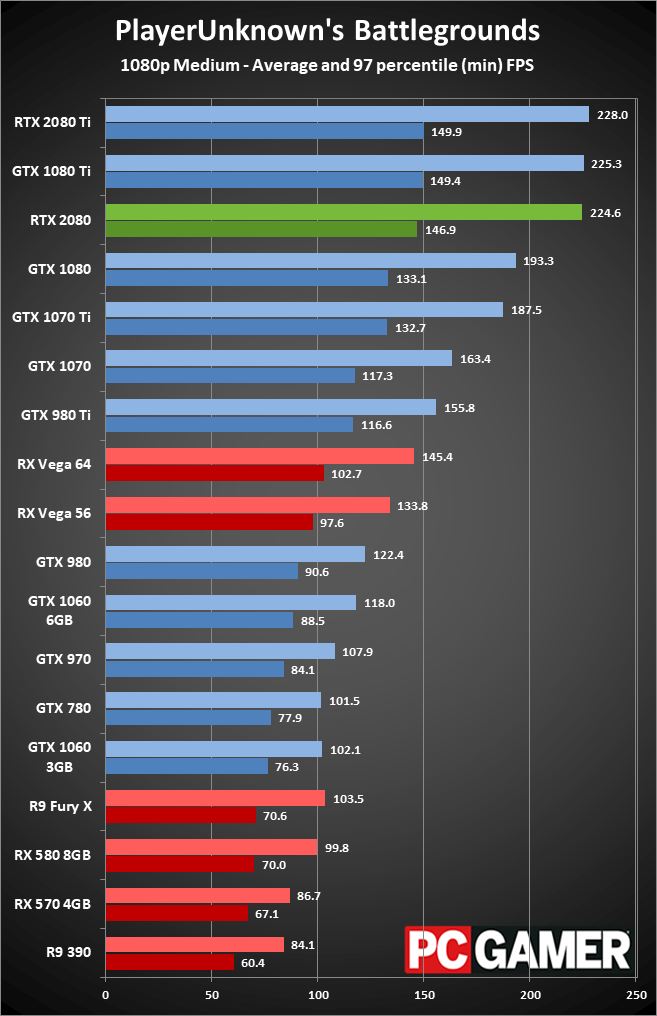

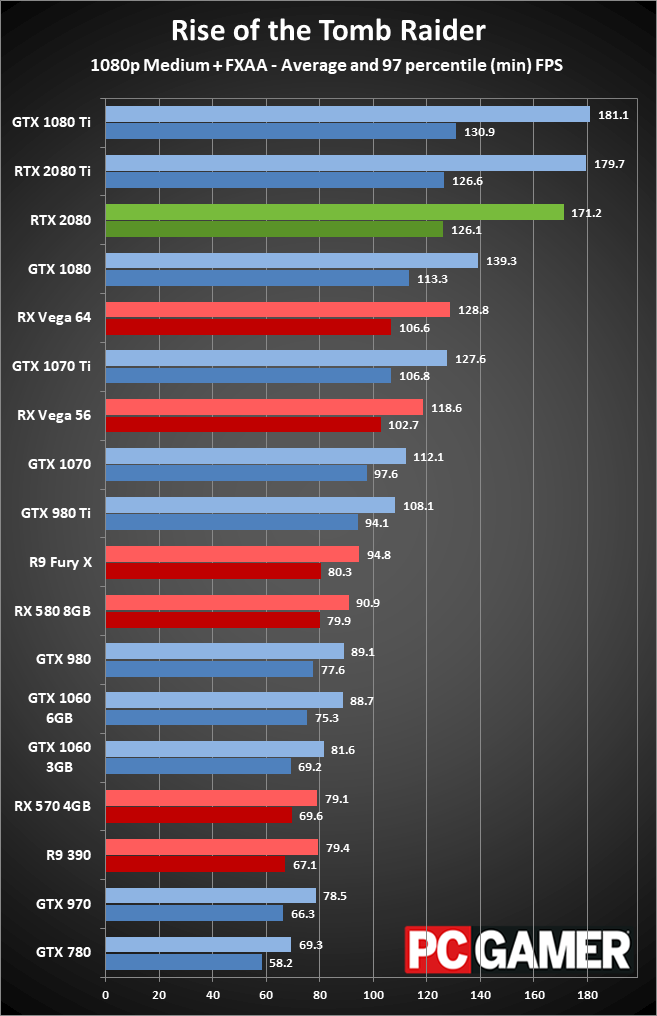

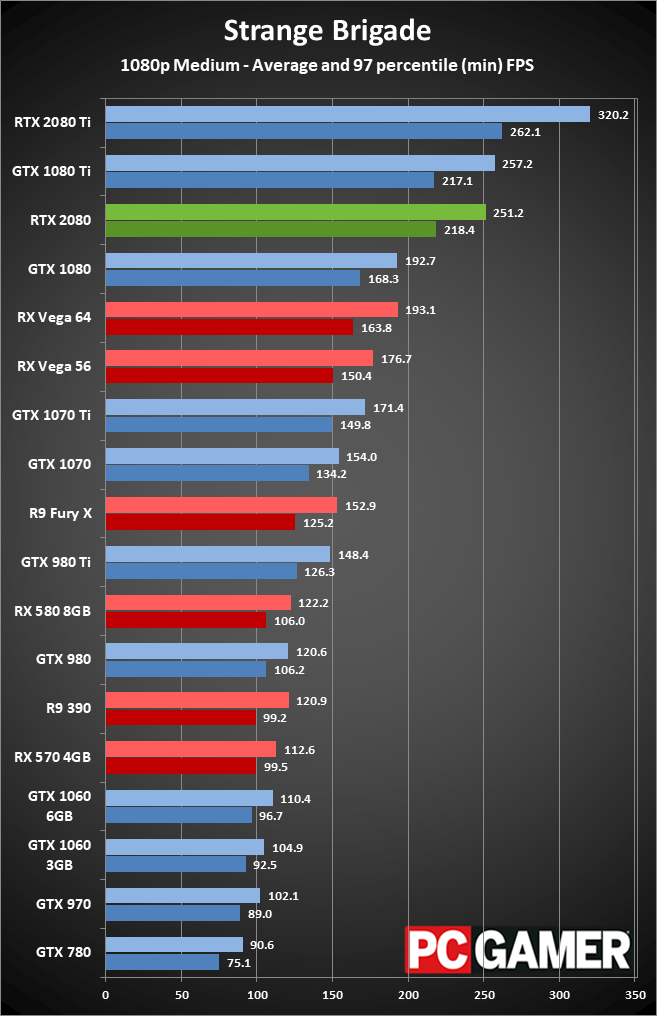

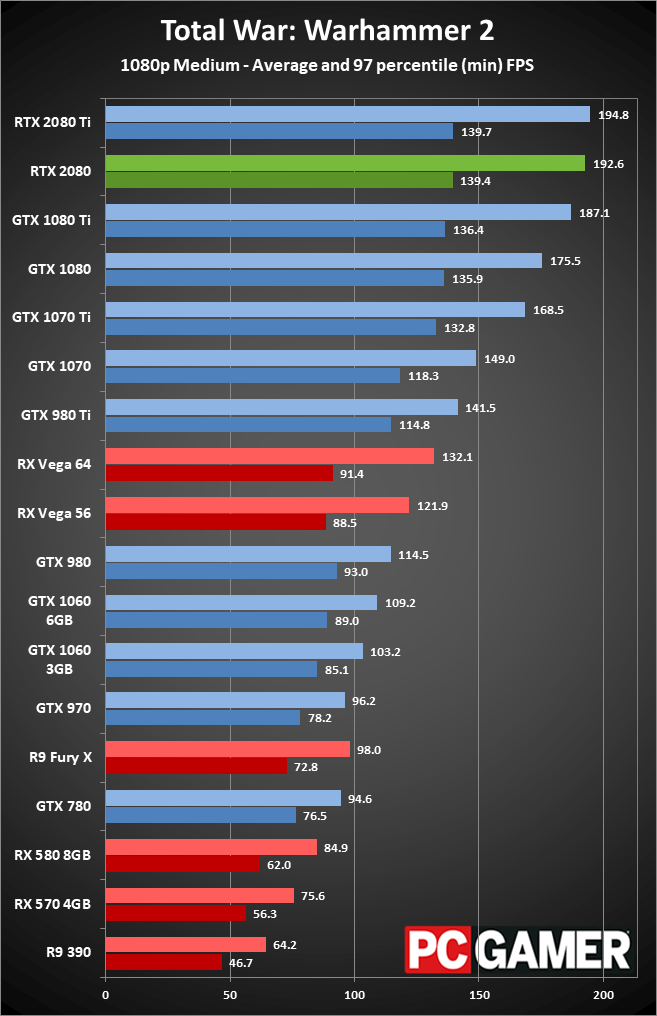

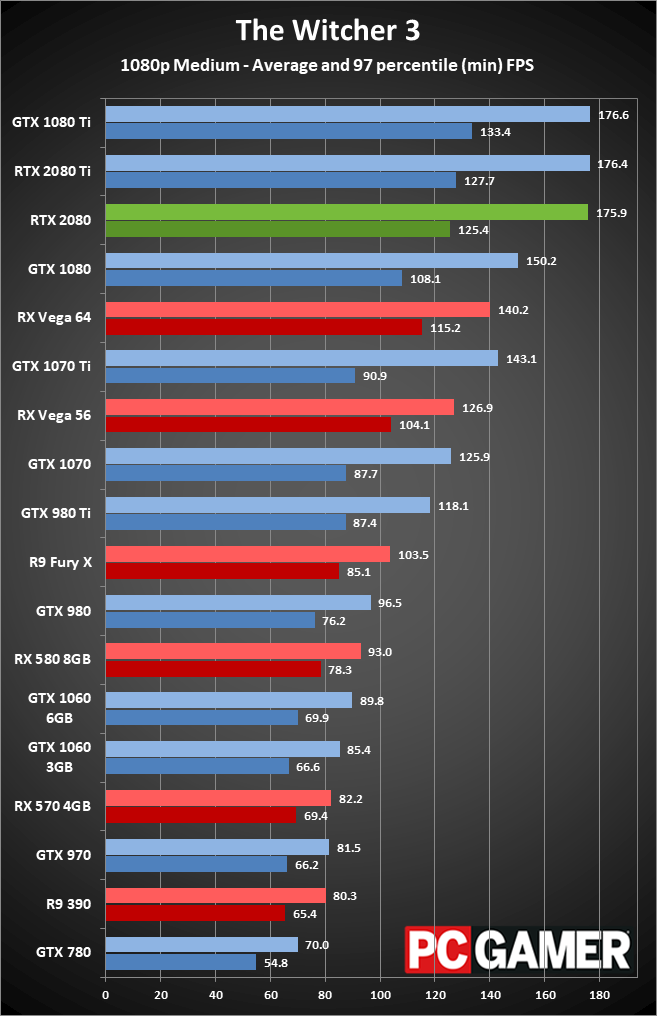

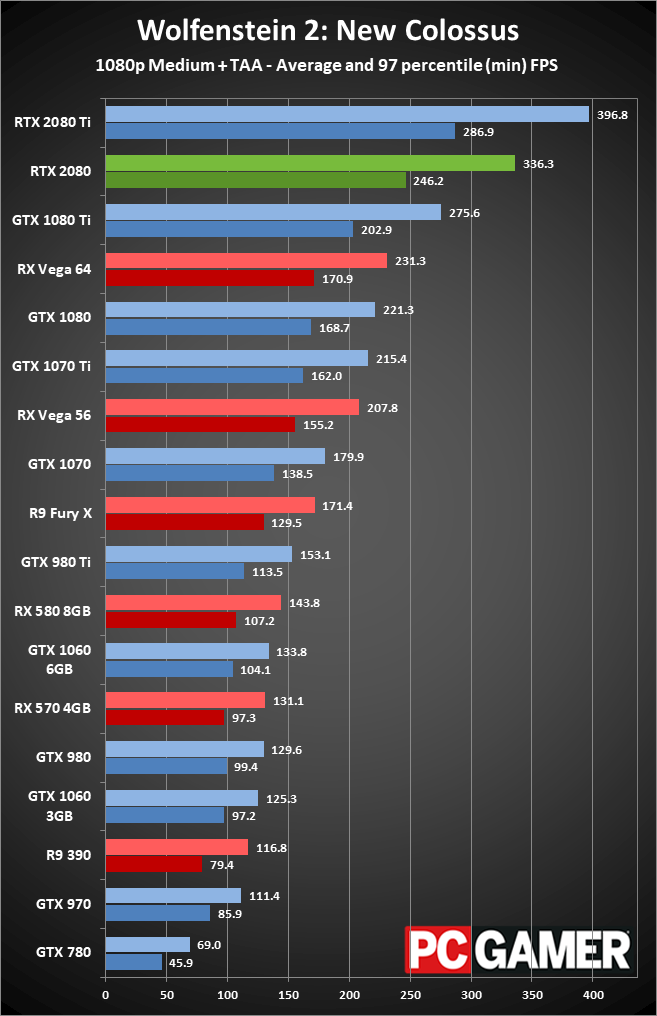

I've also gone with 'maxed out' settings in twelve popular games with this round of testing. That includes all the extras under GTA5's advanced graphics menu, HBAO+ in The Witcher 3, and so on (but not super-sampling AA). Some games punish older generation cards with less than 6GB (or in some cases, even 8GB) of VRAM at these settings, which can make cards like the GTX 980 look far worse than they are in practice, but if you're looking at extreme / enthusiast cards like the GeForce RTX 2080 Founders Edition, running at maximum quality seems a given. I did test at 1080p medium quality as well, mostly as a point of reference—CPU bottlenecks become a real limitation at that point.

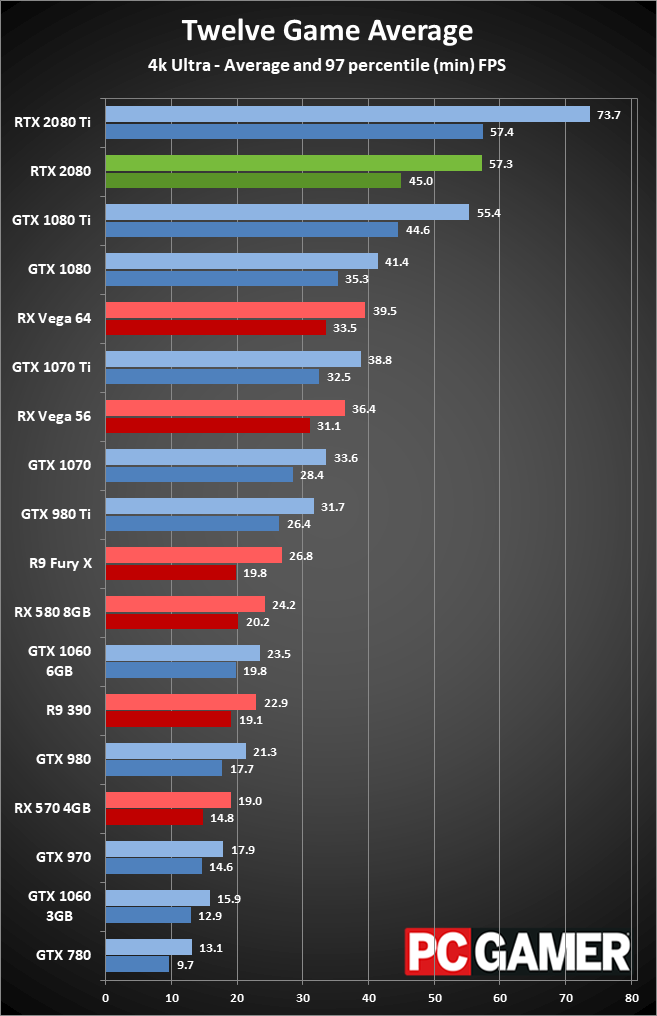

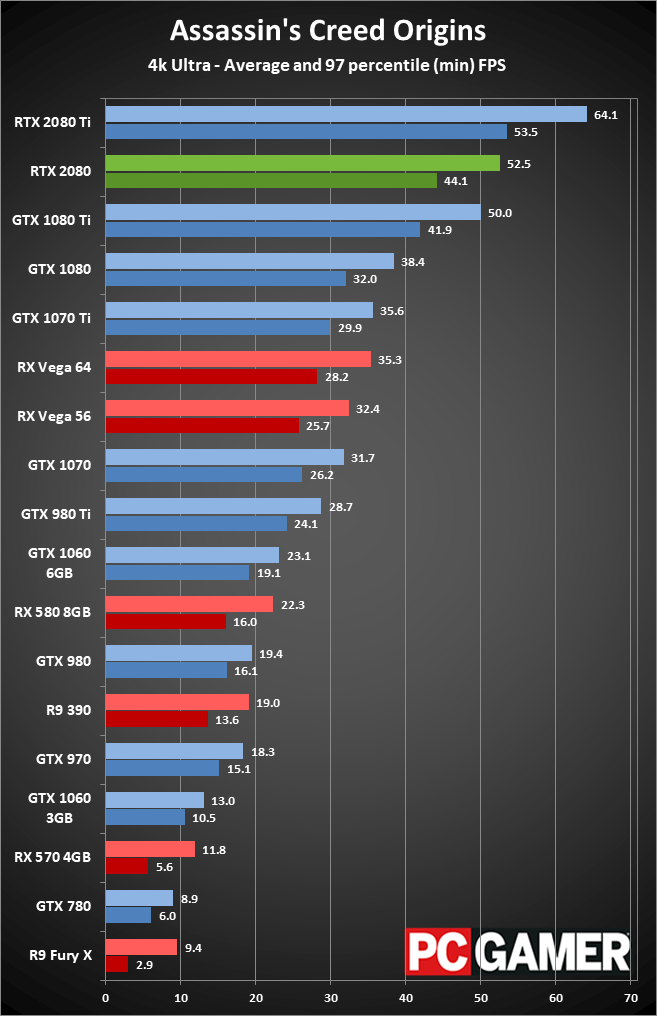

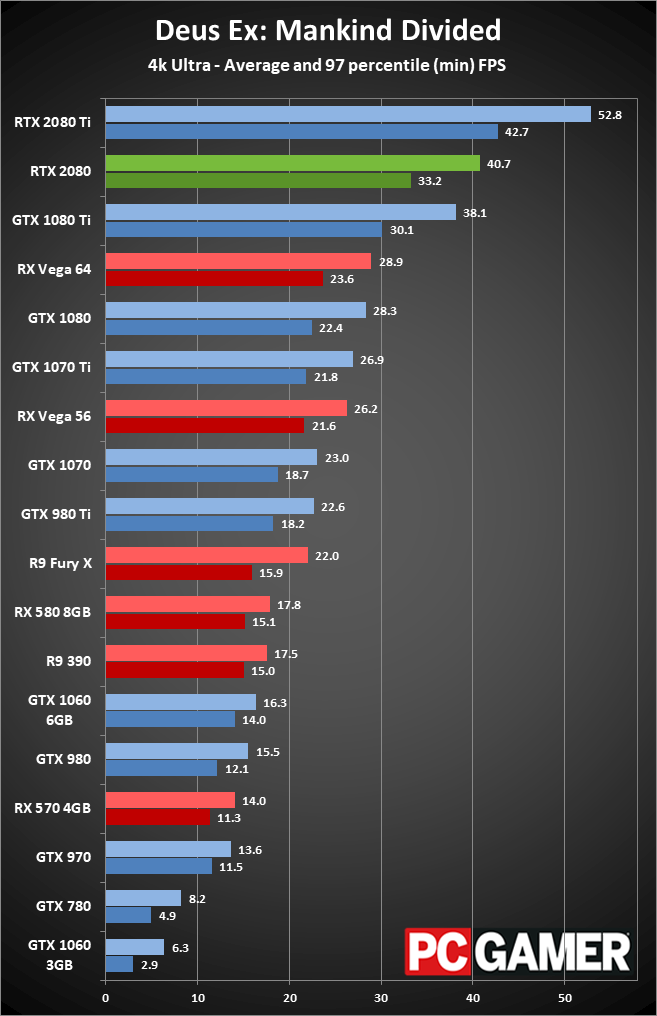

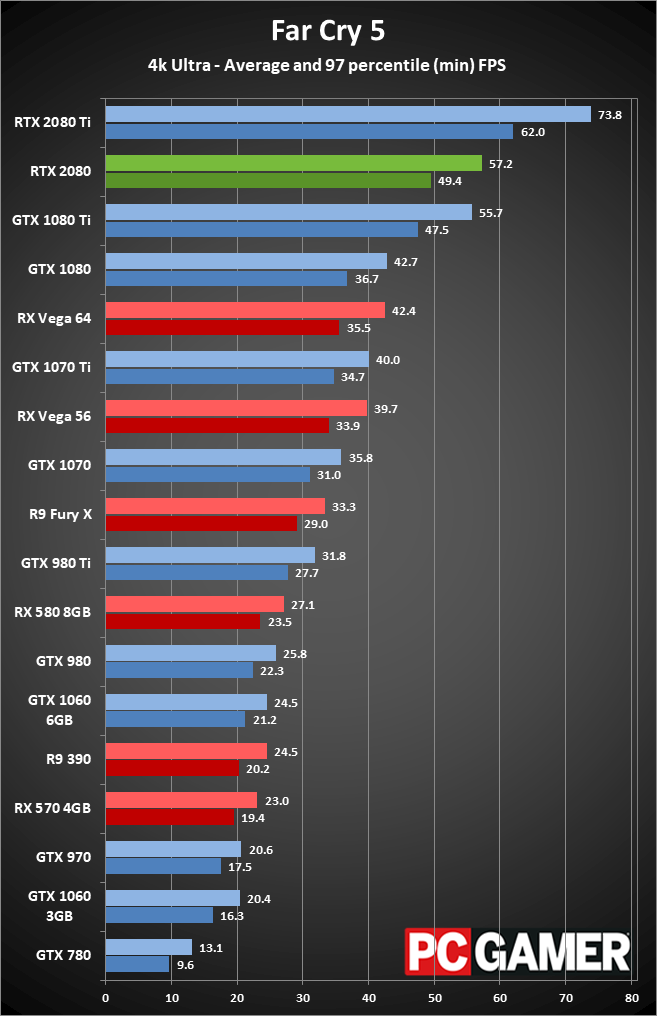

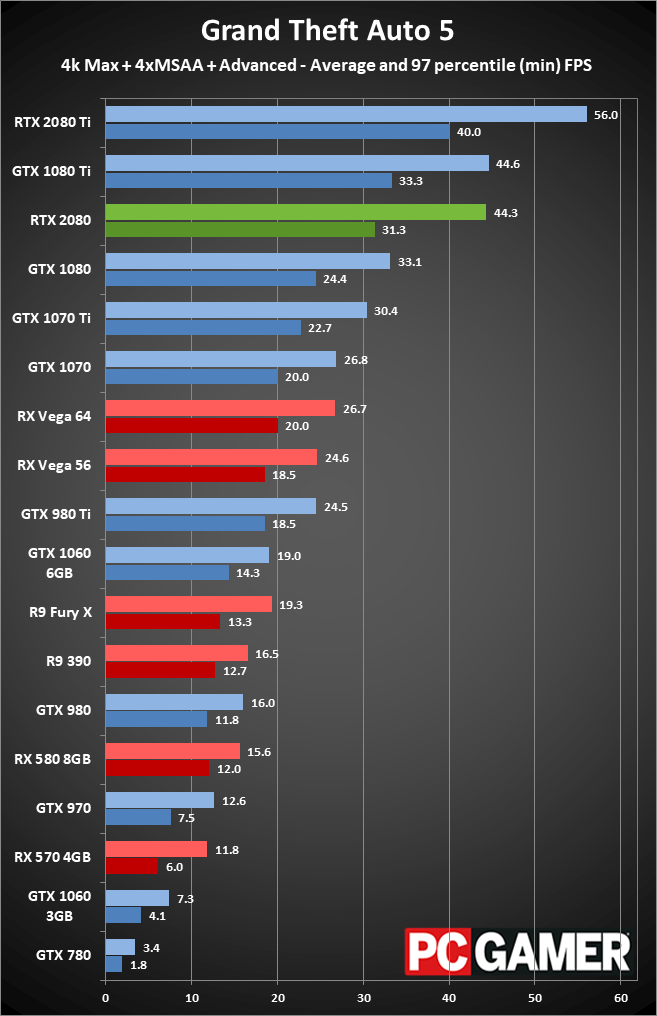

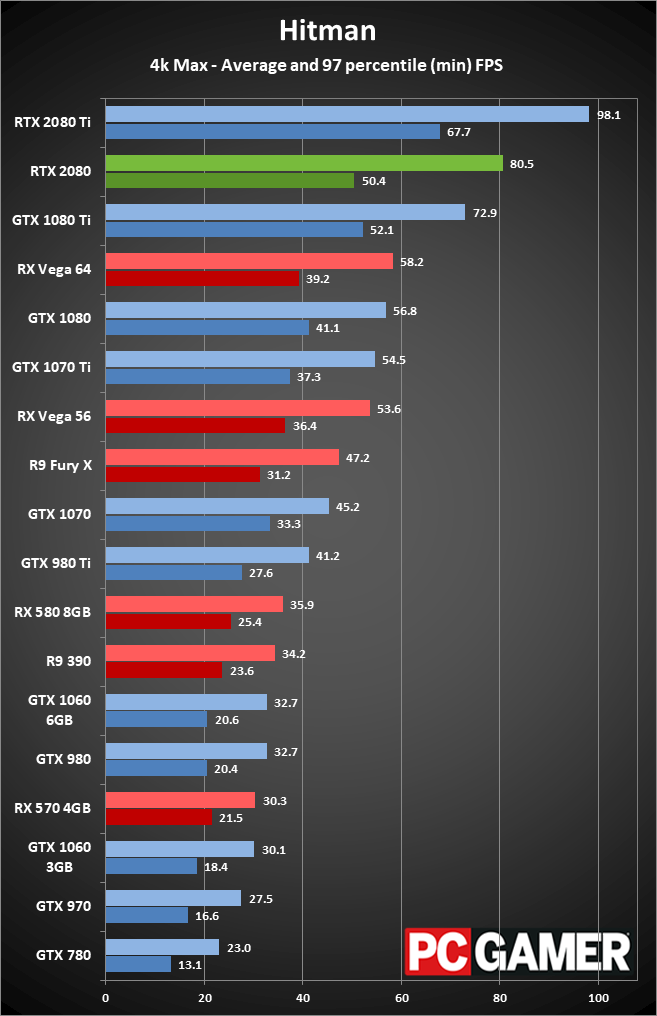

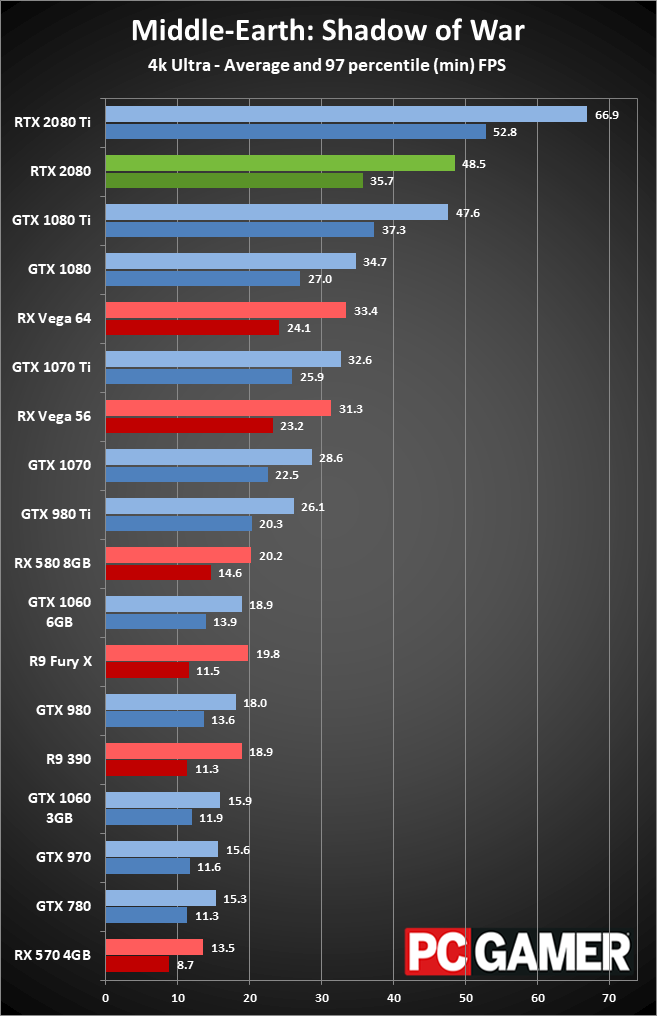

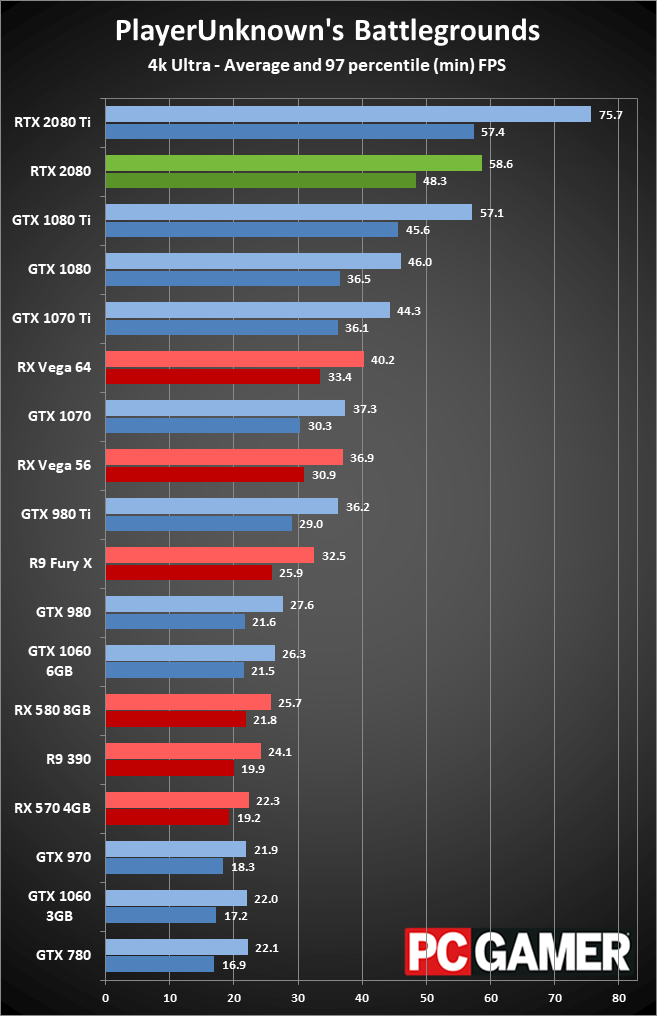

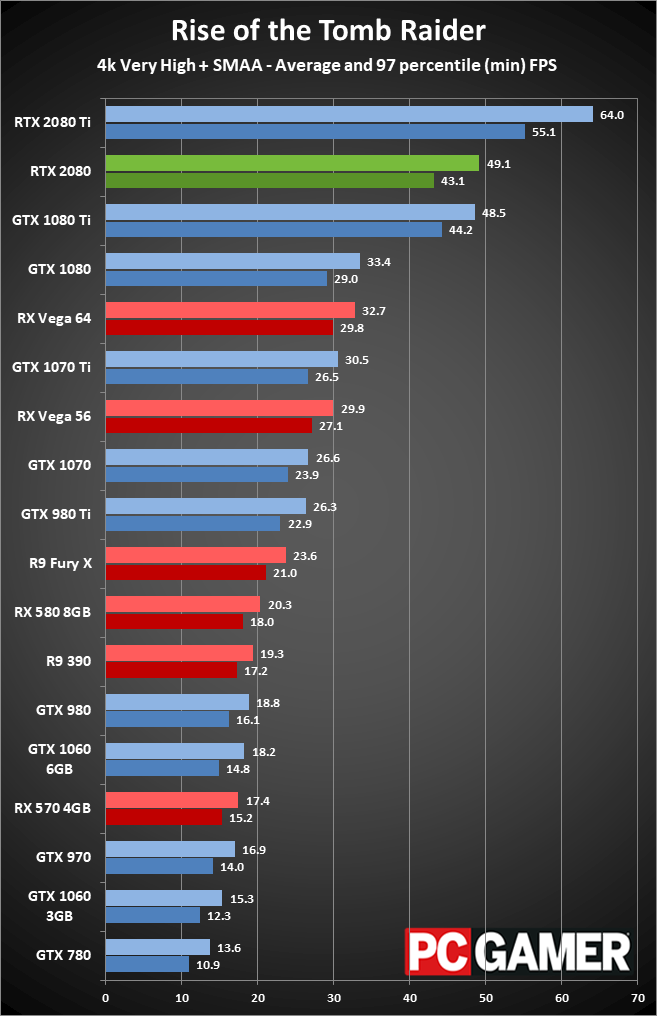

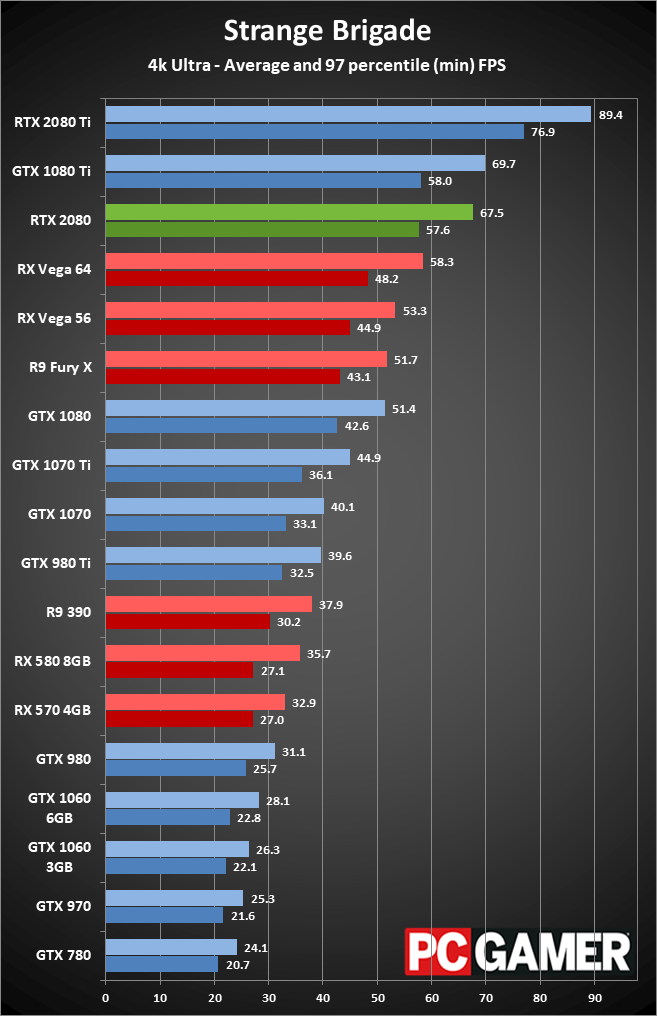

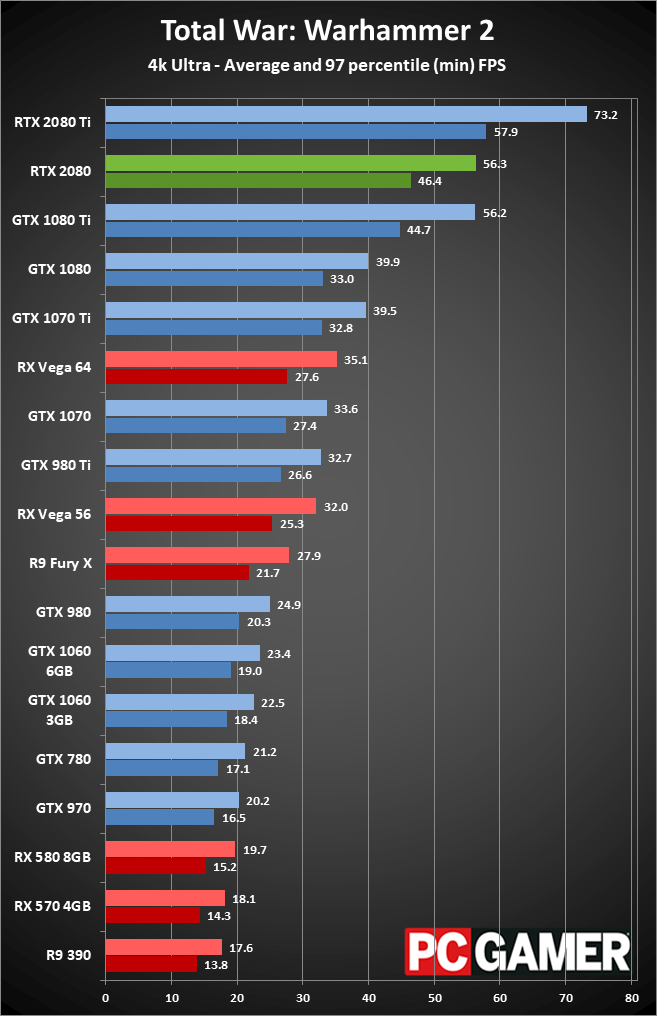

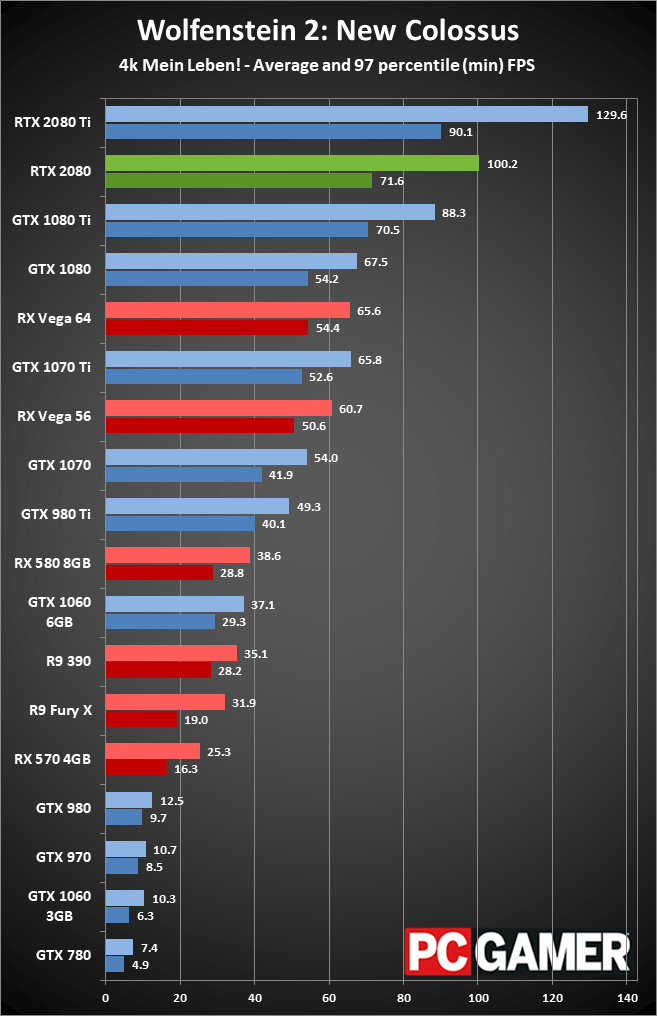

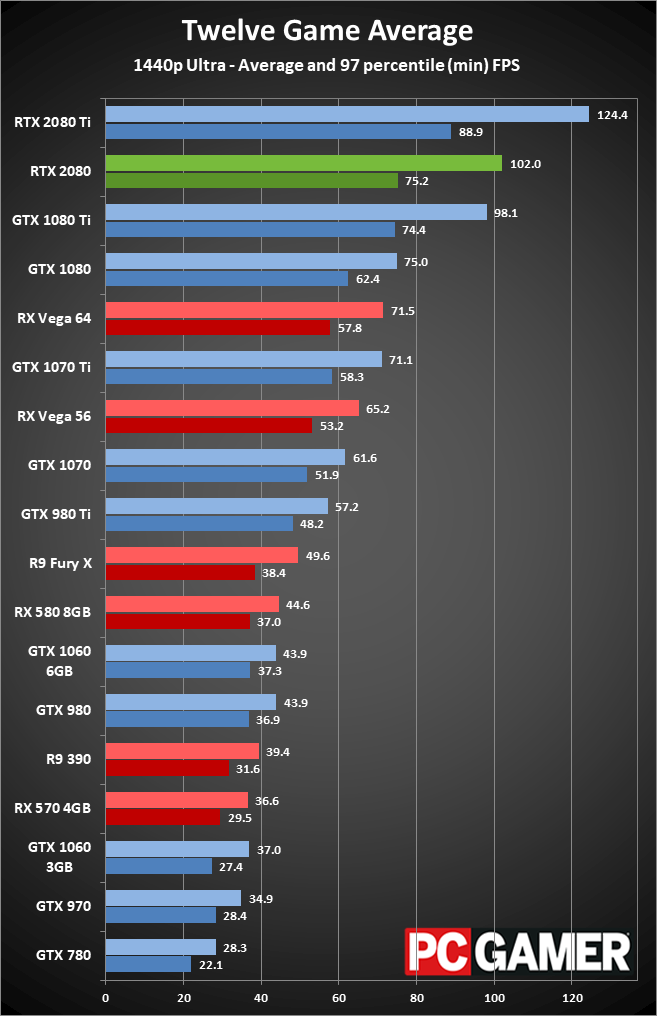

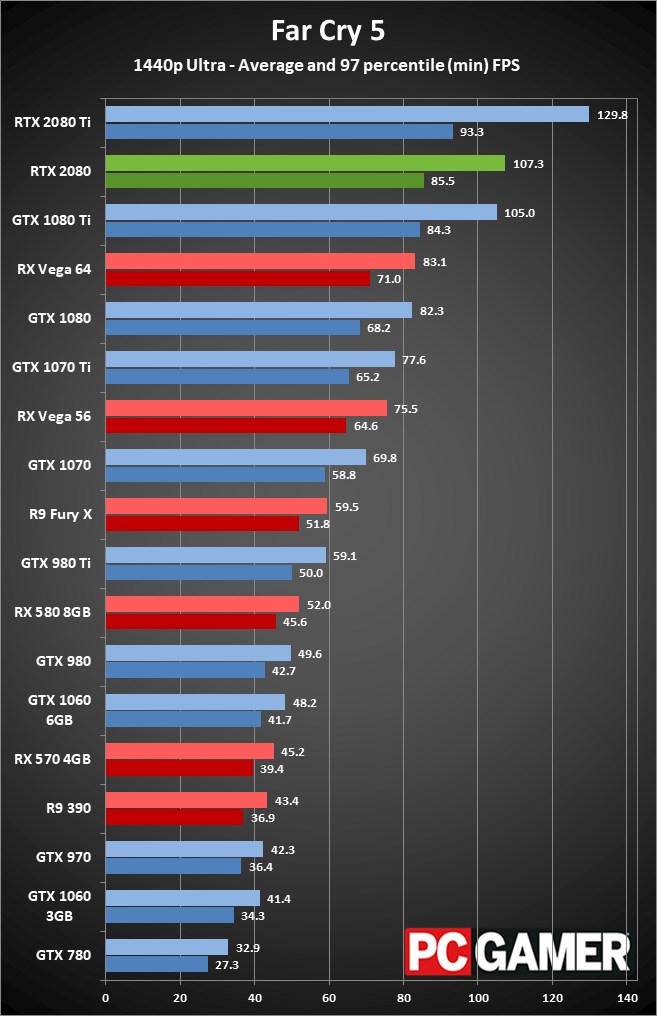

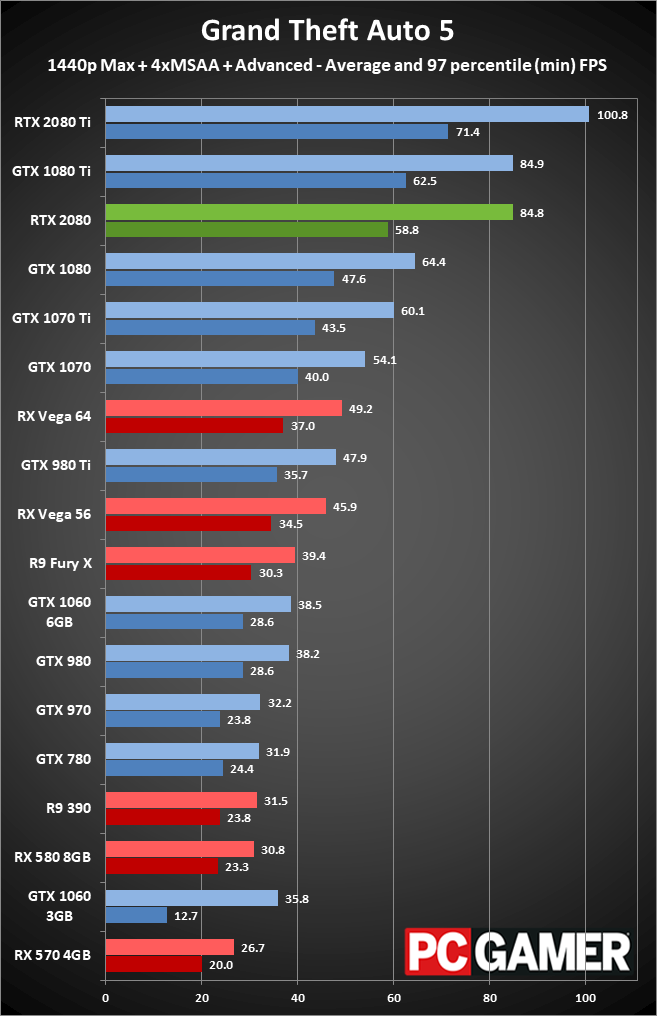

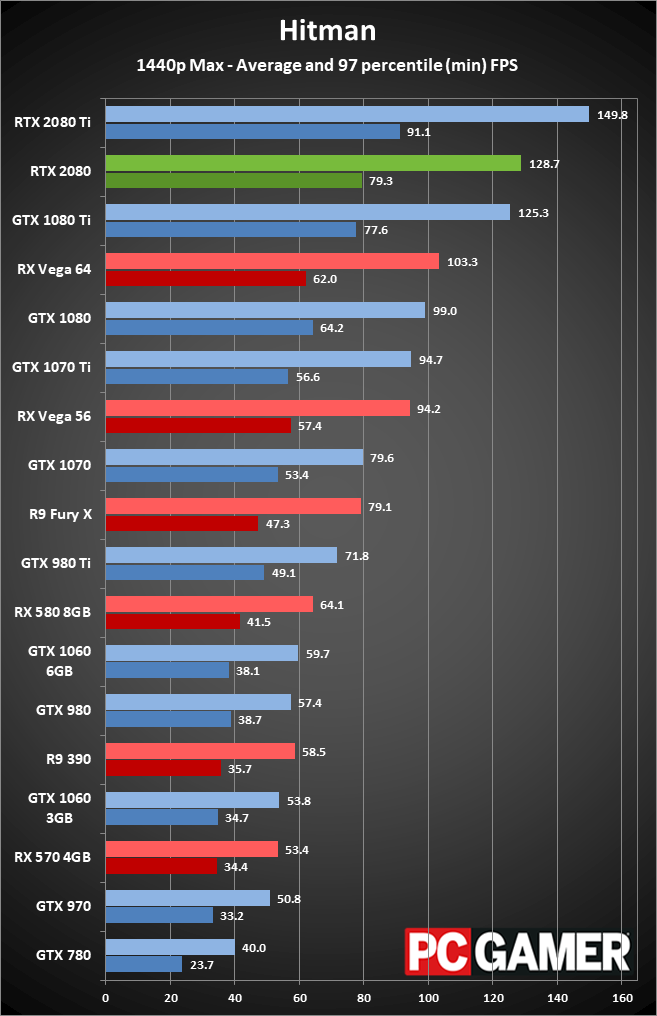

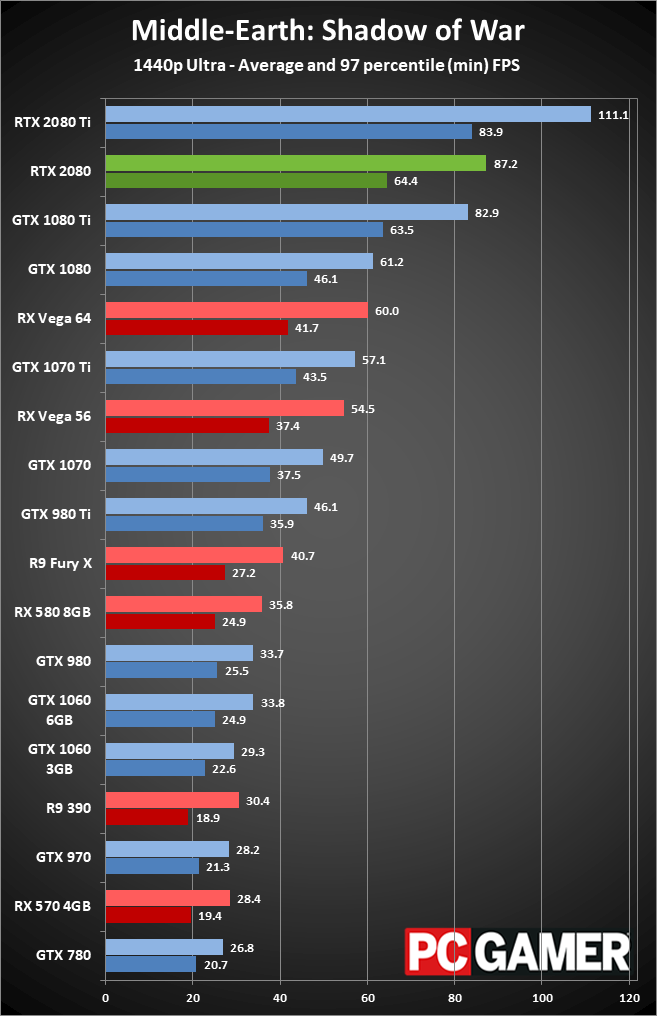

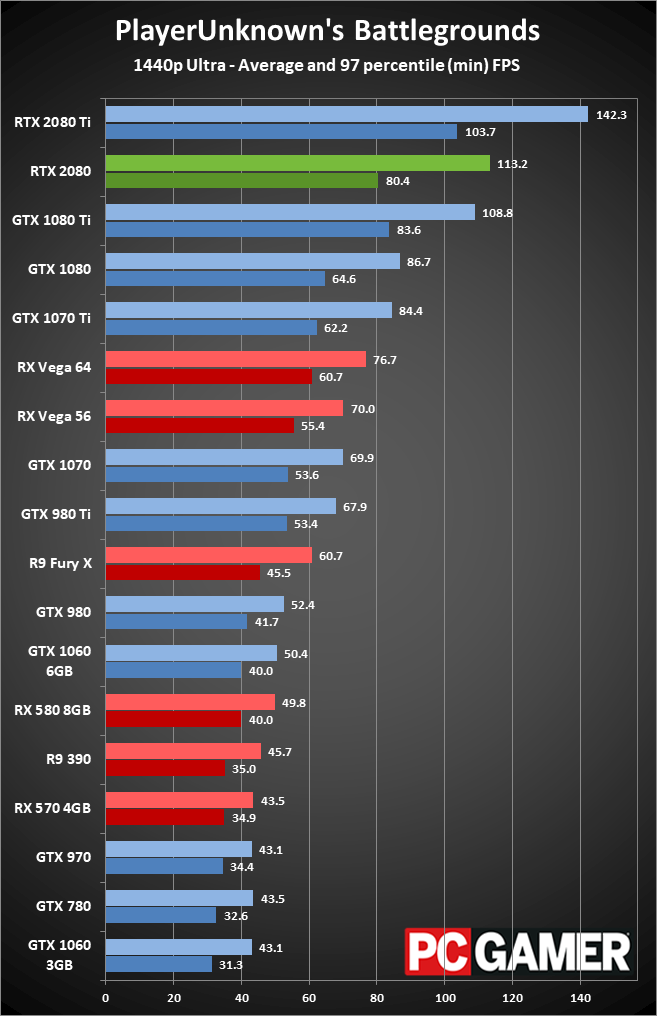

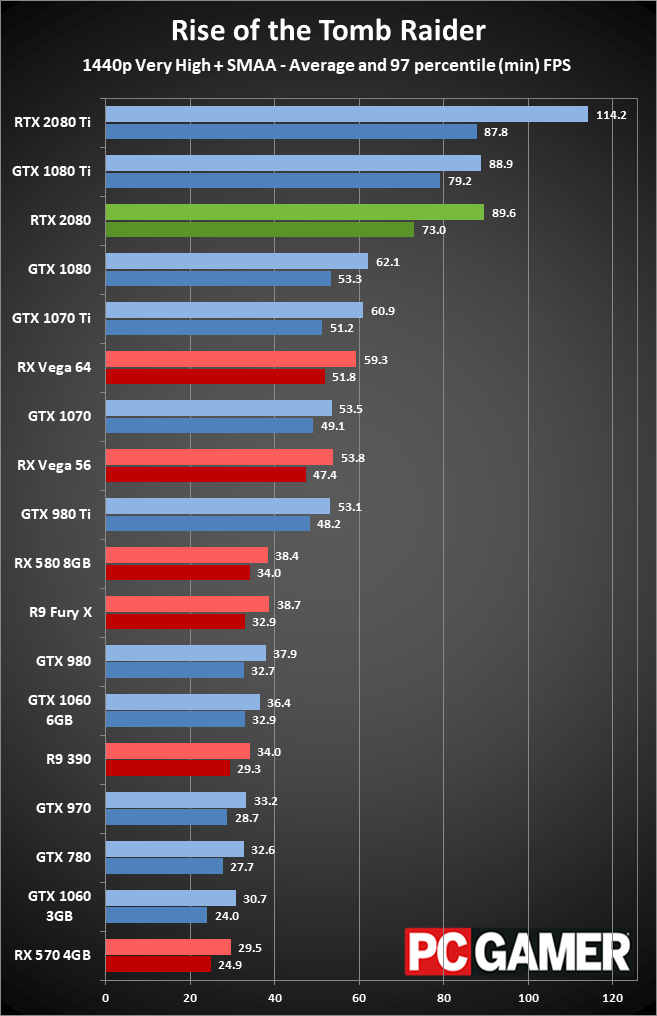

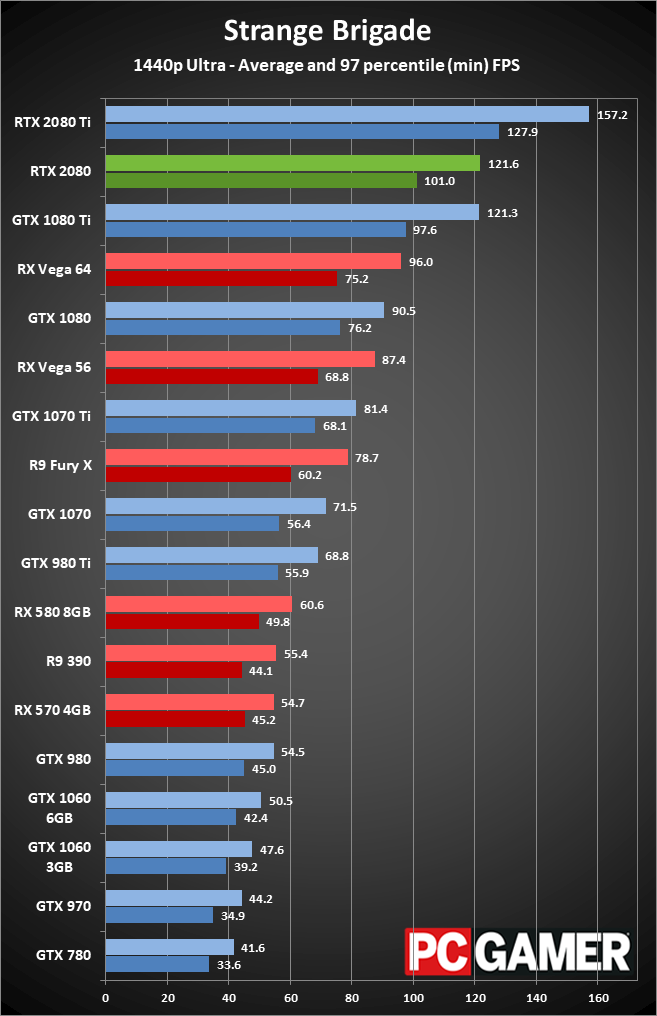

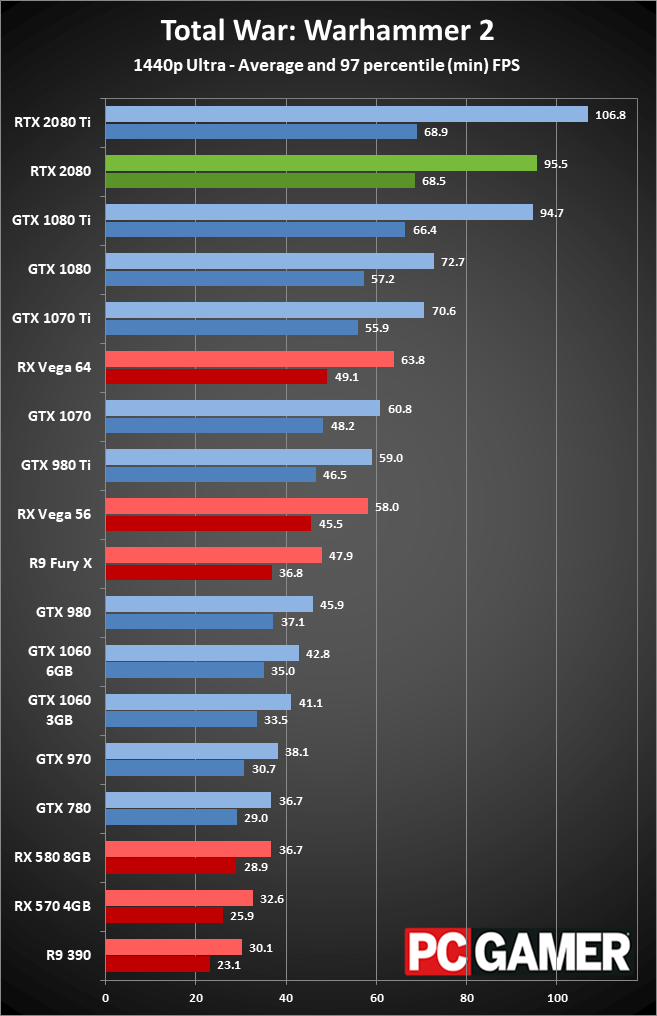

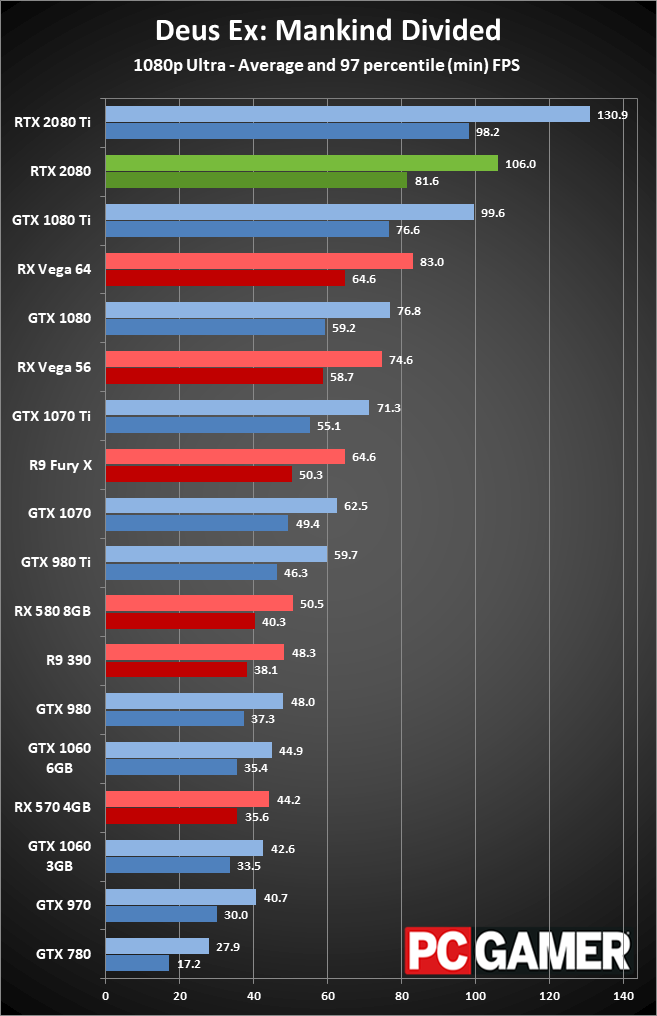

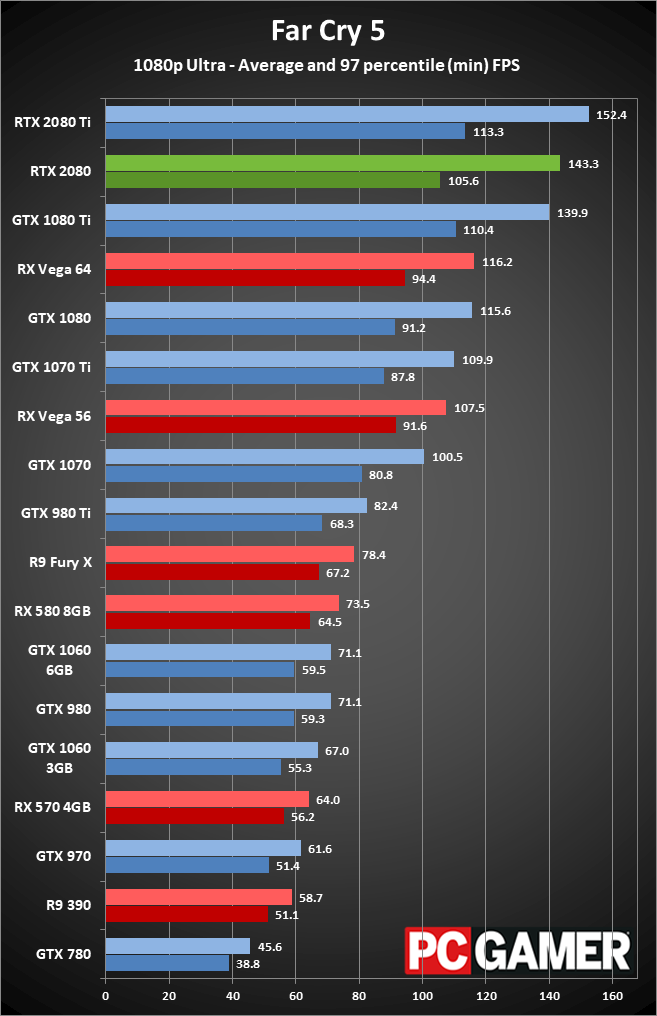

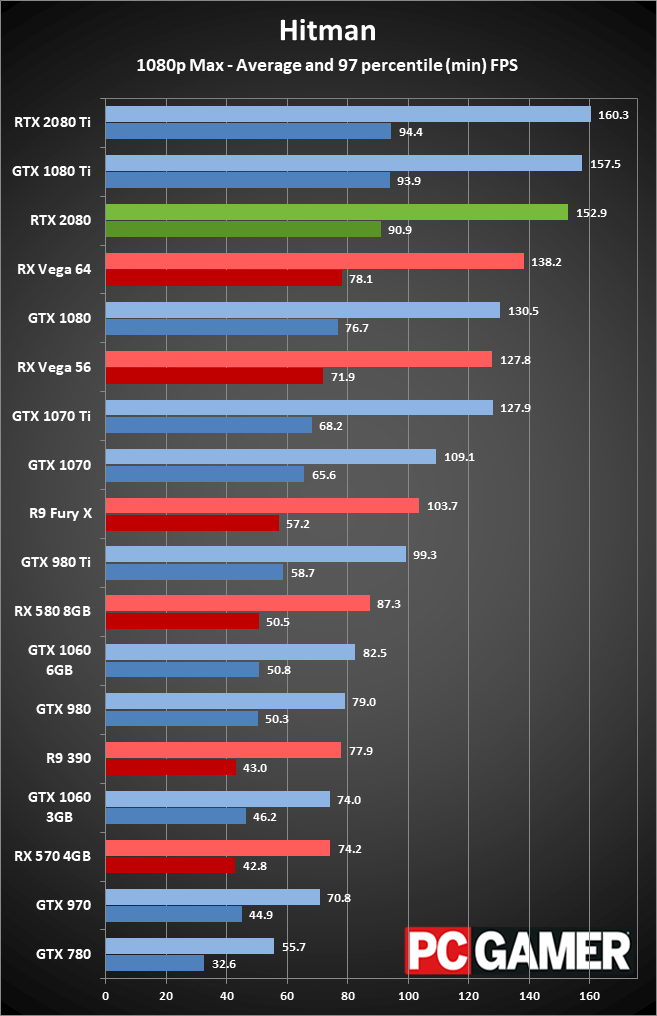

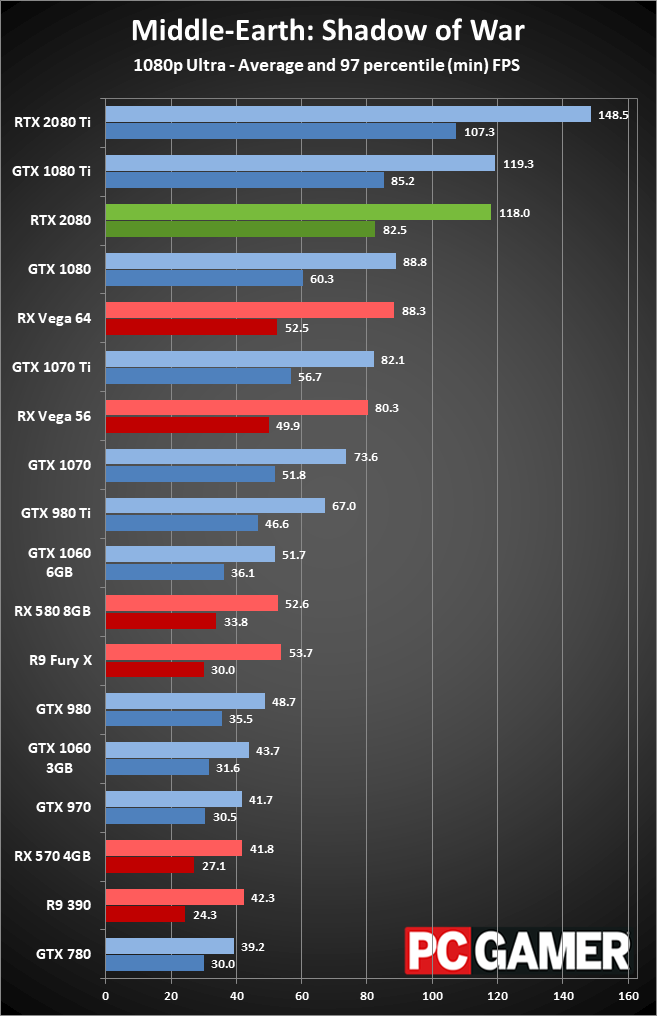

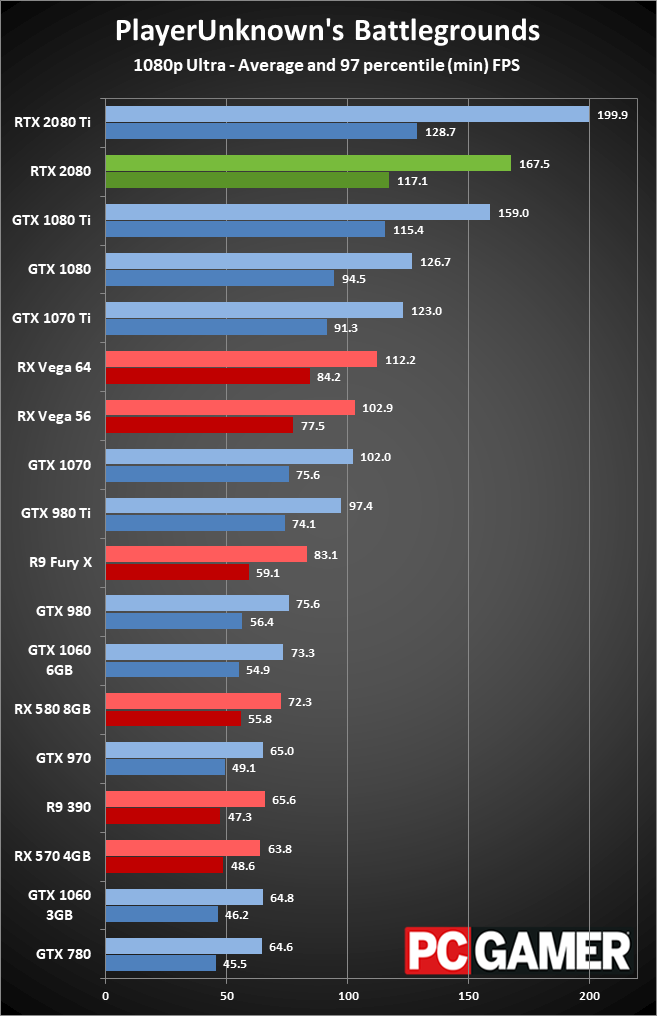

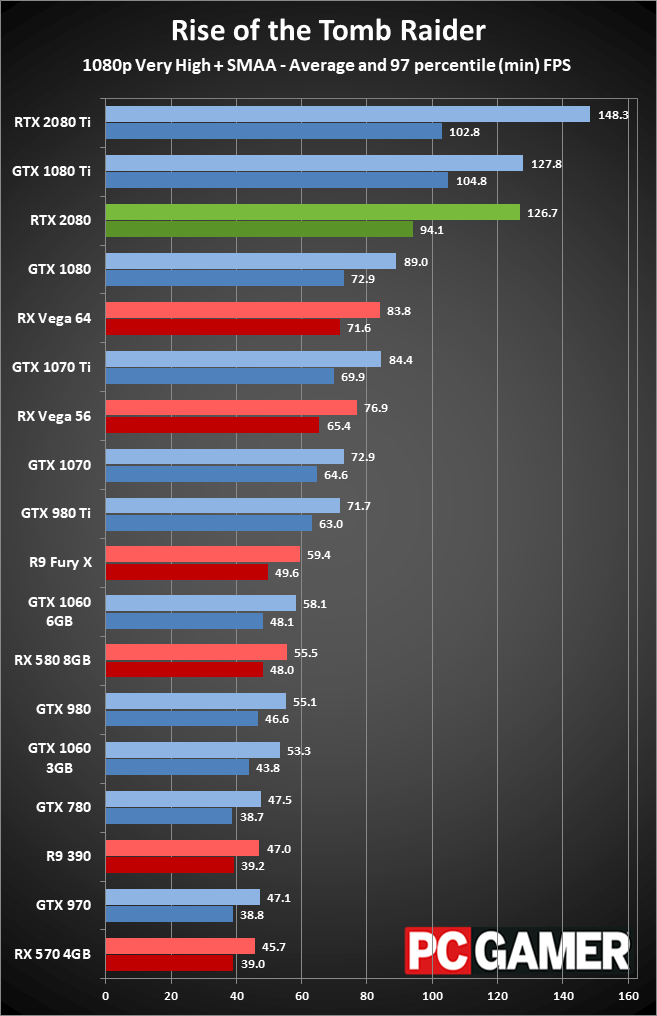

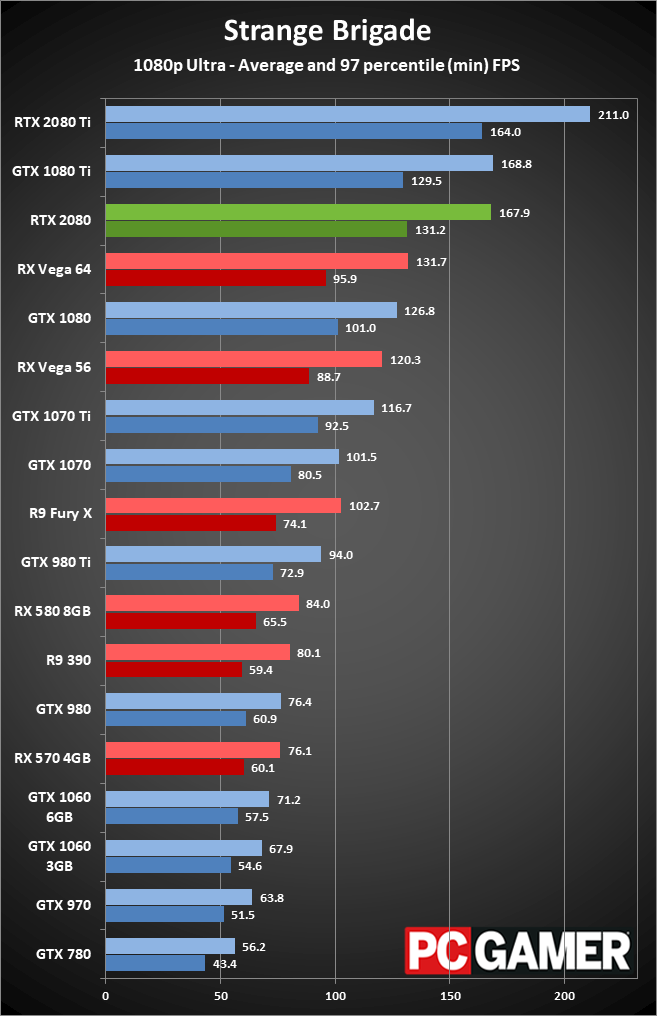

Starting at 4k, there was hope that the GeForce RTX 2080 would be significantly faster than the GTX 1080 Ti. That clearly isn't the case, at least not in existing games. Hitman running DX12 and Wolfenstein 2 using the Vulkan API end up being the only games to see double-digit percentage gains at 4k. Most other games are a wash, and across the twelve tested games, the GeForce RTX 2080 leads the GTX 1080 Ti by just 3 percent overall.

Relative to the GTX 1080, things look much better. The GeForce RTX 2080 registers a healthy 38 percent average improvement at 4k, and a few games show a 50 percent jump. If we were talking about cards nominally priced the same, that would be a great generational improvement, but it comes with a 45 percent price increase over the GTX 1080's current $549 MSRP.
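Weighing the 38 percent gain against the 45 percent price increase is a single division. The sketch also includes a helper for combining per-game speedups into one figure. The review doesn't say how its overall averages are computed; the geometric mean used here is a common choice for benchmark suites and is an assumption, not the author's method.

```python
# Sketch: compare performance gained against price paid, and aggregate
# per-game speedups into one figure.
# ASSUMPTION: the geometric mean is used for illustration only; the review
# doesn't state how its overall averages are calculated.

from statistics import geometric_mean


def perf_per_dollar_ratio(perf_ratio: float, price_ratio: float) -> float:
    """Above 1.0 means the new card delivers more performance per dollar than the old one."""
    return perf_ratio / price_ratio


def overall_speedup(per_game_ratios: list) -> float:
    """Combine per-game fps ratios (new / old) into a single average speedup."""
    return geometric_mean(per_game_ratios)


if __name__ == "__main__":
    # RTX 2080 FE vs GTX 1080 at 4k: 38 percent faster, $799 vs the $549 FE MSRP.
    ratio = perf_per_dollar_ratio(1.38, 799 / 549)
    print(f"Relative perf per dollar: {ratio:.3f}")
```

A result of about 0.95 means that, on these numbers, the RTX 2080 FE delivers roughly 5 percent less 4k performance per dollar than a GTX 1080 FE at its current MSRP. A geometric mean keeps one outlier game (like a 50 percent jump) from dominating the average as much as it would in a simple arithmetic mean of percentages.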

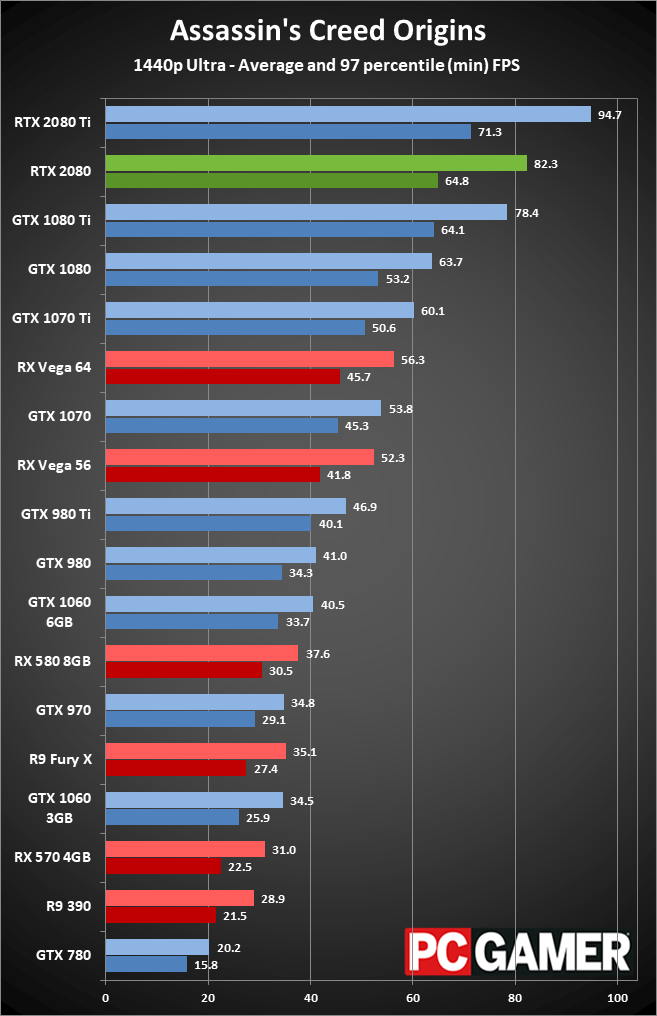

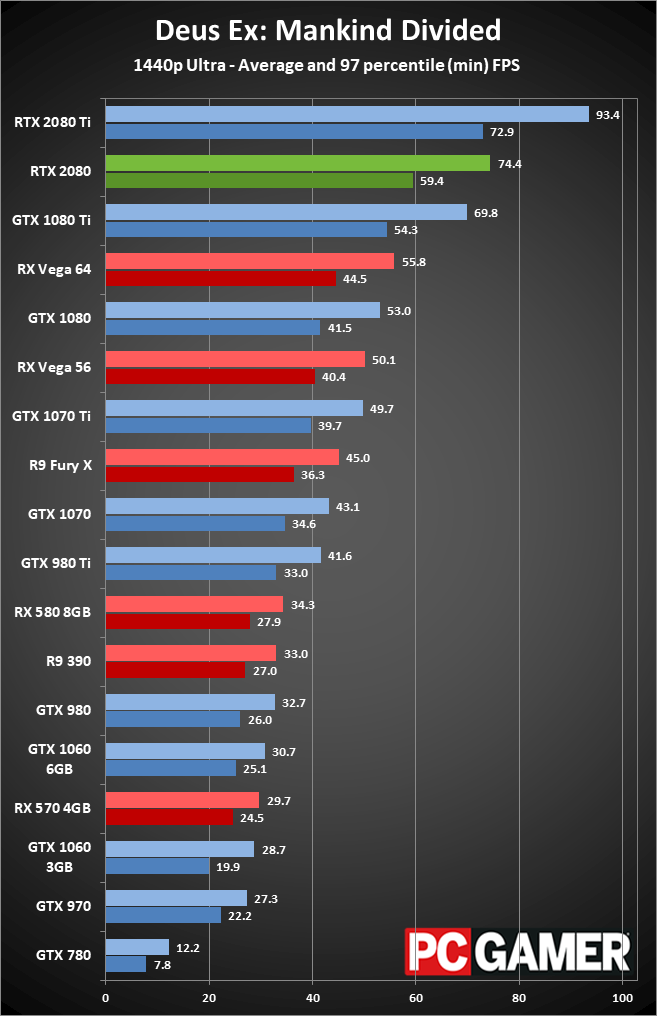

Dropping to 1440p doesn't really change things much. The GeForce RTX 2080 FE is 4 percent faster than the GTX 1080 Ti, and 36 percent faster than the GTX 1080. If you have a 144Hz G-Sync display, you're in the sweet spot of around 100fps. However, you honestly wouldn't notice the difference between 1080 Ti and 2080 in the current crop of games. That might change once the ray tracing and DLSS games start arriving, but right now it's a wash.

You really shouldn't be thinking about buying a GeForce RTX 2080 for 1080p gaming—at least not without ray tracing and/or DLSS 2X. It's still technically faster than previous generation 10-series cards, but the high-end cards all hit 90fps averages or more. The 1080p medium charts are provided purely for the sake of completeness.

Overall, I'm surprised we don't see a larger performance jump from the Turing architecture. Relative to the GTX 1080, clockspeed and core counts should provide a 20 percent boost, and memory bandwidth is 40 percent higher. Nvidia claims the CUDA cores are 50 percent more efficient this time, and memory subsystem enhancements further boost effective memory bandwidth. Our benchmarks meanwhile don't come anywhere near those theoretical numbers. That might seem like typical marketing BS, but it may also be a matter of driver maturity, or of how Nvidia defines 'efficiency.' There's hope that future drivers will improve performance, as often happens in the first couple of months after a new architecture launches.
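The 20 and 40 percent figures are paper estimates against the GTX 1080. This sketch reproduces them from cores, boost clocks, and bandwidth. The GTX 1080 FE numbers (2,560 CUDA cores, 1733MHz boost, 320GB/s) are Nvidia's published specs rather than figures from this review, and real clocks under GPU Boost vary, so treat the ratios as rough first-order estimates.

```python
# Sketch: first-order "paper" scaling from the GTX 1080 FE to the RTX 2080 FE.
# ASSUMPTION: GTX 1080 FE figures are Nvidia's published specs, not numbers
# from this review. Ratios ignore per-core efficiency, caching, and compression.

from dataclasses import dataclass


@dataclass(frozen=True)
class GpuSpec:
    name: str
    cuda_cores: int
    boost_mhz: int
    bandwidth_gbs: float


RTX_2080_FE = GpuSpec("GeForce RTX 2080 FE", 2944, 1800, 448.0)
GTX_1080_FE = GpuSpec("GeForce GTX 1080 FE", 2560, 1733, 320.0)


def shader_throughput_ratio(new: GpuSpec, old: GpuSpec) -> float:
    """Ratio of cores x clock, ignoring any per-core efficiency changes."""
    return (new.cuda_cores * new.boost_mhz) / (old.cuda_cores * old.boost_mhz)


def bandwidth_ratio(new: GpuSpec, old: GpuSpec) -> float:
    """Ratio of raw memory bandwidth, ignoring compression and caching changes."""
    return new.bandwidth_gbs / old.bandwidth_gbs


if __name__ == "__main__":
    print(f"Cores x clock: {shader_throughput_ratio(RTX_2080_FE, GTX_1080_FE):.2f}x")
    print(f"Memory bandwidth: {bandwidth_ratio(RTX_2080_FE, GTX_1080_FE):.2f}x")
```

This gives about 1.19x from cores and clocks and 1.40x from bandwidth. Stacking Nvidia's claimed 50 percent per-core efficiency gain on top would suggest far more than the 36 to 38 percent the benchmarks show at 1440p and 4k.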

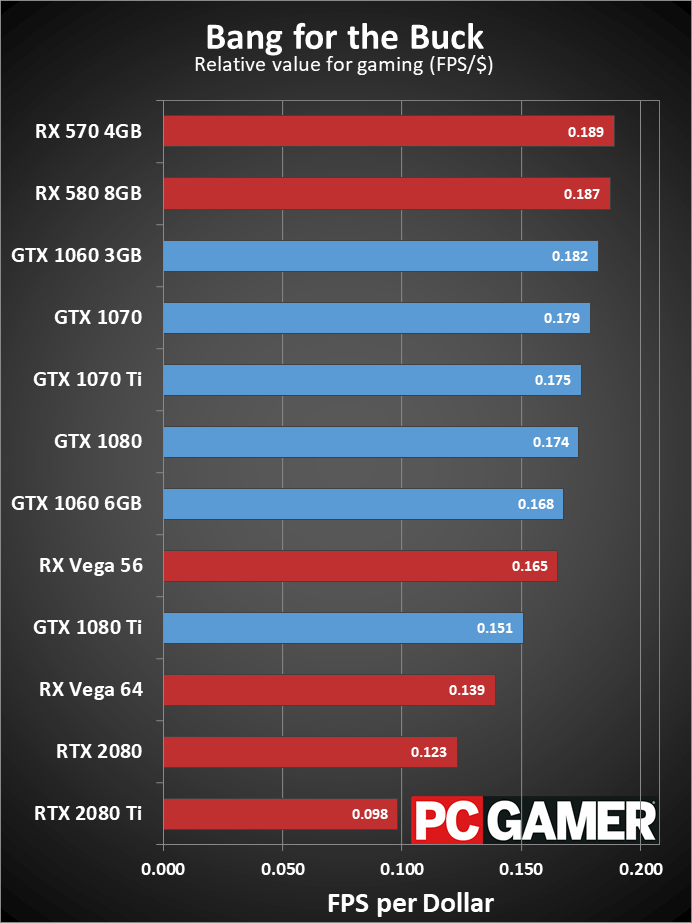

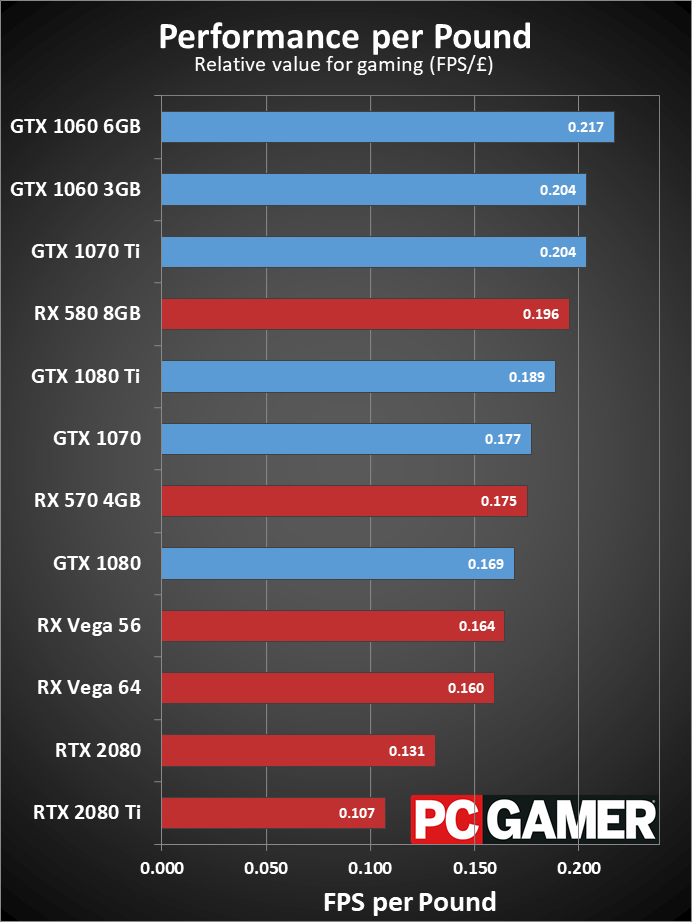

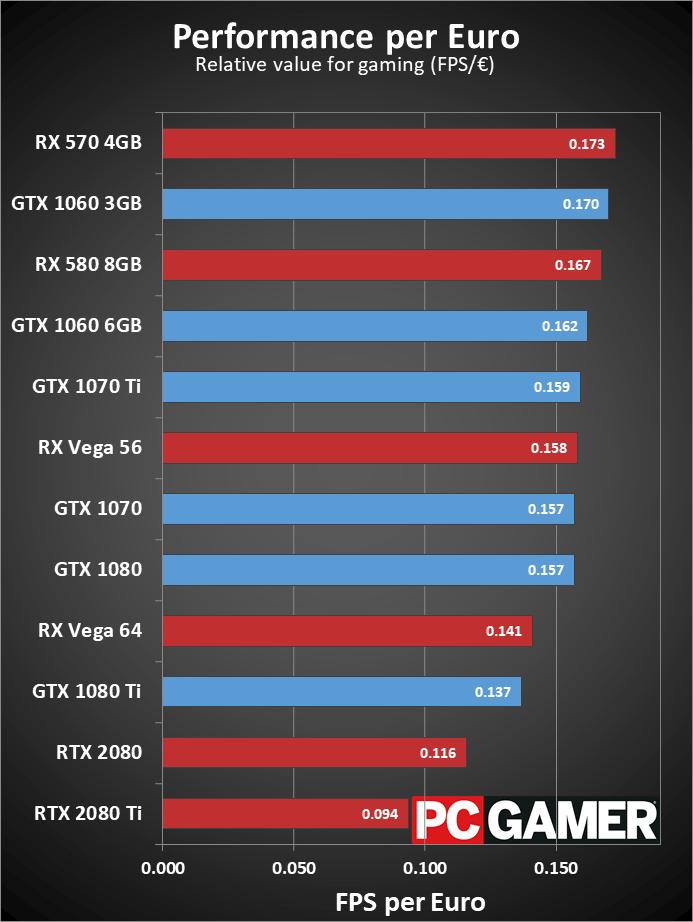

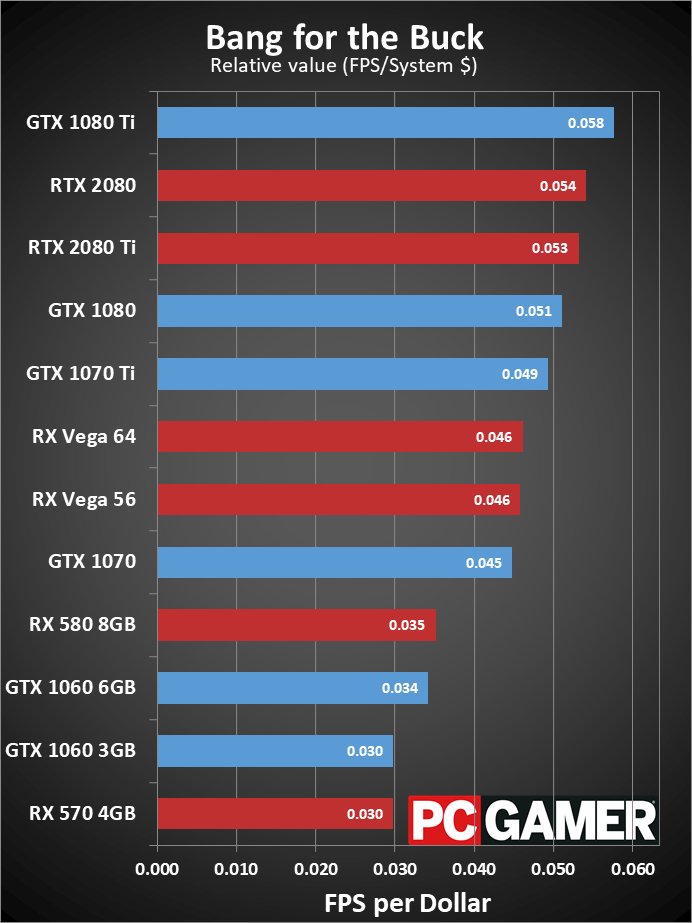

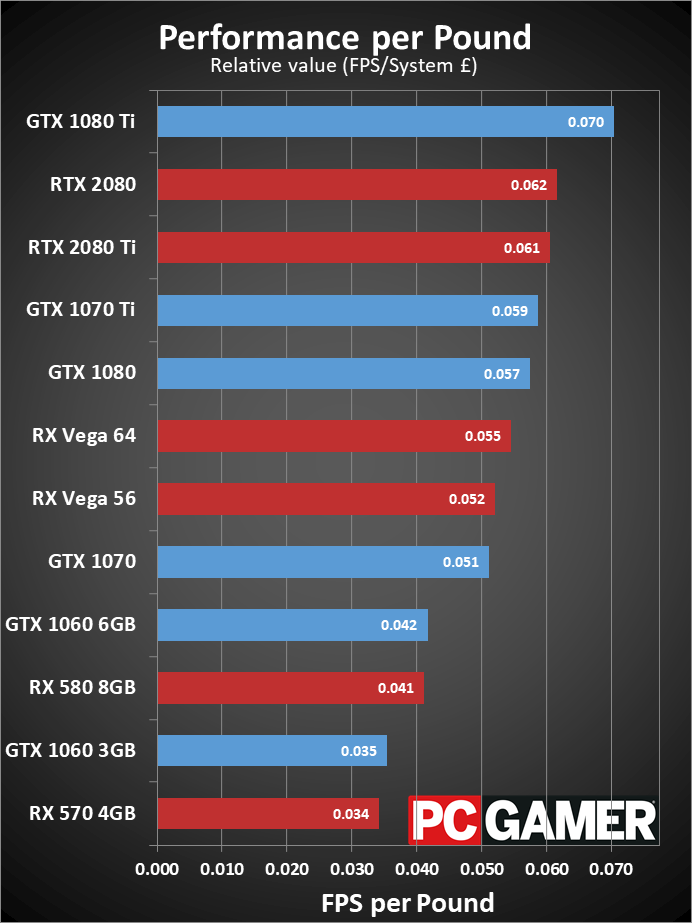

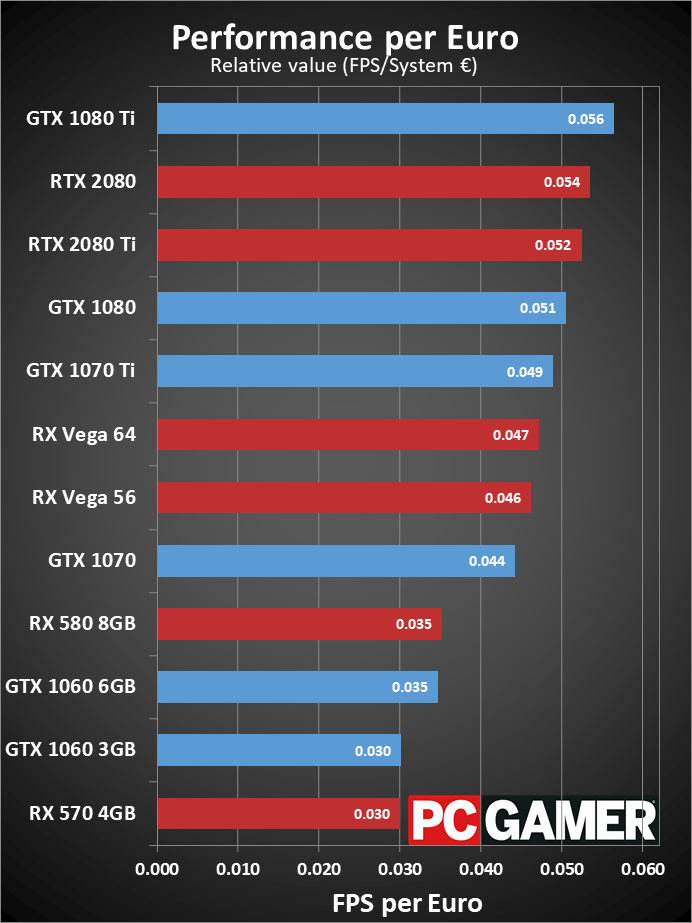

The GeForce RTX 2080 Founders Edition value proposition

There's another way of looking at performance: fps per dollar (or pound, or euro). That works well if you're only buying a graphics card, but some people are looking at building an entirely new PC, so I've included both value metrics in the above charts, for the US, UK, and Europe (using German pricing). If you're only looking to upgrade your graphics card, the new GeForce RTX 2080 is a terrible value; the only worse value is the even more expensive 2080 Ti. That holds true regardless of your location. For users looking to build a high-end gaming PC like my testbed, things change quite a bit, with the 1080 Ti claiming top honors, followed by the GeForce RTX 2080.

How can a graphics card be both the best and worst value? It's all in the perspective, and that perspective includes the current crop of tested games. Test again in six months using games with ray tracing or some other new feature and the rankings could change again. You should also factor in your current graphics card when considering value: upgrading from a GTX 1070 to an RTX 2080 is a big jump, but coming from a GTX 1080 Ti the potential gains are much smaller. Put another way, I don't feel either of these value charts is an entirely valid representation of the market, because there are dozens of contributing factors, but they're at least an interesting data point to consider.
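The "best and worst value" paradox comes from what goes in the denominator. This simplified model uses the review's 4k result (RTX 2080 about 3 percent faster than a GTX 1080 Ti) and prices ($799 vs the cheapest $649 1080 Ti), then solves for the hypothetical platform cost at which both cards deliver the same performance per dollar. The platform cost is an illustrative input, not the review's testbed price, and the actual charts use measured fps and regional pricing.

```python
# Sketch: why "value" depends on whether you count only the card or the whole PC.
# Relative performance and card prices come from the review; platform_cost is
# a hypothetical input for illustration.


def perf_per_dollar(relative_perf: float, card_price: float, platform_cost: float = 0.0) -> float:
    """Relative performance divided by total spend (card plus the rest of the PC)."""
    return relative_perf / (card_price + platform_cost)


def break_even_platform_cost(perf_a: float, price_a: float, perf_b: float, price_b: float) -> float:
    """Platform cost P at which card A and card B have equal perf per dollar.

    Solves perf_a / (price_a + P) == perf_b / (price_b + P) for P.
    """
    if perf_a == perf_b:
        raise ValueError("performance must differ to solve for a break-even cost")
    return (perf_b * price_a - perf_a * price_b) / (perf_a - perf_b)


if __name__ == "__main__":
    p = break_even_platform_cost(1.03, 799, 1.00, 649)
    print(f"RTX 2080 FE matches the 1080 Ti on perf per dollar at about ${p:,.0f} of other parts")
```

With only a 3 percent lead, the RTX 2080 FE would need roughly $4,350 of other components before it caught the 1080 Ti on fps per dollar. As the platform cost grows, though, the gap between the two shrinks, which is why both cards climb the full-PC chart while the 1080 Ti stays on top.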

The GeForce RTX 2080 Founders Edition is fast, but is it fast enough?

I can't help feeling like there's a ton of untapped potential in the GeForce RTX 2080 right now. The GTX 1080 Ti delivers basically the same level of performance in current games, and with the cryptocurrency-induced graphics card shortage behind us and the next-generation cards arriving, 1080 Ti cards are selling for under $650 and dropping. But when next-generation games start arriving, the 1080 Ti might suddenly look outdated. That makes the $799 RTX 2080 Founders Edition a question of faith.

Do you believe enough games that leverage the new ray tracing features will arrive in time to warrant jumping on the bandwagon? And will those games run well enough on the RTX 2080, when many appeared to struggle even on the beefier RTX 2080 Ti? Are you willing to pay more money for a card that's only incrementally faster on existing games than the former champion, in the hope it will deliver significantly better performance in the future? These are good questions, and there's no clear answer.

I'm optimistic about the future potential for the Turing architecture, as it represents the biggest improvement to the graphics rendering pipeline we've seen since the first GeForce cards. And let's be clear on this as well: Nvidia isn't just milking customers. Turing TU104 is a huge chip, 16 percent larger than GP102 with 13 percent more transistors, and about 74 percent larger than GP104 with nearly twice as many transistors. It includes more CUDA cores, architectural enhancements, RT cores, and Tensor cores, all investments in a ray traced future.
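The die comparisons can be checked directly. Only the TU104 figures (545mm², 13.6 billion transistors) come from this review; the GP102 (471mm², 12.0 billion) and GP104 (314mm², 7.2 billion) numbers are Nvidia's published Pascal specs.

```python
# Sketch: check the die-size and transistor comparisons.
# ASSUMPTION: GP102 and GP104 figures are Nvidia's published Pascal specs;
# only the TU104 numbers appear in this review.

CHIPS = {
    "TU104": (545, 13.6),   # (die area in mm², transistors in billions)
    "GP102": (471, 12.0),
    "GP104": (314, 7.2),
}


def percent_larger(a: float, b: float) -> float:
    """How much larger a is than b, in percent."""
    return 100 * (a / b - 1)


if __name__ == "__main__":
    tu_area, tu_xtors = CHIPS["TU104"]
    for name in ("GP102", "GP104"):
        area, xtors = CHIPS[name]
        print(f"TU104 vs {name}: area +{percent_larger(tu_area, area):.0f}%, "
              f"transistors +{percent_larger(tu_xtors, xtors):.0f}%")
```

TU104 comes out about 16 percent larger than GP102 with 13 percent more transistors. Against GP104 it's about 74 percent larger by area and carries nearly twice the transistors (about 89 percent more).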

From the technology side of things, Turing and the GeForce RTX 2080 are amazing and warrant a substantially higher score. In another year, maybe less, GeForce RTX 2080 could be a kick-ass product. Or it may be the first generation of ray tracing consumer hardware that's not quite able to handle the workload.

It's the proverbial chicken and egg problem. Nvidia has created the hardware solution, and now it's up to the software to catch up. But in the here and now, the RTX 2080 carries a high price and lacks the raw performance to back it up. It's not a revolution, at least not yet, but for those who believe in a ray traced future, go ahead and bump the score up by 10 points.

Jarred's love of computers dates back to the dark ages when his dad brought home a DOS 2.3 PC and he left his C-64 behind. He eventually built his first custom PC in 1990 with a 286 12MHz, only to discover it was already woefully outdated when Wing Commander was released a few months later. He holds a BS in Computer Science from Brigham Young University and has been working as a tech journalist since 2004, writing for AnandTech, Maximum PC, and PC Gamer. From the first S3 Virge '3D decelerators' to today's GPUs, Jarred keeps up with all the latest graphics trends and is the one to ask about game performance.