These are the 5 most ridiculous GPUs of all time (or rather 4 GPUs and one genuine leaf blower)

These GPUs were all kinda genius, but that didn't make them any less ridiculous

The current state of the GPU market is just plain odd, if you ask me. We've got some of the best graphics cards readily available in stock, but nice as they are, they're also majorly overpriced. At the same time, those ever-so-impressive GPUs often turn out to be kind of meh when compared to the previous generations, and we just sort of … live with it.

We're complacent. We take our overpriced, painfully mid GPUs and we like them.

Who can blame us, though? Innovation has turned into iteration. The latest developments in GPU technology often don't feel so mindblowing; they're a natural extension of what came before them. At this point, no one expects AMD and Nvidia to reinvent the wheel. If it works, it works.

But for that wheel to be reinvented, it had to be invented first, and it was a wild time. The early days of graphics cards were nothing like what we're dealing with now, and the road to get here was paved with some truly ridiculous graphics processing units.

Buckle up, because we're about to go on an adventure all the way back to the early days of graphics cards. I'll tell you how it all began, and then, I'll show you some truly ridiculous GPUs — including an Nvidia GPU that was so loud that Nvidia itself made a video just to mock it.

First, a brief history of GPUs

So Nvidia invented the GPU… but did it really?

Before we had ray tracing, path tracing, DLSS, 4K gaming, and all that fancy jazz, we were just lucky to play a game at an 800 x 600 resolution. In fact, we were lucky to play a game at all, and even though everything was pretty much three pixels across in size, in our minds, it looked better than real life. (I'd still prefer to live in The Sims than IRL, as that's where you can somehow buy land and build a house for $20,000. And crisps are entirely free.)

In the early days of computer graphics, innovation was wild, and it came in many shapes and forms. But where did it all start?

If you trust Nvidia, it started in 1999 when, according to the company website, Nvidia "Invented the GPU, the graphics processing unit, which set the stage to reshape the computing industry." However, nothing is ever that simple.

Long before Nvidia started making GPUs en masse, specialized graphics circuits were already integral to computing, present in things such as arcade systems in the '70s. Companies such as RCA introduced their own graphics chips in those days, with chips like Pixie able to output a mindblowing resolution of 64 x 128.

In 1981, IBM introduced the Monochrome Display Adapter (MDA), which was used for displaying text and symbols. Hercules Computer Technology also debuted its own graphics card (HGC) not long after, and Intel joined the fray in 1983 with the iSBX 275 Video Graphics Controller Multimodule Board. Yup, Intel's been in the graphics card game longer than you thought. The iSBX 275 could display a whopping eight total colors at a maximum 256 x 256 resolution. Amazing, right? Hold on, we're getting there.

The '90s saw the advent of more video cards, and it was a volatile time with the constant rise and fall of companies and products alike.

At a time when 2D graphics and 3D graphics weren't handled by a single component, 3dfx Interactive launched the Voodoo graphics chip in 1996, which made real-time 3D graphics accessible for the first time for a broad consumer market. 3D add-in cards such as this couldn't handle 2D graphics, though, so you still needed a separate 2D card with pass-through cables. What a chore.

Meanwhile, near the end of the decade, ATI Technologies (later swallowed up by AMD) was also on the rise as a formidable player, releasing its "Rage" series of graphics accelerators. The Rage Pro (1997) stood out as an attempt to offer a 2D/3D solution in one piece of retro hardware.

Nvidia had been around since 1993, but it only really made a name for itself when it launched the Riva 128 in 1997. This was a big deal, I'm not gonna lie: the Riva 128 did the unthinkable by combining 3D acceleration with high-performance 2D and video acceleration, and it ran at a 100 MHz core clock speed, which honestly makes me tear up a little.

In 1999, Nvidia debuted the GeForce 256 and coined the term "GPU"—but wait, did it really? Reportedly, Sony first used the acronym in 1994 when referring to PlayStation consoles, but it wasn't in the same context as what Nvidia later produced; Nvidia's definition for a GPU included a processor handling geometry transform, lighting, and rendering. Nvidia certainly popularized and fully defined the meaning of a graphics processing unit, but did it invent GPUs as a whole? Definitely debatable.

The most ridiculous GPUs of all time, ranked

Many, many, many GPUs (and GPU-like products) launched between the release of the ol' RIVA 128 and the RTX 5090. Some were brilliant; some were awful; many were downright ridiculous. Let's have a look at some of the most iconic and faintly ludicrous GPUs of all time.

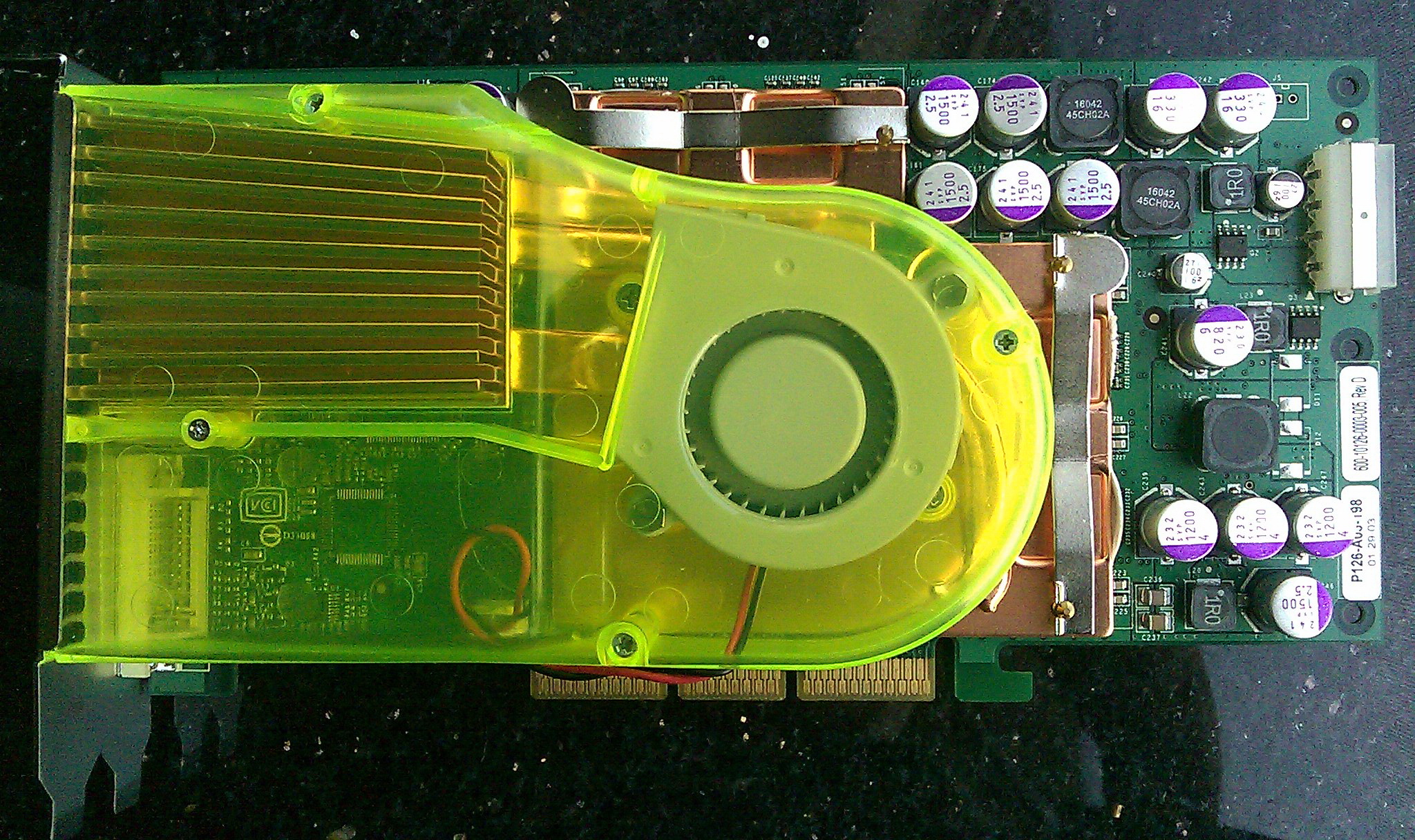

1. Nvidia FX 5800 Ultra

If you think your current gaming rig is loud, think again. It's probably as quiet as a mouse compared to this 2003 Nvidia graphics card.

The Nvidia FX 5800 Ultra, also referred to as the "Dustbuster" or "leaf blower," earned the top spot on this list because it well and truly deserved those two nicknames. Reviewers at the time likened the card to a hairdryer, a leaf blower, a vacuum cleaner, and all manner of things you don't want your computer to sound like. It was seriously loud.

Spec-wise, the GPU had a lot to offer (by 2003 standards, that is). Unfortunately, gamers started complaining in droves the moment they attempted to actually use the GPU and found they needed a pair of earplugs to survive the experience.

The FX 5800 Ultra holds a special place in my heart because it was a rare case of Nvidia having a sense of humor. The company later shared a video of some of its execs discussing the card, making fun of how noisy it was. Give it a looksee—it was hilarious (well, as far as corporate videos go, at least).

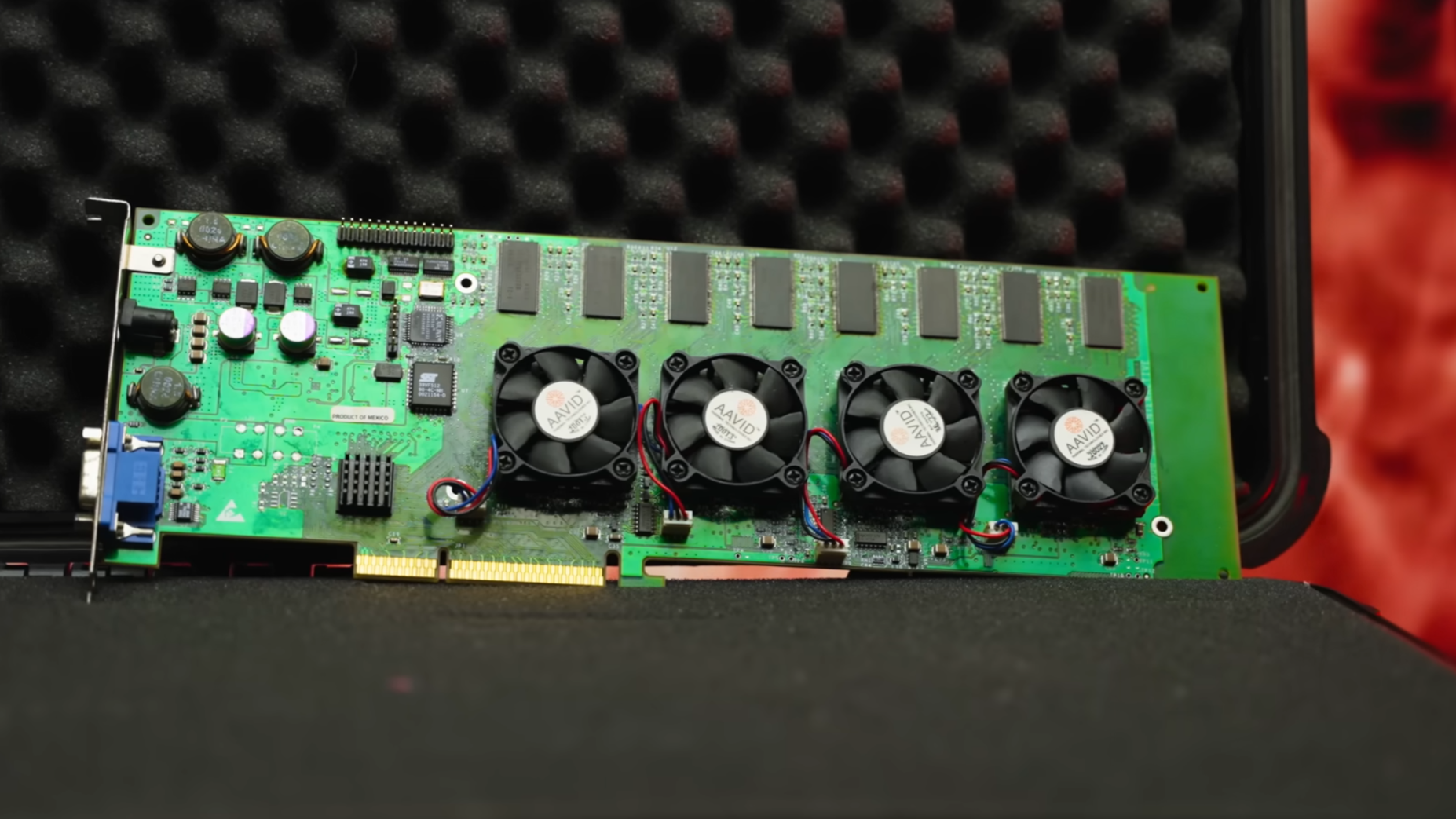

2. 3dfx Voodoo 5 6000

I could write a whole separate article about the history of 3dfx Interactive. The company fell victim to the turbulent hardware industry of the '90s and the early '00s, but before it sold off its remaining assets to Nvidia and filed for bankruptcy in 2002, it launched some once-in-a-lifetime cards. The 3dfx Voodoo 5 6000, however, never actually made it to the mainstream market, but boy, was it a ridiculous GPU.

The main kicker was the fact that it needed its own external power supply. It packed four VSA-100 GPUs onto a single board, and used SLI (no, not the Nvidia kind, but rather Scan Line Interleaving) to run all four at the same time. Each of the four GPUs had 32 MB of 166 MHz SDR memory at its disposal, as well as two pixel pipelines (vertex shaders were not yet a thing back then).
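To make Scan Line Interleaving a bit more concrete, here's a rough sketch of the idea in Python: each chip renders only its share of the frame's horizontal lines, and the pieces are combined into one image. The function name, the 480-line frame, and the plain round-robin split are all my own illustrative assumptions; the real hardware may well have grouped lines differently.

```python
def assign_scanlines(frame_height: int, num_chips: int) -> dict[int, list[int]]:
    """Hypothetical helper: deal scan lines out to chips round-robin."""
    if num_chips < 1:
        raise ValueError("need at least one chip")
    # Chip 0 takes lines 0, N, 2N...; chip 1 takes lines 1, N+1, 2N+1...; and so on.
    return {
        chip: list(range(chip, frame_height, num_chips))
        for chip in range(num_chips)
    }


# Four chips sharing a 480-line frame (illustrative numbers only).
work = assign_scanlines(480, 4)
for chip, lines in work.items():
    print(f"chip {chip}: {len(lines)} lines, starting with {lines[:3]}")
```

The appeal is obvious: with four chips, each one only has to draw a quarter of the lines in every frame.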

A fully-functioning engineering prototype of the Voodoo 5 6000 was sold at an auction for a whopping $15,000. It's truly an unforgettable piece of GPU history; completely over-the-top and 3dfx's last hurrah that sadly never truly saw the light of day.

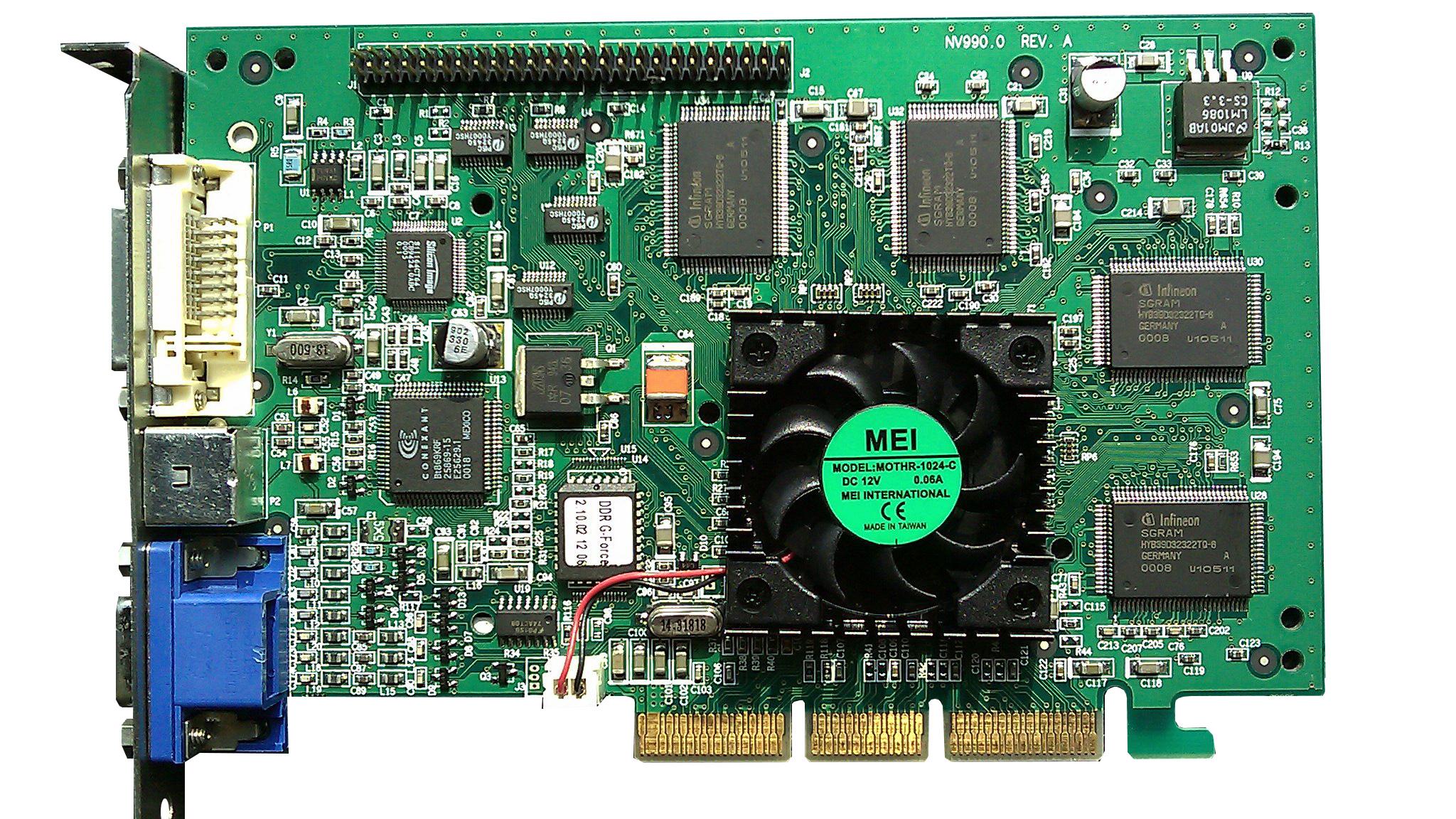

3. Nvidia NV1 (Diamond Edge 3D)

These days, we all know Nvidia as the GPU giant that controls the majority of the market and makes us pretend to be grateful when we're fed a $2,000 graphics card (that is then sold for $3,000, because who cares about the MSRP). But in the '90s, Nvidia had its fair share of blunders, and the NV1 (sold as Diamond Edge 3D) was one of them.

The NV1 was an audacious attempt to be an all-in-one multimedia powerhouse way ahead of its time. It could handle 2D and 3D graphics, supported the Sega Saturn controller, and even had a built-in sound card. The idea was to replace multiple expansion cards with one, but this resulted in a pricey, unnecessarily complex GPU that ran into many compatibility issues.

The biggest problem and innovation all in one was the unique approach to 3D rendering. Watch out: Tech jargon follows.

This "unique approach" was referred to as quadratic texture mapping. The standard for processing 3D objects involved using triangles, but the NV1 used more complex quadratic primitives (what a term). This was great on paper, but compatibility was a problem, and the timing was all kinds of wrong: Microsoft had just committed to triangle-based rendering in its DirectX API, which left the NV1 in a sticky spot.

Imagine if Nvidia had flopped back in the '90s. How much would a high-end GPU cost now? I'd rather not think about it.

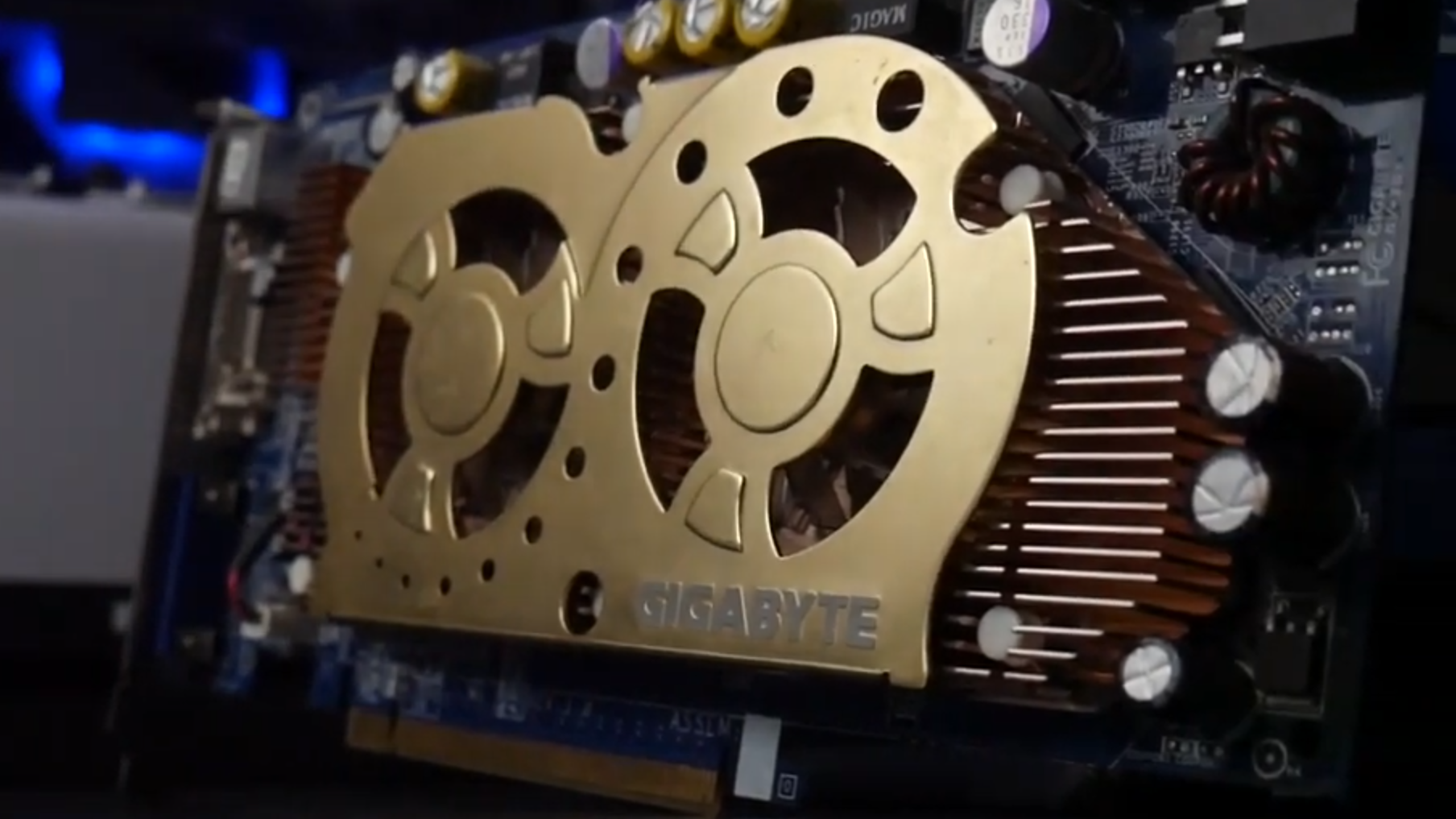

4. Gigabyte GV-3D1

On a list filled with less-than-practical GPUs, the Gigabyte GV-3D1 is perhaps the most impractical one. PC builders love the flexibility of being able to design their rig from scratch, but with this one, you had to do what Gigabyte said or else only use half your GPU. Let me explain.

The GV-3D1 had a niche, proprietary design. Gigabyte grabbed two Nvidia GeForce 6600 GT GPUs and two memory modules, then stuck them all onto one PCB, combining them via SLI. Dual-GPU options weren't all that rare back in those days, but Gigabyte's approach to the subject locked the GV-3D1 into a single specific motherboard. The GPU came bundled with the mobo, sure, but what if you already had a mobo you were happy with? Well, tough luck—no dual GPU GV-3D1 for you!

To be fair, you could use the Gigabyte GV-3D1 with other motherboards, but then it'd only use one of the 6600 GTs, completely defeating the purpose of buying it in the first place. It was also cheaper to buy two 6600 GT cards and a compatible SLI motherboard separately.

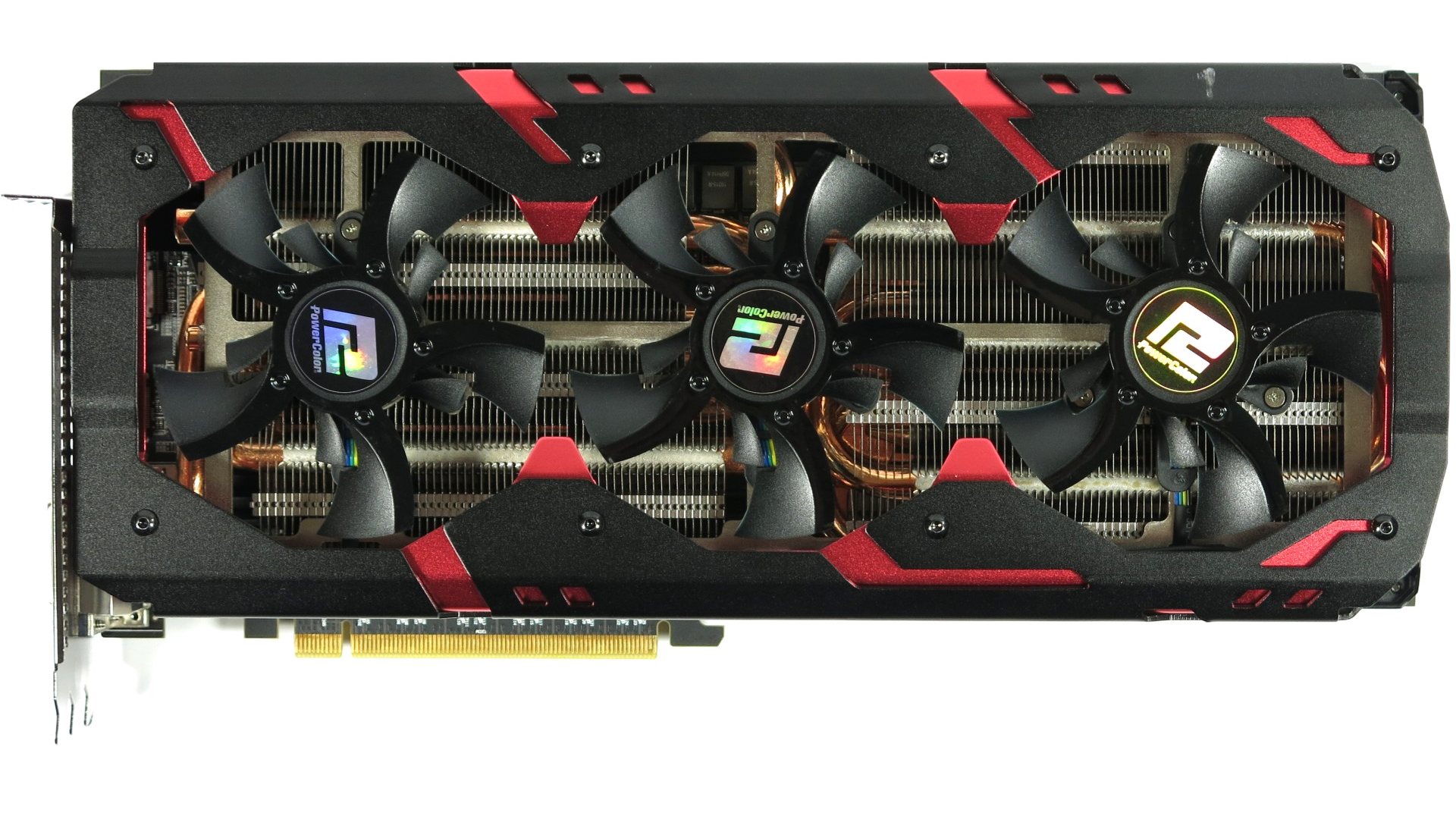

5. PowerColor Devil 13 Dual R9 290X

Here's a newer GPU for you to round out this list. In all honesty, I debated between three different cards that all served up a dual-GPU setup — this one, the Matrox Parhelia, and the ATI Rage Fury MAXX. Ultimately, I think this 2014 GPU is still a more ridiculous option than the two oldies. Here's why:

The PowerColor Devil 13 was as close to extravagant as a GPU can get. (Well, apart from an Asus GPU coated in pure gold.) This thing was a monstrosity. It combined two of AMD's R9 290X GPUs, already known for their high temperatures, and bundled them with a ginormous air cooler; surprise, surprise, this resulted in the GPU being super loud. Our sister site Tom's Hardware reviewed it back in the day and had this to say about it: "Registering more than 58 dB(A) in quiet mode and more than 61 dB(A) in performance mode, this graphics card is a conversation piece only in the sense that it doesn’t allow conversations to happen anywhere near it."

To make this whole thing work, PowerColor equipped the Devil with four 8-pin power connectors, demanding up to 675 watts just for the card. That's more power hungry than even the RTX 5090 today. Also, the GPU came with a support bracket to prevent sag way before it was cool, and with good reason: It weighed over 5 lbs.
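That 675-watt figure isn't arbitrary, by the way. Assuming the usual PCI Express limits of 75 W from the slot and 150 W per 8-pin connector (those numbers come from the PCIe spec, not from PowerColor, and the function name is just for illustration), the math is easy to sketch:

```python
# Assumed limits from the PCI Express spec, not from PowerColor's documentation.
PCIE_SLOT_WATTS = 75    # power available through the x16 slot itself
EIGHT_PIN_WATTS = 150   # power available per 8-pin auxiliary connector


def max_board_power(eight_pin_connectors: int) -> int:
    """Hypothetical helper: upper bound on in-spec power for a card."""
    return PCIE_SLOT_WATTS + eight_pin_connectors * EIGHT_PIN_WATTS


devil_13_watts = max_board_power(4)
print(f"Four 8-pin connectors plus the slot: {devil_13_watts} W")
```

In other words, 675 W is the ceiling the connectors allow for, not necessarily what the card pulled in every game.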

And for all this, you had to pay $1,500, which works out as a little over $2,000 today. Somehow, that's comforting to me; the GPU hellscape we're stuck in now is in some ways no different from the one from a decade ago.

We've really got it good now, don't we?

Man, looking through all these GPUs made me majorly nostalgic. I built my first PC in 2005, and so much has changed since then.

The early days of graphics cards were filled with so much wide-eyed imagination. Companies rose to fame and crashed and burned in record time, as innovation was so rapid back then that it was hard to keep up with, but at least you could count on most new releases being thrilling, for good or ill. Some of the approaches to GPUs were downright misguided, but they brought a feeling of excitement that's hard to replicate in 2025.

These days, we're tracking benchmarks for single-digit fps gains. Back then, GPUs were often 50% to 100% faster from one generation to the next.

But innovation doesn't stop here. We do get genuinely new developments here and there, and while the focus is currently largely on AI, we gamers will eventually benefit from that, too, even if we're left to just collect the leftover scraps. And who knows, multi-GPU cards might one day make a reappearance for PC gamers if anyone can figure out how to make multiple graphics compute chiplets actually work in a gaming context.

One thing is for sure: Looking back gave me a newfound appreciation for where we're at right now, and the next time I decide to play Cyberpunk 2077, I'll pause and appreciate the experience a tad more than I did the previous time.

Monica started her gaming journey playing Super Mario Bros on the SNES, but she quickly switched over to a PC and never looked back. These days, her gaming habits are all over the place, ranging from Pokémon and Spelunky 2 to World of Warcraft and Elden Ring. She built her first rig nearly two decades ago, and now, when she's not elbow-deep inside a PC case, she's probably getting paid to rant about the mess that is the GPU market. Outside of the endless battle between AMD and Nvidia, she writes about CPUs, gaming laptops, software, and peripherals. Her work has appeared in Digital Trends, TechRadar, Laptop Mag, SlashGear, Tom's Hardware, WePC, and more.
