Intel's patent for 'software defined super cores' probably won't make an appearance in CPUs any time soon but implementing the tech could spell the end of the P-core

No P, no E. Just cores. Super cores.

In the world of CPUs, GPUs, and all things chip-based, patent applications are so frequent that there's little point in paying attention to them. However, one filing from Intel has made its way into tech news headlines because it offers a clever way to improve a CPU's performance-per-watt efficiency. It also suggests that Intel's hybrid approach to processors could ultimately give way to an all-E-core design.

Initially submitted to the US Patent Office at the very end of 2023 (via Videocardz), Intel's application for its so-called 'software defined super cores' (SDCs) is still pending. But given how long it takes to do all of the required research, testing, planning, and writing of the patent, it's clear that Intel has been working on this for a good while.

That doesn't mean we're likely to see the implementation of SDCs in the next generation of Core Ultra chips, though. In fact, SDCs may never see the light of day. Just because something has been patented doesn't mean it ultimately gets used, because it could be too expensive to implement or, in this particular case, it might be too dependent on other variables outside of Intel's control to be really effective.

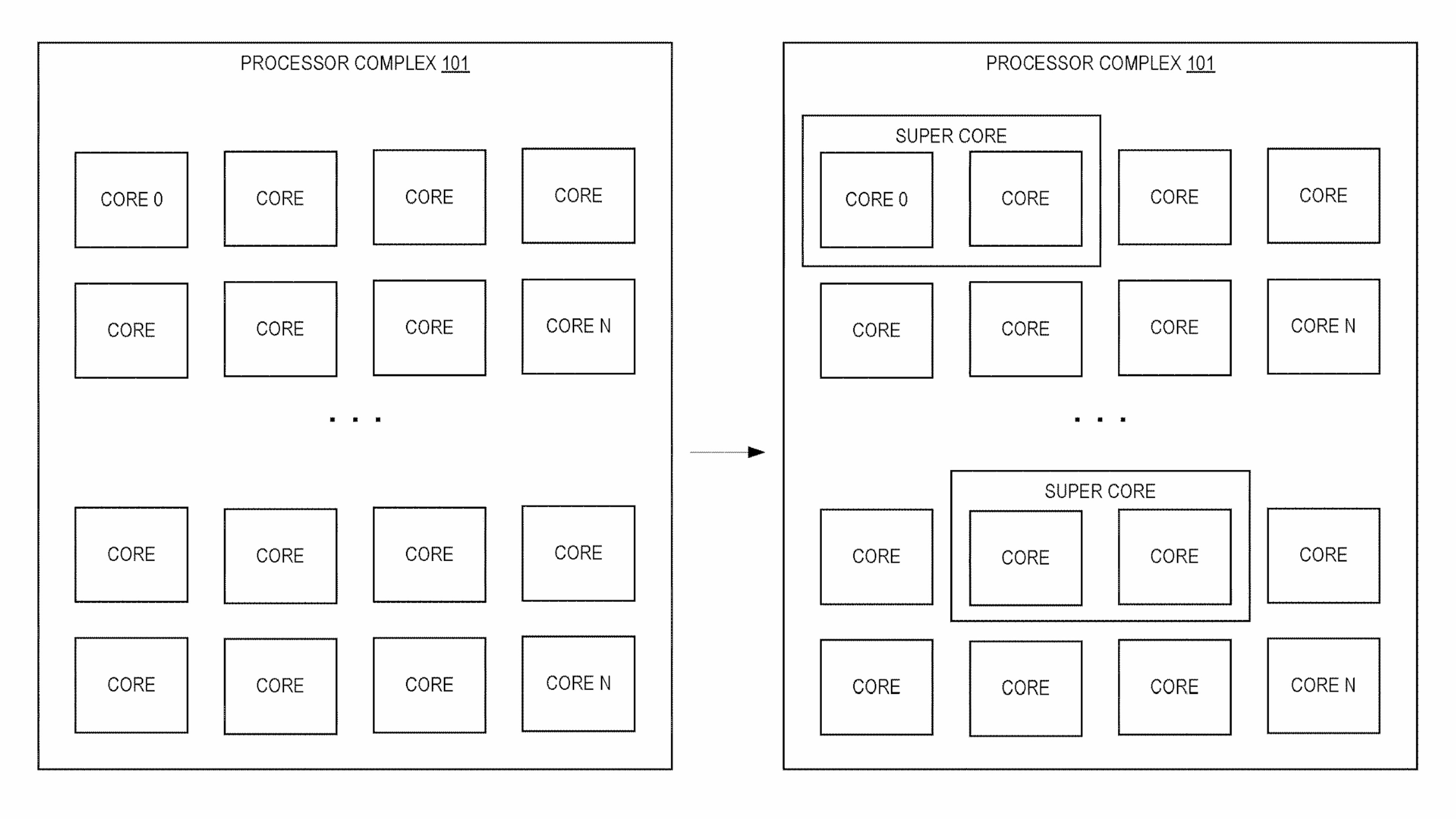

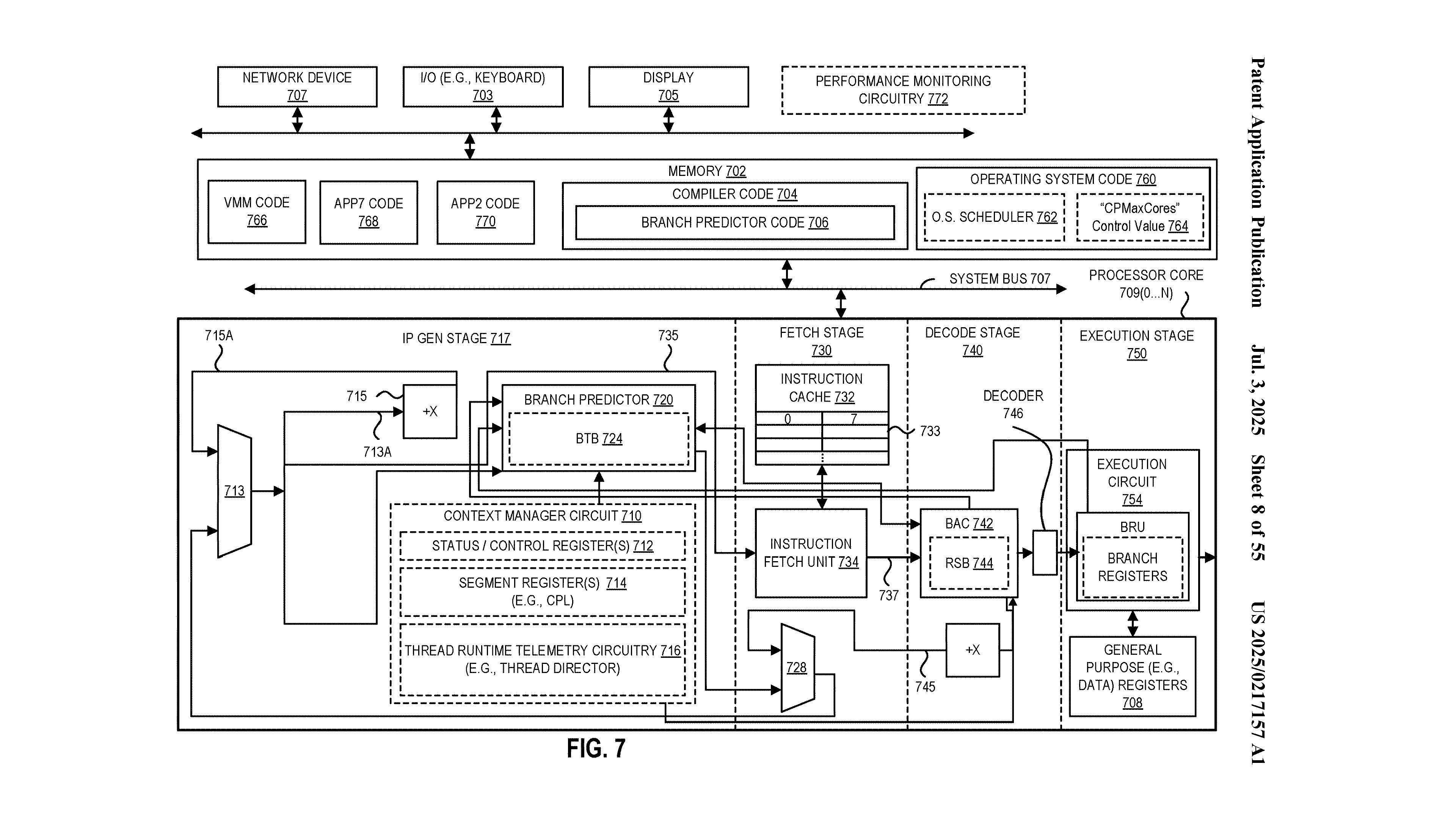

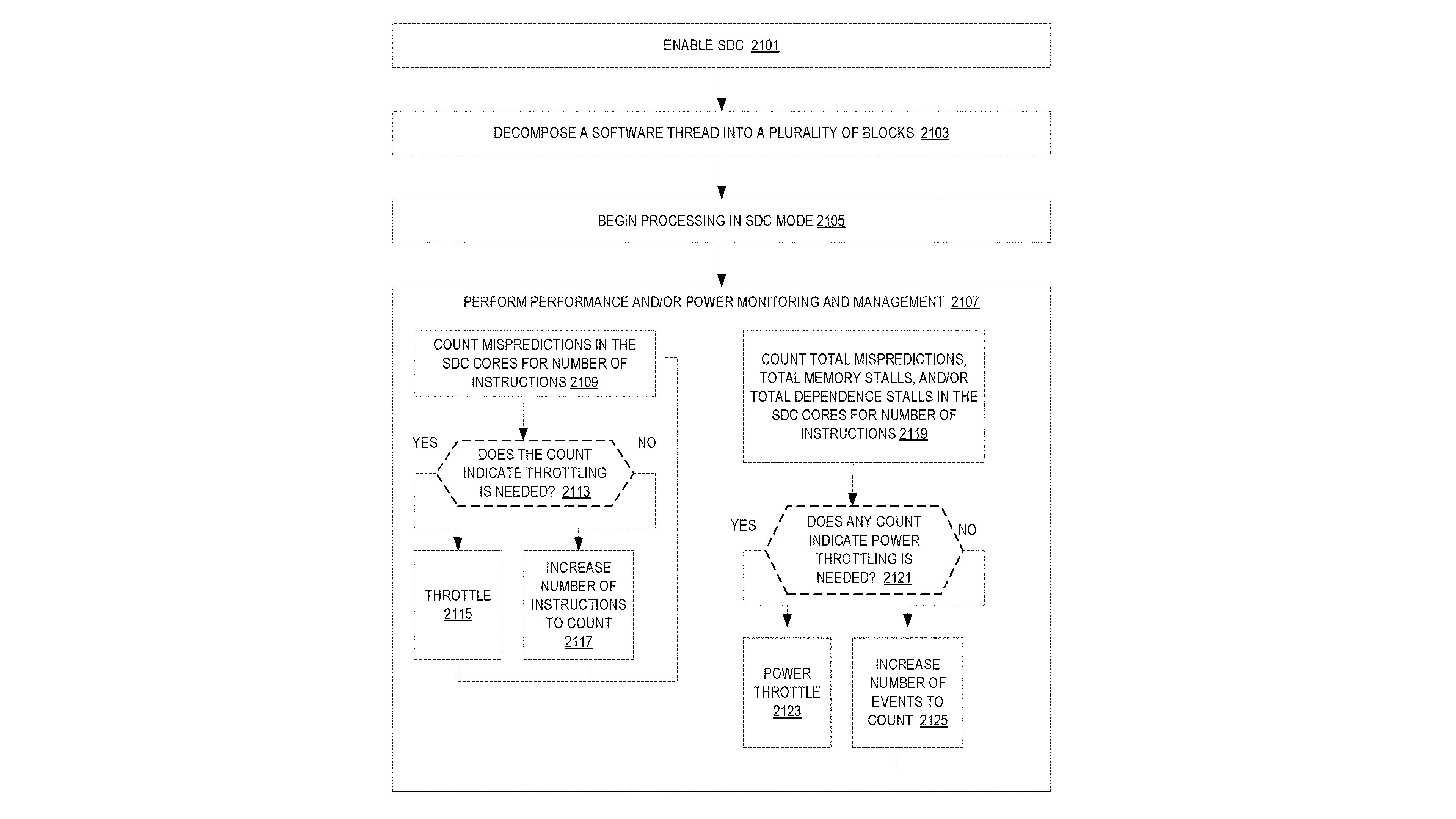

Anyway, the idea behind SDC is relatively simple. Take any CPU in a gaming PC, and you'll find that each physical core is handling instructions from one or two threads. The problem that SDC tries to address is that each core is a bit like a long factory line of machines, and ideally, you want every machine to be busy so that the factory is always churning out goods.

Unfortunately, the various instructions that CPUs have to crunch through take different amounts of time to process. That time can be really stretched if a unit is having to wait for data, too. SDC works by analysing incoming threads and then separating instructions so that they can be distributed across processor cores that are physically next to each other.

In more technical terms, rather than having two individual long pipelines, you get a single virtual pipeline that's twice as wide. This gives you more instruction-level parallelism, and in many ways, it's emulating what GPUs already do. A single Compute Unit in an AMD RDNA 4 GPU is a bit like a massively wide SDC: it can carry out one instruction from up to 64 threads simultaneously.
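To put some rough numbers on the 'wider virtual pipeline' idea, here's a minimal Python sketch. It is a toy model and not the mechanism in Intel's patent: the `Instr` type, the `cycles_to_finish` function, the instruction names, and the latencies are all made up for illustration. It simply issues up to a fixed number of ready instructions per cycle and compares a width of two (standing in for one core) against a width of four (standing in for two fused cores).

```python
from dataclasses import dataclass


@dataclass
class Instr:
    name: str
    latency: int       # cycles until the result is available (hypothetical values)
    deps: tuple = ()   # names of earlier instructions this one must wait for


def cycles_to_finish(program, issue_width):
    """Toy model: each cycle, issue up to `issue_width` instructions whose
    inputs are ready. Every dep must name an instruction in `program`."""
    done_at = {}                 # name -> cycle at which its result is ready
    pending = list(program)
    cycle = 0
    while pending:
        issued = 0
        still_waiting = []
        for ins in pending:
            ready = all(d in done_at and done_at[d] <= cycle for d in ins.deps)
            if ready and issued < issue_width:
                done_at[ins.name] = cycle + ins.latency
                issued += 1
            else:
                still_waiting.append(ins)
        pending = still_waiting
        cycle += 1
    return max(done_at.values(), default=0)


# One thread with plenty of independent work at the start.
program = [
    Instr("load_a", 3), Instr("load_b", 3),
    Instr("load_c", 3), Instr("load_d", 3),
    Instr("add_ab", 1, ("load_a", "load_b")),
    Instr("add_cd", 1, ("load_c", "load_d")),
    Instr("mul", 2, ("add_ab", "add_cd")),
]

# One thread where every instruction depends on the previous one.
chain = [
    Instr("a", 1),
    Instr("b", 1, ("a",)),
    Instr("c", 1, ("b",)),
]

print(cycles_to_finish(program, 2), cycles_to_finish(program, 4))  # 7 6
print(cycles_to_finish(chain, 2), cycles_to_finish(chain, 4))      # 3 3
```

In this model, the wider machine finishes the first thread a cycle sooner, while the fully dependent chain gains nothing. That's the general catch with widening a pipeline: it only helps when a thread has independent instructions to spread around.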

The difference here is that the two CPU cores can be working on different parts of one thread, rather than doing the same thing across multiple threads. It's essentially the inverse of Hyper-Threading, Intel's name for simultaneous multithreading (aka SMT), where one CPU core can be issued instructions from two different threads.

SMT is also something that Intel explicitly dropped for its Arrow Lake processor design, where each P- and E-core only ever processes one thread at a time. E-cores have always been like this, but Hyper-Threading debuted in 2002 and, the Core 2 generation aside, Intel's primary CPU core designs have supported SMT ever since.

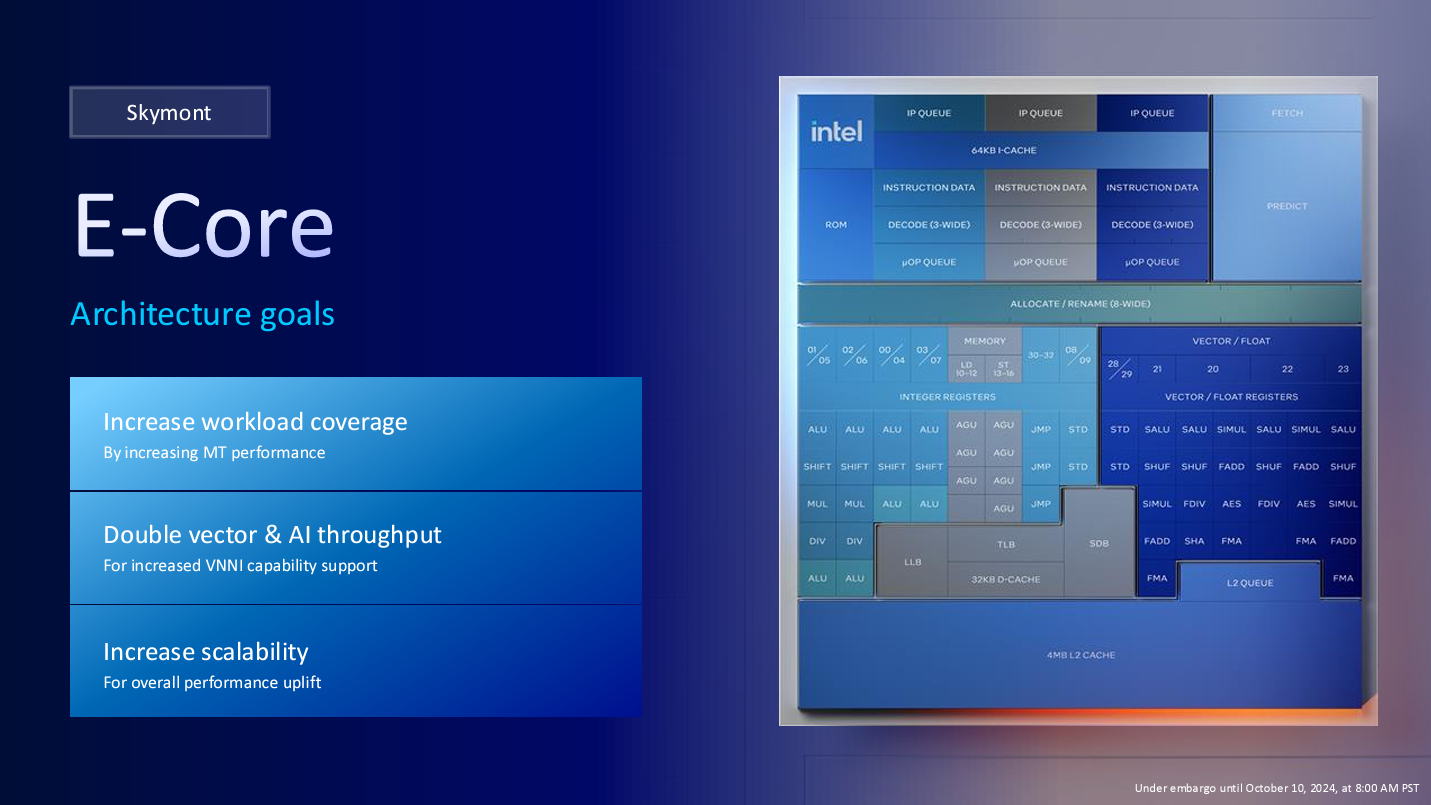

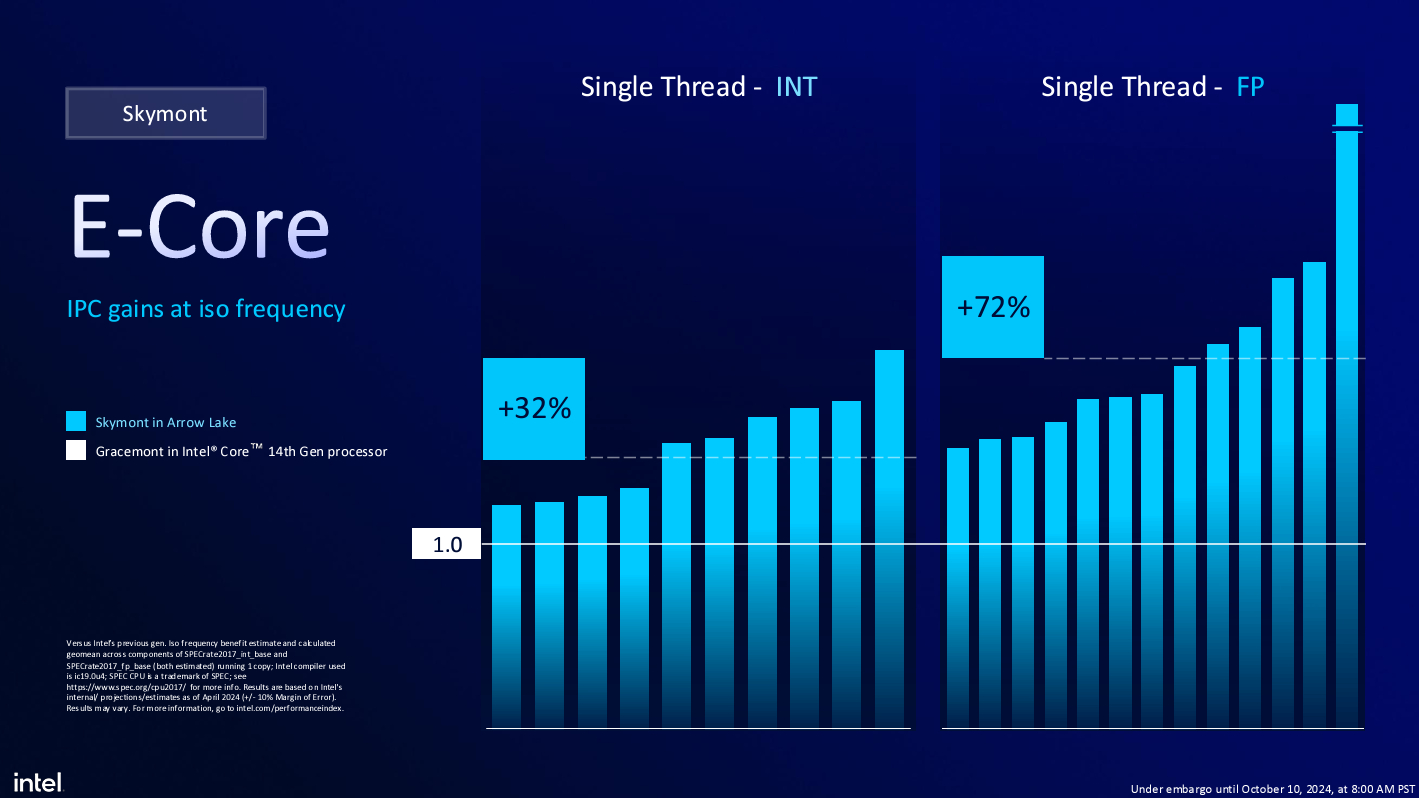

So why did Intel drop it for Lunar Lake and then Arrow Lake? The answer was to reduce overall power consumption, but it was also in response to the fact that its latest E-cores are far superior to previous designs. No need to have the P-cores juggling two threads when the E-cores are fine for a lot of cases, and since you can have more of these in the same die space (and use less power), it's one way of improving the overall performance-per-watt of the processor.

Better performance-per-watt is also the ultimate goal of SDC, as the patent explains: "High performance cores through frequency turbo is [sic] inefficient in performance/watt. To counter this, we must build larger (deeper/wider) high IPC cores. This has a high degree of dependence on process technology node scaling and availability at scale. Additionally, larger cores then come at the cost of core count."
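The patent's point about turbo clocks can be illustrated with the standard first-order model of switching power. This is a rough sketch rather than anything taken from the filing, and it assumes that voltage has to rise roughly in step with frequency near the top of the turbo range. Here, \alpha is the activity factor, C the switched capacitance, V the supply voltage, and f the clock frequency.

```latex
\begin{align*}
P_{\text{dyn}} &\approx \alpha\, C\, V^{2} f
  && \text{(switching power)} \\
V &\propto f
  && \text{(assumed, near the top of the turbo range)} \\
\Rightarrow\quad P_{\text{dyn}} &\propto f^{3} \\
\text{performance} &\propto \text{IPC} \cdot f \\
\Rightarrow\quad \frac{\text{performance}}{P_{\text{dyn}}} &\propto \frac{\text{IPC}}{f^{2}}
\end{align*}
```

Under those assumptions, a 10% higher clock costs roughly 17% in performance-per-watt (1/1.1² ≈ 0.83), whereas raising IPC at the same clock improves it, at least as far as this simple model goes. It ignores leakage power and the extra transistors a wider core needs.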

To me, the changes introduced in Arrow Lake and the level of detail in the SDC patent suggest that Intel is looking to move the majority of its processors to a design that's entirely E-cores. They would get used on an individual basis for most tasks, but when more IPC (instructions per clock) is required, SDC can kick in and bundle them together.

However, I don't see any of that happening in the consumer/desktop space any time soon, and it's far more likely to first appear in the world of server processors. Intel already has all-E-core CPUs for that market (Xeon 6900-series with E-cores), so if SDC does become flesh incarnate—sorry, silicon incarnate—then it's obvious which processors would be first in line to sport the technology.

Today's big 3D games are multithreaded, though not massively so at the moment; they don't heavily rely on vast amounts of IPC, either. This will change in time, as games grow in scale and complexity and need more threads to handle parallel tasks, such as background shader compilation, data streaming, and AI. And it's easier to manage and schedule all those threads if the processor's architecture is uniform rather than hybrid.

We probably won't see the end of Intel's P-cores in gaming CPUs just yet, but the concept of 'super cores', the continued improvement in E-cores, and the desire to move away from high clocks/power consumption, all point to a future where there will be no P- or E-cores. Just lots of identical cores. Super identical cores.

To keep moving forward, it's almost like Intel's going back to its past.

Nick, gaming, and computers all first met in the early 1980s. After leaving university, he became a physics and IT teacher and started writing about tech in the late 1990s. That resulted in him working with MadOnion to write the help files for 3DMark and PCMark. After a short stint working at Beyond3D.com, Nick joined Futuremark (MadOnion rebranded) full-time, as editor-in-chief for its PC gaming section, YouGamers. After the site shutdown, he became an engineering and computing lecturer for many years, but missed the writing bug. Cue four years at TechSpot.com covering everything and anything to do with tech and PCs. He freely admits to being far too obsessed with GPUs and open-world grindy RPGs, but who isn't these days?
