Nvidia is the world's first $5 trillion company, but CEO Jensen Huang's nifty narrative about accelerated computing and the remarkable foresight that led to today's AI revolution doesn't quite add up

Nvidia has done incredible things, but a lot of luck was involved, too.

As I type these very words, Nvidia's market capitalisation is ticking along at $5.12 trillion. Yup, Nvidia has become the world's first $5 trillion company. Truly, these numbers are getting silly.

Of course, it really, really (really!) wasn't all that long ago we reported on Nvidia becoming the world's most valuable company at $3.3 trillion. It was only a few months ago that Nvidia breached $4 trillion.

But now here we are at $5 trillion. Where does it all end? Increasingly, market observers think the whole AI thing is a bubble set to catastrophically burst. Or maybe AI is the real deal, and an apocalypse of a very different kind will be upon us soon enough.

But one thing is for sure. Whatever the outcome, there's no doubting Nvidia will have been absolutely pivotal. It dominates the AI industry when it comes to both hardware and the software development platforms on which it is all built.

But as I watched CEO Jensen Huang's keynote address at the GTC event yesterday, his narrative around Nvidia was just a little too neat, a bit too cute. If you take Huang at his implied word, Nvidia saw it all coming. Personally, I think a fair bit of luck was involved.

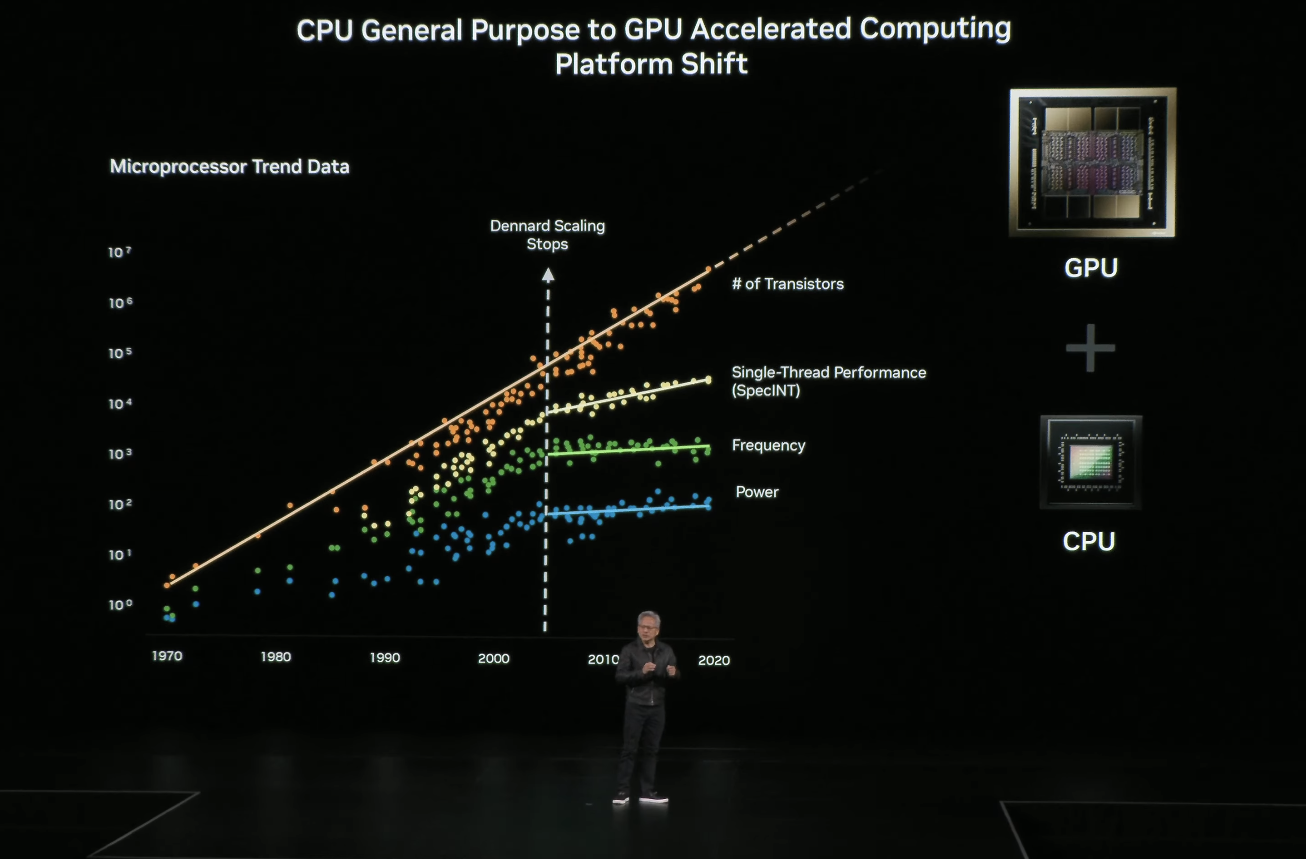

At GTC, Huang pointed out that Dennard scaling broke down about 10 years ago. Dennard scaling is something of a sibling observation to Moore's Law. While the latter makes predictions, or at least observations, about transistor densities and costs in computer chips, Dennard scaling is concerned with power consumption and operating frequency: as transistors shrank, their power density stayed roughly constant, so chips could run at higher clock speeds without running hotter.
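For readers who want the arithmetic, the classic Dennard argument can be sketched with the textbook approximation for dynamic switching power. This is a simplified sketch, not anything from Nvidia's presentation: the symbols are assumptions introduced here (α is the fraction of the circuit switching each cycle, C is capacitance, V is supply voltage, f is clock frequency, A is chip area, and κ > 1 is an idealised shrink factor), and leakage current is ignored.

$$
P \approx \alpha\, C\, V^{2} f
$$

$$
C \to \frac{C}{\kappa}, \qquad V \to \frac{V}{\kappa}, \qquad f \to \kappa f, \qquad A \to \frac{A}{\kappa^{2}}
$$

$$
P' \approx \alpha\, \frac{C}{\kappa} \left(\frac{V}{\kappa}\right)^{2} (\kappa f) = \frac{P}{\kappa^{2}},
\qquad
\frac{P'}{A'} = \frac{P/\kappa^{2}}{A/\kappa^{2}} = \frac{P}{A}
$$

In other words, each shrink delivered faster transistors at the same power per unit area. Once supply voltage could no longer fall in step with feature size, largely because of leakage, the V² term stopped shrinking, and pushing f higher meant pushing power density up with it. That is why clock speeds stalled even as transistor counts kept climbing.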

Nvidia's trend data rightly shows chip frequencies have largely stalled, while power efficiency is only improving gradually, too. At the same time, transistor densities have mostly continued to scale. So, a computing paradigm that takes advantage of Moore's Law but can mitigate the end of Dennard scaling is required. Huang says Nvidia saw all this coming and worked out that parallelised or "accelerated" computing was the solution.


"We made this observation a long time ago, and for 30 years we've been advancing this form of computing, we call it accelerated computing. We invented the GPU, we invented a programming model called CUDA, and we observed that if we could add a processor that takes advantage of more and more and more transistors, applied parallel computing, add that to a sequential processing CPU that we could extend the capabilities of computing well beyond, and that moment has really come," he says.

He's clearly right that the moment for parallelised or "accelerated" computing has come. I'm just not so sure Nvidia and Huang were quite so prescient as he makes out.

Who am I to question the technical and financial acumen of the CEO of the world's most valuable company? Nobody, obviously, but I have been following Nvidia in a professional capacity for over 20 years, and I can certainly take a clear view on the messages Nvidia has been putting out over that period.

My impression, for what it is worth, is that Nvidia was all about graphics in the early days. It wasn't building graphics chips with a view to parallelising everything. Instead, having built graphics chips, the company went looking for ways to make more money from them, hunting for alternative applications that might be persuaded to run on what it cleverly marketed as "GPUs", a term that played on the familiar "CPU".

I can remember years of Nvidia throwing just about anything against the wall, hoping it would stick. 2D video processing, physics simulations, protein folding, mineral prospecting—they tried the lot in those early years.

Notably, it wasn't until around 2012 that Nvidia even began to namecheck "AI" as an application for its GPUs, and even then it was merely one of a long list of candidate applications that fell into the broader category of "GPGPU", or general-purpose GPU computing.

I'd argue any claim, implied or otherwise, that Nvidia and Huang saw the AI revolution as it is today coming—transformer models running on GPUs—doesn't hold up to scrutiny. Nor does it bear cross-referencing with what Nvidia was saying or doing until much more recently.

That isn't to say that Nvidia doesn't deserve its success. No other company bet as big on GPUs as Nvidia did. No other company put nearly as much effort into creating the supporting software framework, in the form of CUDA, as Nvidia did.

But neither the way AI in general has blown up over the last few years, nor the fact that transformer models in particular turned out to be both so effective for building AI models and so well suited to running on GPUs, is something Nvidia predicted. At least, if it did, Nvidia kept that entirely secret while spending large amounts of money trying to get companies to use its GPUs for all sorts of tasks and workloads other than AI.

So, props certainly go to Nvidia for its incredible success. But I don't think it follows that the company necessarily has any great insight into the future. And if that is true, it surely has implications for everything from the stock market to the future of AI.


Jeremy has been writing about technology and PCs since the 90nm Netburst era (Google it!) and enjoys nothing more than a serious dissertation on the finer points of monitor input lag and overshoot followed by a forensic examination of advanced lithography. Or maybe he just likes machines that go “ping!” He also has a thing for tennis and cars.
