PlayerUnknown's Battlegrounds settings and performance guide

We've updated all of our testing, using the new Vikendi map with the latest GPUs and CPUs.

PlayerUnknown's Battlegrounds may not be the most popular game in town these days, but PUBG remains a hot commodity, with more than a million people playing it daily according to Steam. Over the past year, Bluehole has added three new maps, the latest being the snow-covered landscape of Vikendi.

Performance has changed for the better since Early Access, and the 144fps framerate cap is now a thing of the past. With new maps and engine updates, we felt it was a good time to update all of our benchmarks.

Provided you're not trying to run at ultra-high resolutions and maximum quality, PUBG's system requirements aren't too bad. The minimum GPU recommendation is a GeForce GTX 660 2GB or Radeon HD 7850 2GB, but you can get by with less—just not at a smooth 60fps. CPU requirements are even more modest, with Core i3-4340 / AMD FX-6300 listed as the minimum. In testing, all of the current CPUs and GPUs can deliver playable performance, though you'll want to tune things for your hardware. If you're in any doubt, check out our best graphics card guide for more.

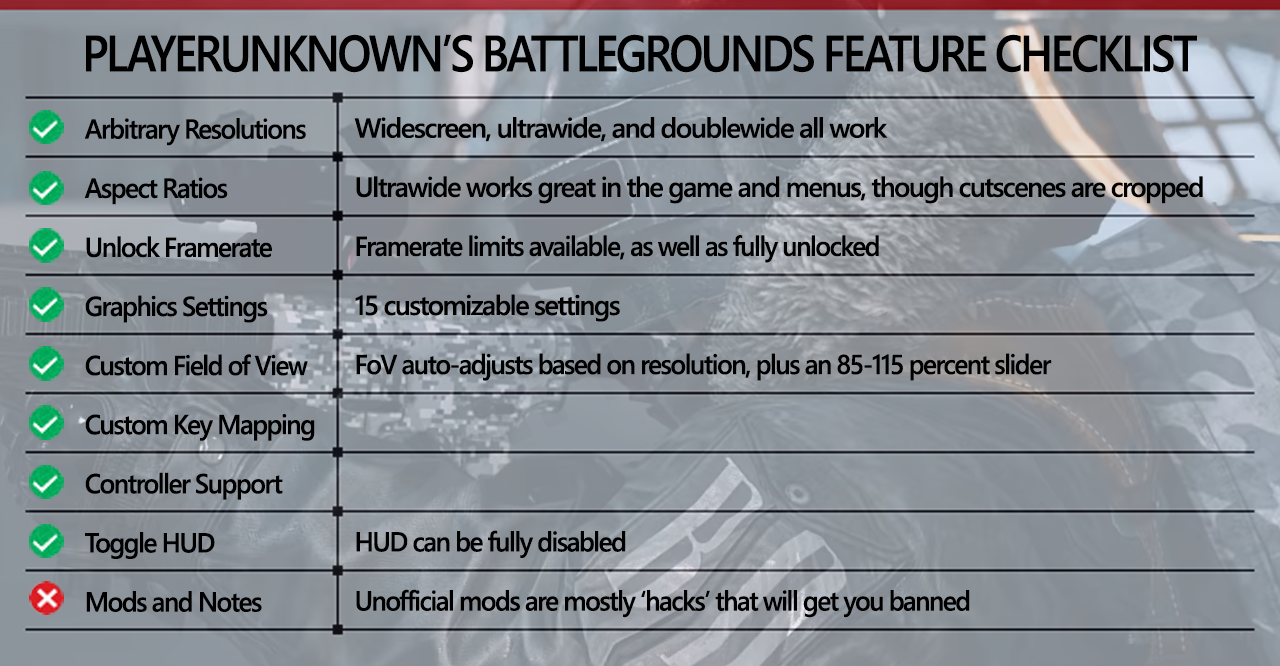

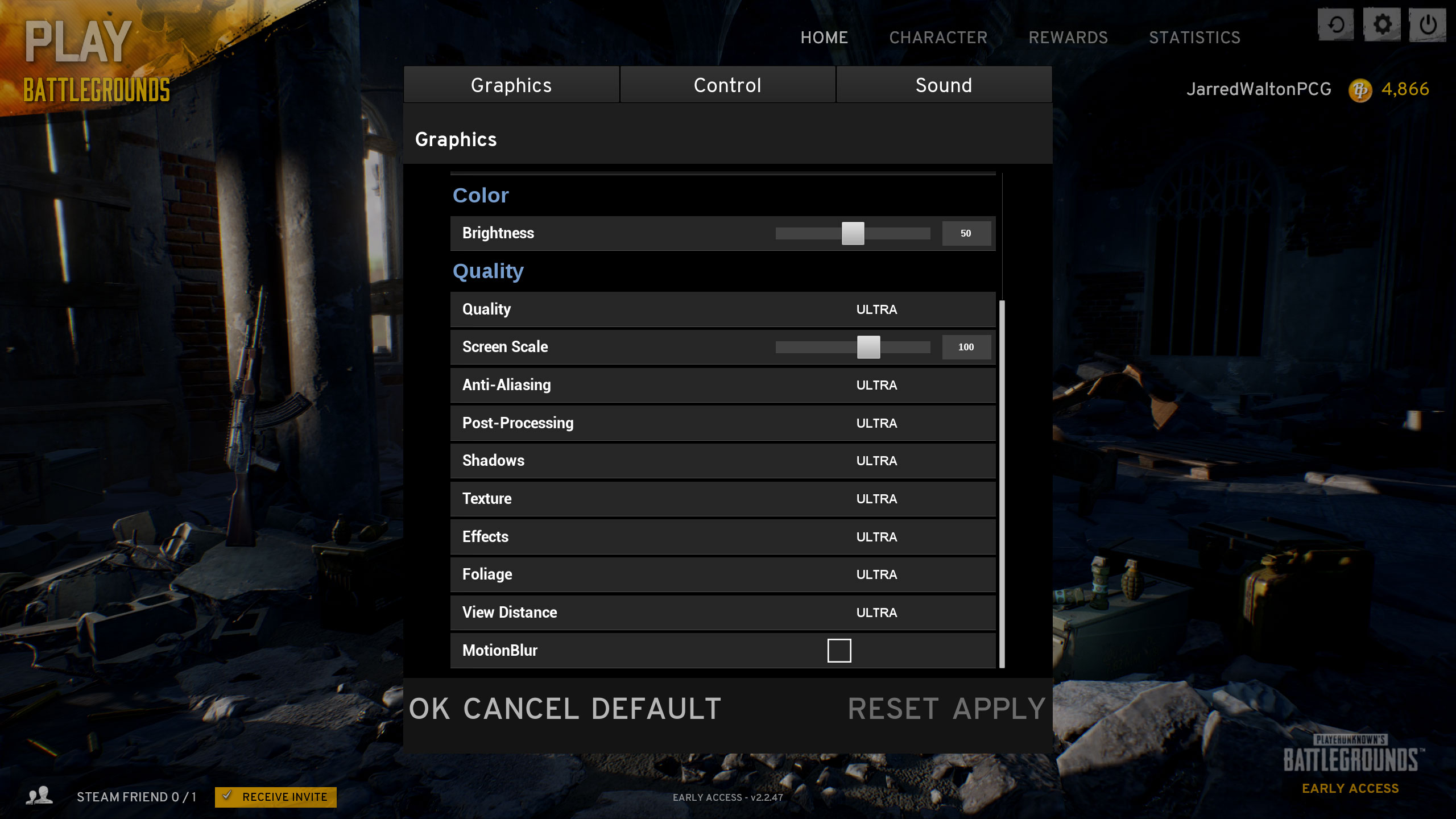

Quickly running through the features checklist, Battlegrounds has plenty of graphics options, and it checks most of the right boxes. Resolution support is good, and aspect ratio support works properly in my testing, with a change in the FOV on ultrawide displays. There's also an FOV slider, but that only affects the FOV if you're playing in first-person perspective.

Some items have been fixed over the past year, like the removal of the framerate cap. Modding support remains the only missing item, and that's officially out—not that there aren't plenty of unauthorized hack mods floating around, though PUBG has been quite active about banning cheater accounts.

PUBG settings

Fine tuning PUBG settings

As our partner for these detailed performance analyses, MSI provided the hardware we needed to test PlayerUnknown's Battlegrounds on a bunch of different AMD and Nvidia GPUs, multiple CPUs, and several laptops—see below for the full details, along with our Performance Analysis 101 article. Thanks, MSI!

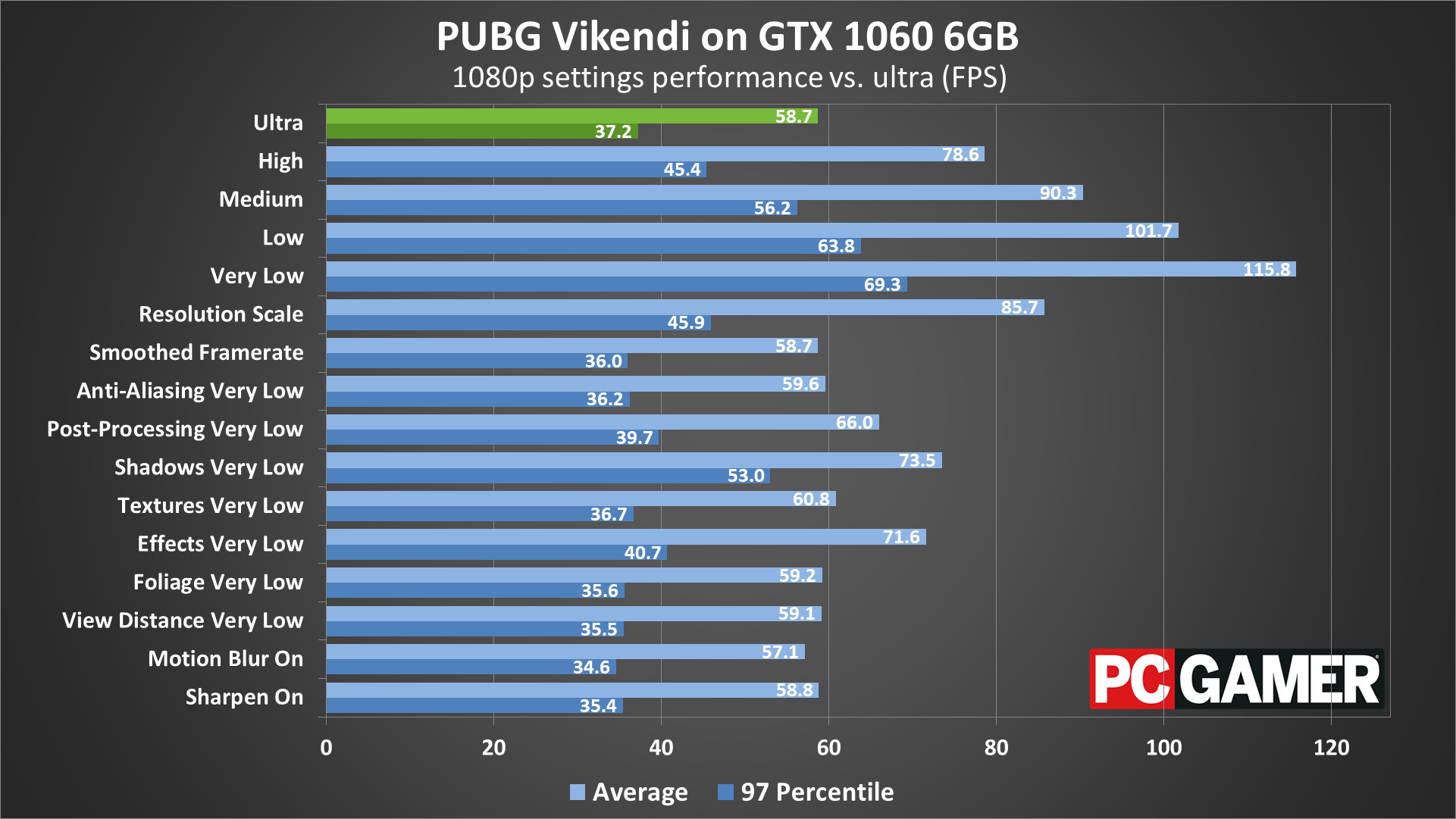

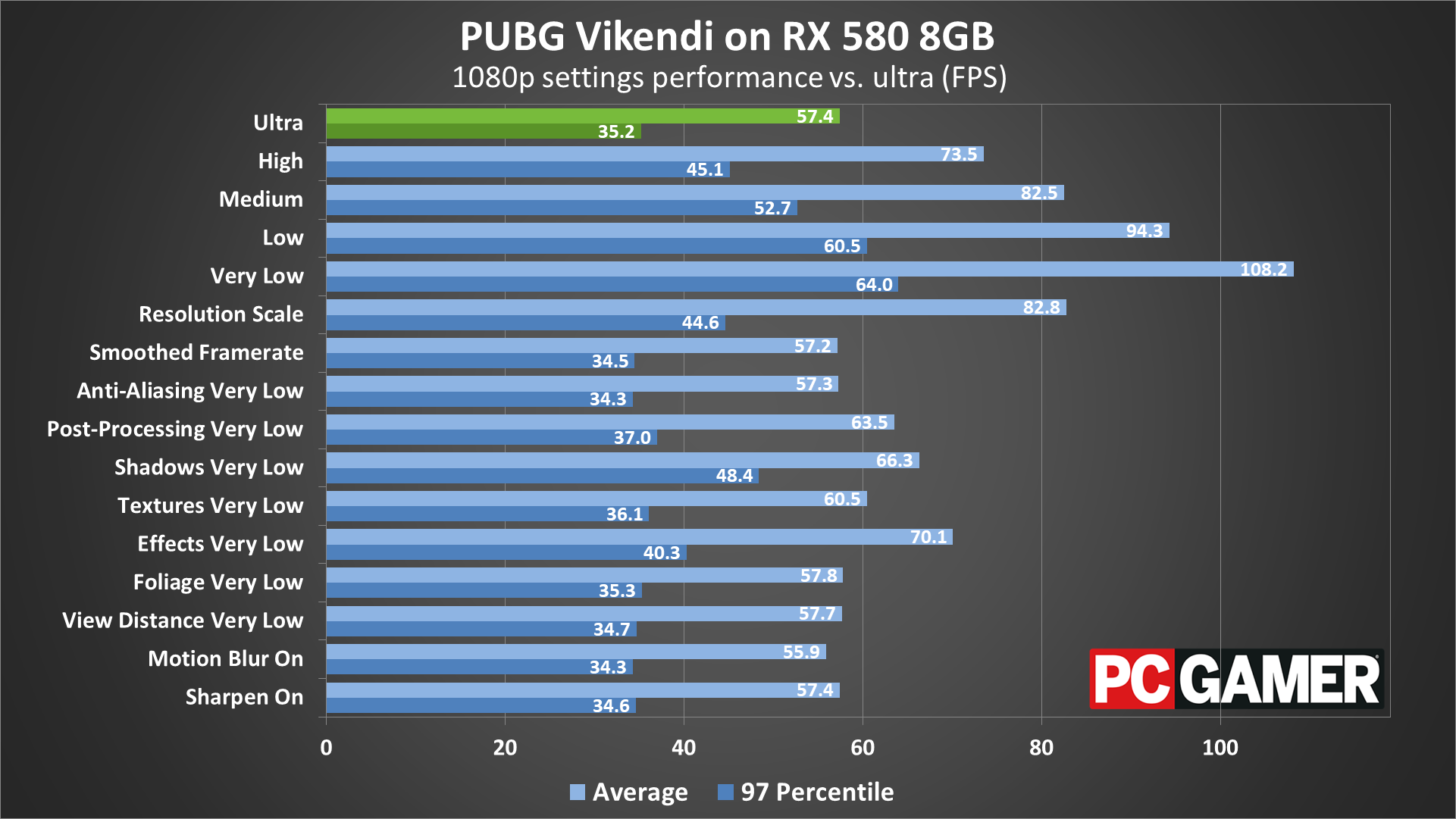

I tested performance with the various presets, along with each setting set to minimum, and compared that with the ultra preset. The RX 580 8GB and GTX 1060 6GB end up performing quite similarly in most cases.

The global preset is the easiest place to start tuning performance, but you'll probably want to tweak things to find a better balance. Going from the ultra preset to high boosts performance by about 30 percent, the medium preset runs 45-55 percent faster than ultra, the low preset runs 65-75 percent faster, and the very low preset (the minimum) runs nearly twice as fast.
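To turn those percentages into a rough framerate estimate for your own card, a minimal sketch follows. It assumes the preset gains scale linearly from whatever you measure at ultra, which will not hold once the CPU becomes the limit. The function names are hypothetical, and "nearly twice as fast" is approximated here as 1.9-2.0x.

```python
# Speedup ranges relative to the ultra preset, taken from the figures quoted above.
# The very low range (1.9-2.0x) is an assumption standing in for "nearly twice as fast".
PRESET_SPEEDUP = {
    "ultra": (1.00, 1.00),
    "high": (1.30, 1.30),
    "medium": (1.45, 1.55),
    "low": (1.65, 1.75),
    "very low": (1.90, 2.00),
}


def estimate_fps(ultra_fps, preset):
    """Return a (pessimistic, optimistic) fps estimate for a preset."""
    low, high = PRESET_SPEEDUP[preset]
    return ultra_fps * low, ultra_fps * high


def best_preset_for(ultra_fps, target_fps):
    """Return the highest-quality preset whose pessimistic estimate meets the target."""
    for preset in PRESET_SPEEDUP:  # dict order runs from best to worst quality
        if estimate_fps(ultra_fps, preset)[0] >= target_fps:
            return preset
    return None  # no preset is estimated to reach the target


if __name__ == "__main__":
    # Hypothetical card that averages 40fps at ultra.
    for name in PRESET_SPEEDUP:
        lo, hi = estimate_fps(40, name)
        print(f"{name:>8}: {lo:.0f}-{hi:.0f} fps")
    print("Best preset for 60fps:", best_preset_for(40, 60))
```

Treat the output as a starting point for your own testing, not a prediction. The faster GPUs hit CPU limits well before these multipliers would suggest.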

Most competitive players will run with the very low preset, but with view distance at ultra. If you're hoping to tweak the individual settings to better tune performance, I did some testing to see how much each setting affects framerates using the ultra preset as the baseline. I then dropped each setting down to the minimum (very low) to measure the impact.

Screen Scale: The range is 70-120, and this represents undersampling/oversampling of the image. It's like tweaking your resolution by small amounts, but I recommend leaving this at the default 100 setting and changing your resolution instead. Using 70 can improve performance by around 45 percent.

Anti-Aliasing: Surprisingly not a major factor, but this is because Unreal Engine requires the use of post-processing techniques to do AA. If you want better AA, you could set screen scale to 120 to get a moderate form of super-sampling. Going from ultra quality AA to very low quality AA has a negligible impact on performance.

Post-Processing: A generic label for a whole bunch of stuff that can be done after rendering is complete. This has a relatively large impact on performance—going from ultra to very low improves framerates by 10-15 percent.

Shadows: This setting affects ambient occlusion and other forms of shadow rendering, and going from ultra to very low improves performance by 15-25 percent depending on your GPU.

Textures: Only a minor impact on performance, provided you have enough VRAM. Dropping from ultra to very low increases framerates by 3-5 percent.

Effects: This setting relates to things like explosions, among other elements. This is the single most demanding setting in the game. Dropping to very low improves performance by up to 25 percent.

Foliage: Given all the trees and grass, you might expect this to have a larger impact on performance, but I only saw a 1 percent difference after setting it to very low.

View Distance: This appears to have a greater impact on CPU performance than on graphics performance, so if your CPU is up to snuff you can safely set it to ultra. Even on a Core i3 system, dropping to very low only made a 3 percent difference in framerates.

Motion Blur: There's a reason this is off by default, right? Spotting enemies while moving around is more difficult with motion blur enabled. But if you like the effect, turning it on causes about a 3 percent drop in framerates.

Sharpen: Sort of the reverse of motion blur, this is also off by default. Enabling it had no measurable impact on performance.
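To make the Screen Scale setting above more concrete, here is a small sketch of what the 70-120 range means in rendered pixels. It assumes the percentage applies to each axis, the way Unreal Engine's screen percentage does. That is an assumption about PUBG's implementation, and the function names are hypothetical.

```python
def render_resolution(width, height, screen_scale):
    """Return the internal render resolution for a given Screen Scale value.

    Assumes the scale is applied per axis (not confirmed for PUBG).
    """
    if not 70 <= screen_scale <= 120:
        raise ValueError("Screen Scale ranges from 70 to 120")
    return round(width * screen_scale / 100), round(height * screen_scale / 100)


def pixel_ratio(screen_scale):
    """Fraction of pixels rendered relative to the default scale of 100."""
    return (screen_scale / 100) ** 2


if __name__ == "__main__":
    for scale in (70, 100, 120):
        w, h = render_resolution(1920, 1080, scale)
        print(f"scale {scale}: {w}x{h}, {pixel_ratio(scale):.2f}x the pixels")
```

Under that assumption, a scale of 70 at 1080p renders 1344x756, or roughly half the pixels. The measured gain of around 45 percent is smaller than halving the pixel count would suggest, which fits the idea that not all of the frame cost scales with resolution.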

If you're looking to be more competitive, turning post-processing, shadows, effects, and motion blur to minimum can improve framerates by around 75 percent. That can make it easier to spot enemies as well, though it won't necessarily help you aim any better.
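As a sanity check on that combined figure, the sketch below stacks the upper-end per-setting gains quoted earlier (post-processing 15 percent, shadows 25 percent, effects 25 percent) in two simple ways. Neither model is how the engine actually behaves, since settings interact. The names are hypothetical and the point is only to bracket the measured result.

```python
import math

# Upper-end gains from dropping each setting from ultra to very low, as quoted above.
UPPER_GAINS = {"post-processing": 0.15, "shadows": 0.25, "effects": 0.25}


def additive_gain_percent(gains):
    """Combined gain if the individual percentages simply add up."""
    return sum(gains.values()) * 100


def multiplicative_gain_percent(gains):
    """Combined gain if each setting's speedup compounds on the others."""
    return (math.prod(1 + g for g in gains.values()) - 1) * 100


if __name__ == "__main__":
    print(f"additive:       {additive_gain_percent(UPPER_GAINS):.0f} percent")
    print(f"multiplicative: {multiplicative_gain_percent(UPPER_GAINS):.0f} percent")
```

The two estimates come out at about 65 and about 80 percent, so the measured gain of around 75 percent sits between them.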

MSI provided all of the hardware for this testing, mostly consisting of its Gaming/Gaming X graphics cards. These cards are designed to be fast but quiet, though the RX Vega cards are reference models and the RX 560 is an Aero model.

My main test system uses MSI's Z370 Gaming Pro Carbon AC with a Core i7-8700K as the weapon of choice, with 16GB of DDR4-3200 CL14 memory from G.Skill. I also tested performance with Ryzen processors on MSI's X370 Gaming Pro Carbon. The game is run from a Samsung 850 Pro SSD for all desktop GPUs.

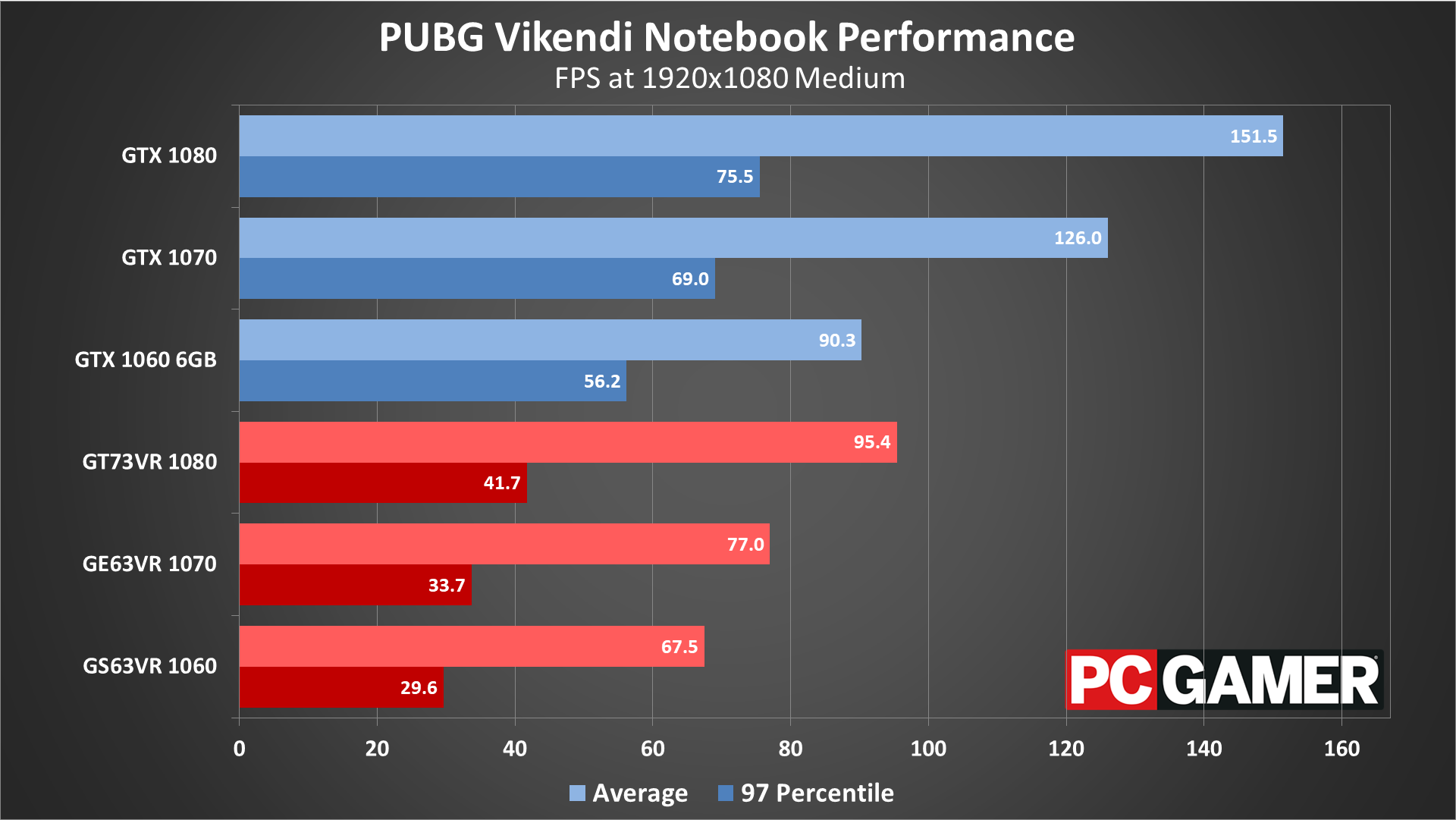

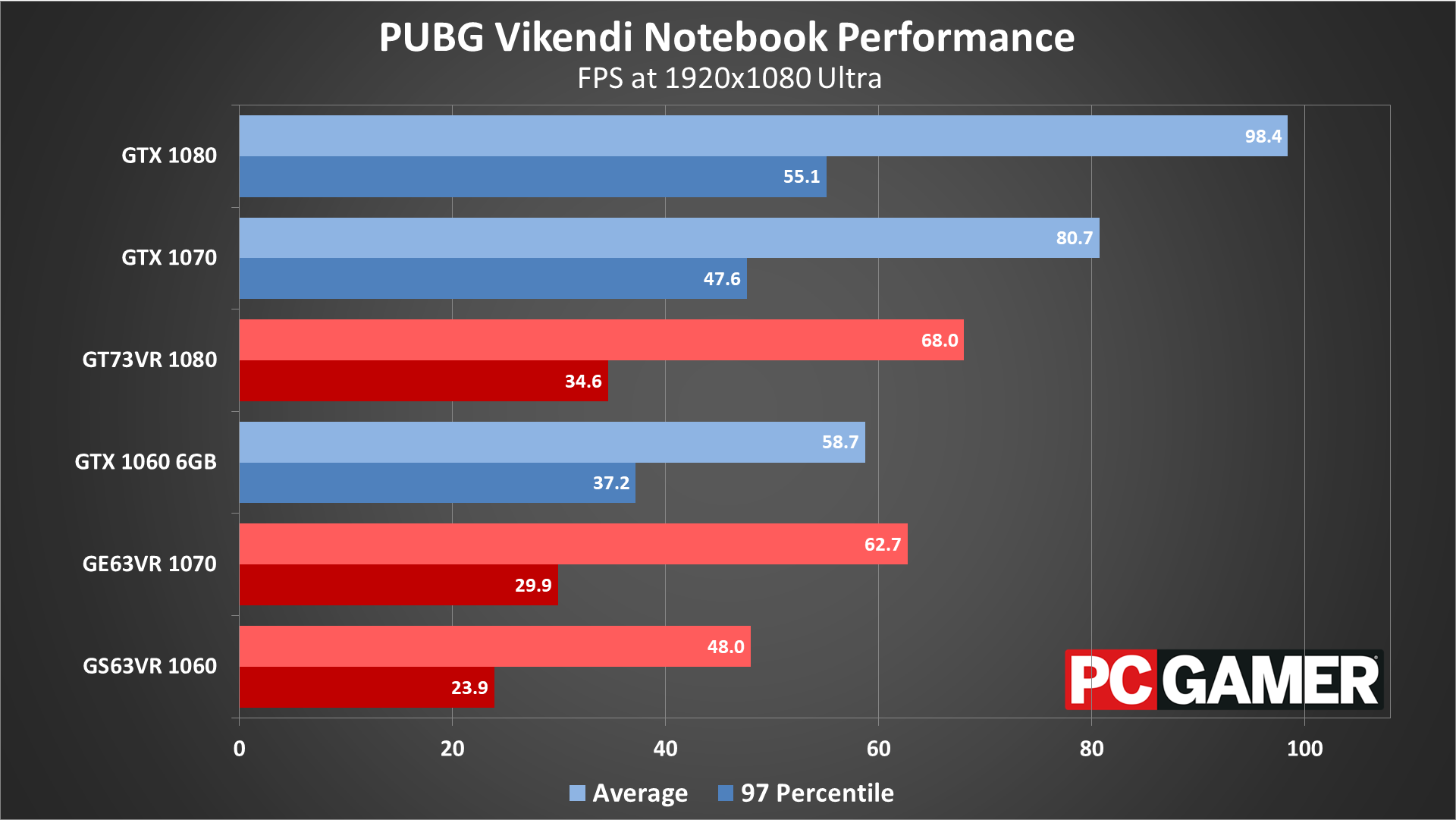

MSI also provided three of its gaming notebooks for testing: the GS63VR with GTX 1060 6GB, the GE63VR with GTX 1070, and the GT73VR with GTX 1080. The GS63VR has a 4Kp60 display, while the GE63VR and GT73VR both have 1080p120 G-Sync displays. For the laptops, I installed the game to the secondary HDD storage.

PUBG Vikendi GPU benchmarks

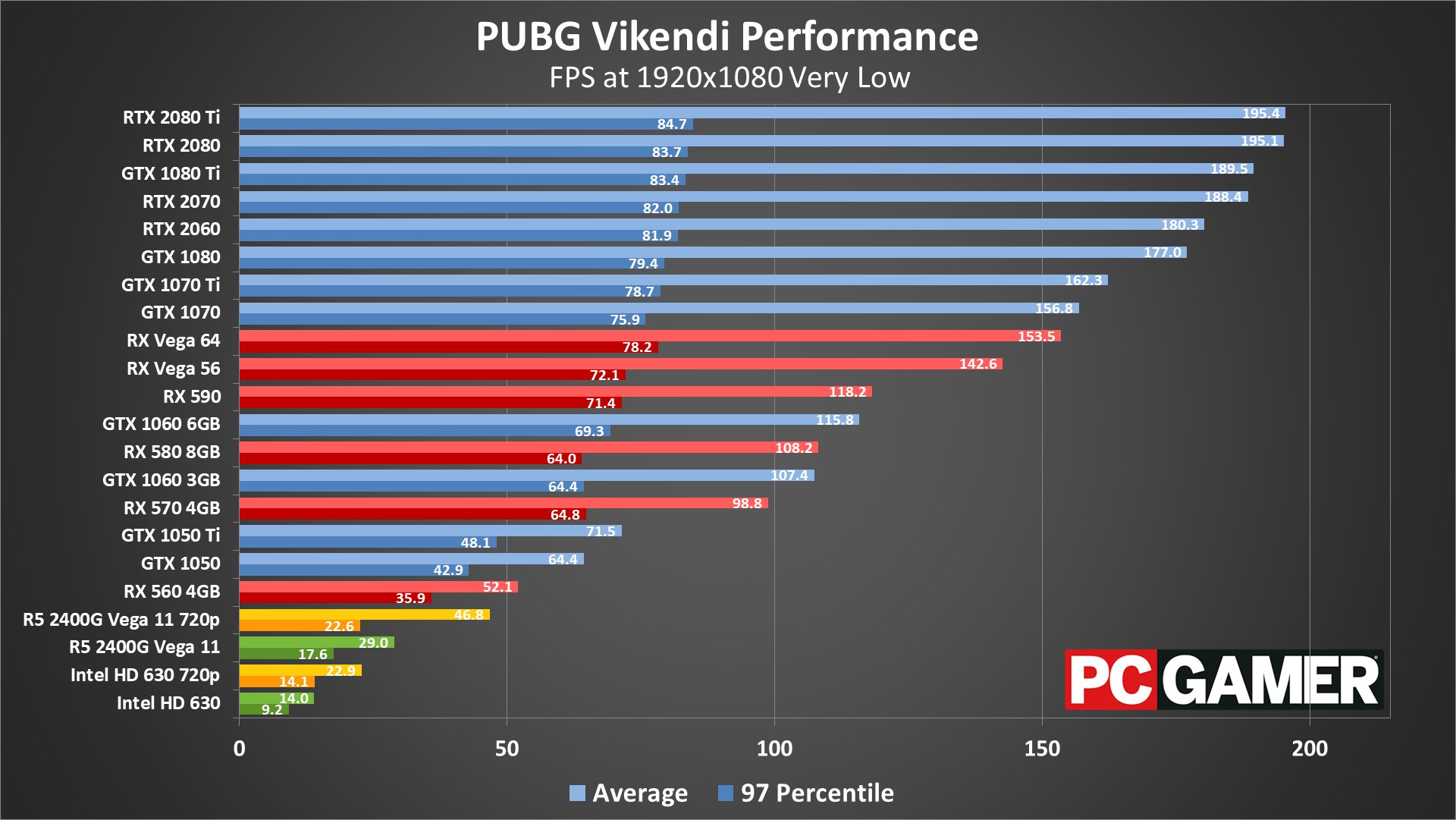

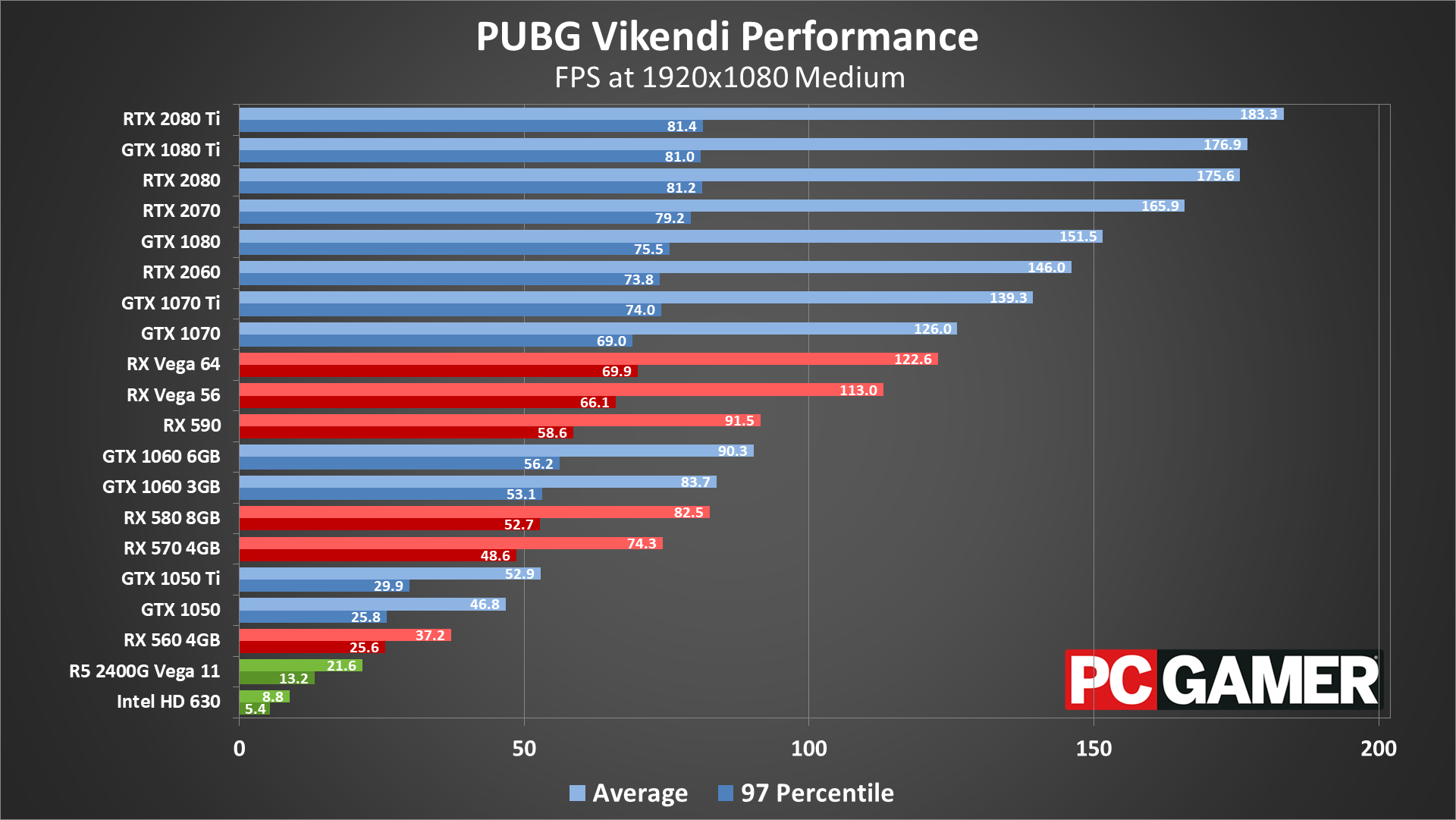

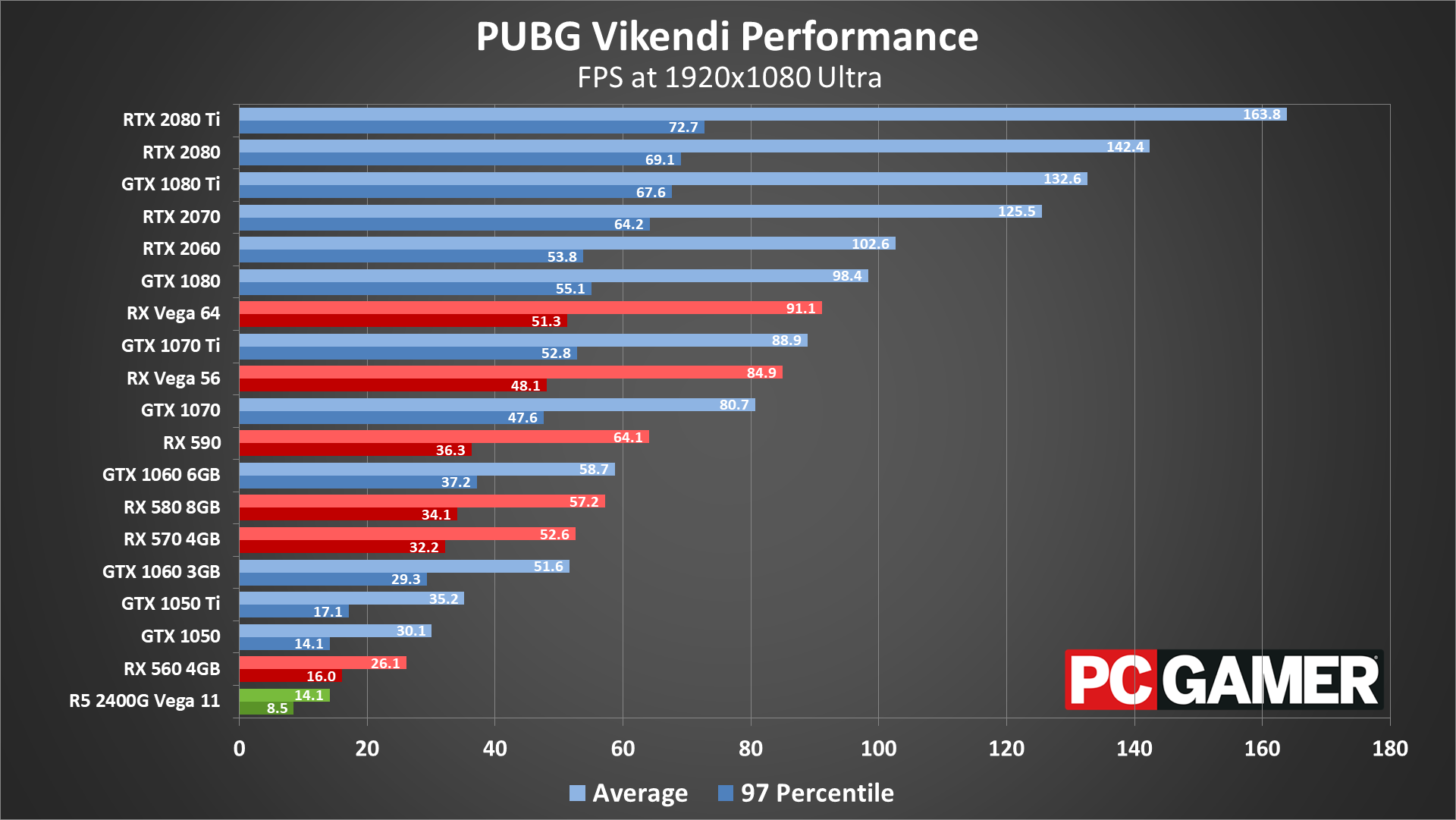

For the benchmarks, I've tested 1080p at the very low, medium, and ultra presets, along with 1440p and 4k at ultra. Some people will want to run at minimum graphics quality, except for the view distance, to try and gain a competitive advantage. It looks ugly, but it may be easier to spot people hiding in the grass or shadows. Performance should be very similar to the very low results. I've also tested 720p very low on integrated graphics solutions.

Starting at 1080p very low, which is close to what most competitive streamers use, the CPU limits performance to around 200fps, with minimums well below that mark. AMD GPUs appear to have an even lower maximum level of performance, as the Vega cards both fall behind the GTX 1070.

For budget GPUs, the GTX 1050 and above manage 60fps averages, but the RX 560 comes up short. The integrated graphics solutions also fail to break 30fps at 1080p, though the Ryzen 5 2400G and its Vega 11 GPU do manage reasonable performance at 720p. Intel's HD Graphics 630 meanwhile still only averages 23fps at minimum settings, though resolution scaling might help a bit.

1080p medium drops performance on the mainstream cards by about 20-25 percent, though the faster GPUs are still closer to CPU limits. AMD GPUs still underperform a bit, though the RX 590 does come out ahead of both 1060 cards. The RX 570 4GB and above all break 60fps, but with occasional dips below that mark.

Moving up to 1080p ultra drops performance by 35-40 percent relative to medium quality. Most mainstream and above cards are playable, though you'd have to adjust a few settings on the GTX 1060 and RX 570/580 to hit 60+ fps. The GTX 1070 and above average well above 60fps, but the RTX 2070 and above are needed to get minimums above 60.

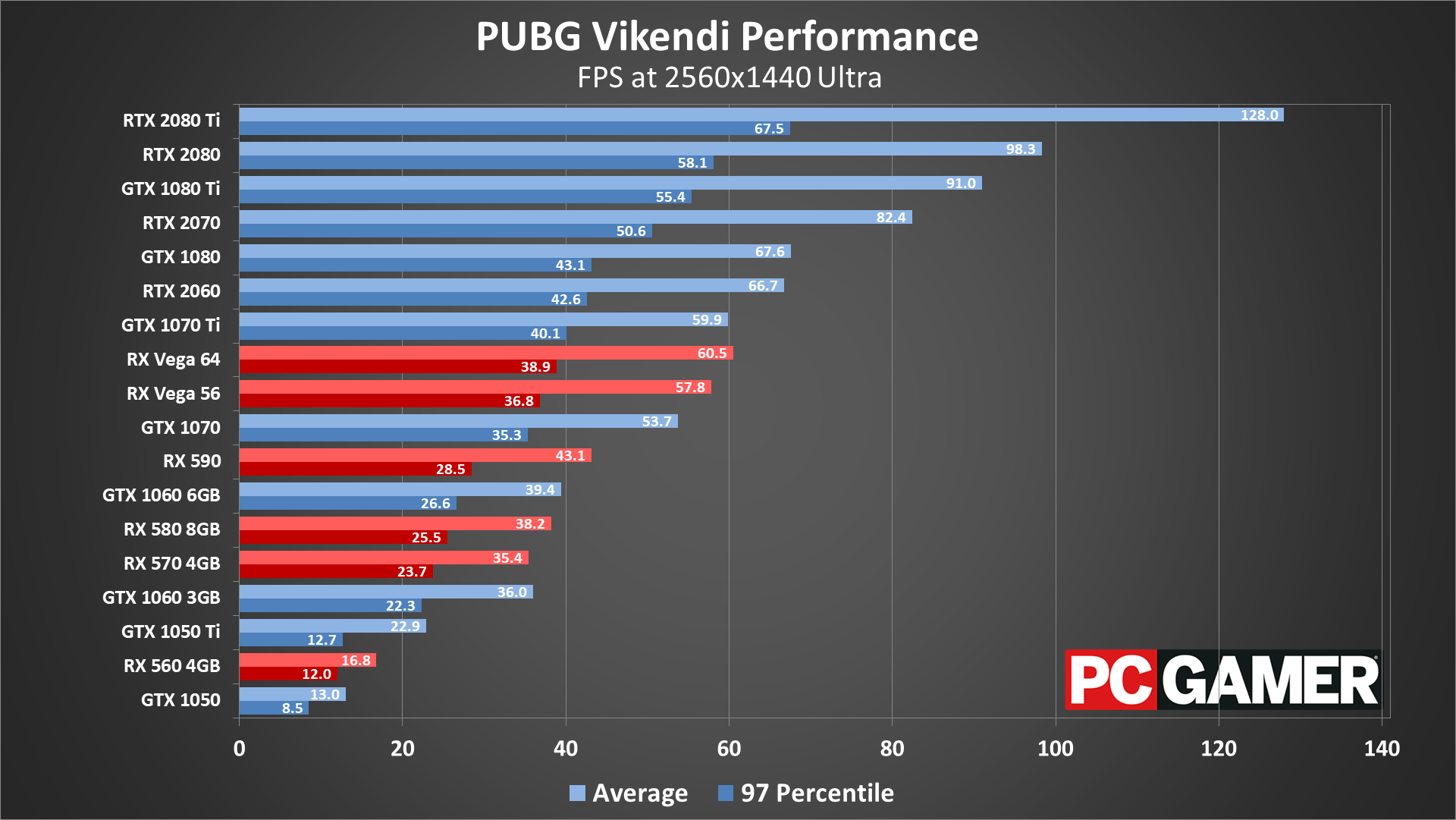

1440p ultra continues the downward trend in performance, and now only the GTX 1080 and above average 60fps, while the RTX 2080 Ti is the only GPU to keep minimums above 60. The Vega cards move up the charts a bit, likely thanks to their higher memory bandwidth. Alternatively, dropping the settings a few notches will get most of the midrange and above GPUs above 60fps.

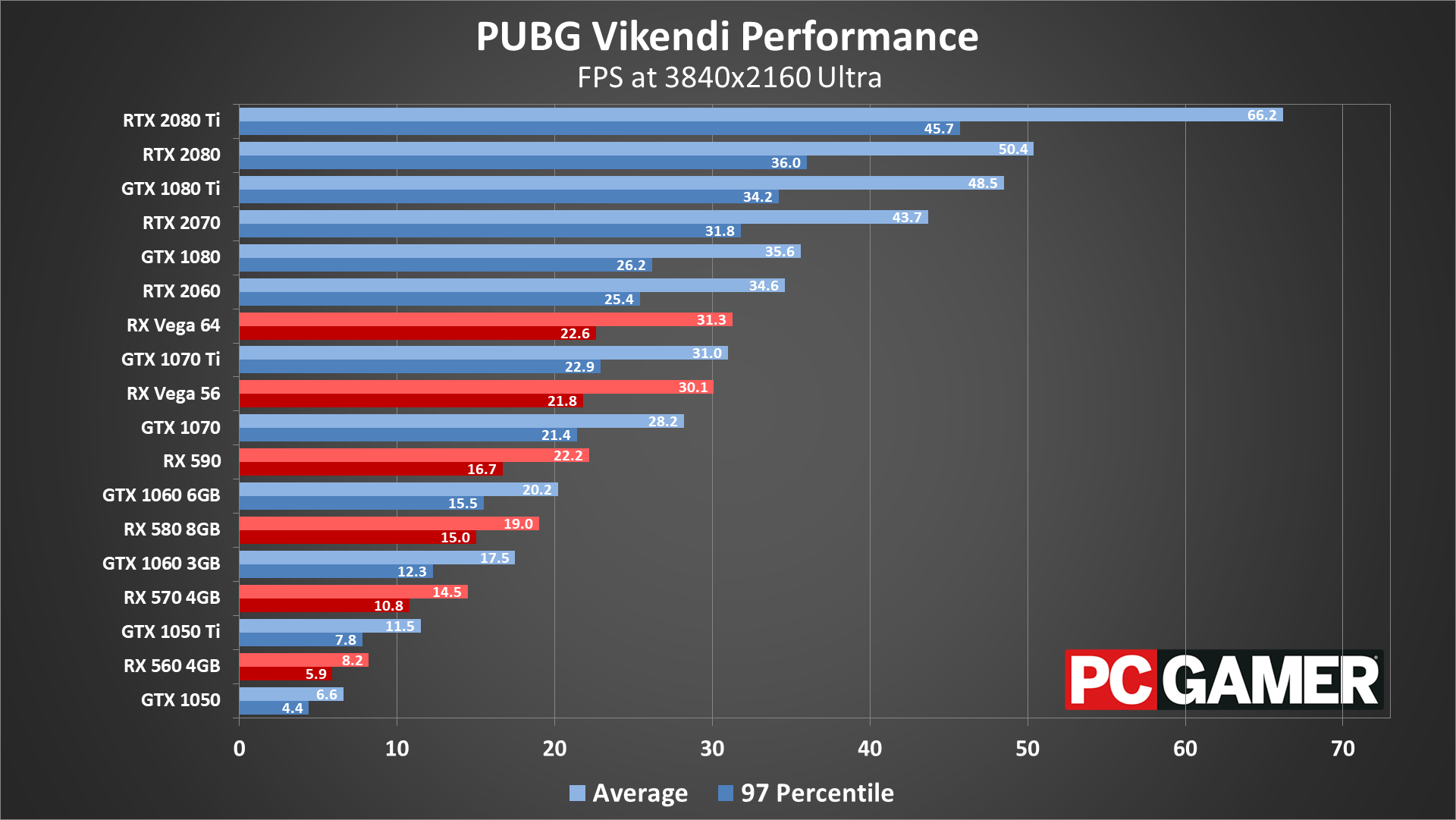

4k ultra is still brutal, with only the RTX 2080 Ti breaking 60fps, and even it dips below that mark. For competitive reasons, playing at 4k isn't generally recommended. Even with the fastest PC around, you'd be better off dropping to 1440p.

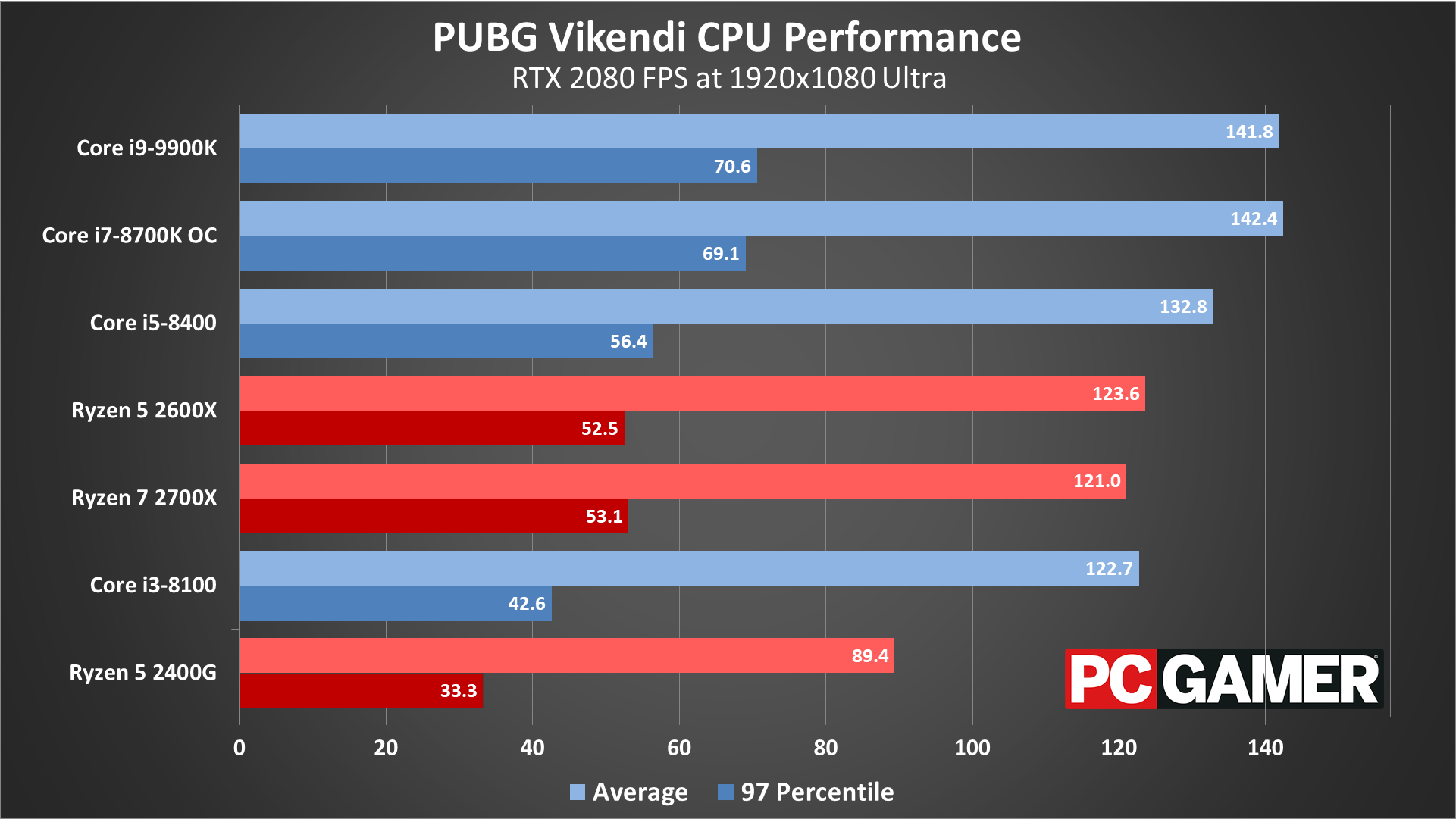

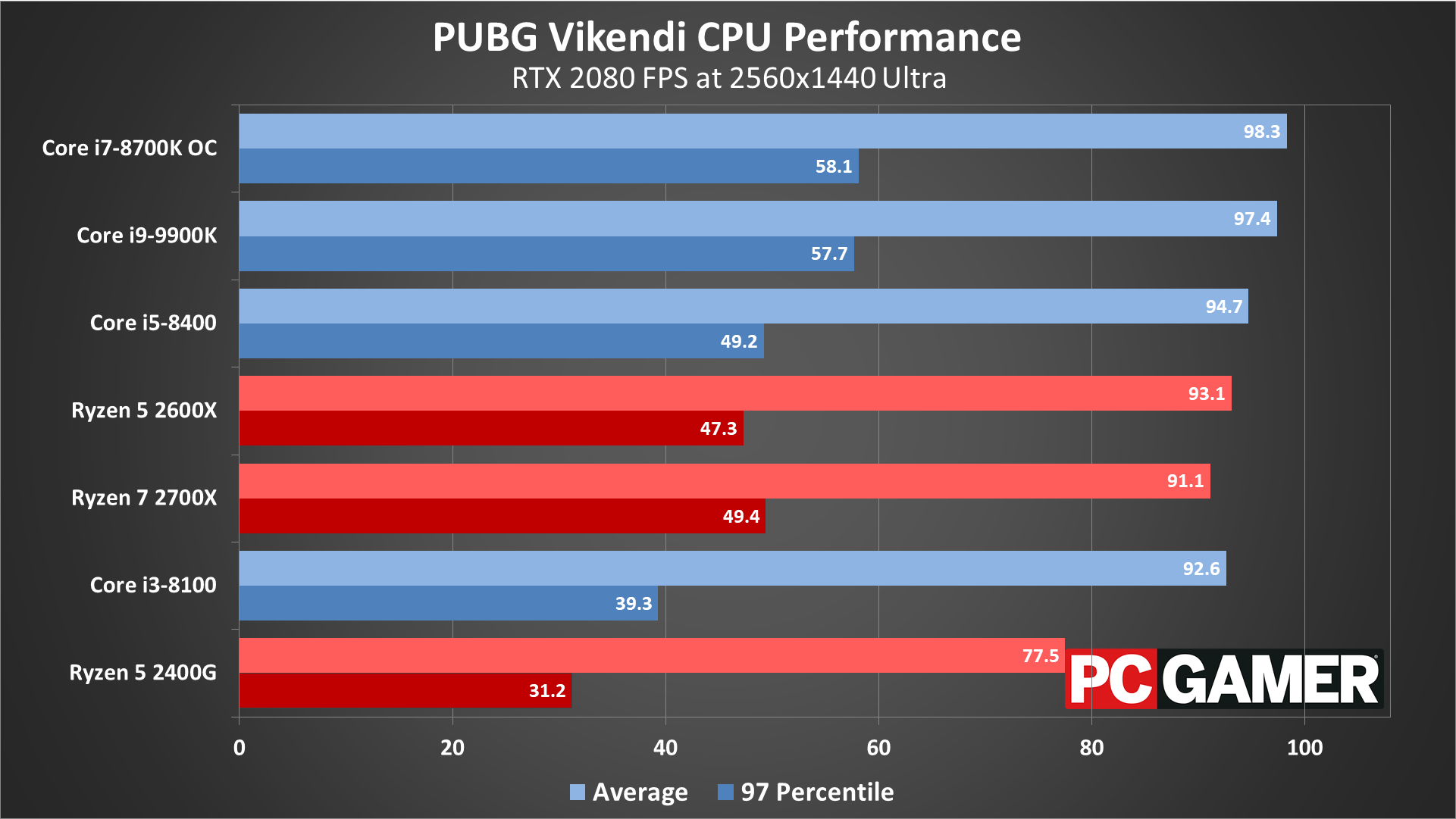

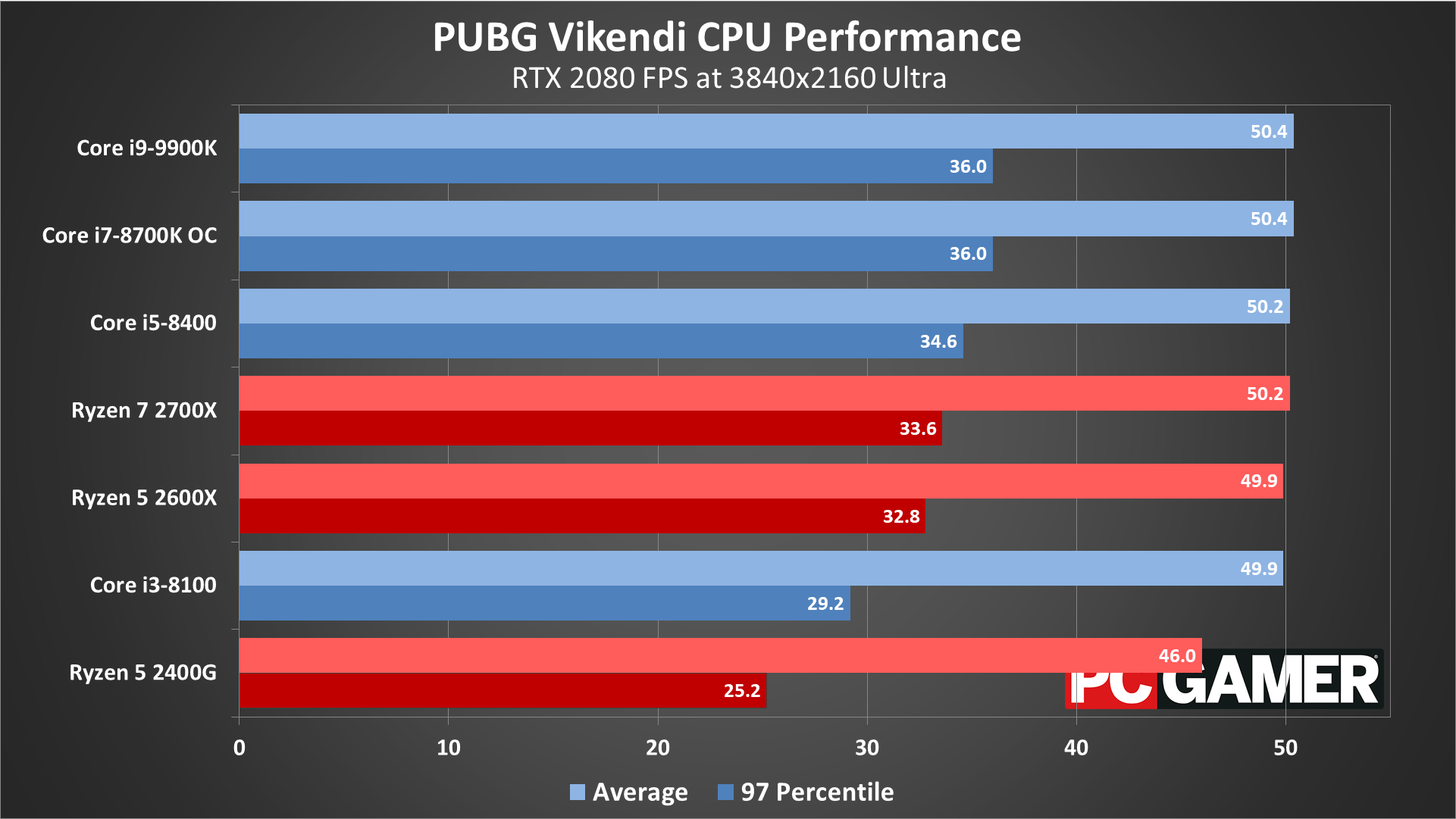

PUBG Vikendi CPU performance

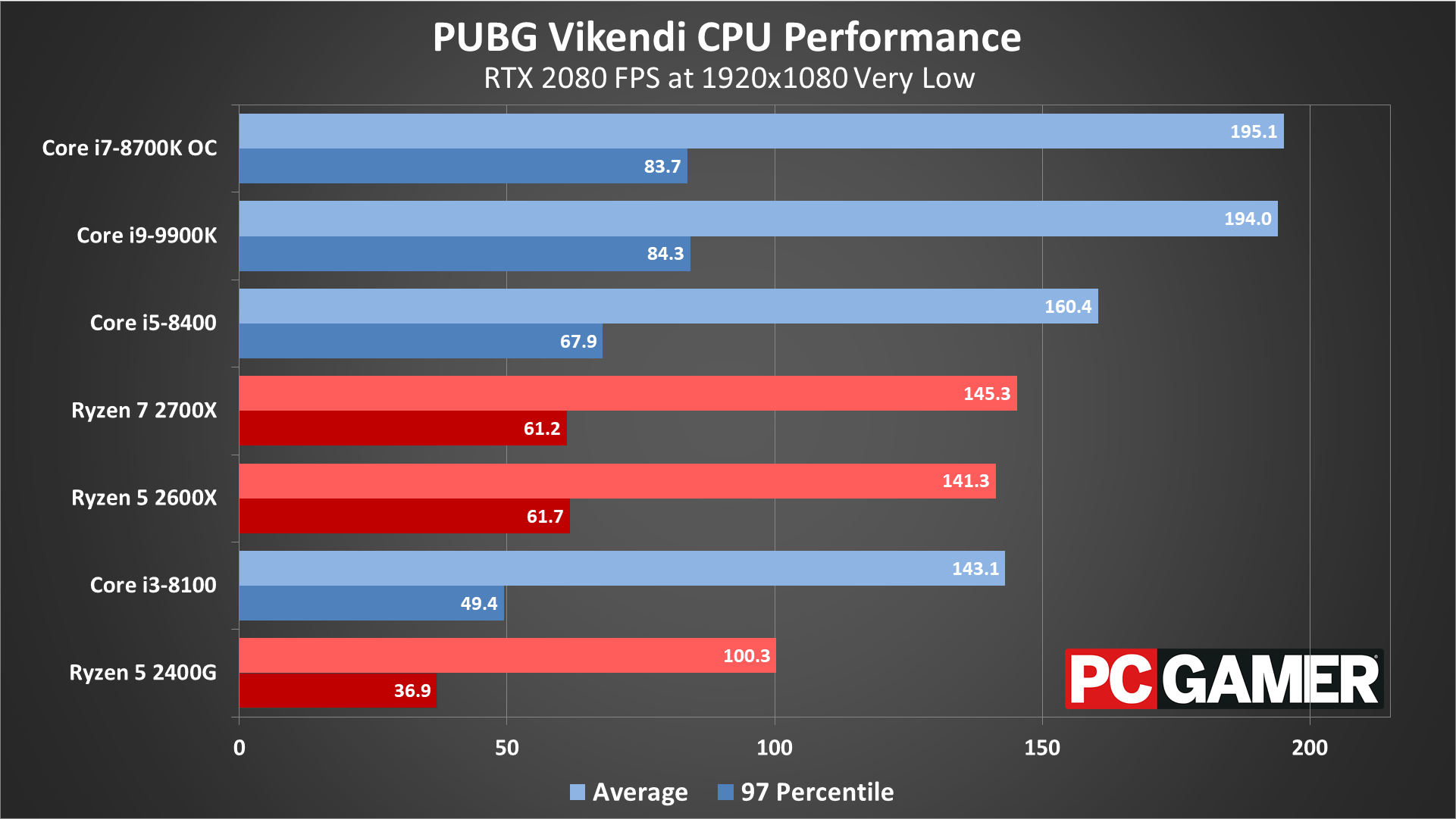

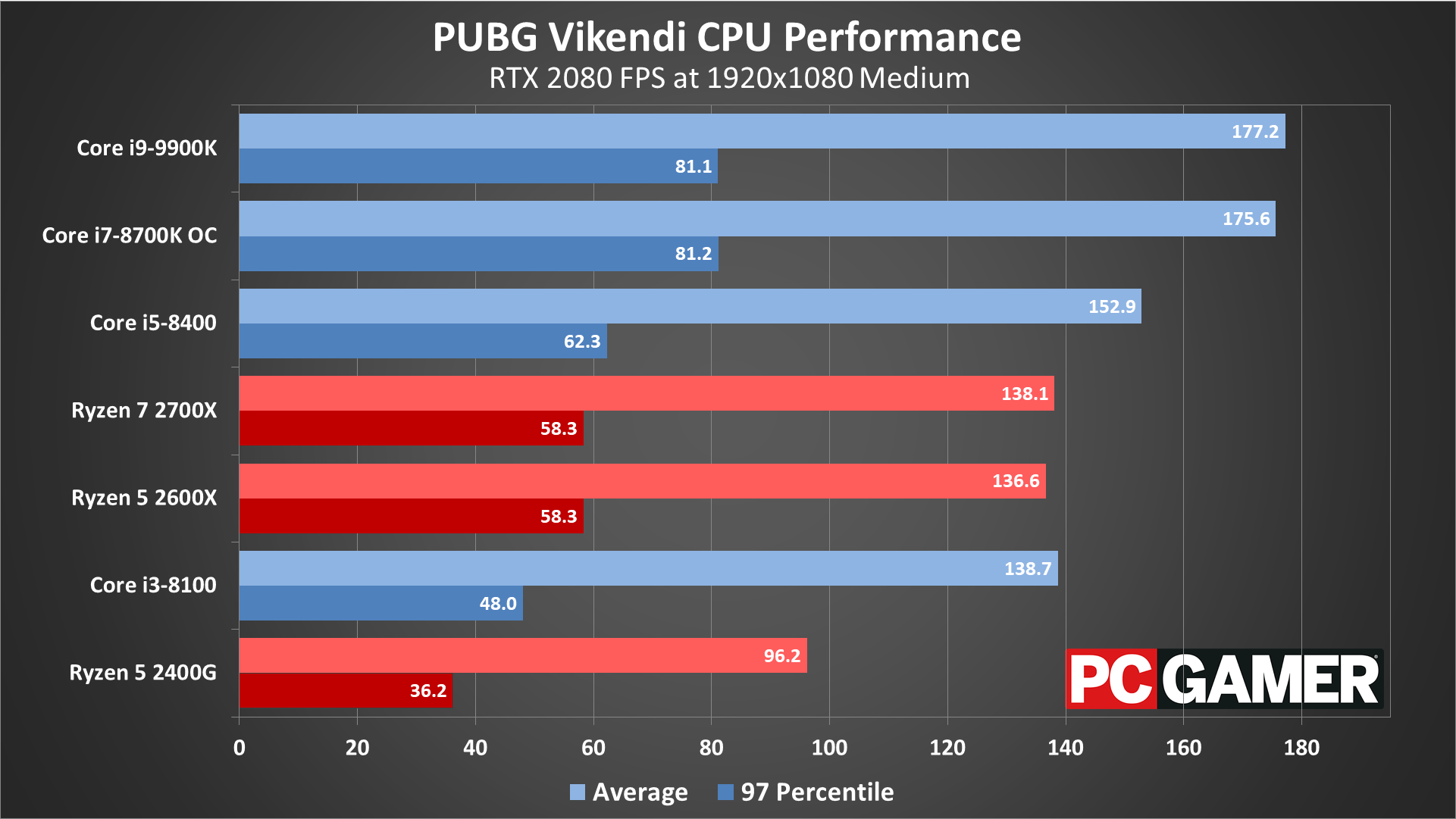

What about the CPU side of things: how many cores does Battlegrounds need to run properly? I've used the RTX 2080 for all of these tests in order to create the biggest difference in CPU results you're likely to see. (Yes, the RTX 2080 Ti would increase the gap a bit more, but that's excessive for most gamers.)

At the very low preset, the fastest CPU is about 40 percent faster than the slowest CPU. 1080p medium reduces the margin to 30 percent, and at 1080p ultra it's a 20 percent difference. At 1440p ultra and above, it's mostly a tie between all the CPUs, though minimums do show a bit more variance.

So CPU performance does help, but only when paired with an extremely fast GPU. Of course, if you're doing other things while playing PUBG (like livestreaming), you'd want a more potent CPU than the i3-8100.
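One way to see why the CPU gap shrinks as settings and resolution go up is a simple bottleneck model, where the delivered framerate is whichever of the CPU or GPU limit is lower. This is a common simplification, not a description of how PUBG schedules work, and every number below is hypothetical and not taken from the charts.

```python
def delivered_fps(cpu_limit, gpu_limit):
    """Simplified bottleneck model: the slower component sets the framerate."""
    return min(cpu_limit, gpu_limit)


def cpu_gap_percent(fast_cpu_limit, slow_cpu_limit, gpu_limit):
    """How much faster the fast CPU ends up, in percent, at a given GPU limit."""
    fast = delivered_fps(fast_cpu_limit, gpu_limit)
    slow = delivered_fps(slow_cpu_limit, gpu_limit)
    return (fast / slow - 1) * 100


if __name__ == "__main__":
    # Hypothetical limits: a fast CPU capped at 200fps and a slow one at 140fps.
    for label, gpu_limit in (("light settings", 250), ("heavier", 160), ("heaviest", 100)):
        gap = cpu_gap_percent(200, 140, gpu_limit)
        print(f"{label:>14}: GPU limit {gpu_limit}fps, CPU gap {gap:.0f} percent")
```

In this model the gap is largest when the GPU has headroom to spare and vanishes once the GPU limit falls below what the slower CPU can feed, which mirrors the trend in the results above.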

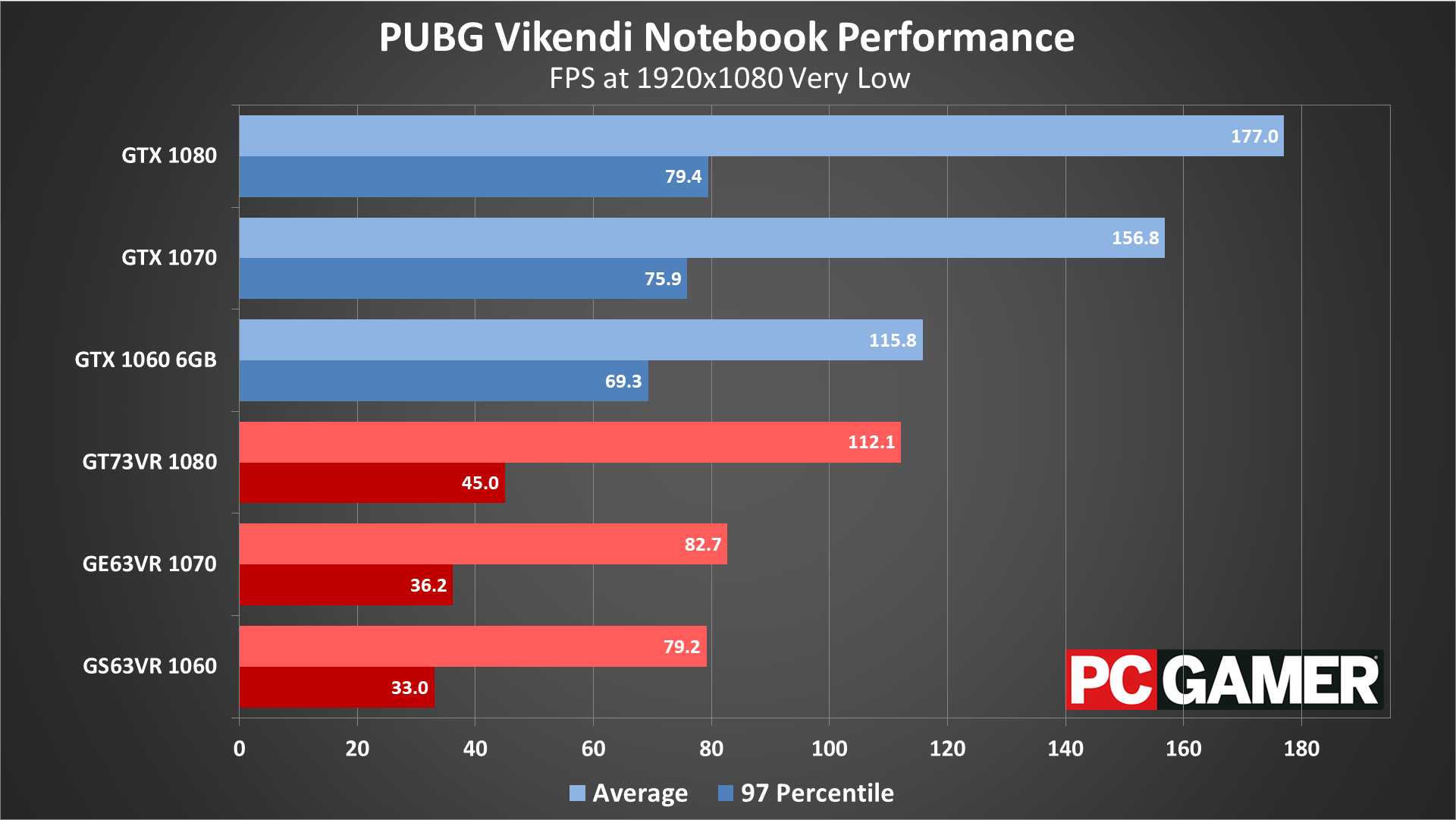

PUBG Vikendi notebook performance

Shifting gears to notebook testing, the mobile CPUs aren't able to keep the GPUs fully fed with data at 1080p low and 1080p medium. The result is that the GTX 1060 desktop GPU is able to outperform even the GT73VR, particularly when it comes to minimum fps. Once we move to 1080p ultra, the mobile 1080 is at least able to move into third place, but in games that are less CPU limited I've seen the GT73VR outperform even the desktop 1080.

I suspect the newer 6-core mobile CPUs would help eliminate the performance deficit, but the desktop chips still clock 20-30 percent higher at stock. If you have a higher resolution mobile display, you could probably play at 1440p high or medium and still get good performance, but frequent dips in framerate remain a problem.

PUBG closing thoughts

Desktop PC / motherboards

MSI Z390 MEG Godlike

MSI Z370 Gaming Pro Carbon AC

MSI X299 Gaming Pro Carbon AC

MSI Z270 Gaming Pro Carbon

MSI X470 Gaming M7 AC

MSI X370 Gaming Pro Carbon

MSI B350 Tomahawk

MSI Aegis Ti3 VR7RE SLI-014US

The GPUs

MSI GeForce RTX 2080 Ti Duke 11G OC

MSI GeForce RTX 2080 Duke 8G OC

MSI GeForce RTX 2070 Gaming Z 8G

MSI GeForce GTX 1080 Ti Gaming X 11G

MSI GTX 1080 Gaming X 8G

MSI GTX 1070 Ti Gaming 8G

MSI GTX 1070 Gaming X 8G

MSI GTX 1060 Gaming X 6G

MSI GTX 1060 Gaming X 3G

MSI GTX 1050 Ti Gaming X 4G

MSI GTX 1050 Gaming X 2G

MSI RX Vega 64 8G

MSI RX Vega 56 8G

MSI RX 580 Gaming X 8G

MSI RX 570 Gaming X 4G

MSI RX 560 4G Aero ITX

Gaming Notebooks

MSI GT73VR Titan Pro (GTX 1080)

MSI GE63VR Raider (GTX 1070)

MSI GS63VR Stealth Pro (GTX 1060)

The past year of updates has made benchmarking PUBG a bit less of a pain in the ass, thankfully. With the replay feature, it's now possible to run the exact same test sequence on each GPU and CPU, which is what I've done for these updated results. Of course, the replays do expire each time a significant update to the engine comes along, which is relatively frequent, but I was able to get all of the testing done within a period of several days.

With multiple maps now available, I've checked performance on all of them. It doesn't much matter which map you're on, as performance is similar across all of them. There are of course areas within each map that are more demanding, but overall framerates are relatively consistent across the various landscapes.

Thanks again to MSI for providing the hardware. All the updated testing was done with the latest Nvidia and AMD drivers in late December 2018, Nvidia 417.35 and AMD 18.12.3. (RTX 2060 was tested with its launch drivers in January.) While previously Nvidia GPUs held a clear advantage, things are far closer these days, and really you can play on just about any decent graphics card with the right settings.

For competitive players looking for optimal performance, the best results typically come with everything at minimum quality except for view distance. Or you could turn down just post-processing, shadows, and effects and get close to the same performance.

Bluehole continues to add to Battlegrounds, but Unreal Engine is pretty well tuned at this point. We likely won't see massive changes in performance going forward, and the game runs well on a large variety of hardware. The Vikendi map, on the other hand, is new enough that further tweaks to the level could improve performance, particularly when it comes to minimum fps.

Jarred's love of computers dates back to the dark ages when his dad brought home a DOS 2.3 PC and he left his C-64 behind. He eventually built his first custom PC in 1990 with a 286 12MHz, only to discover it was already woefully outdated when Wing Commander was released a few months later. He holds a BS in Computer Science from Brigham Young University and has been working as a tech journalist since 2004, writing for AnandTech, Maximum PC, and PC Gamer. From the first S3 Virge '3D decelerators' to today's GPUs, Jarred keeps up with all the latest graphics trends and is the one to ask about game performance.