How to get the best framerate in Rainbow Six Siege

From minimum to maximum quality, we show how the game runs on a large suite of hardware.

Rainbow Six Siege launched at the end of 2015, and it's experiencing a minor renaissance today. If you're hoping to become more competitive for the latest season, hitting 144fps on a high refresh rate monitor definitely helps. But what sort of hardware does that require? To answer that question, I’ve put together a suite of test results on all the latest graphics cards, along with several previous generation parts, and three gaming notebooks. I've also collected benchmarks from seven of the latest CPUs, though Rainbow Six Siege ends up being relatively tame when it comes to CPU requirements (at least if you're not livestreaming).

Many competitive players drop to lower quality settings to boost framerates, but it’s important to point out that Siege uses render scaling combined with temporal AA by default. Render scaling defaults to 50 percent, so unless you change the setting, 1920x1080 renders at 1360x764 (roughly half the pixels) and is then upscaled to 1080p. This introduces some blurriness and other artifacts, so if you want optimal visibility I recommend setting render scaling to 100 percent (or disabling temporal AA).
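As a rough illustration of where those numbers come from, here is a minimal Python sketch. It assumes the render scaling percentage applies to the total pixel count, so each axis is scaled by the square root of the percentage. The `render_resolution` helper and its round-up-to-a-multiple-of-4 rule are my own guesses, not anything documented by Ubisoft; they simply reproduce the resolutions quoted in this article.

```python
import math


def render_resolution(width, height, scale_percent, align=4):
    """Estimate the internal render resolution for a render scaling value.

    Assumption: the percentage refers to total pixel count, so each axis is
    scaled by sqrt(scale_percent / 100). Rounding each axis up to a multiple
    of `align` is a guess that happens to match the article's figures.
    """
    axis_scale = math.sqrt(scale_percent / 100)

    def round_up(value):
        return math.ceil(value / align) * align

    return round_up(width * axis_scale), round_up(height * axis_scale)


print(render_resolution(1920, 1080, 50))   # (1360, 764)
print(render_resolution(3840, 2160, 50))   # (2716, 1528)
print(render_resolution(2560, 1440, 100))  # (2560, 1440)
```

In other words, "50 percent" means about half the pixels, not half the width and height.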

As for graphics cards, everything from the GTX 770 and R9 380 on up can easily break 60 fps at 1080p medium, and dropping to 1080p low allows even lower spec systems to run well. Higher quality settings naturally require more powerful hardware, but most PCs built within the past several years should be more than sufficient.

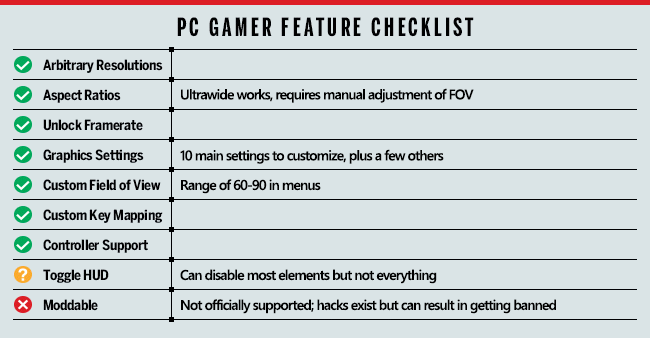

Let's start with the full rundown of the features and settings before going any further.

Rainbow Six Siege includes most of the key features we want from a modern game. The only missing items are the ability to fully disable the HUD (although you can toggle plenty of it) and mod support. The absence of mod support is frustrating, but not uncommon for Ubisoft games. The desire to prevent cheating and hacks is often cited as a reason for not supporting mods, but as with other popular competitive games, that hasn't completely stopped people from figuring out ways to exploit the system.

Rainbow Six Siege settings overview

As our partner for these detailed performance analyses, MSI provided the hardware we needed to test Rainbow Six Siege on a bunch of different AMD and Nvidia GPUs, along with multiple CPUs (see below for the full list). Our test equipment and methodology are described in our Performance Analysis 101 article. Thanks, MSI!

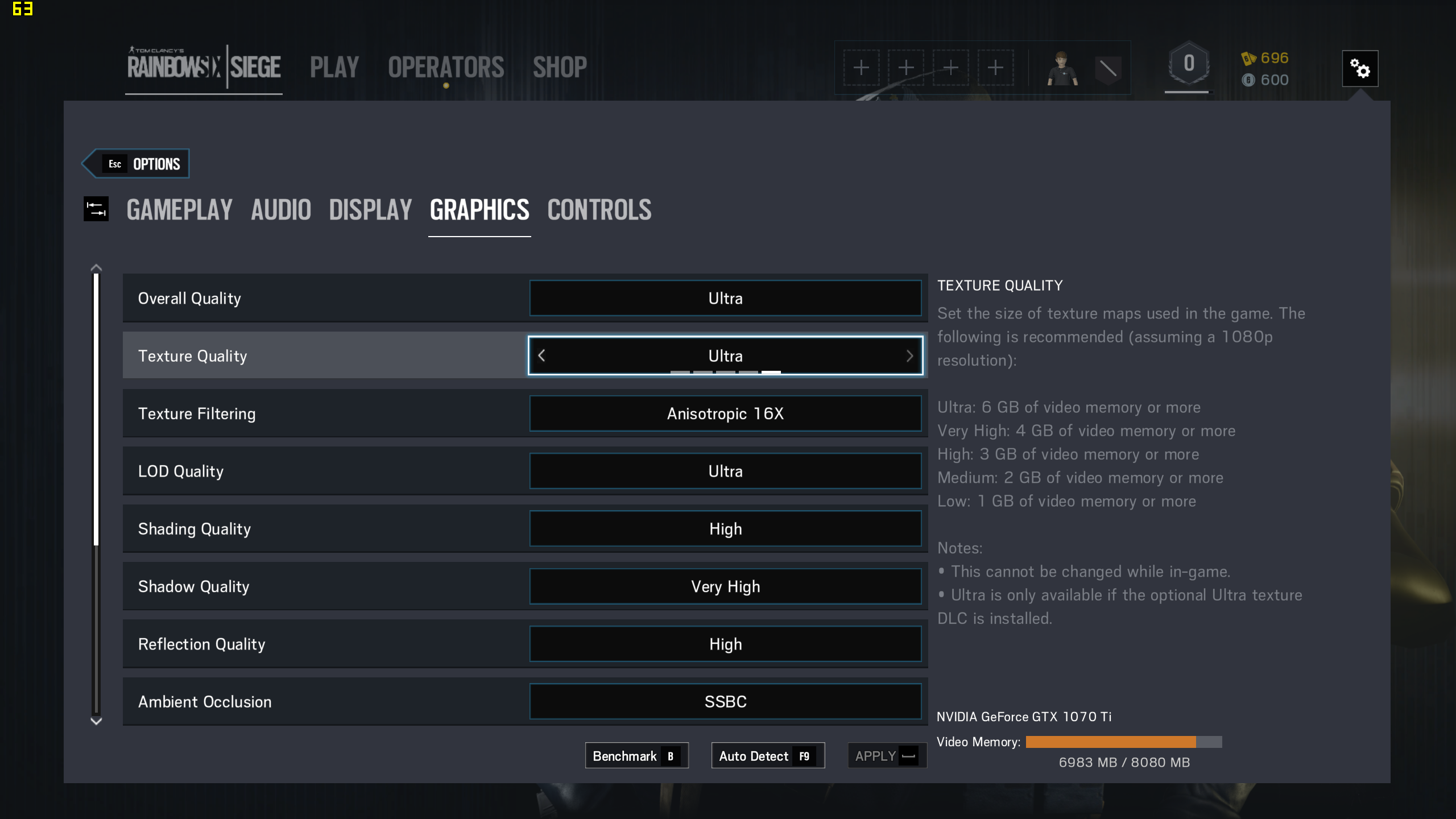

Under the Display options you can change the resolution, V-sync, and FOV, while all the quality options are under the Graphics menu. There are five presets (plus 'custom'), ranging from low to ultra. On a GTX 1070 Ti at 1440p, with 100 percent render scaling unless otherwise noted, the presets yield the following results (with the performance increase relative to the ultra preset):

- Ultra: 78.3 fps

- Very High: 86.2 fps (10% faster)

- High: 97.6 fps (25% faster)

- Medium: 116.2 fps (48% faster)

- Low: 144.1 fps (84% faster)

- Minimum (no AA): 154.0 fps (97% faster)

- Ultra with 50 percent render scaling: 121.0 fps (55% faster)
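For anyone who wants to check the percentages against their own numbers, the calculation is just each result divided by the ultra baseline. The short Python sketch below uses the figures from the list above; the `percent_faster` helper name is mine.

```python
# Average fps per preset on the GTX 1070 Ti at 1440p, from the list above.
results = {
    "Ultra": 78.3,
    "Very High": 86.2,
    "High": 97.6,
    "Medium": 116.2,
    "Low": 144.1,
    "Minimum (no AA)": 154.0,
    "Ultra with 50 percent render scaling": 121.0,
}


def percent_faster(fps, baseline_fps):
    """Return how much faster `fps` is than the baseline, as a whole percent."""
    return round((fps / baseline_fps - 1) * 100)


baseline = results["Ultra"]
for preset, fps in results.items():
    print(f"{preset}: {fps:.1f} fps ({percent_faster(fps, baseline)}% faster)")
```

To compare your own hardware, swap in your benchmark results and keep ultra as the baseline.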


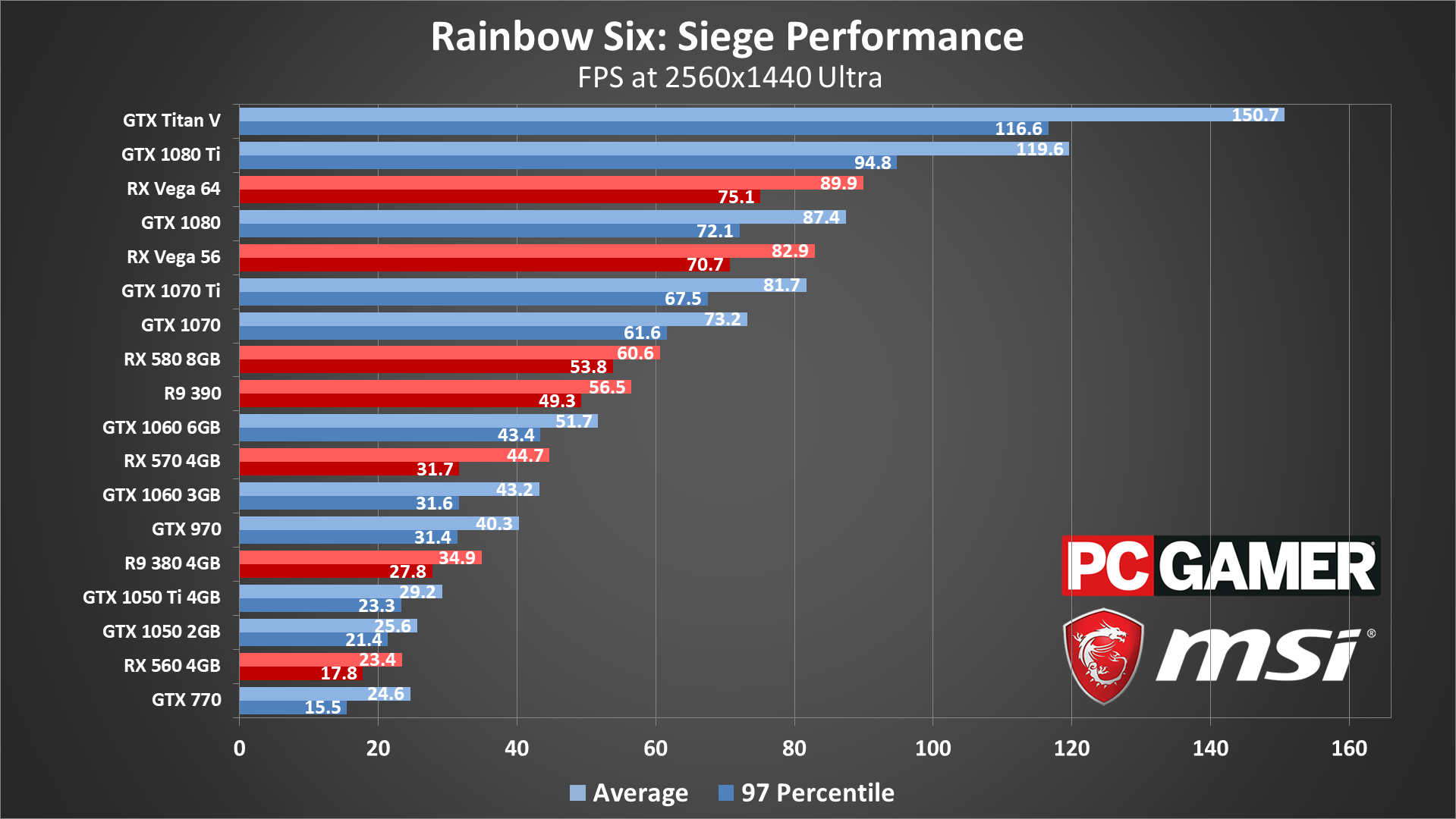

We consider 1440p and a 144Hz display (preferably G-Sync or FreeSync) to be the sweet spot for displays, and Rainbow Six Siege proves quite demanding at higher quality settings. Note also how big of a difference the default 50 percent render scaling makes—and the option to adjust render scaling was only introduced into the PC version in late 2017 (meaning, prior to then, everyone on PC was running with 50 percent render scaling if temporal AA was enabled).


Rainbow Six Siege has ten primary quality settings you can adjust, plus two more related to the way temporal AA is handled. It's a bit odd, but I've found that after selecting the ultra preset, as soon as you change any setting (which triggers the 'custom' preset), you get a relatively large boost in framerate of around 20 percent, even if you put the setting back to its 'ultra' default. There's almost certainly a change in image quality as well when this happens, though it's difficult to determine exactly what's changing. For the following tests, all settings are at their default ultra values, but using the 'custom' preset, and performance changes are relative to that.

Texture Quality: Sets the size of the textures used in the game: 6GB for ultra, 4GB for very high, 3GB for high, 2GB for medium, and 1GB for low. Requires reloading a map to change. Cards with less VRAM can be impacted more, but with a high-end card dropping to low only improves performance by about four percent.

Texture Filtering: Controls the type of texture filtering, either linear or anisotropic. Dropping to the lowest setting yields only a small three percent improvement in performance.

LOD Quality: Adjusts the distance at which lower detail geometry meshes are used. Minor impact of around four percent.

Shading Quality: Tweaks the lighting quality, skin subsurface scattering, and other elements. Less than a one percent improvement in performance.

Shadow Quality: Sets the resolution of shadow maps as well as other factors for shadows. Dropping to low improves performance by around ten percent.

Reflection Quality: Adjusts the quality of reflections, with low using static cube maps, medium using half-resolution screen space reflections, and high using full resolution screen space reflections. Dropping to low improves performance by around seven percent, with only a minor change in image quality.

Ambient Occlusion: Affects the soft shadowing of nearby surfaces and provides for more realistic images. Uses SSBC (a Ubisoft-developed form of AO) by default for most settings. Turning this off improves performance by around 14 percent, while switching to HBAO+ (an Nvidia-developed form of AO that's generally considered more accurate) reduces performance by a few percent, potentially more on older AMD cards.

Lens Effects: Simulates real-world optical lenses with bloom and lens flare. Turning this off made almost no difference in performance, but it may have a more noticeable effect when using scoped weapons.

Zoom-in Depth of Field: Enables the depth of field effect when zooming in with weapons. This didn't impact performance in the benchmark sequence but may affect actual gameplay more. If you don't like the blur effect, I recommend turning this off.

Anti-Aliasing: Allows the choice of post-processing technique to reduce jaggies. By default this is T-AA (temporal AA), which uses an MSAA (multi-sample AA) pattern combined with previous frame data. T-AA-2X and T-AA-4X use samples beyond the display resolution and can require a lot of VRAM. FXAA doesn't work as well but is basically free to use. Turning AA off improves performance by around four percent.

Render Scaling: Combined with the AA setting (when T-AA is in use), this adjusts the rendered resolution, which is then scaled to your display's resolution. The default is 50, which improves performance by 50-80 percent compared to 100, but this is somewhat like lowering the display resolution. I prefer this to be set to 100, though for performance reasons you may want to lower it.

T-AA Sharpness: Determines the intensity of the sharpening filter that's applied after performing temporal AA, but can actually negate some of the benefits of AA. Negligible impact on performance.

For these tests, I'm using 100 percent render scaling, which will result in performance figures that are lower than what you may have seen previously. I’m also testing with the latest version of the game, as of late February 2018, using the then-current Nvidia 390.77 and AMD 18.2.2 drivers. On the medium preset, I also switch to FXAA instead of T-AA, while the ultra preset uses T-AA.

Rainbow Six Siege includes a built-in benchmark, which I’ve used for this performance analysis. Different maps and areas can alter the framerate, but in general performance scales similarly, so the ranking of GPUs should remain roughly the same. One thing that's worth pointing out, however, is that AMD GPUs tend to perform quite a bit better in higher complexity scenes (eg, when there are half a dozen or so character models in the benchmark sequence, with wall explosions going off), while the Nvidia cards hit higher framerates in less complex environments. Despite having an Nvidia logo and branding, then, Rainbow Six Siege often runs better on AMD graphics cards right now (not that any GPUs are affordable at present).

MSI provided all the hardware for this testing, consisting mostly of its Gaming/Gaming X graphics cards. These cards are designed to be fast but quiet, though the RX Vega cards are reference models and the RX 560 is an Aero model. I gave both the Vega cards and the 560 a slight overclock to level the playing field, so all of the cards represent factory OC models.

My main test system uses MSI's Z370 Gaming Pro Carbon AC with a Core i7-8700K as the primary processor, and 16GB of DDR4-3200 CL14 memory from G.Skill. I also tested performance with Ryzen processors on MSI's X370 Gaming Pro Carbon, also with DDR4-3200 CL14 RAM. The game is run from a Samsung 850 Pro 2TB SATA SSD for these tests, except on the laptops where I've used their HDD storage.

Rainbow Six Siege graphics card benchmarks

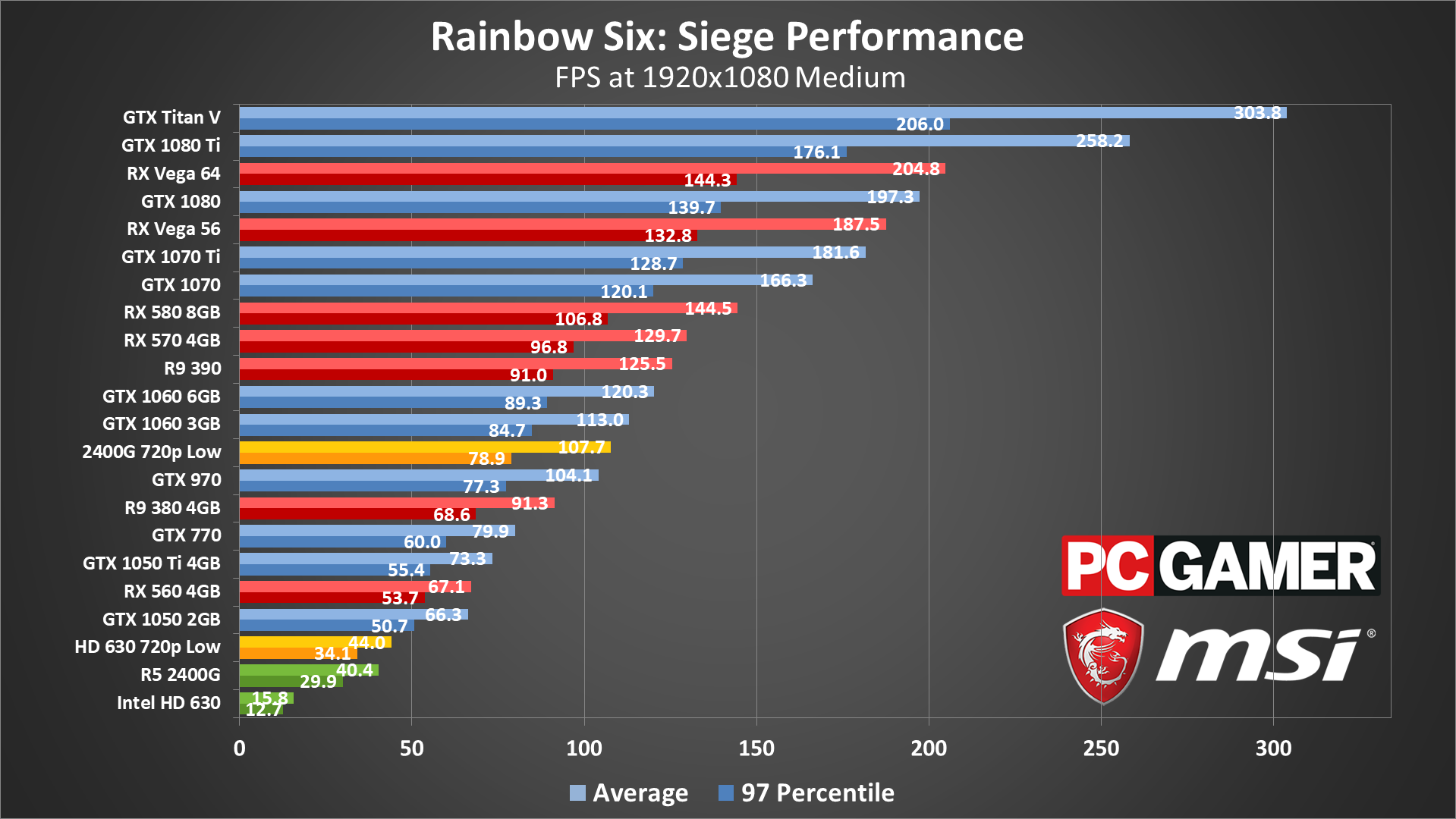

At 1080p medium, everything from the RX 560 and GTX 1050 on up is able to break 60fps averages, though minimums on some of the cards fall just short. I've also included AMD's 2400G with Vega 11 and Intel's 8700K with HD 630 in the charts, at both 1080p medium and 720p low settings. Both of the integrated solutions are playable, with the 2400G easily breaking 60fps, though at 1080p low it does fall short (53fps).

If you're looking to play Rainbow Six Siege at 1080p, the hardware requirements are pretty modest—the game lists a minimum requirement of a GTX 460 or HD 5870, while the recommended GPU is a GTX 670/760 or HD 7970/R9 280X or above. At low to medium quality 1080p, the recommended GPUs should be able to hit 60fps or more, while the minimum GPUs will only be sufficient for around 30-50 fps.

But what if you're hoping to max out a 144Hz or even a 240Hz 1080p display? For the latter, you'd need at least a GTX 1080 or RX Vega, and even then you'd need to drop to low quality. The difference between 144Hz and 240Hz is pretty minimal, however (unless you're hopped up on energy drinks?), and for 144Hz gaming the 1060 and above at low quality should suffice.
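One way to put numbers on that claim is to convert refresh rates into per-frame time budgets. The Python sketch below is plain arithmetic on refresh intervals, not a measurement of perceived smoothness, and `frame_budget_ms` is just an illustrative helper name.

```python
def frame_budget_ms(refresh_hz):
    """Time available to render one frame at a given refresh rate, in ms."""
    return 1000.0 / refresh_hz


rates = (60, 144, 240)
for hz in rates:
    print(f"{hz}Hz: {frame_budget_ms(hz):.2f} ms per frame")

# How much each step up shortens the interval between frames.
for slower, faster in zip(rates, rates[1:]):
    saved = frame_budget_ms(slower) - frame_budget_ms(faster)
    print(f"{slower}Hz -> {faster}Hz: {saved:.2f} ms shorter")
```

Going from 60Hz to 144Hz cuts the frame interval by nearly 10 ms, while going from 144Hz to 240Hz trims it by less than 3 ms, which helps explain the diminishing returns.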

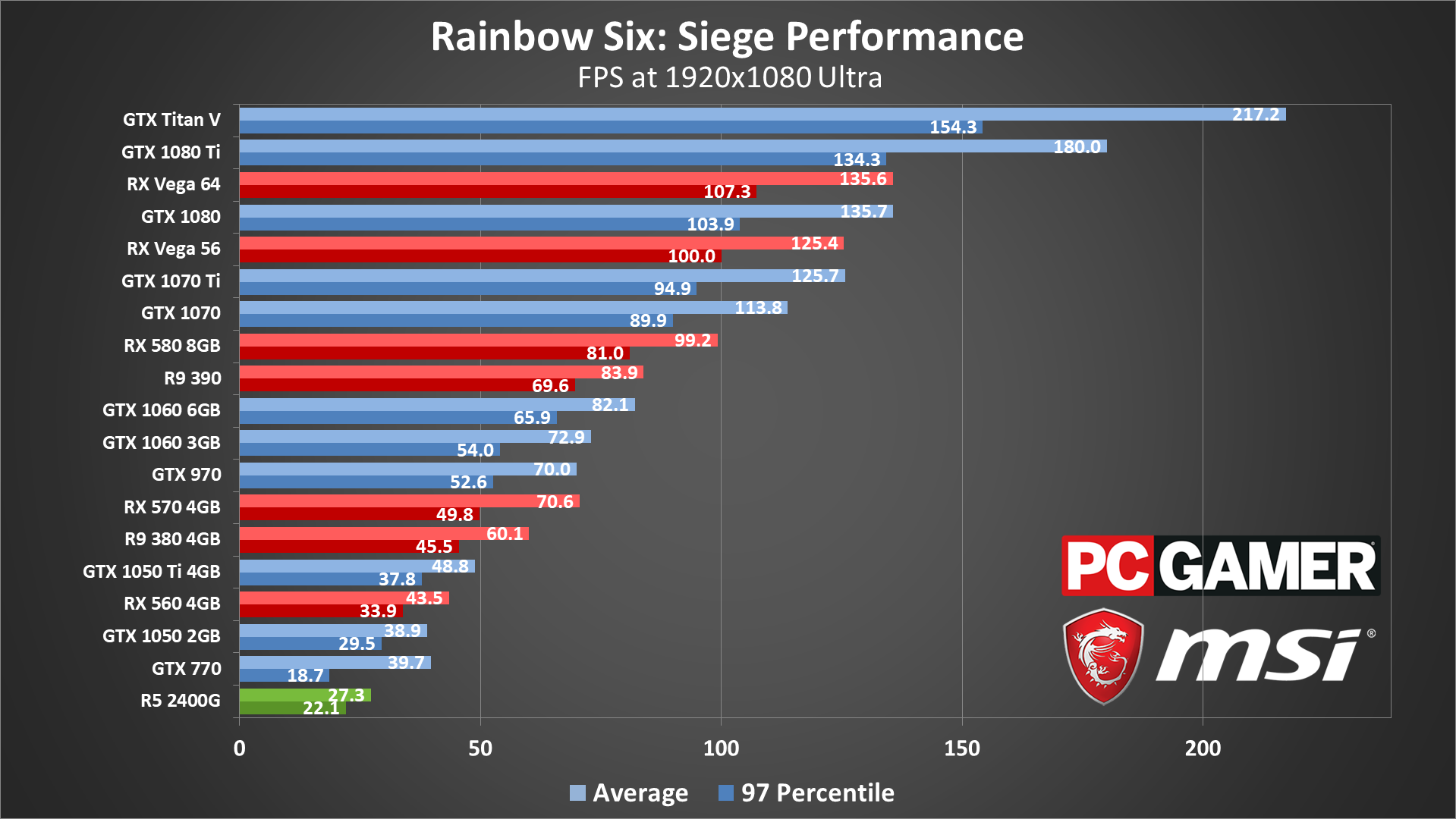

Maxing out the settings at 1080p ultra drops performance by about 30-40 percent, depending on your GPU, and by even more on GPUs that don't have 4GB or more of VRAM. The R9 380 and GTX 970 are still able to hit 60+ fps, though the RX 570 4GB stumbles a bit relative to its direct competition. I'd strongly suggest dropping to the high preset on most of the GPUs, which boosts performance by about 25 percent relative to ultra and doesn't cause a significant drop in image fidelity.

For 144Hz 1080p gaming, you'll need at least a 1080 Ti to max out your monitor, or the 1070 and Vega and above if you're willing to reduce a few settings. 240Hz on the other hand is out of reach of even the Titan V, unless you tweak the settings or use render scaling. As with 1080p medium, AMD GPUs tend to deliver a better overall experience than their direct Nvidia competitors, at least if we go by MSRPs. The 580 8GB has a significant 20 percent lead over the 1060 6GB for example, possibly thanks to the extra VRAM. The Vega cards also just manage to edge out the 1070 Ti and 1080, though it's basically a tie.

If you're looking to hit Platinum in ranked play, you likely won’t want to go beyond 1440p, and even then, running at lower quality settings in order to hit higher refresh rates would be advisable. If you have a 1440p 144Hz G-Sync or FreeSync display, however, you can get an extremely smooth experience at anything above 90fps or so. Dropping a few settings (eg, using the very high preset for a 10 percent performance boost) should suffice on everything from the 1070 and above, or medium to low quality on the 1060 and above.

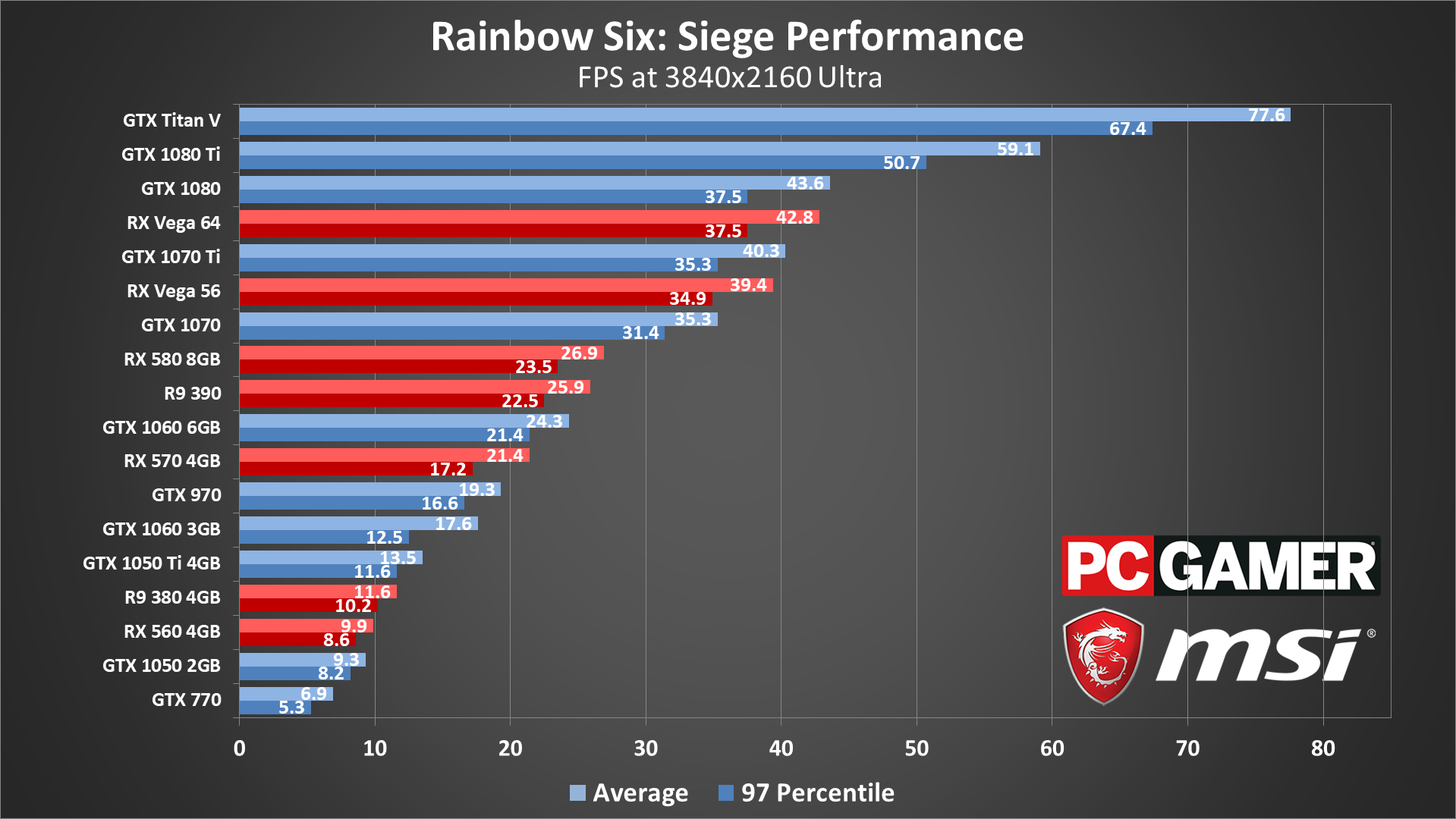

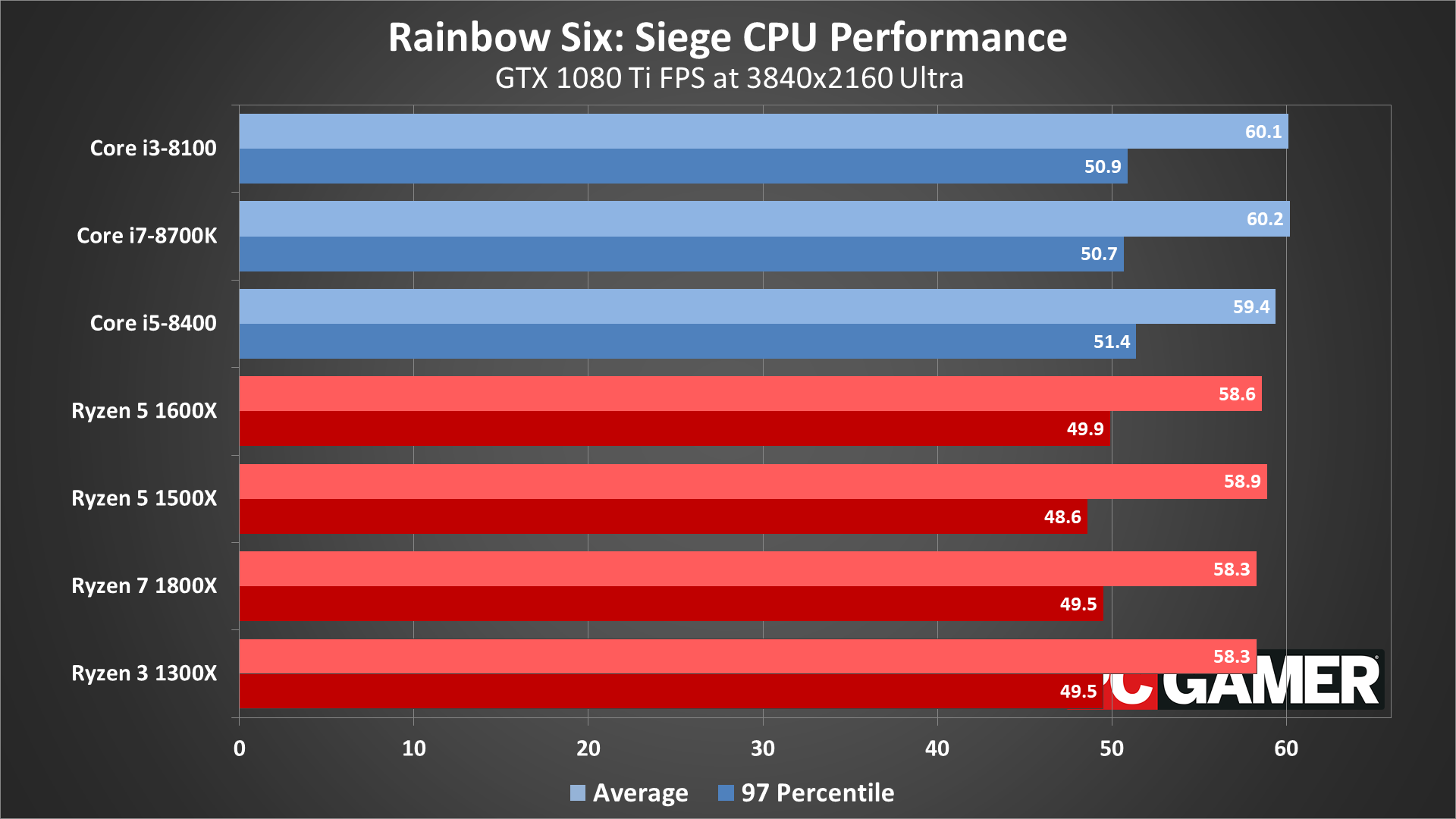

4k gaming isn't a great choice for competitive shooters, at least unless you're running one of the 120Hz or higher 4k displays that are now starting to appear. But even then, hitting 120fps at 4k and maximum quality is basically a pipe dream: even the 1080 Ti comes up just shy of 60fps. There are ways to change that, of course. As an example, using ultra quality with 50 percent render scaling, which renders at 2716x1528 and then upscales with T-AA to 3840x2160, the GTX 1080 Ti gets up to 95 fps. Welcome to the new 4k, which is actually 2.7k.

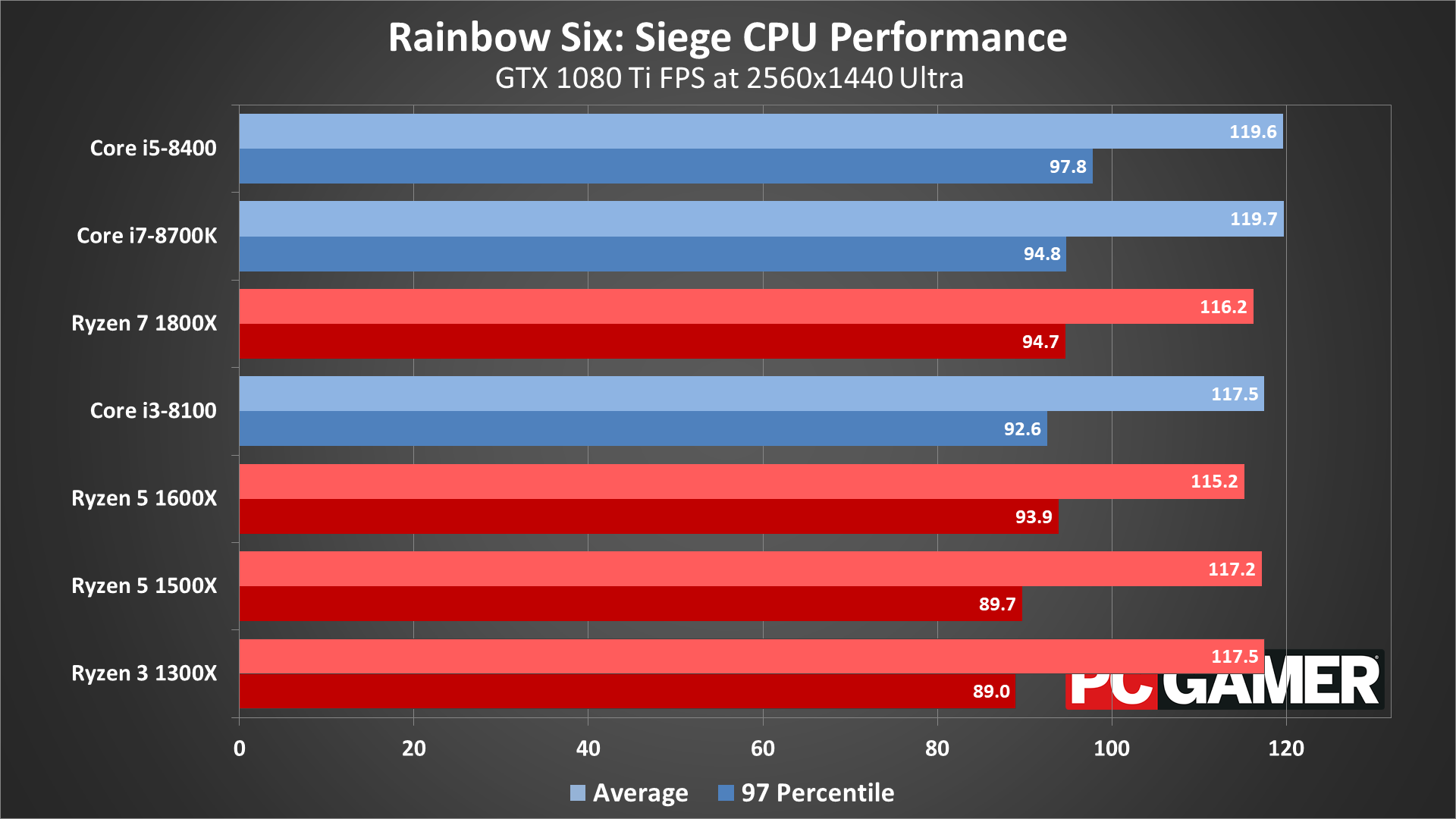

Rainbow Six Siege CPU performance

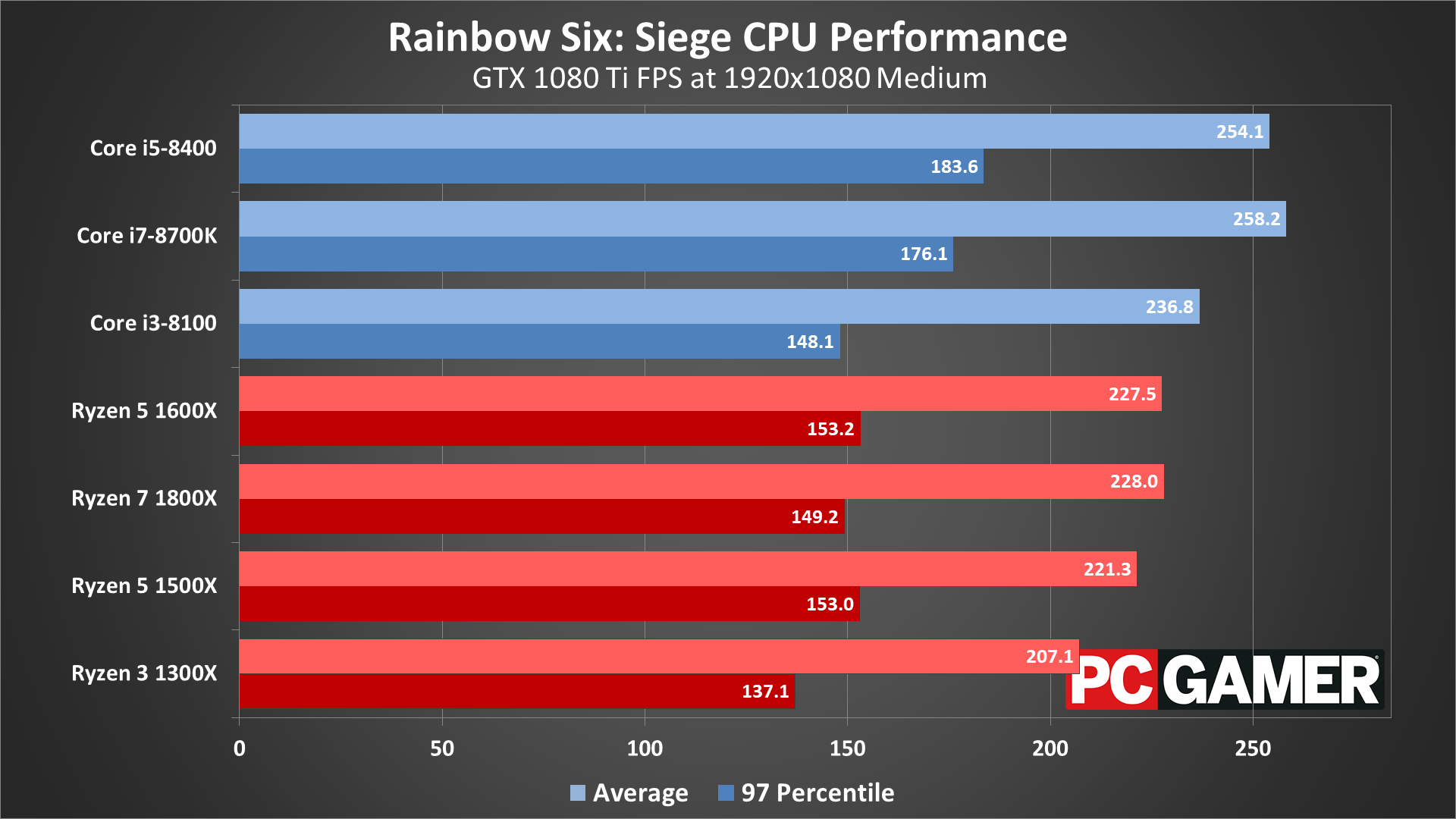

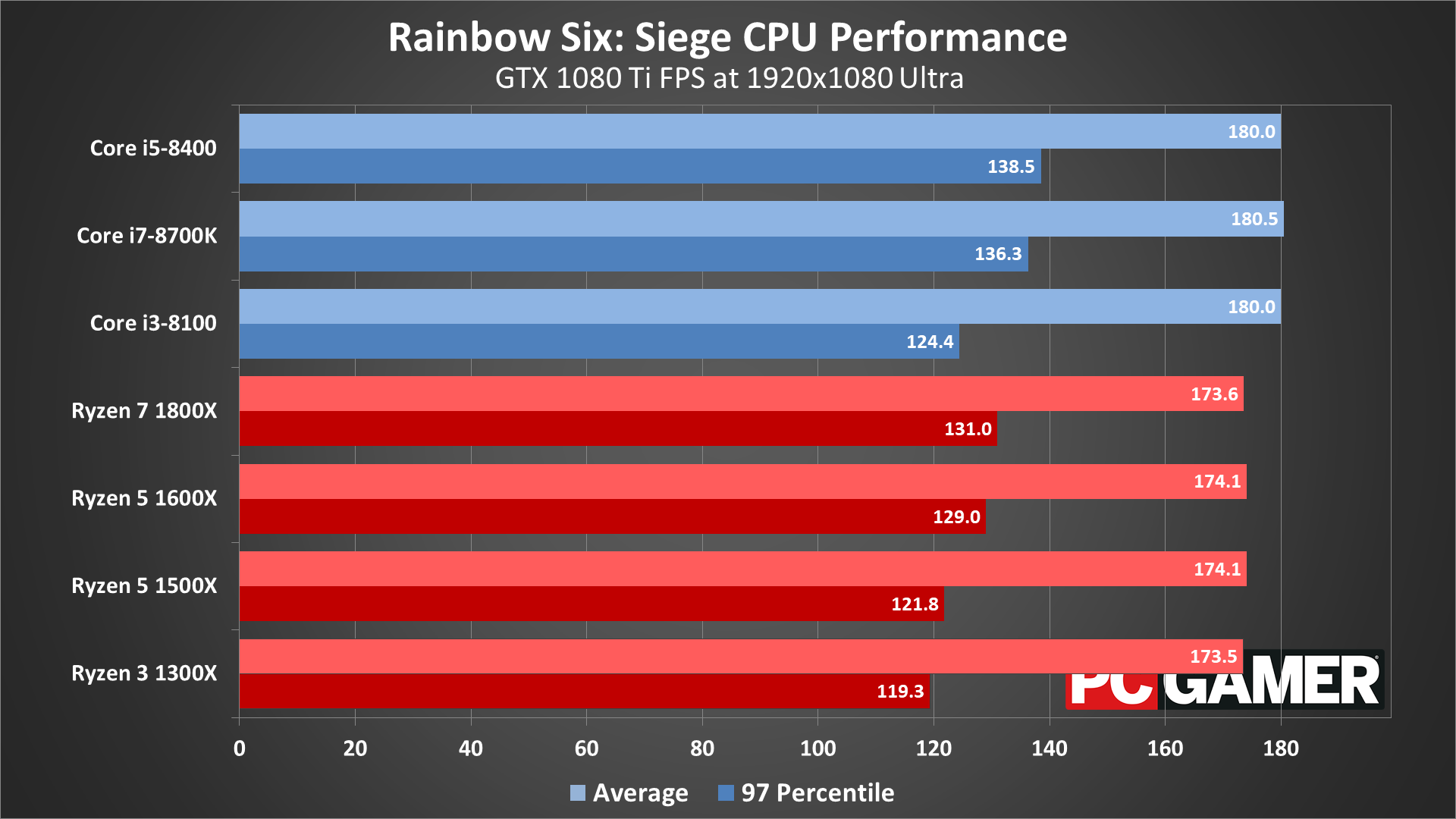

For CPU testing, I've used the GTX 1080 Ti on all the processors. This is to try and show the maximum difference in performance you're likely to see from the various CPUs—running with a slower GPU will greatly reduce the performance gap. For Rainbow Six Siege, the CPU ends up being practically a non-event, at least if you're running anything relatively recent. Even 4-core/4-thread CPUs like the Ryzen 3 1300X and Core i3-8100 (which is basically the same performance as an i5-6500) only drop performance by 10-20 percent at 1080p medium, and that's compared to an overclocked 4.8GHz i7-8700K. Move to 1080p ultra and the gap between the fastest and slowest CPU I tested is only four percent, and at 1440p and 4k it's basically a tie among all the CPUs.

The 4-core/4-thread parts do show more framerate variations and lower minimums, and if you're doing something like livestreaming you'll want at least a 6-core processor. But if you're just playing the game, all the CPUs I tested can break 144fps (with the right GPU). Older 2-core/4-thread Core i3 parts or chips like AMD's FX-6300 might fare worse, but you should be able to easily hit 60fps or more.

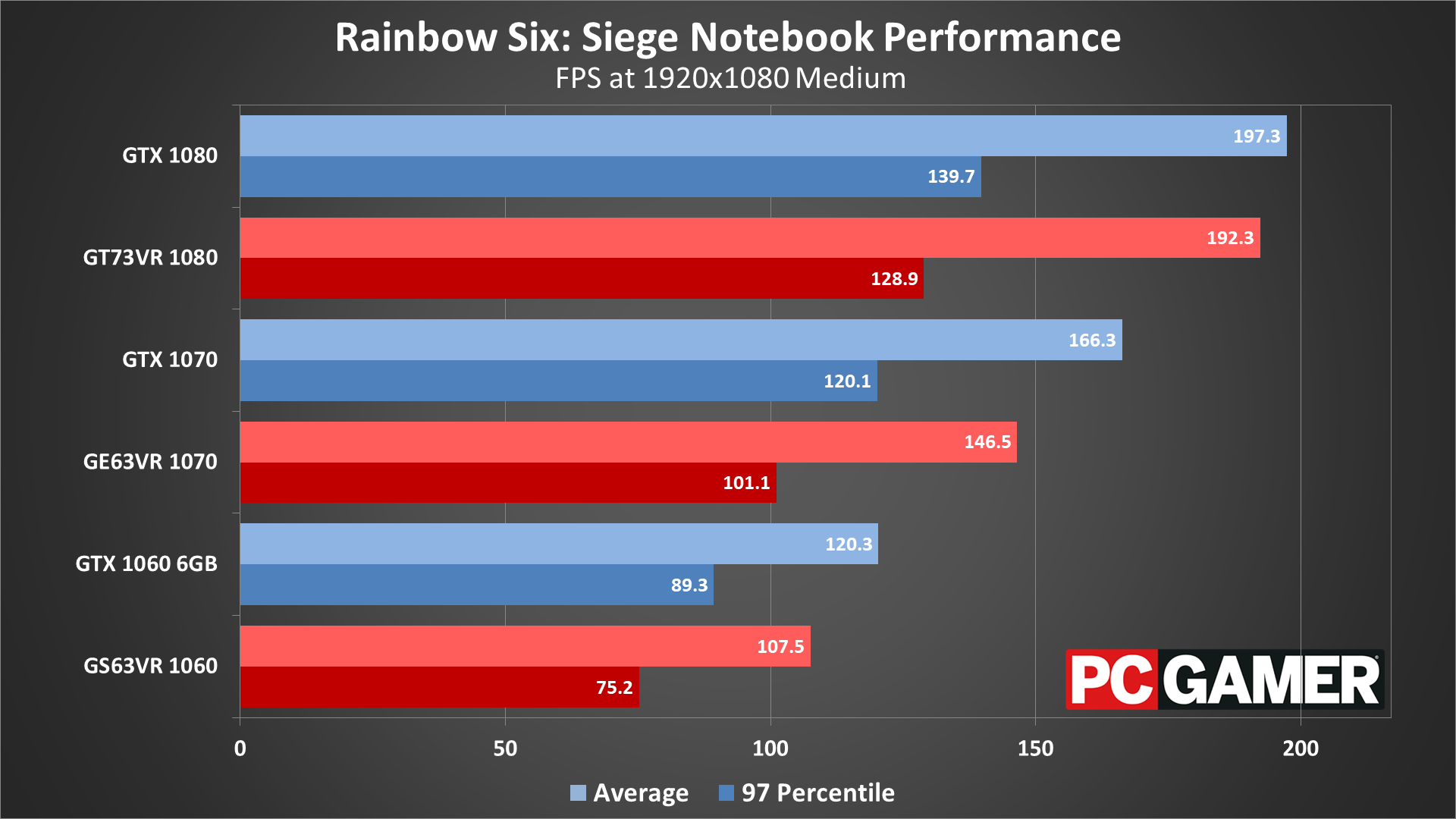

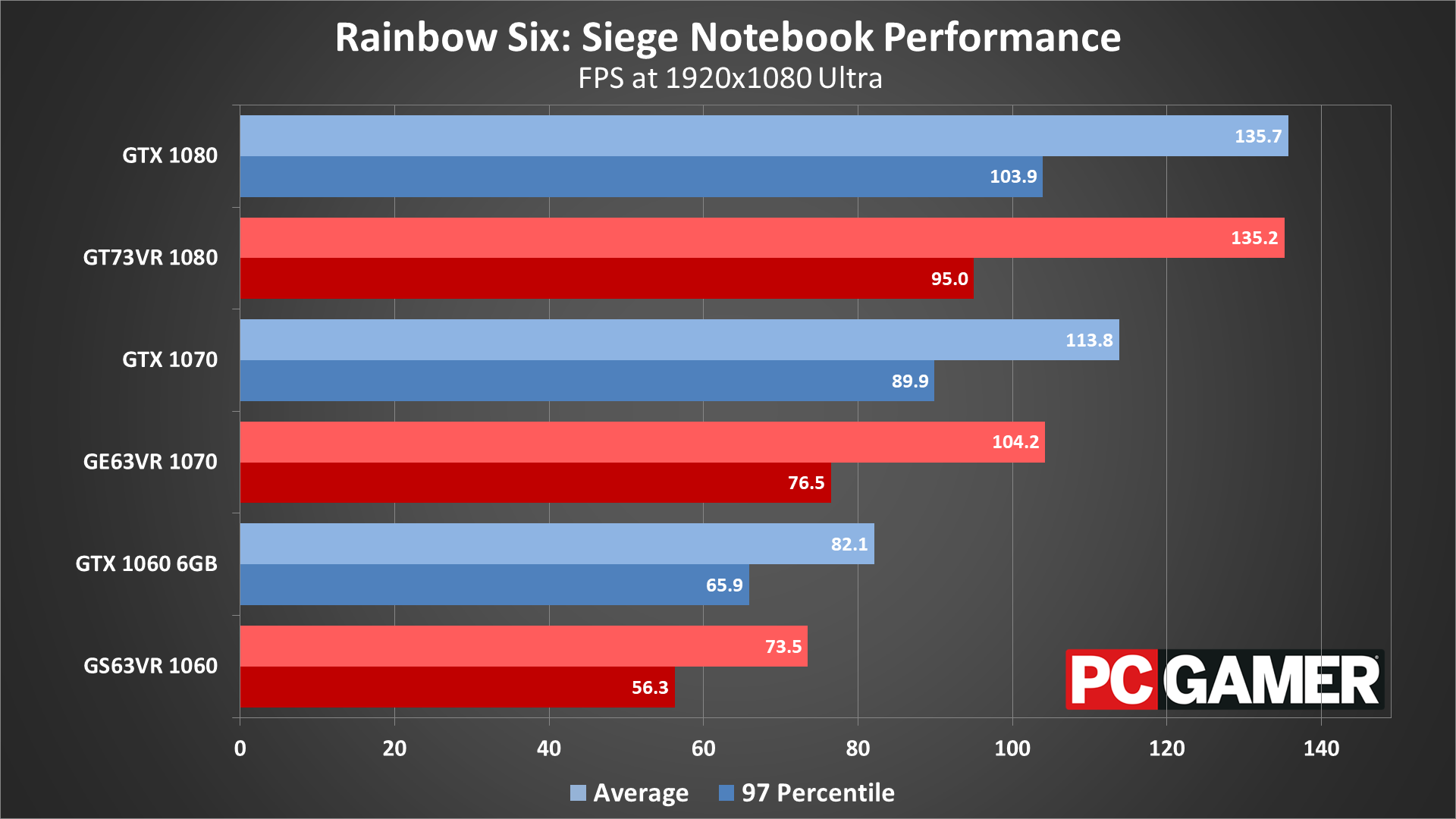

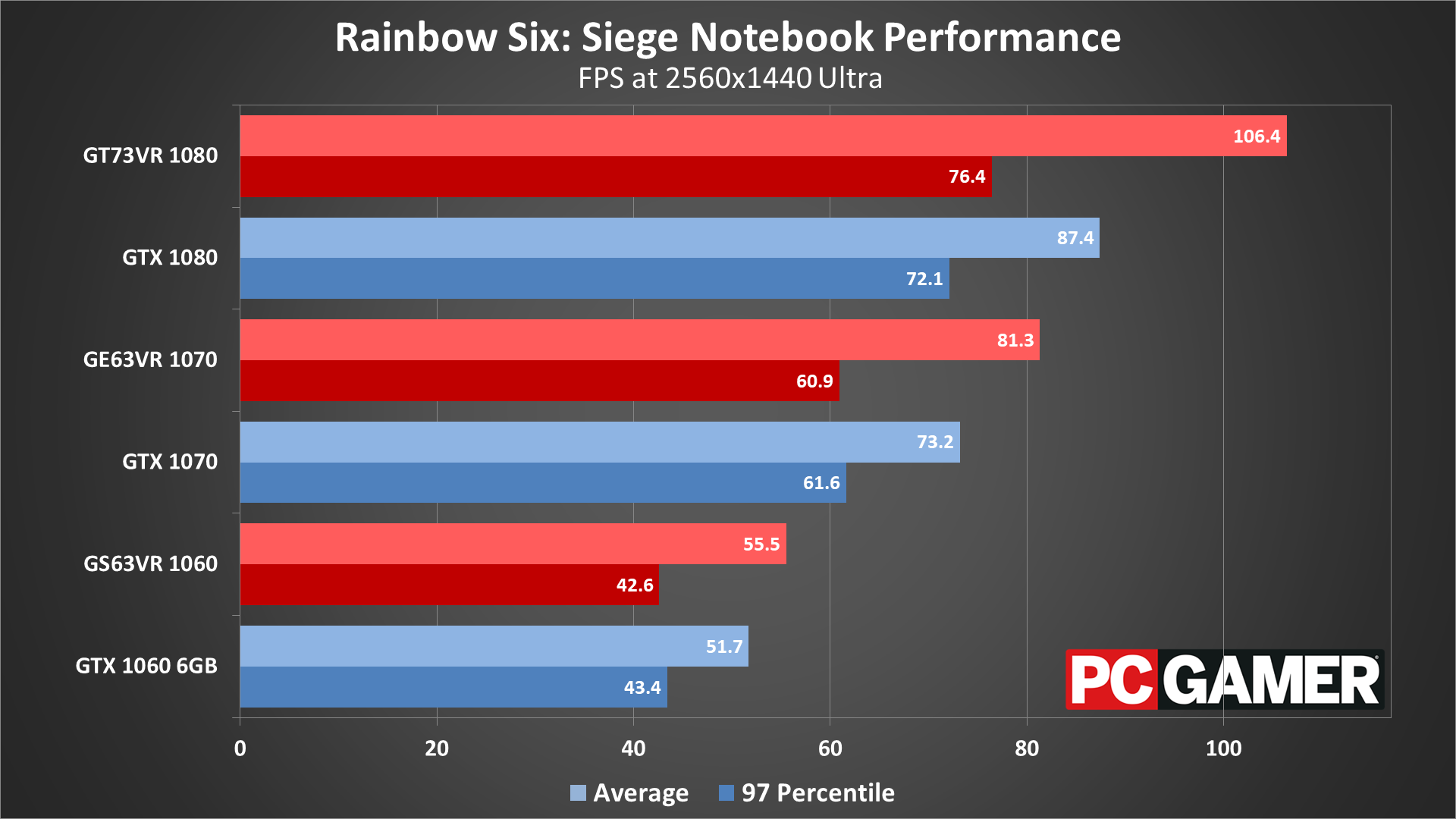

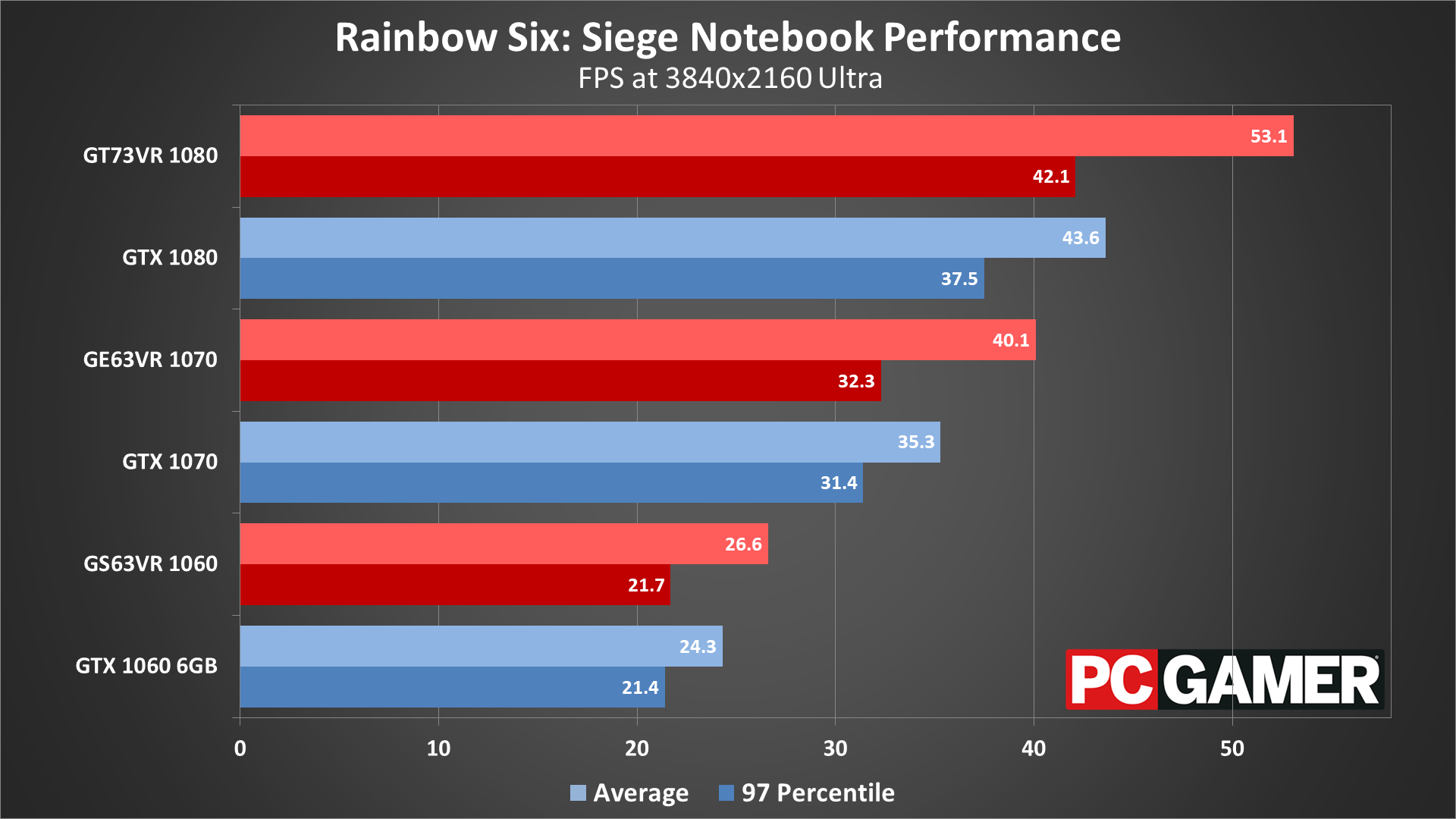

Rainbow Six Siege notebook performance

Note: the notebooks are using T-AA-4x with 1280x720 for this chart.

Note: the notebooks are using T-AA-4x with 1920x1080 for this chart.

What about playing on a gaming notebook? Because the CPU doesn't have a major influence on performance, the only real difference between the desktop and notebook Nvidia GPUs is their clockspeeds. The notebook parts are typically clocked about 10 percent lower, though the GT73VR is actually overclocked to desktop GPU speeds. The biggest gap I measured between the desktop and the notebooks is about 15 percent (the 1070 at 1080p ultra).

Desktop PC / motherboards

MSI Aegis Ti3 VR7RE SLI-014US

MSI Z370 Gaming Pro Carbon AC

MSI X299 Gaming Pro Carbon AC

MSI Z270 Gaming Pro Carbon

MSI X370 Gaming Pro Carbon

MSI B350 Tomahawk

The GPUs

GeForce GTX 1080 Ti Gaming X 11G

MSI GTX 1080 Gaming X 8G

MSI GTX 1070 Ti Gaming 8G

MSI GTX 1070 Gaming X 8G

MSI GTX 1060 Gaming X 6G

MSI GTX 1060 Gaming X 3G

MSI GTX 1050 Ti Gaming X 4G

MSI GTX 1050 Gaming X 2G

MSI RX Vega 64 8G

MSI RX Vega 56 8G

MSI RX 580 Gaming X 8G

MSI RX 570 Gaming X 4G

MSI RX 560 4G Aero ITX

Gaming Notebooks

MSI GT73VR Titan Pro (GTX 1080)

MSI GE63VR Raider (GTX 1070)

MSI GS63VR Stealth Pro (GTX 1060)

I also wanted to show how the render scaling isn't quite the same as actually playing at a higher resolution, so for the laptops I used T-AA-4x with 1280x720 (supposedly the same as 2560x1440) and 1920x1080 (3840x2160 simulated). Instead of being slightly slower, the notebooks outperform the desktop GPUs at these faux-1440p/4k resolutions, and with fewer jaggies as a bonus. I'd recommend sticking with your laptop's native resolution if possible, however.

If you'd like to compare performance to my results, I've used the built-in benchmark to make things easy. Just remember to switch to 100 percent render scaling for the ultra preset, and FXAA for the 1080p medium results. I collected frametimes using FRAPS, and then calculated the average and 97th percentile minimums using Excel. The 97th percentile minimums are calculated by finding the 97th percentile frametime (the point where the frametime is higher than that of 97 percent of frames), then averaging all frames with a worse result. The real-time overlay graphs in the video are generated from the frametime data, using custom software that I've created.
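If you'd rather not use a spreadsheet, the same calculation can be sketched in a few lines of Python. This is an approximation of the method described above, not my actual Excel workbook. It assumes frametimes are in milliseconds, that the average is total frames divided by total time, and that the percentile uses linear interpolation. The `percentile` and `summarize` names are hypothetical.

```python
def percentile(sorted_values, fraction):
    """Linearly interpolated percentile of an already sorted list."""
    rank = fraction * (len(sorted_values) - 1)
    lower = int(rank)
    upper = min(lower + 1, len(sorted_values) - 1)
    weight = rank - lower
    return sorted_values[lower] + weight * (sorted_values[upper] - sorted_values[lower])


def summarize(frametimes_ms, fraction=0.97):
    """Return (average fps, percentile-minimum fps) for a list of frametimes."""
    ordered = sorted(frametimes_ms)
    # Average fps: number of frames divided by total elapsed time.
    average_fps = 1000.0 * len(ordered) / sum(ordered)
    # Frametime that is higher than `fraction` of all frames.
    threshold = percentile(ordered, fraction)
    # Average the frames that are worse (slower) than that threshold.
    worse = [t for t in ordered if t > threshold]
    if not worse:  # every frame is identical, so fall back to the threshold
        worse = [threshold]
    minimum_fps = 1000.0 / (sum(worse) / len(worse))
    return average_fps, minimum_fps


# Synthetic example: 97 frames at 10 ms plus three 20 ms stutters.
average, minimum = summarize([10.0] * 97 + [20.0] * 3)
print(f"average: {average:.1f} fps, 97th percentile minimum: {minimum:.1f} fps")
```

Averaging the worst three percent of frames, rather than reporting the single slowest frame, keeps one stray hitch from dominating the minimum.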

Thanks again to MSI for providing the hardware. These test results were collected February 22-26, 2018, using the latest version of the game and the graphics drivers available at the start of testing (Nvidia 390.77 and AMD 18.2.2). In heavy firefights, AMD GPUs tend to do better, which is good to see considering the game has Nvidia branding—no favoritism is on display here. Part of that may also be thanks to the age of the game, as AMD and Nvidia have both had ample time to optimize drivers, and the popularity of the game has been a good impetus to do so.

With plenty of dials and knobs available to adjust your settings, Rainbow Six Siege can run on a large variety of hardware, which might partially explain its popularity. More likely is that the tactical combat appeals to a different set of gamers than stuff like PUBG and CS:GO, and Ubisoft has also done a great job at providing regular updates to the game over the past two years, with the new Operation Chimera and the Outbreak event set to go live in the near future.

Jarred's love of computers dates back to the dark ages when his dad brought home a DOS 2.3 PC and he left his C-64 behind. He eventually built his first custom PC in 1990 with a 286 12MHz, only to discover it was already woefully outdated when Wing Commander was released a few months later. He holds a BS in Computer Science from Brigham Young University and has been working as a tech journalist since 2004, writing for AnandTech, Maximum PC, and PC Gamer. From the first S3 Virge '3D decelerators' to today's GPUs, Jarred keeps up with all the latest graphics trends and is the one to ask about game performance.