Nvidia vs AMD: Which is truly better?

Everything you really need to know about Team Green vs. Team Red.

Millions of virtual soldiers have fought and died in the forum wars arguing over the relative merits of AMD and Nvidia GPUs. Gamers are a passionate group, and no other piece of hardware is likely to elicit as much furor as graphics cards. But really, what are the differences between AMD and Nvidia graphics processors? Is one vendor truly better, who makes the best graphics card, and which should you buy?

This is our take on the subject, which sets aside notions of brand loyalty to look at what each company truly offers.

A brief historical overview

PC Gamer is going back to the basics with a series of guides, how-tos, and deep dives into PC gaming's core concepts. We're calling it The Complete Guide to PC Gaming, and it's all being made possible by Razer, which stepped up to support this months-long project. Thanks, Razer!

A quick history of both companies is a good place to start. AMD (Advanced Micro Devices) has been around since 1969, nearly 50 years now. Based out of Santa Clara, California, the company got its start making microchips, often for other vendors. Over the years, AMD has acquired other companies and sold off portions of its business. The two most noteworthy of these are the purchase of ATI Technologies in 2006, which became AMD's GPU division, and the 2008 spin-off of its chip manufacturing (foundry) division, which became GlobalFoundries. This is the AMD most people are familiar with today, a company that designs both CPUs and GPUs and has those parts manufactured at one of several places: TSMC, GlobalFoundries, or Samsung. AMD's primary products today are sold under the Ryzen (CPUs) and Radeon (GPUs) brands.

Nvidia hasn't been around quite as long. Founded in 1993 and also based out of Santa Clara, Nvidia focused on graphics from the beginning. Its first major product was the Riva TNT in 1998, followed by the TNT2 in 1999. These were arguably the most successful all-in-one 2D and 3D graphics solutions up to that time. The GeForce 256 in 1999 was billed as the first GPU (Graphics Processing Unit) thanks to its inclusion of hardware support for T&L (Transform and Lighting) calculations. Nvidia's GeForce brand has stayed in place for nearly 20 years, and is currently (depending on how you want to count) in its 17th generation. Nvidia is also a fabless company (meaning it designs chips but doesn't manufacture them itself), relying primarily on TSMC for GPU manufacturing, though Samsung also makes some of the chips.

The graphics industry has been consolidated to primarily ATI/AMD and Nvidia, with Intel involved as well thanks to its integrated graphics business. (Intel is also planning to go after the dedicated graphics card market starting in 2020, though it's far too early to say how that will play out.) While both AMD and Nvidia have had numerous other ventures over the decades, including chipsets, mobile devices, and more, discussions and arguments over AMD and Nvidia tend to be focused on the GPU and graphics products. We'll confine the remainder of our discussion to that subject.

How your GPU works

Part of the early difficulties in the graphics market involved competing standards. 3dfx created its Glide API (Application Programming Interface) as a low-level way of talking to the hardware, which helped with performance but could only run on 3dfx hardware. Having a generic interface that works on any hardware helps with software development, and eventually DirectX and OpenGL would win out.

Today we have 'generic' low-level APIs like DirectX 12 and Vulkan, but whatever the API, the idea is that the GPU is a black box. The API defines the inputs to a specific function and the expected outputs, but how those outputs are generated is up to the drivers and hardware. If a company can come up with faster or more efficient ways to perform the required calculations, it can gain a competitive advantage. There's a second way to gain an advantage, and that's to create extensions to the core API that perform new calculations—and if the extensions later become part of the main API, even better.


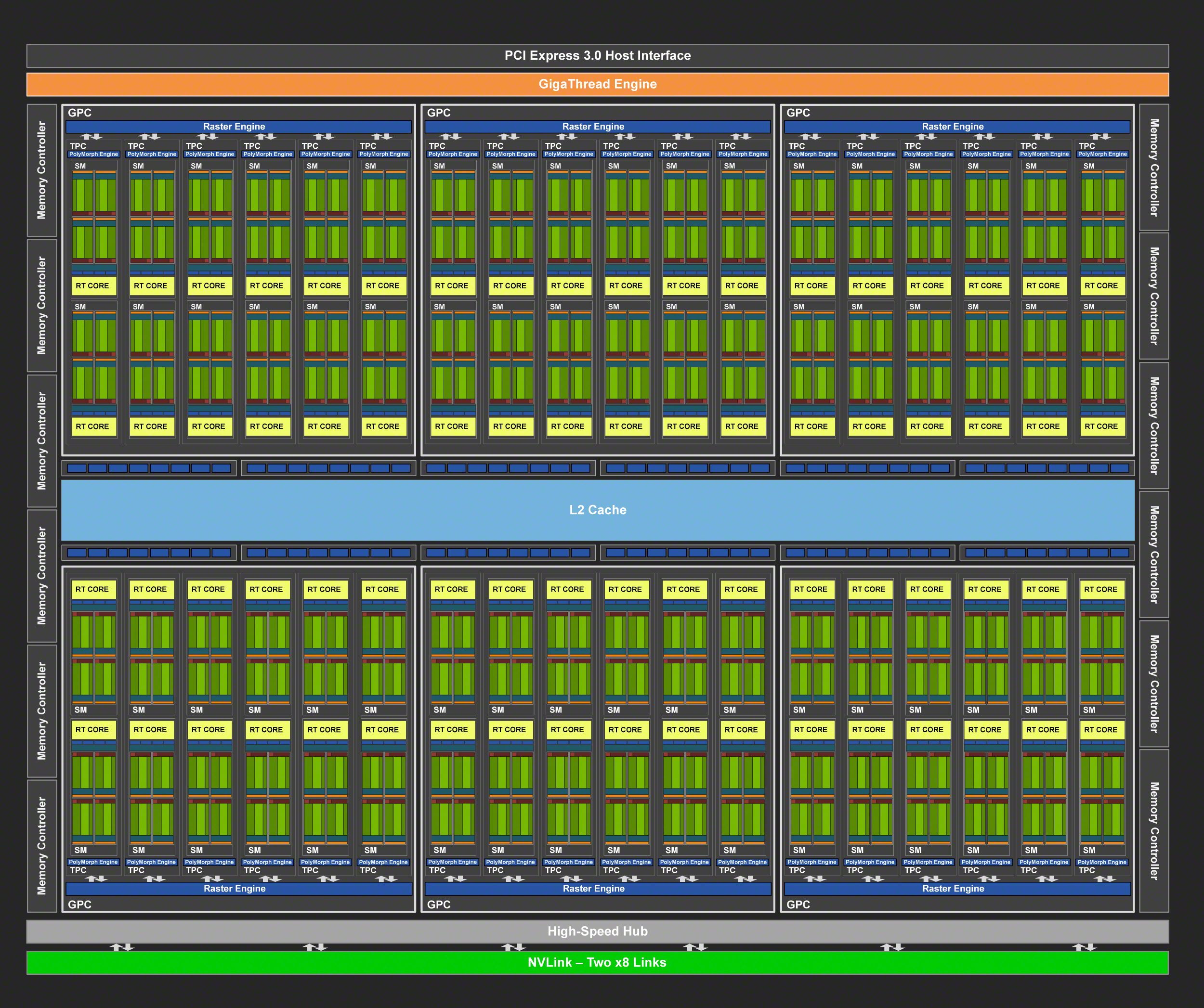

Internally, both AMD and Nvidia GPUs do the same primary calculations, but there are differences in the implementation. AMD GPUs, for example, have supported asynchronous compute since the first GCN (Graphics Core Next) architecture rolled off the production line in 2012, but it didn't really matter until Windows 10 arrived in 2015 with DirectX 12. Async compute provides more flexibility in scheduling work, letting compute tasks run alongside graphics tasks instead of waiting their turn. Specifically, DirectX 12 defines three types of command queues (graphics, compute, and copy), and the GCN architecture has separate hardware queues to match. Nvidia GPUs don't have equivalent separate hardware queues, instead handling much of that scheduling in the drivers.

AMD GPUs tend to have more processing cores—stream processors, GPU cores, or whatever you want to call them—compared to their Nvidia counterparts. As an example, the AMD RX Vega 64 has 4,096 GPU cores, while the competing Nvidia GTX 1080 has 2,560 cores. The same goes for the RX 580 (2,304 cores) against the GTX 1060 6GB (1,280 cores). Nvidia typically makes up for the core count deficit with higher clockspeeds and better efficiency.
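To see how higher clocks offset a smaller core count, here's a minimal Python sketch of peak single-precision throughput, assuming each core retires one fused multiply-add (two floating-point operations) per clock. The boost clocks are reference specs we've assumed for illustration; they don't come from this article, and factory-overclocked cards will differ.

```python
# Peak FP32 throughput sketch. Boost clocks are assumed reference specs,
# not figures from this article; real cards vary by model and load.
CARDS = {
    # name: (shader cores, assumed reference boost clock in MHz)
    "RX Vega 64": (4096, 1546),
    "GTX 1080": (2560, 1733),
    "RX 580": (2304, 1340),
    "GTX 1060 6GB": (1280, 1708),
}


def peak_tflops(cores: int, boost_mhz: int) -> float:
    """Cores x 2 FLOPs per clock (one fused multiply-add) x clock, in TFLOPS."""
    return cores * 2 * boost_mhz * 1e6 / 1e12


for name, (cores, mhz) in CARDS.items():
    print(f"{name:>13}: {peak_tflops(cores, mhz):5.2f} TFLOPS")
```

By this measure the Vega 64 has 60 percent more cores than the GTX 1080 but only about 43 percent more peak throughput (12.66 vs. 8.87 TFLOPS), and yet the GTX 1080 comes out slightly ahead in our game testing. Higher clocks narrow the gap, and better real-world utilization of each core covers the rest. Peak TFLOPS is a ceiling, not a prediction of frame rates.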

Secondary calculations, for extensions and other elements, are where Nvidia holds a clear advantage (or a monopoly, if you prefer). As the dominant GPU manufacturer (see below), Nvidia has used its position to add numerous technologies over the years. PhysX and most GameWorks libraries are designed for Nvidia GPUs, and in many cases can't even be used on non-Nvidia cards. The GeForce RTX Turing architecture takes this to a new level, helping pave the way for real-time ray tracing, deep learning, and other features. Will AMD support DirectX Raytracing (DXR) in the future? Probably, but we don't know when that will happen; Navi in late 2019 looks like the earliest possibility.

The real questions: how fast and how expensive?

Ultimately, the debates about which company is better come down to performance and price. Many people love the idea of driving an exotic sports car, but few of us are willing to shell out the money. Competition between the major vendors tends to have a positive effect on pricing; conversely, when one vendor holds a commanding lead, prices often go up. Historically, the crown for the fastest GPU has changed hands many times. The original GeForce DDR, the GeForce 4, and the GeForce GTX 980 and later cards put Nvidia in the lead, while ATI/AMD's Radeon 9700/9800 Pro, HD 5870, HD 7870, and R9 290X have been excellent options.

Today, in raw performance, there's no question about which GPUs are faster. Nvidia's GTX 1080 Ti and above easily beat AMD's fastest GPUs, and the company's new RTX 2080 Ti holds the crown. Across twelve benchmarked games, many of which carry AMD branding, the RTX 2080 Ti averages a commanding 87 percent lead over the RX Vega 64. Nvidia also claims the next four slots in performance: the RTX 2080 is 'only' 45 percent faster than the Vega 64, the GTX 1080 Ti is 40 percent faster, the RTX 2070 is 18 percent faster, and even the GTX 1080 is 5 percent faster. But the prices on those RTX cards…
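Each percentage above is measured against the same Vega 64 baseline, so the numbers can't simply be subtracted to compare two Nvidia cards against each other. A minimal sketch, assuming a relative score of 100 for the Vega 64 and deriving the other scores from the leads quoted above (the names `SCORES` and `lead_percent` are our own):

```python
# Relative scores derived from the twelve-game leads quoted above
# (RX Vega 64 = 100, so "87 percent faster" becomes 187).
SCORES = {
    "RTX 2080 Ti": 187,
    "RTX 2080": 145,
    "GTX 1080 Ti": 140,
    "RTX 2070": 118,
    "GTX 1080": 105,
    "RX Vega 64": 100,
}


def lead_percent(fast: str, slow: str) -> float:
    """How much faster `fast` is than `slow`, in percent."""
    return (SCORES[fast] / SCORES[slow] - 1) * 100


print(f"{lead_percent('RTX 2080 Ti', 'RTX 2080'):.1f}%")  # 29.0%
print(f"{lead_percent('RTX 2080', 'GTX 1080 Ti'):.1f}%")  # 3.6%
```

So the RTX 2080 Ti is about 29 percent faster than the RTX 2080, not the 42 points you'd get by subtracting 45 from 87, because the ratio has to be taken against the slower card.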


The RTX 2080 theoretically starts at $699, the same price as the outgoing GTX 1080 Ti, with slightly faster performance in existing games and potentially even better performance in the games of tomorrow. However, the least expensive RTX 2080 at present is $770, about 10 percent above the base MSRP. The RTX 2080 Ti is even worse, with a $999 base MSRP and $1,199 for the Founders Edition, and no RTX 2080 Ti cards are currently in stock. At least $499 RTX 2070 cards are available, and as noted those still lead the fastest AMD cards in overall performance. Meanwhile, AMD's Vega 64 starts at $450 these days, and the only slightly slower RX Vega 56 starts at $380.
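The markup over MSRP is simple arithmetic on the prices quoted above. A quick sketch (the function name `premium_percent` is our own):

```python
def premium_percent(price: float, msrp: float) -> float:
    """How far `price` sits above `msrp`, in percent."""
    return (price - msrp) / msrp * 100


# Cheapest RTX 2080 at retail vs. its $699 base MSRP.
print(f"{premium_percent(770, 699):.1f}%")  # 10.2%
# RTX 2080 Ti Founders Edition vs. the $999 base MSRP.
print(f"{premium_percent(1199, 999):.1f}%")  # 20.0%
```

The same calculation shows the 2080 Ti Founders Edition carrying a 20 percent premium over the card's own base MSRP.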

The above is all well and good if you're going for a top performance card, but far more $200 graphics cards are sold than $500 cards. Nvidia may lead in performance, and even performance per dollar with some of its high-end offerings, but what about mainstream models? Nvidia's GTX 1060 6GB ends up being a bit slower overall than AMD's RX 580 8GB, and AMD's card costs about $20 less on average. The same goes for the RX 570 4GB versus the GTX 1060 3GB, though we'd be hesitant to buy a card with less than 4GB of VRAM these days.
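Performance per dollar is just relative performance divided by price. Here's a rough sketch using the relative scores from our twelve-game average (Vega 64 = 100) and the lowest prices quoted above; the names are our own, and since street prices move constantly, treat the result as a snapshot rather than a ranking.

```python
# (relative performance with RX Vega 64 = 100, lowest quoted price in USD),
# both taken from the figures quoted earlier in this article.
CARDS = {
    "RX Vega 64": (100, 450),
    "RTX 2070": (118, 499),
    "RTX 2080": (145, 770),
}


def points_per_100_dollars(score: float, price: float) -> float:
    """Relative performance points per $100 spent."""
    return score / price * 100


# Sort from best to worst value.
ranked = sorted(
    CARDS.items(), key=lambda kv: points_per_100_dollars(*kv[1]), reverse=True
)
for name, (score, price) in ranked:
    print(f"{name:>10}: {points_per_100_dollars(score, price):.1f} points per $100")
```

On these numbers the RTX 2070 (23.6 points per $100) edges out the Vega 64 (22.2), while the RTX 2080's street-price premium drops it to 18.8, which is why only some of Nvidia's high-end cards lead on value.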

Which brand should you buy?

There's a case to be made for supporting the smaller brand—competition is good, and if we're left with only one option, there's no question what will happen to prices. A quick look at the GeForce RTX launch tells you everything you need to know. But if it comes down to our own wallets? Yeah, most of us aren't going to support a multi-billion-dollar corporation on 'principle.' But let's look at things a bit more closely.

There are instances where one brand of GPUs holds a commanding lead in performance. We cover many of the major game launches in our performance analysis articles, looking at a wide selection of graphics cards and processors. But most people play a variety of games, so overall performance averages and value become the driving factors. At present, the breakdown is pretty straightforward—we've already covered some of it above.


Nvidia owns the high-end GPU market. For any graphics card costing $350 or more, Nvidia typically wins in value and performance, and Nvidia's GPUs are more efficient at each price point. GTX 1070 Ti may trade blows with RX Vega 56, but it takes the overall performance lead, costs a bit less, and uses about 50W less power under load. Go higher up the price and performance ladder and AMD doesn't even have a reasonable option. The GeForce RTX cards are presently in a league of their own as far as performance goes.

The midrange market is thankfully far more competitive. Now that GPU prices are settling back to normal following the cryptocurrency mining boom of early 2018, AMD's RX 570 and RX 580 beat Nvidia's GTX 1060. AMD still loses in overall efficiency, drawing 30-50W more power, but at least you're getting better performance and a lower price. There are games where Nvidia leads in performance (eg, Assassin's Creed Origins and Odyssey, Grand Theft Auto 5, and Total War: Warhammer 2), so it's not a clean sweep, but AMD is a great choice for midrange gaming PCs.

The budget category also looks a lot like the midrange market. The GTX 1050 Ti is faster than the RX 560 4GB, but it costs as much as an RX 570 4GB, a card it can't hope to beat. The GTX 1050, meanwhile, typically trails the RX 560 4GB by a small margin, thanks in part to only having 2GB of VRAM. If you're looking for the best graphics card in the $100-$125 range, AMD currently claims the budget crown as well.

Nvidia holds a commanding lead in overall GPU market share.

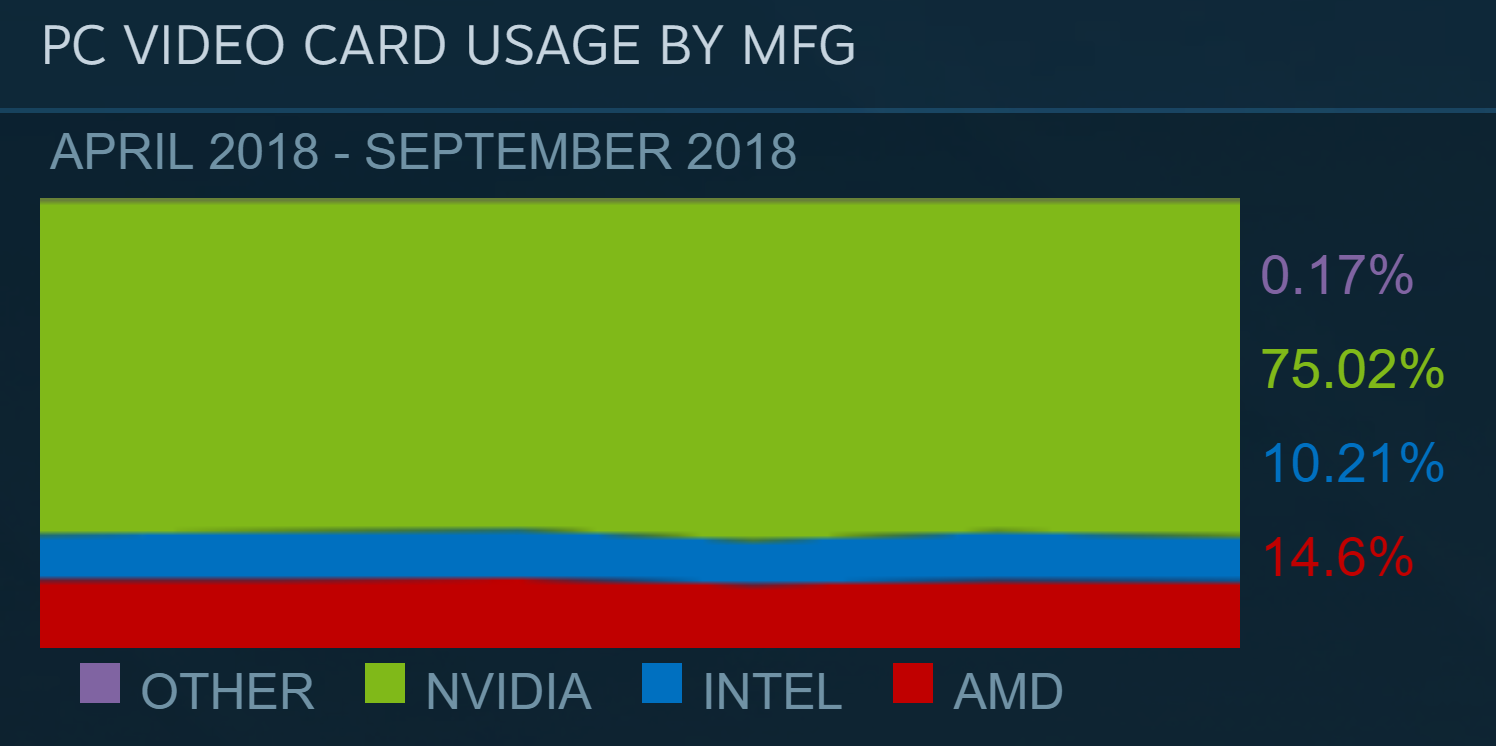

Frankly, we're quite happy to see AMD take the lead in these categories, because there are theoretically far more budget and midrange gamers than high-end gamers. However, that doesn't stop Nvidia from holding a commanding lead in overall GPU market share. According to the latest Steam hardware survey—probably as good a snapshot as we're going to get of the PC gaming community—the results are sobering: 75 percent Nvidia, 15 percent AMD, and 10 percent Intel. Ouch. But it gets worse.

We narrowed down the focus to 'recent' DirectX 12 compatible GPUs—AMD HD 7800 and above, and GTX 760 and above, removing Intel and other slow and/or old GPUs from the mix. With that filter in place, AMD's PC gaming market share drops to a bit more than 7 percent, with Nvidia at 93 percent. Double ouch. Take things a step further and look at AMD's RX and later GPUs compared to Nvidia's GTX 900 and 1000 series parts and Nvidia's market share grows to 97 percent.
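Filtering a survey works by dropping categories and rescaling what's left to 100 percent. A minimal sketch using the overall Steam figures quoted above (the function name `renormalize` is our own):

```python
def renormalize(shares: dict[str, float], keep: set[str]) -> dict[str, float]:
    """Drop vendors not in `keep` and rescale the rest to sum to 100 percent."""
    kept = {vendor: share for vendor, share in shares.items() if vendor in keep}
    total = sum(kept.values())
    return {vendor: share / total * 100 for vendor, share in kept.items()}


steam = {"Nvidia": 75.0, "AMD": 15.0, "Intel": 10.0}
no_intel = renormalize(steam, {"Nvidia", "AMD"})
print({vendor: round(share, 1) for vendor, share in no_intel.items()})
# {'Nvidia': 83.3, 'AMD': 16.7}
```

Dropping Intel alone only gets Nvidia to about 83 percent. The rest of the shift to 93 percent comes from the age filter, which means a larger fraction of the AMD cards in the survey were older models that didn't make the cut.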

The 15 most popular DX12 GPUs all come from Nvidia, and AMD's Vega doesn't even show up on the charts. That means it falls into the nebulous 'other' category, composed of individual products that each account for less than 0.3 percent of all sampled products. If ever there was a time to buy an AMD GPU to support the underdog, it's now, at least if you're looking at a budget or midrange card.

AMD is set to release updated mainstream and budget graphics cards in the coming months, which will hopefully increase its share of new GPU sales. We're also still recovering from the more recent cryptocurrency craze, which likely ate up a huge chunk of AMD GPU sales. If the current trends continue, our only hope for future competition may be Intel's GPUs in 2020, so an AMD resurgence would be welcome. AMD plays an important role in the industry, and while we often recommend Nvidia cards, that doesn't mean we have any interest in seeing an Nvidia monopoly.

Jarred's love of computers dates back to the dark ages when his dad brought home a DOS 2.3 PC and he left his C-64 behind. He eventually built his first custom PC in 1990 with a 286 12MHz, only to discover it was already woefully outdated when Wing Commander was released a few months later. He holds a BS in Computer Science from Brigham Young University and has been working as a tech journalist since 2004, writing for AnandTech, Maximum PC, and PC Gamer. From the first S3 Virge '3D decelerators' to today's GPUs, Jarred keeps up with all the latest graphics trends and is the one to ask about game performance.