The most game-changing graphics cards in PC gaming history

These are the graphics cards that shaped PC gaming over the past three decades.

Sometimes it's easy to forget about where we came from in PC gaming, especially while we're gazing into our crystal balls for any glimpse of graphics cards future. But there's actually a lot that we can glean from the annals of GPU history: the colossal leaps in power and performance that GPUs have taken in under 25 years go some way to explaining why today's best graphics card costs so much.

You have to walk before you can run, and just like any trepidatious toddler there were many attempts to nail an image resolution of just 800 x 600 before anyone could dream up rendering the pixel count required to run the best PC games at 4K (that's 480,000 pixels per frame vs. 8,294,400, by the way).
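If you want to check that pixel maths yourself, here's a minimal sketch. It assumes "4K" means the consumer 3840 x 2160 standard, and the function name is just an illustrative choice of ours.

```python
def pixels_per_frame(width: int, height: int) -> int:
    """Return the number of pixels in a single frame at the given resolution."""
    return width * height


# 800 x 600, the resolution early 3D accelerators were chasing.
svga = pixels_per_frame(800, 600)

# 4K, assumed here to mean 3840 x 2160 (consumer UHD).
uhd_4k = pixels_per_frame(3840, 2160)

print(f"800 x 600:   {svga:,} pixels per frame")
print(f"3840 x 2160: {uhd_4k:,} pixels per frame")
print(f"4K pushes roughly {uhd_4k / svga:.0f}x the pixels of 800 x 600")
```

By that count, a single 4K frame holds roughly 17 times as many pixels as an 800 x 600 one.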

Yet you'd also be surprised by just how many features so prevalent in modern GPUs were first introduced back at the dawn of the industry.

Take SLI, for example. It might be on its way out now but dual-wielding graphics cards played an important role in the past decade of GPU performance. And would you believe it was possible for a PC user to connect two cards together for better performance way back in 1998? But let's start right at the beginning—when active cooling was optional and there were chips aplenty.

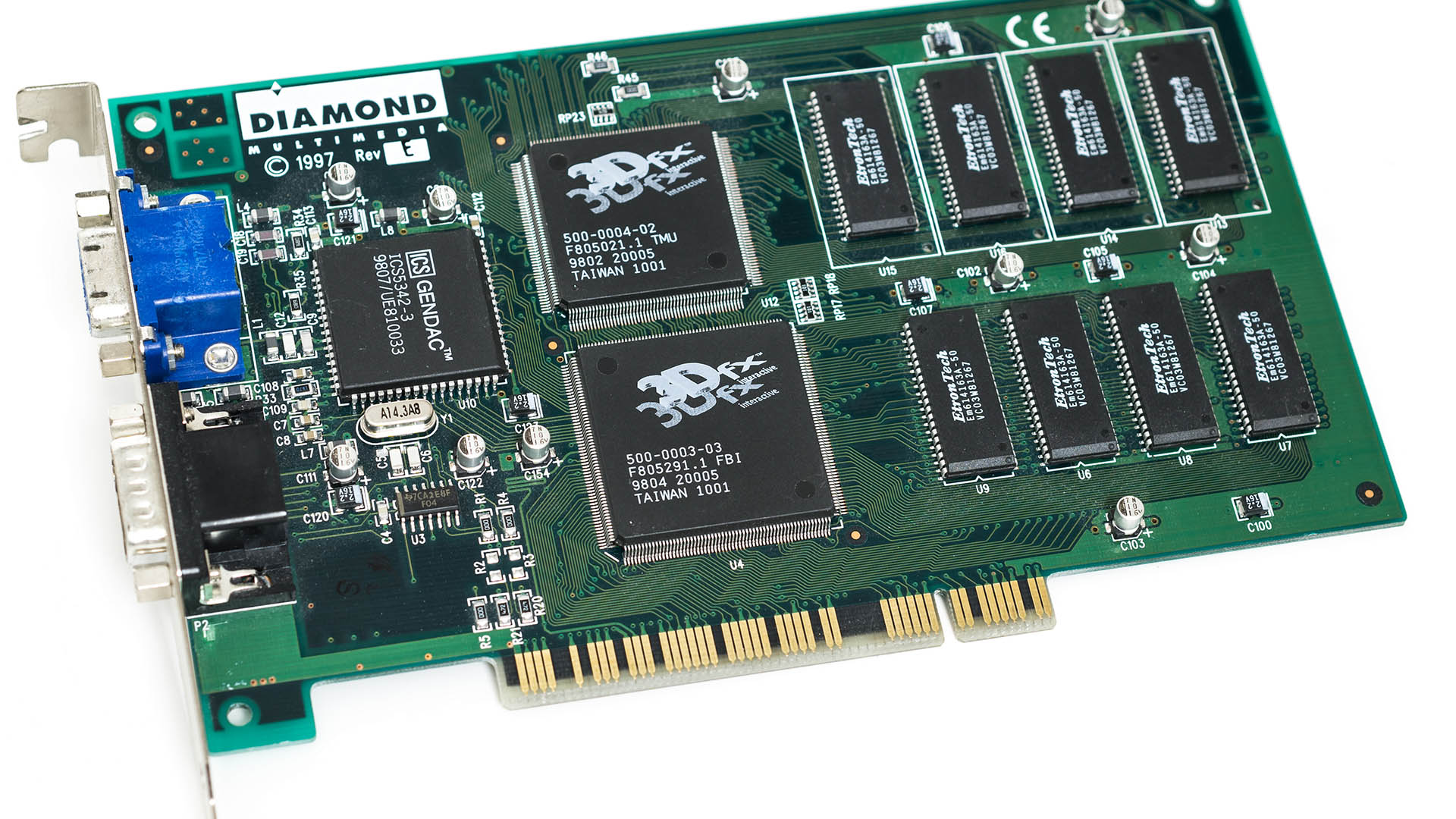

3dfx Voodoo

Year: 1996 | Clock speed: 50MHz | Memory: 4/6MB | Process node: 500nm

It's March, 1996—England is knocked out of the Cricket World Cup by Sri Lanka, a young boy celebrates his fourth birthday (that's me), and 3dfx releases the first of what would be a couple of game-changing graphics cards: the Voodoo.

Clocked at just 50MHz and fitted with a whopping 4/6MB of total RAM, the Voodoo was clearly the superior card for 3D acceleration at the time. The top spec could handle an 800x600 resolution, but the lower spec was capable of only 640x480. Despite its 2D limitations, it would prove a highly successful venture, and set 3dfx on a trajectory into PC gaming fame—and eventually into Nvidia's pocket.

Note: the 3dfx Voodoo is often referred to as the Voodoo1, although that name only caught on after the release of the Voodoo2.

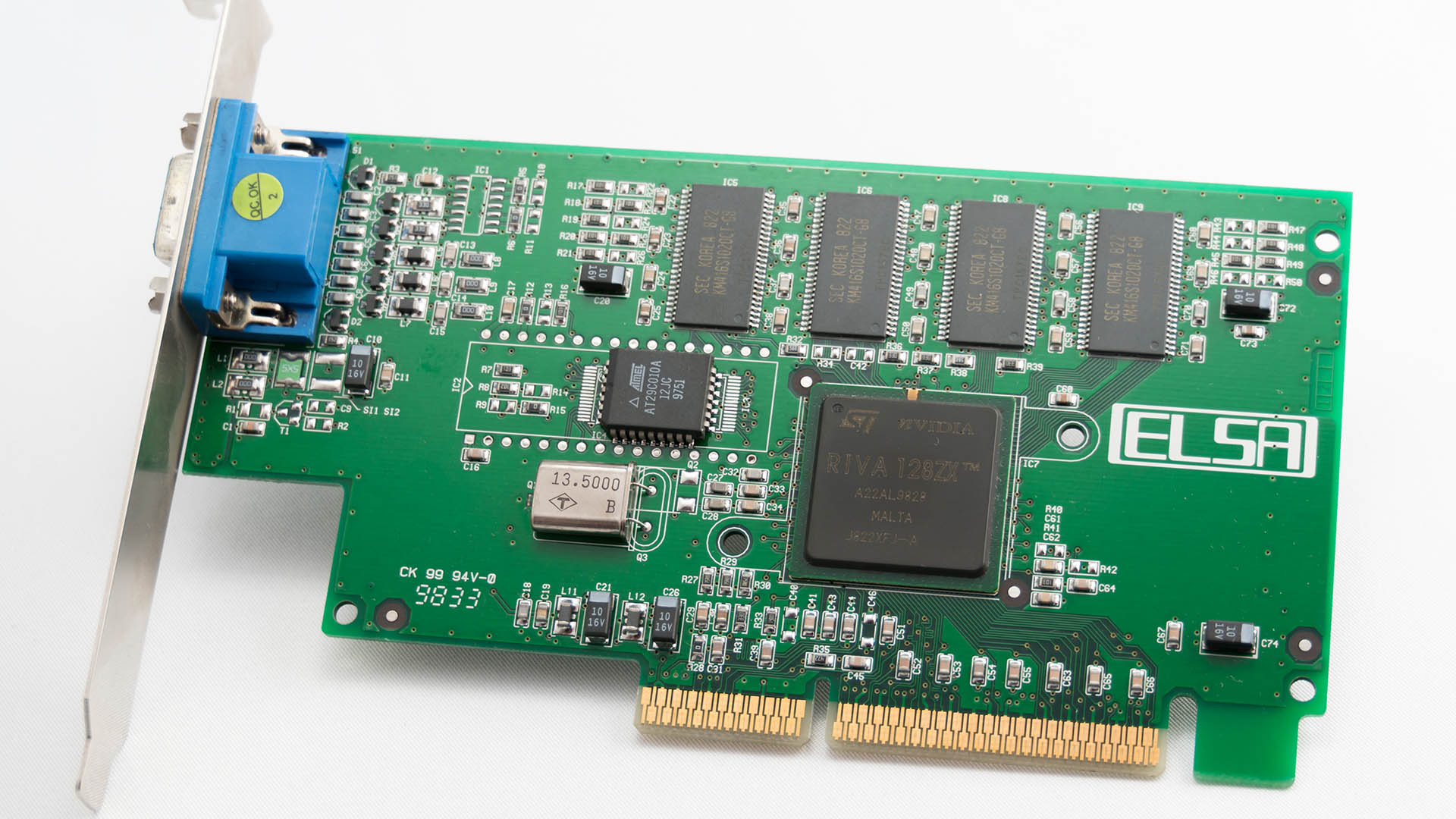

Nvidia Riva 128

Year: 1997 | Clock speed: 100MHz | Memory: 4MB | Process node: SGS 350nm

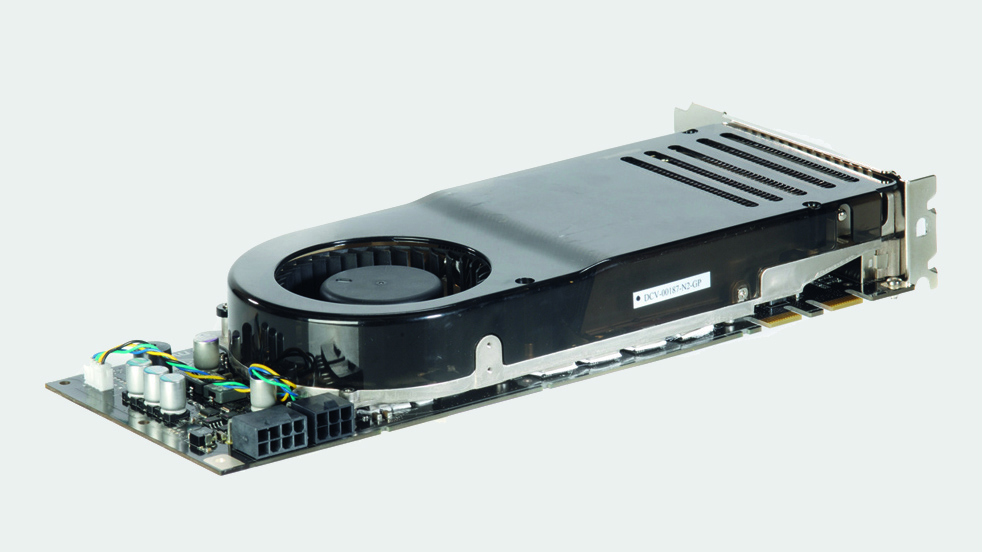

Dave James: I've gamed on more graphics cards than I can remember. My first Voodoo2 was transformative, the Riva TNT was ace, and I've since had twin Titans and dual-GPU Radeon cards in my home rigs, but none hold so dear a place in my heart as the glorious 8800GT. Forget the monstrous 8800GTX, the simple GT combined stellar performance, great looks, and incredible value. I've still got my single-slot, jet-black reference card (pictured above), and will never part with it.

A chipset company by the name of Nvidia would soon offer real competition to 3dfx in the form of the Nvidia Riva 128, or NV3. The name stood for 'Real-time Interactive Video and Animation', and it integrated both 2D and 3D acceleration into a single chip for ease of use. It was a surprisingly decent card following the Nvidia NV1, which had tried (and failed) to introduce quadratic texture mapping.

This 3D accelerator doubled the initial spec of the Voodoo1 at 100MHz core/memory clock, and came with a half-decent 4MB SGRAM. It was the first to really gain traction in the market for Nvidia, and if you take a look at the various layouts—memory surrounding a single central chip—you can almost make out the beginnings of a long line of GeForce cards, all of which follow suit.

But while it offered competition to 3dfx's Voodoo1, and higher resolutions, it wasn't free of its own bugbears, and neither would it be alone in the market for long before 3dfx issued a response in the Voodoo2.

Pictured above is the Nvidia Riva 128 ZX, a slightly refreshed take on the original chip.

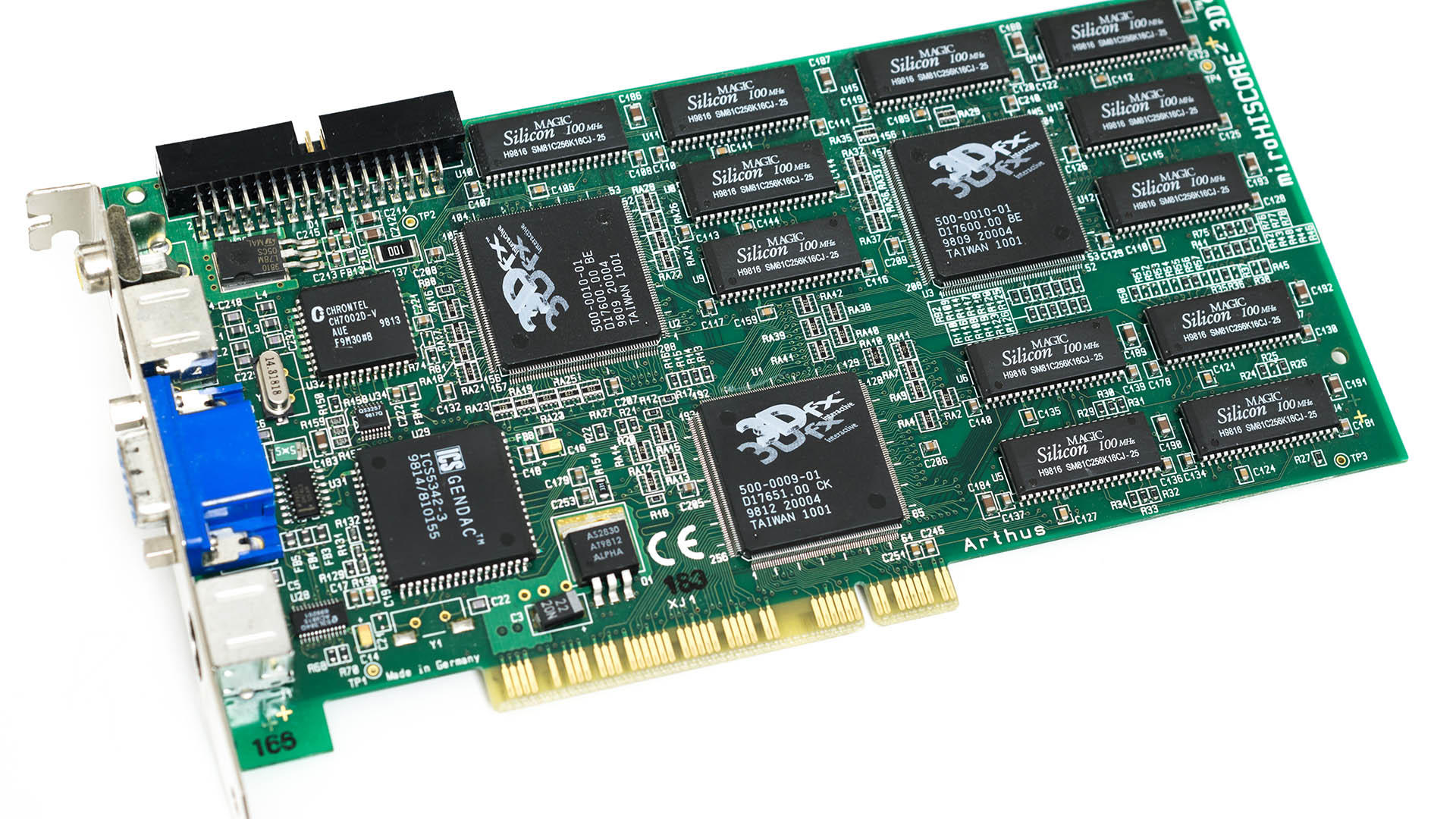

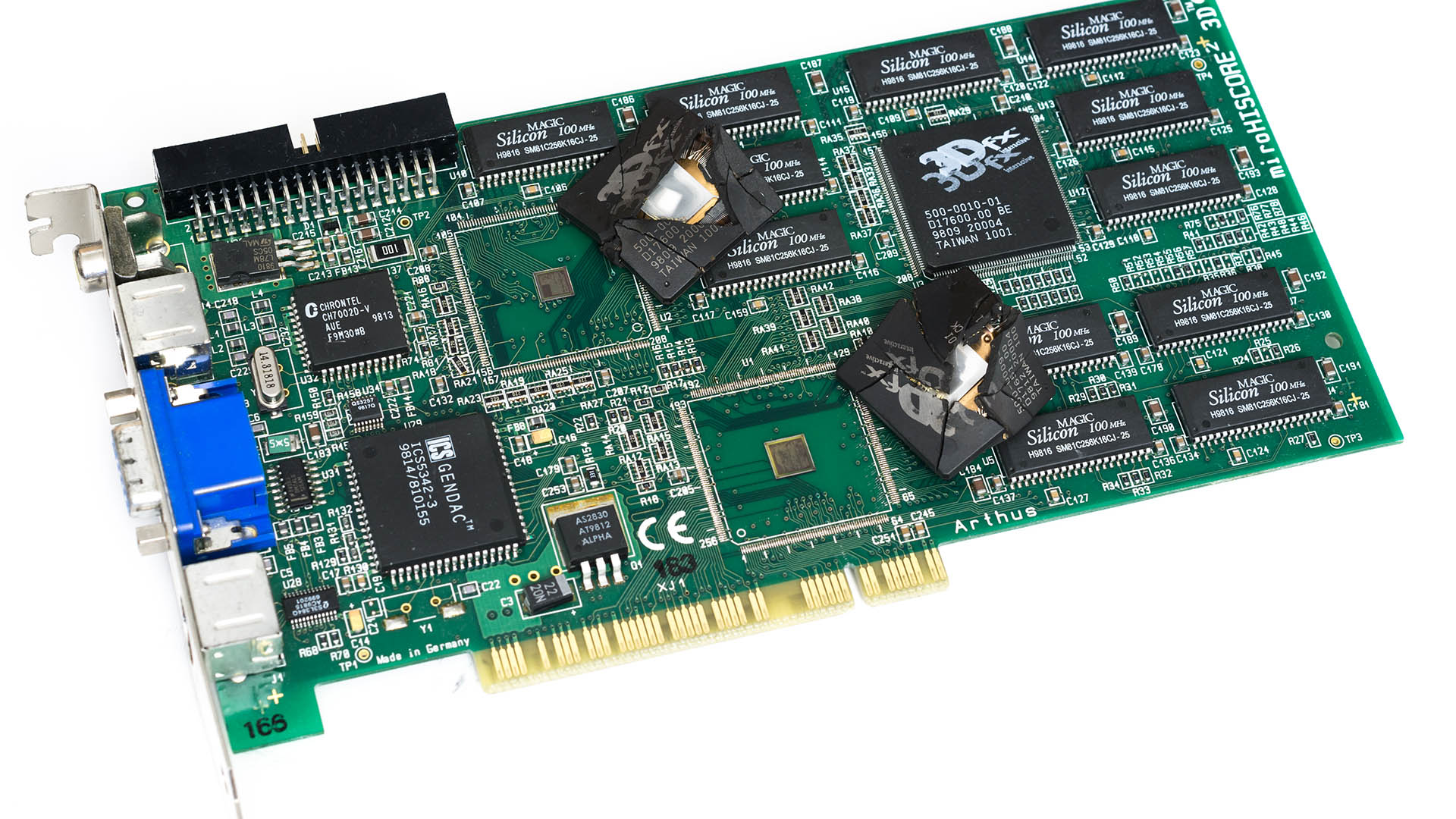

3dfx Voodoo2

Year: 1998 | Core clock speed: 90MHz | Memory: 8/12MB | Process node: 350nm

Now this is a 3D accelerator that requires no introduction. Known far and wide for its superb performance at the time, the Voodoo2 is famed for its lasting impact on the GPU market.

A smorgasbord of chips, the Voodoo2 featured a 90MHz core/memory clock, 8/12MB of RAM, and supported SLI. Once connected via a port on twinned cards, a pair of Voodoo2s could support resolutions up to 1024 x 768.

3dfx managed to stay on top with the Voodoo2 for some time, but it wasn't long until the company would make a few poor decisions and be out of the graphics game entirely.

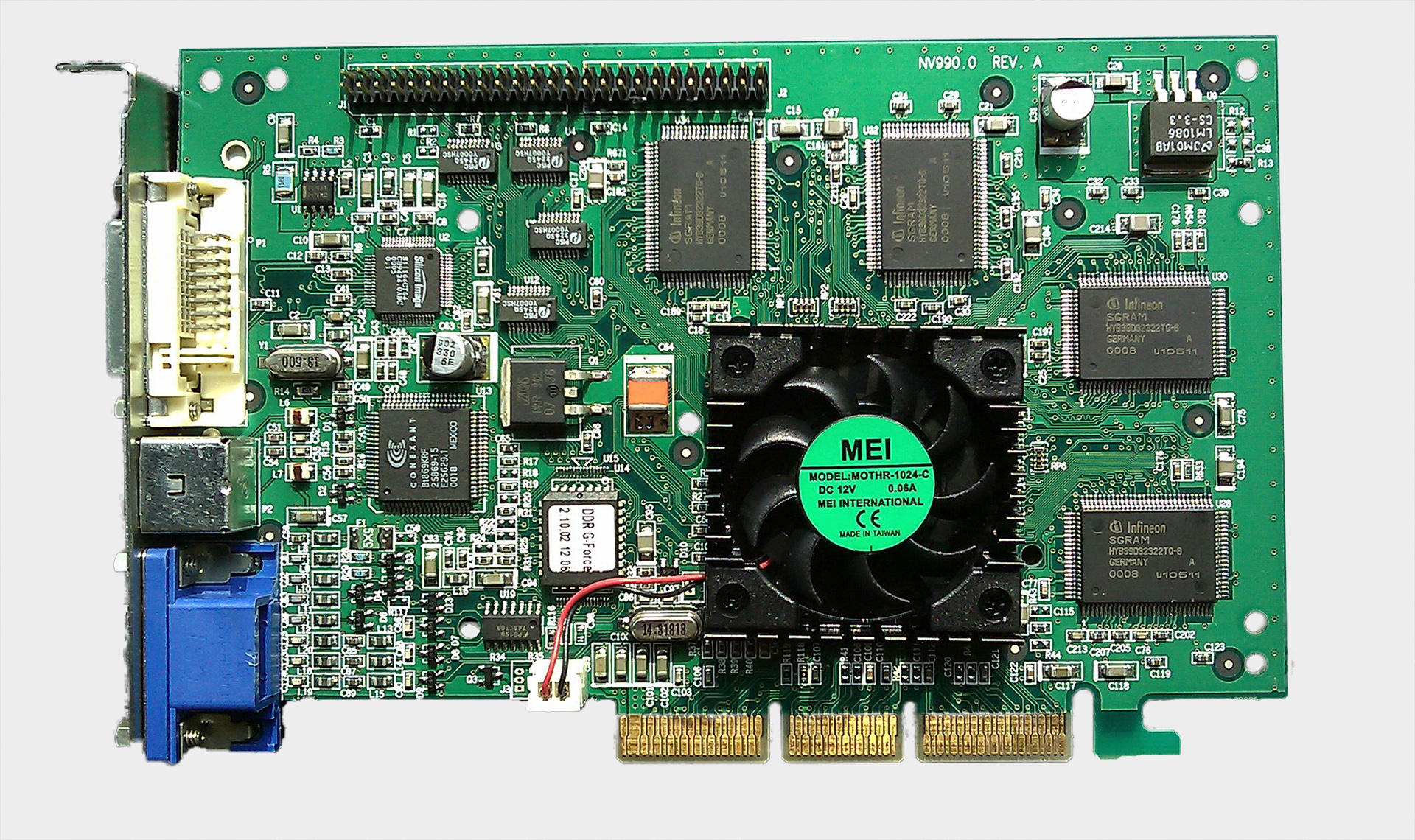

Nvidia GeForce 256

Year: 1999 | Core clock speed: 120MHz | Memory: 32MB DDR | Process node: TSMC 220nm

The first card to bear the GeForce name still in use today, the GeForce 256 was also the 'world's first GPU'. 'But what about the Voodoos and the Rivas?', I hear you ask. Well, clever marketing on Nvidia's part has the GeForce 256 stuck firmly in everyone's minds as the progenitor of modern graphics cards, but it was really just the name Nvidia gave its single-chip solution: a graphics processing unit, or GPU.

Paul Lilly: You always remember your first, and for me, it was the Voodoo2, which found a home in an overpriced Compaq I bought from Sears (before I started building my own PCs). But it's the Gainward GeForce4 Ti 4200 I bought several years later that stands out as one of my best GPU purchases. It arrived a couple of months after Nvidia launched the Ti 4600 and 4400, and quickly cemented itself as the clear bang-for-buck option over those two pricier models. It was fast, comparatively affordable, and dressed in a bright red PCB.

As you can probably tell, this sort of grandiose name, a near-parallel to the central processing unit (CPU) that had been raking in ungodly wads of cash since the '70s, was welcomed across the industry.

That's not to say the GeForce 256 wasn't a worthy namesake, however: it integrated acceleration for transform and lighting into the newly minted GPU, alongside a 120MHz clock speed and 32MB of DDR memory (for the high-end variant). It also fully supported Direct3D 7, which would allow it to enjoy a long lifetime powering some of the best classic PC games released at that time.

Here's an excerpt from a press release on integrated transform and lighting that Nvidia released that year:

"The developers and end-users have spoken, they're tired of poor-quality artificial intelligence, blocky characters, limited environments and other unnatural looking scenes," said David Kirk, chief technologist at Nvidia. "This new graphics capability is the first step towards an interactive Toy Story or Jurassic Park."

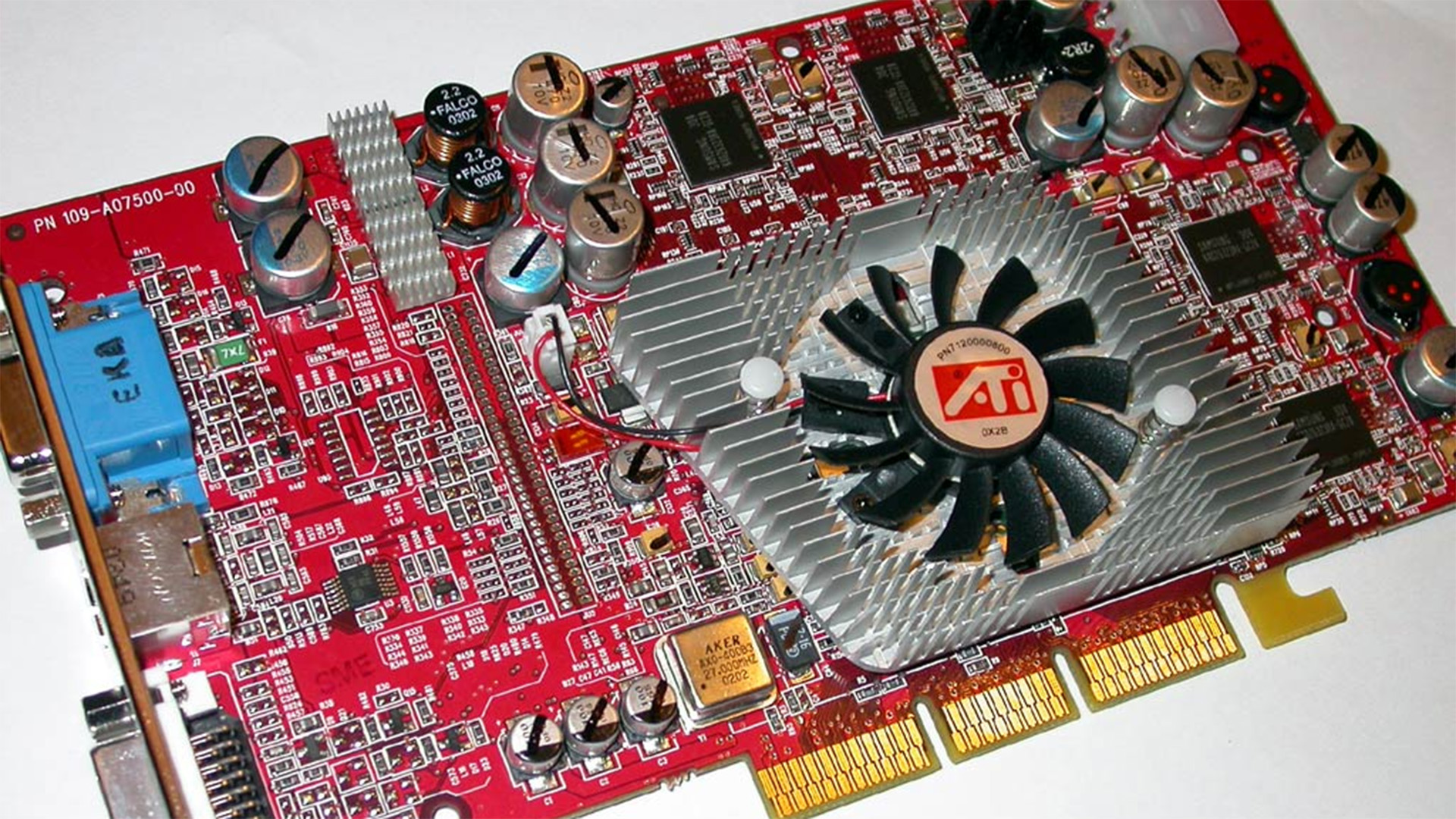

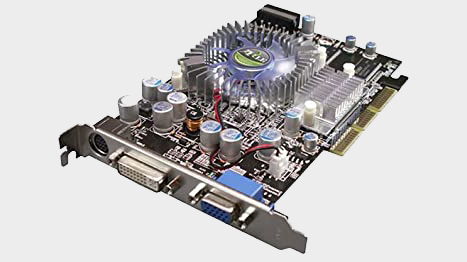

ATI Radeon 9800 Pro

Year: 2003 | Core clock speed: 380MHz | Memory: 128MB/256MB DDR2 | Transistors: 117 million | Process node: TSMC 150nm

Alright, I hear you. The ATI Radeon 9800 Pro absolutely dominated in its era and easily deserves a spot on this list. This card came from a company that hadn't managed a major splash in the GPU market for a couple of years, and decided to make up for it with a graphics card that was "simply breathtaking," according to a Guru3D review at the time.

Based on the R350 GPU, this card followed on from the already successful launch of ATI's latest R300 GPUs, namely the Radeon 9700 and Radeon 9500, both of which would impress at the time. The latter was especially handy for some, as you could flash the cheaper chip and turn it into a more performant card. Oh, those were the days.

This entire architectural generation would see ATI beating Nvidia at pretty much every turn, and a wide memory bus and significant performance with growing anti-aliasing technologies saw it stay that way for a while.

"If you want the best out today, look no further than the Radeon 9800 Pro; it's quiet, faster, occupies a single slot, and will enjoy much wider availability than the GeForce FX," wrote Anand of Anandtech fame in 2003.

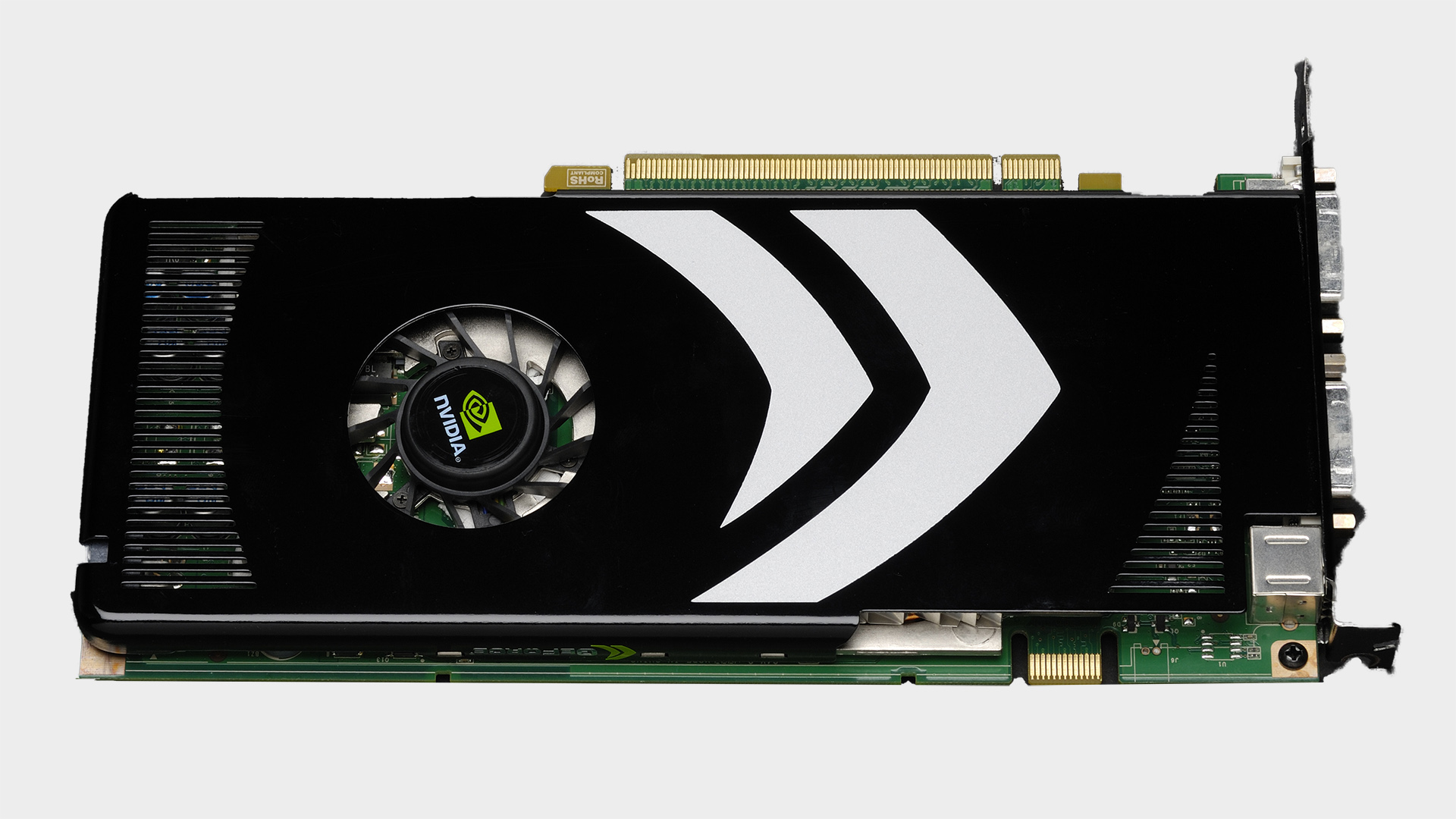

Nvidia GeForce 8800 GTX

Year: 2006 | Core clock speed: 575MHz | Memory: 768MB GDDR3 | Transistors: 681 million | Process node: TSMC 90nm

Once Nvidia rolled out the GeForce 8800 GTX, there was no looking back. If you want to talk about a card that really got people's attention, and threw aside all regard for a compact size, it's the GeForce 8800 GTX.

With 128 Tesla cores inside the much-anticipated G80 GPU, and 768MB of GDDR3 memory, the 8800 isn't an unfamiliar sight for a modern GPU shopper—even if it might be a little underpowered by today's standards. It ruled over the GPU market at launch, and stuck around for some time afterwards thanks to a unified shader model introduced with the architecture and Direct3D 10. And it could run Crysis.

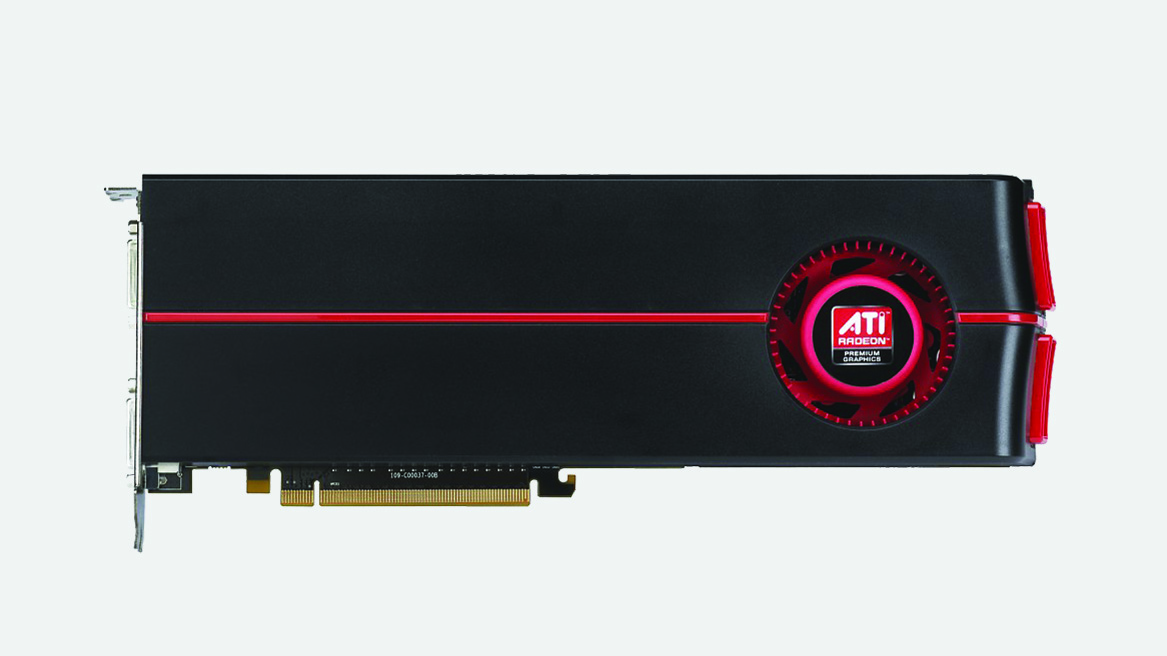

ATI Radeon HD 5970

Year: 2009 | Core clock speed: 725MHz | Memory: 2,048MB GDDR5 | Transistors: 4,308 million | Process node: 40nm

And what's AMD been doing all this time? Semiconductor company ATI was busy building heaps of bespoke console chips right the way through the '90s and early 2000s, and made some excellent GPUs in its own right, such as the X1900 XTX. It was later purchased by AMD in 2006 and the Radeon brand was gently incorporated under the AMD umbrella. After the abortive HD 2000 and 3000 series, the HD 4870 and 4850 were quality cards, but the one which made the biggest splash after the move was the Radeon HD 5970.

The Radeon 5970 was essentially a large Cypress GPU, 1,024MB pool of memory, and sizeable 256-bit memory bus… multiplied by two. Yep, this dual-GPU enthusiast card was doubly good due to its doubled component count, and it used all that power to lord over the gaming landscape.

The tradition of stuffing two chips into one shroud continued right the way up to the AMD Radeon R9 295X2 and the Nvidia Titan Z. But once multi-GPU support started dwindling, single cards became the predominant form factor. Furthermore, with multi-GPU support entirely in the developers' court due to the introduction of DirectX 12, they may never make a return.

Nvidia GTX Titan

Year: 2013 | Core clock speed: 837MHz | Memory: 6,144MB GDDR5 | Transistors: 7,080 million | Process node: TSMC 28nm

Nvidia released the original GTX Titan back in 2013, and at the time it was intended to sit atop the gaming world as the pinnacle of performance. And arguably it was the first ultra-enthusiast consumer card to launch.

The GTX Titan came with 2,688 CUDA Cores, and could boost up to 876MHz. The memory, a whopping 6GB of GDDR5, remains more capacious than even some of the best graphics cards sold today, and with a 384-bit bus it was capable of keeping up with just about anything at the time.

Nvidia threw sense out of the window with the original GTX Titan, and it was enough to get anyone's blood pumping. A single GPU with a state-of-the-art architecture that could tear through the latest games—suddenly two cards became an unnecessary nuisance.

Nvidia GeForce GTX 1080 Ti

Year: 2017 | Core clock speed: 1,480MHz | Memory: 11GB GDDR5X | Transistors: 12bn | Process node: TSMC 16nm

There's no overlooking the Pascal architecture, and its crowning glory, the GTX 1080 Ti, when discussing the most influential graphics cards to date. Pascal was a truly dominant high-end architecture from the green team, and the company's efforts in improving performance per watt up to that point came together to create one of the finest cards that we're still enamoured with today.

At the time, the 250W GTX 1080 Ti was capable of dominating the field in gaming performance. It crushed the Titan X Pascal across the board, and even when Nvidia finally put together the faster $1,200 Titan Xp sometime later, the $699 GTX 1080 Ti damn near crushed that one, too—in performance per dollar, it was the obvious best pick.

AMD Radeon RX 580

Year: 2017 | Core clock speed: 1,257MHz | Memory: 4GB/8GB GDDR5 | Transistors: 5.7bn | Process node: GlobalFoundries 14nm

Alan Dexter: I've always had a fondness for budget graphics cards, and the release that stands out for me is the GeForce 6600GT. A true budget offering ($200 at launch), it had an ace up its sleeve in the shape of SLI support. So while you could happily just about get by with one card, sliding another identical card into your system almost doubled performance (except when it didn't... hey, SLI was never perfect).

It would be remiss of me not to mention the RX 580, and by extension the RX 480 it was built on, in this round-up. This highly-affordable Polaris GPU was my personal go-to recommendation for anyone looking to build their first PC for 1080p gaming.

Sitting a touch above the RX 570, which eventually helped redefine entry-level desktop gaming performance, the RX 580 is the epitome of AMD's fine-wine approach. Yeah, forget the faux Vega vintage, it was the Polaris GPUs that only ever got cheaper and more performant as time went on.

The RX 580 has done more for PC gaming affordability than most graphics cards today. Hell, it was such a superb card even the crypto-miners couldn't get enough. It's the standard by which all further AMD and Nvidia mainstream GPUs were to be judged, and we're all the better for it.

Nvidia GeForce RTX 2080

Year: 2018 | Core clock speed: 1,710MHz | Memory: 8GB GDDR6 | Transistors: 13.6B | Process node: TSMC 12nm

In a pretty short period of time, the Turing architecture powering the RTX 2080 was replaced by much, much more powerful stuff. Ampere and Ada Lovelace make mincemeat of Turing today, and that's despite Turing introducing two of the things that make Ampere and Ada so great: ray tracing and AI-powered upscaling.

Without Turing, you wouldn't have DLSS Frame Generation, or DLSS whatsoever, and you wouldn't have today's impressive ray tracing acceleration. That's not to say without Nvidia no one would've ever introduced these two concepts to PC gaming, but the green team did a whole lot of work getting us to where we are today and getting these technologies more widely adopted. And that began with the RTX 20-series.

Nowadays Nvidia, AMD, and even new-starter Intel have ray tracing acceleration and upscaling tech, and you can pinpoint the RTX 2080 launch as the start, albeit a slow one, of a very positive trend for PC gaming performance.

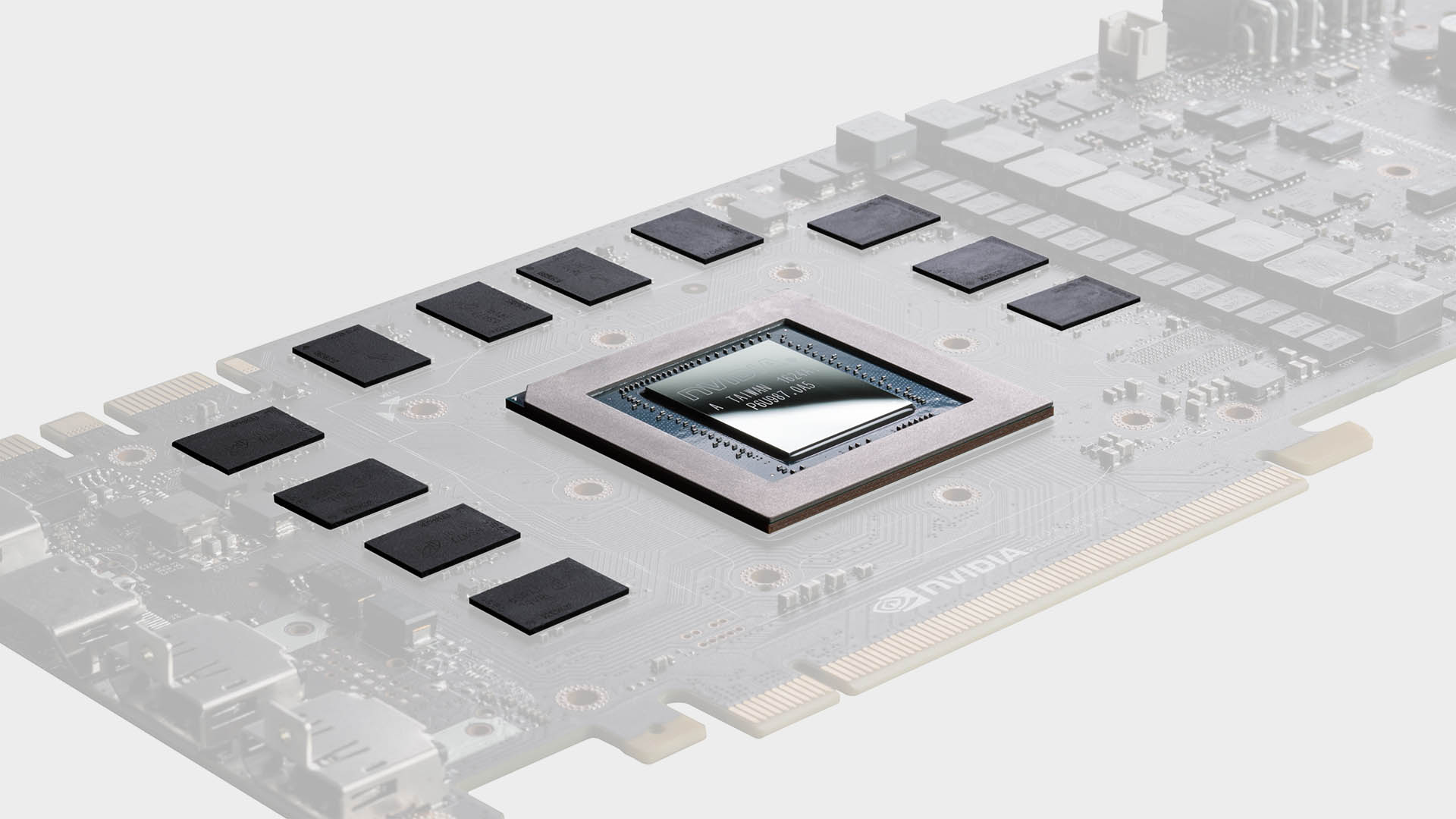

Nvidia GeForce RTX 4090

Year: 2022 | Core clock speed: 2,520MHz | Memory: 24GB GDDR6X | Transistors: 76.3B | Process node: TSMC 5nm

Every time I look at this list I struggle to decide whether to include today's 'top' graphics card. I know as well as you know that what's good today will be made to look inferior with the next generation, and you should never buy any GPU thinking it will be the bee's knees forever and ever (unless something drastic happens globally, anyways).

But the RTX 4090 really is an absolute monster, and while we all expected that to be true of Nvidia's top RTX 40-series card, just how mega the RTX 4090 was when it did launch took us all by surprise.

Even if you remove the massive performance uplift available from DLSS Frame Generation on the 4090, it's monumentally faster than the RTX 3090. In our RTX 4090 review, it at times mustered frame rates some 61–91% faster at 4K. It's stupendous, as is the size of this thing. It's comedically large.

Honourable mention: AMD's Radeon RX 7900 XTX deserves a mention in this list, not for how influential it is today (not very), but as the precursor to much more of the same in the future. This card and its smaller sibling, the RX 7900 XT, are the first chiplet-based gaming GPUs.

For now, AMD has stuck with a single lump of processing transistors in what it calls the GCD (Graphics Compute Die). This is where all the shader, matrix acceleration, and ray tracing cores live. Outside of this chip, AMD's divided out the memory subsystems and Infinity Cache onto what it calls MCDs (Memory Cache Dies), with up to six of them per graphics card.

Right now, this chiplet-based approach is a light touch and these cards still struggle to match the best of Nvidia's monolithic RTX 40-series, such as the RTX 4090 above. Hence why it's not really a game-changer proper. But you can be sure that the RX 7900 XTX and XT are the start of a big trend in graphics cards.

Jacob earned his first byline writing for his own tech blog. From there, he graduated to professionally breaking things as hardware writer at PCGamesN, and would go on to run the team as hardware editor. He joined PC Gamer's top staff as senior hardware editor before becoming managing editor of the hardware team, and you'll now find him reporting on the latest developments in the technology and gaming industries and testing the newest PC components.