ORNL's Summit is the most powerful supercomputer in the world

It's also a deep learning and AI monster, breaking the exascale barrier.

Late last week, just as Computex was wrapping up and right before the madness that is E3 began, Oak Ridge National Laboratory brought the Summit supercomputer online. This marks the return of the US to the top of the supercomputing performance ladder, at least for the time being, and a second, closely related supercomputer, Sierra, is in the works at Lawrence Livermore National Laboratory. Let's talk specs for just a moment.

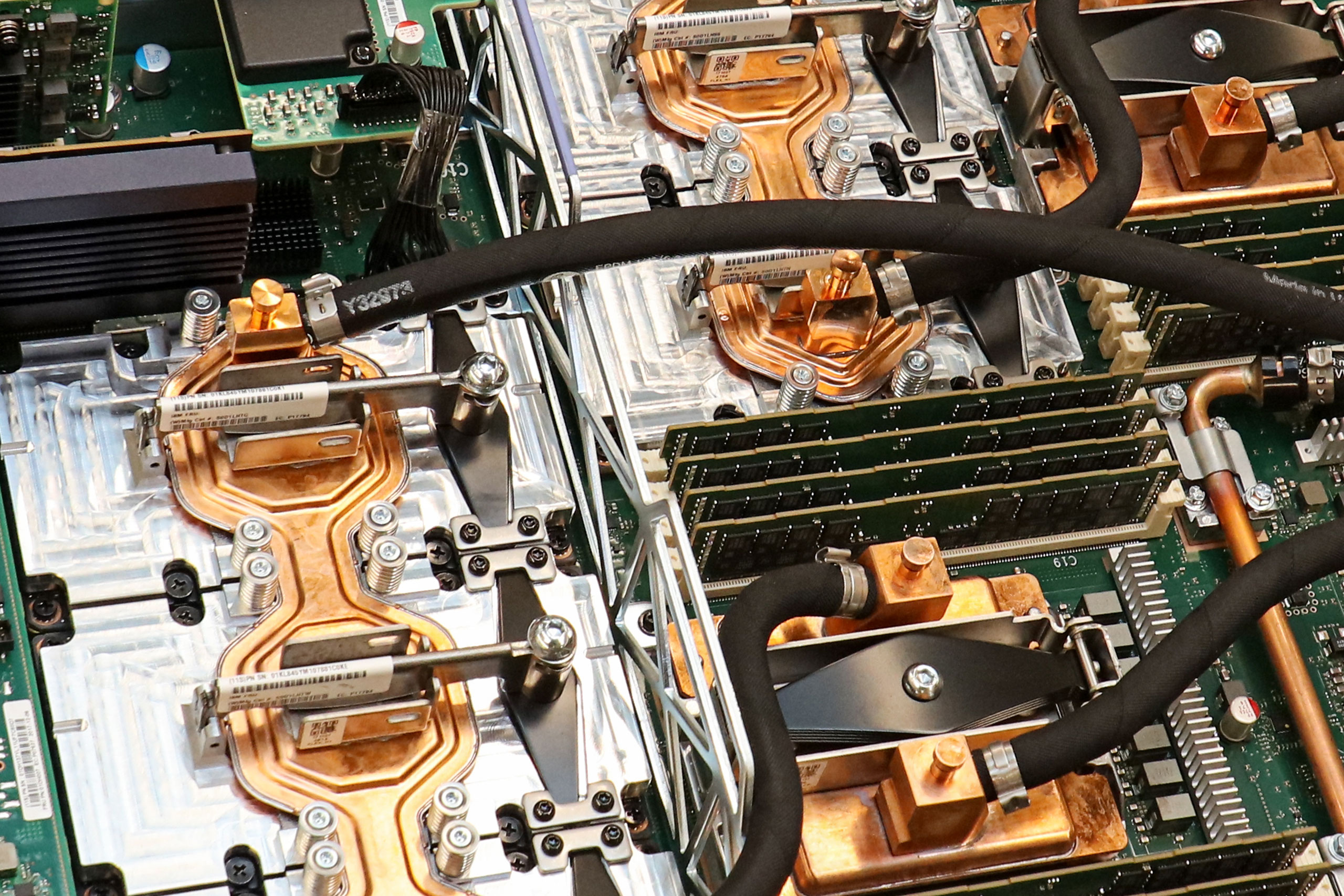

Summit has 4,608 nodes in total, connected via dual-rail Mellanox EDR 100Gbps InfiniBand adapters. Each node can be viewed as a unified memory space, including CPU memory, GPU memory, and even non-volatile memory. It's not clear what sort of non-volatile memory is in use here. Optane DC PM would be ideal, but I don't think the platform supports it. In total, Summit houses more than 10PB of memory across system RAM, GPU memory, and non-volatile memory.

The nodes are built on IBM's AC922 systems, which use a hybrid CPU-GPU architecture. Each node has two 22-core Power9 CPUs, and whereas Intel's Hyper-Threading runs two threads per core, Power9 runs up to four. That means 44 cores and 176 threads per node, and while clockspeed wasn't precisely detailed, Power9 is supposed to run at up to 4.0GHz. Nvidia lists per-node performance at 49 teraflops of double-precision FP64 (i.e., what Linpack measures), the majority of which comes from the GPUs. The Power9 CPUs should still provide around 650 gigaflops each, possibly more depending on clockspeed.
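As a rough cross-check of the node figures, here is a small tally of hardware threads and peak FP64 per node. It assumes Nvidia's published 7.8 teraflops FP64 peak for an NVLink (SXM2) Tesla V100 and reuses the ~650 gigaflops per Power9 estimate above; neither is an official Summit number, so treat the result as a sketch.

```python
# Rough per-node tally for a Summit (IBM AC922) node.
# Assumptions: 7.8 TFLOPS FP64 per V100 is Nvidia's published SXM2 peak;
# 0.65 TFLOPS per Power9 is the article's estimate, not an ORNL figure.
CPUS_PER_NODE = 2
CORES_PER_CPU = 22
THREADS_PER_CORE = 4  # Power9 SMT4, vs. two threads per core with Hyper-Threading

GPUS_PER_NODE = 6
V100_FP64_TFLOPS = 7.8
POWER9_FP64_TFLOPS = 0.65

threads = CPUS_PER_NODE * CORES_PER_CPU * THREADS_PER_CORE
gpu_tflops = GPUS_PER_NODE * V100_FP64_TFLOPS
cpu_tflops = CPUS_PER_NODE * POWER9_FP64_TFLOPS
node_tflops = gpu_tflops + cpu_tflops

print(f"threads per node: {threads}")
print(f"FP64 per node: {node_tflops:.1f} TFLOPS ({gpu_tflops / node_tflops:.0%} from GPUs)")
```

Under these assumptions a node lands at roughly 48 teraflops with about 97% coming from the GPUs, a little under Nvidia's quoted 49 teraflops, which is consistent with the CPUs possibly running faster than the estimate.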

ORNL hasn't released a Linpack score yet, but based on the per-node performance it should be in the 200 petaflop range. The theoretical peak works out to 4,608 × 49 teraflops ≈ 226 petaflops, though actual Linpack results land somewhat below peak. And again, that's for FP64 operations, which are great for complex scientific calculations but overkill for certain types of work. Which brings me to the final element, the GPUs.
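The peak estimate is just node count times Nvidia's per-node figure; a minimal sketch of that multiplication:

```python
# System-level FP64 theoretical peak from the per-node figure quoted by Nvidia.
NODES = 4_608
NODE_FP64_TFLOPS = 49

peak_tflops = NODES * NODE_FP64_TFLOPS
peak_pflops = peak_tflops / 1_000
# This is Rpeak; a real Linpack run (Rmax) reports a lower number.
print(f"theoretical FP64 peak: {peak_pflops:.1f} PFLOPS")
```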

Each node packs six Tesla V100 GPUs, connected via NVLink. Compared to ORNL's previous Titan supercomputer, which used a single Tesla K20X GPU per node, that's 34 times more FP64 computational power per node. But each Tesla V100 also contains 640 Tensor Cores, specialized units designed to accelerate machine learning operations. Tensor Cores are far less precise than the FP64 units: they multiply FP16 values and accumulate the results in FP16 or FP32. What they lack in precision they make up in raw performance, with a single V100 able to do up to 125 teraflops. Do the math:
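The 34x figure can be sanity-checked against Titan's published theoretical peak. This sketch assumes Titan's TOP500 Rpeak of about 27.11 petaflops spread over its 18,688 nodes; those two numbers come from outside this article.

```python
# Compare per-node FP64 peak between Titan and Summit.
# Assumption: Titan's TOP500 Rpeak (~27.11 PFLOPS) and node count (18,688).
TITAN_PEAK_TFLOPS = 27_110
TITAN_NODES = 18_688
SUMMIT_NODE_TFLOPS = 49  # Nvidia's per-node figure for Summit

titan_node_tflops = TITAN_PEAK_TFLOPS / TITAN_NODES
speedup = SUMMIT_NODE_TFLOPS / titan_node_tflops
print(f"Titan node: {titan_node_tflops:.2f} TFLOPS; Summit node is {speedup:.1f}x that")
```

A Titan node works out to roughly 1.45 teraflops, so a 49 teraflop Summit node is about 34 times faster, which matches the claim if it is read per node rather than for the whole machine.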

125 teraflops/V100 × 6 V100s/node × 4,608 nodes = 3,456 petaflops

I suspect the V100s in Summit run at somewhat lower clockspeeds than the theoretical maximum, since I've seen suggestions of 100 teraflops per GPU instead of 125, but we're still well into the exaflops range for machine learning. During initial testing, researchers running machine learning algorithms on Summit have already hit 1.88 exaops. And by the way, estimates put the human brain at somewhere in the 250-1000 petaflops range. In other words, Skynet is now online.
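To see how the lower per-GPU figure changes the picture, the sketch below computes the machine-wide Tensor Core peak at both 125 and 100 teraflops per V100 and expresses the 1.88 exaops result as a fraction of each. Treat the ratio loosely: the 1.88 exaops came from one specific application, not a standardized benchmark.

```python
# Machine-wide mixed-precision Tensor Core peak at two per-GPU rates.
NODES = 4_608
GPUS_PER_NODE = 6
MEASURED_EXAOPS = 1.88  # early machine learning result quoted above


def tensor_peak_eflops(per_gpu_tflops: float) -> float:
    """Convert a per-V100 Tensor Core rate (TFLOPS) to a system total in exaflops."""
    return per_gpu_tflops * GPUS_PER_NODE * NODES / 1_000_000


for rate in (125, 100):  # spec-sheet peak vs. the lower figure some sources cite
    total = tensor_peak_eflops(rate)
    print(f"{rate} TFLOPS/GPU -> {total:.2f} EFLOPS peak; "
          f"1.88 exaops is {MEASURED_EXAOPS / total:.0%} of that")
```

Either way the peak stays above 2.7 exaflops, so the "exaflops range" conclusion holds even with the lower clockspeed assumption.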


Just how much did the Summit supercomputer cost to build? One site lists the total installation cost at between $400 and $600 million, which seems plausible. A bit of napkin math can help us dig into the node specifics. The base server price isn't listed anywhere, but around $20,000 seems likely (give or take). The 22-core Power9 CPUs should be around $5,999 each (the 20-core model is $3,999). The Tesla V100 GPUs go for $11,499 each, so another $69,000 for six. IBM apparently charges $39 per GB for the 1024GB of registered DDR4-2666, which is $40,000 on memory (plus or minus). The two InfiniBand ports are about $1,000 each. Add in around 1,200GB of non-volatile enterprise storage per node, which if we use three Optane SSD DC P4800X 375GB drives would be around $4,000.
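Adding up those line items is simple, but a quick tally makes the per-node total explicit. Every price here is the rough estimate from the paragraph above, not an IBM or ORNL figure.

```python
# Napkin-math bill of materials for one Summit node, using the estimates above.
# These are rough list-price guesses, not actual contract prices.
node_parts = {
    "base server": 20_000,
    "2x 22-core Power9": 2 * 5_999,
    "6x Tesla V100": 6 * 11_499,
    "1024GB DDR4-2666": 1_024 * 39,
    "2x EDR InfiniBand": 2 * 1_000,
    "3x Optane SSD DC P4800X 375GB": 4_000,
}

node_total = sum(node_parts.values())
for part, cost in node_parts.items():
    print(f"{part:<32} ${cost:>8,}")
print(f"{'total per node':<32} ${node_total:>8,}")
```

That lands at roughly $147,000 per node, with the GPUs and memory making up about three quarters of it.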

All told, just the node hardware, not including the racks, switches, software, power, and other elements, looks to be in the neighborhood of $125,000-$150,000, which is actually quite reasonable. Nvidia charges $149,000 for a DGX-1 server with eight Tesla V100 GPUs, though with less RAM and storage. At $150,000 per node, 4,608 nodes works out to nearly $700 million, but IBM would likely have government discounts and contracts in place. I'll take two, thanks.
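Scaling the per-node range to the full machine shows where the "nearly $700 million" comes from; a minimal sketch:

```python
# Scale the per-node hardware estimate to all 4,608 nodes (list prices, no discounts).
NODES = 4_608


def fleet_cost_millions(per_node_usd: int) -> float:
    """Total node hardware cost in millions of dollars."""
    return NODES * per_node_usd / 1e6


for per_node in (125_000, 150_000):
    print(f"${per_node:,}/node -> ${fleet_cost_millions(per_node):,.1f} million")
```

The low end comes to about $576 million and the high end to about $691 million, which brackets the upper part of the $400-$600 million installation estimate once discounts are factored in.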

But seriously, Summit is an extremely impressive feat of engineering. Along with Sierra at LLNL, these are likely the last pre-exascale supercomputers the US government will commission. Besides the cool hardware, these supercomputers will be working to solve problems in the fields of energy, astrophysics, materials sciences, cancer and other illnesses, biology, and more. Hopefully they will end up being less about taking over the world and destroying humanity, and more about helping to make the world a better place.

Jarred's love of computers dates back to the dark ages when his dad brought home a DOS 2.3 PC and he left his C-64 behind. He eventually built his first custom PC in 1990 with a 286 12MHz, only to discover it was already woefully outdated when Wing Commander was released a few months later. He holds a BS in Computer Science from Brigham Young University and has been working as a tech journalist since 2004, writing for AnandTech, Maximum PC, and PC Gamer. From the first S3 Virge '3D decelerators' to today's GPUs, Jarred keeps up with all the latest graphics trends and is the one to ask about game performance.