If Microsoft can't source enough electricity to power all the AI GPUs it has, you have to wonder how Amazon is going to cope in its new $38 billion deal with OpenAI

I worry about powering the sole GPU that's in my gaming rig, so that means I'm bonding with Microsoft, right?

To keep the AI juggernaut rolling ever forward, you might think that the biggest companies in artificial intelligence desperately need ridiculous numbers of AI GPUs. According to Microsoft, though, the issue isn't a compute or hardware limit; it's that there isn't enough electrical power to run it all. And if that's the case, it raises the question of how Amazon is going to cope now that it's signed a $38 billion, multi-year deal with OpenAI to give the company access to AWS servers.

It was Microsoft's CEO, Satya Nadella, who made the point about insufficient power in a 70-minute interview (via Tom's Hardware) with YouTube channel Bg2 Pod, alongside OpenAI's boss, Sam Altman. It came up when the interview's host, Brad Gerstner, mentioned that Nvidia's Jen-Hsun Huang had said there was almost zero chance of a 'compute glut' within the next couple of years (by which he meant an excess of AI GPUs).

"The biggest issue we are now having is not a compute glut, but it’s power," replied Nadella. "It’s sort of the ability to get the builds done fast enough close to power. So, if you can’t do that, you may actually have a bunch of chips sitting in inventory that I can’t plug in. In fact, that is my problem today. It’s not a supply issue of chips; it’s actually the fact that I don’t have warm shells to plug into."

The warm shells he refers to aren't tasty tacos; they're data center buildings already hooked up to power and the other facilities required, ready to be loaded up with masses of AI servers from Nvidia. What Nadella is effectively saying is that getting hold of GPUs isn't the problem; it's having enough electricity, in the right places, to run them all.

It's not necessarily a case of there simply not being enough power stations. Location matters a great deal: a building can be put up almost anywhere, but if the local grid can't cope with the enormous power demands of tens of thousands of Nvidia Hopper and Blackwell GPUs, there's no point in building the shell in the first place.
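To put "tens of thousands of GPUs" in perspective, here's a rough back-of-envelope sketch. It assumes 700 W per GPU (Nvidia's rated maximum for the H100 SXM, a Hopper part) and uses hypothetical GPU counts. It ignores CPUs, networking, storage, and cooling, and Blackwell parts are rated higher still, so treat the results as a floor rather than a real facility figure.

```python
# Rough, illustrative estimate of GPU-only power draw for a large AI cluster.
# ASSUMPTIONS (not from the article): 700 W per GPU, Nvidia's rated maximum
# for the H100 SXM; the GPU counts below are hypothetical examples.
# CPUs, networking, storage, and cooling are ignored, so real demand is higher.

GPU_WATTS = 700  # assumed per-GPU board power, in watts


def cluster_gpu_power_mw(num_gpus: int, watts_per_gpu: float = GPU_WATTS) -> float:
    """Return the GPU-only power draw in megawatts."""
    return num_gpus * watts_per_gpu / 1_000_000


for gpus in (10_000, 50_000):
    print(f"{gpus:>6} GPUs -> {cluster_gpu_power_mw(gpus):.1f} MW")
```

Even on these conservative numbers, 50,000 GPUs work out to about 35 MW for the chips alone, which is the kind of load a local grid connection has to be sized for before a shell is worth building.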

When I heard Microsoft's CEO make that comment, I immediately recalled another news story I'd read today: OpenAI's $38 billion deal with Amazon that will give it access to AWS's AI servers. OpenAI already has such an agreement with Microsoft to use its Azure services, with the latter pumping money into the former as well.

"OpenAI will immediately start utilizing AWS compute as part of this partnership, with all capacity targeted to be deployed before the end of 2026, and the ability to expand further into 2027 and beyond," the announcement says.


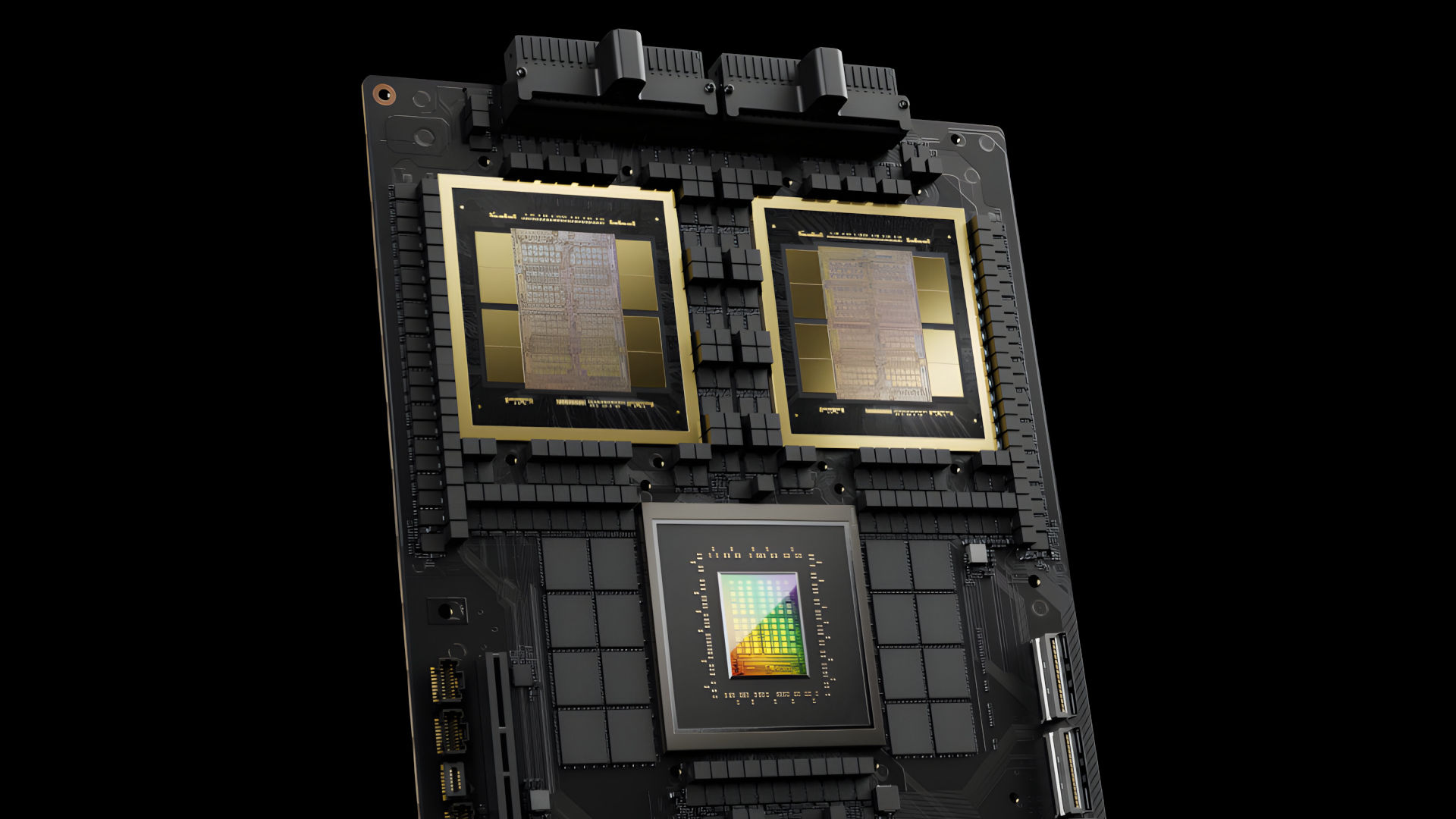

"The infrastructure deployment that AWS is building for OpenAI features a sophisticated architectural design optimized for maximum AI processing efficiency and performance. Clustering the NVIDIA GPUs—both GB200s and GB300s—via Amazon EC2 UltraServers on the same network."

Does this mean AWS is effectively handing over all its Nvidia AI GPUs to OpenAI over the next few years, or will it expand its AI network by building new servers? It surely can't be the former, as AWS offers its own AI services, so the most logical explanation is that Amazon is going to buy more GPUs and build fresh data centers.

But if Microsoft can't power all the AI GPUs it has now, how on Earth is Amazon going to do it? Research by the Lawrence Berkeley National Laboratory suggests that within three years, the total electricity consumed by data centers could reach as much as 580 trillion watt-hours (580 TWh) a year. According to the Massachusetts Institute of Technology, AI alone would then be using as much electricity each year as 22% of all US households.
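For a sense of scale, an annual energy figure can be turned into the average continuous power it implies by dividing by the number of hours in a year. A minimal sketch, using the 580 TWh upper estimate quoted above and assuming a 365-day year:

```python
# Convert an annual energy figure into the average continuous power it implies.
# Input: the 580 TWh upper estimate quoted in the article.
HOURS_PER_YEAR = 365 * 24  # 8,760 hours; leap years ignored


def average_power_gw(annual_twh: float) -> float:
    """Average power in gigawatts for a given annual energy in TWh."""
    # 1 TWh = 1,000 GWh, so GWh divided by hours gives GW.
    return annual_twh * 1_000 / HOURS_PER_YEAR


print(f"{average_power_gw(580):.1f} GW")  # 66.2 GW
```

That's roughly 66 GW of demand running around the clock, every hour of the year.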

To meet this enormous demand, some companies are looking at restarting old nuclear power stations, or in the case of Google, building a raft of new ones. One such restart is hoped to be online by 2028, but it will only produce 7.3 TWh per year at best, a mere 1.3% of the total projected power demand. Reopening old plants is clearly not going to be a long-term solution to AI's energy problem.
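The 1.3% figure is simple division, and the same two numbers (7.3 TWh and 580 TWh) also show how far a single plant goes. A short sketch using only those figures and assuming a 365-day year:

```python
import math

# Share of projected data-center demand that one restarted plant could cover.
# Inputs are the figures quoted in the article; the rest is plain arithmetic.
PROJECTED_DEMAND_TWH = 580.0  # upper projection for annual data-center use
RESTART_OUTPUT_TWH = 7.3      # best-case annual output of the restarted plant
HOURS_PER_YEAR = 365 * 24     # 8,760 hours; leap years ignored

share = RESTART_OUTPUT_TWH / PROJECTED_DEMAND_TWH
implied_gw = RESTART_OUTPUT_TWH * 1_000 / HOURS_PER_YEAR  # steady output
plants_needed = math.ceil(PROJECTED_DEMAND_TWH / RESTART_OUTPUT_TWH)

print(f"share of demand: {share:.1%}")                # 1.3%
print(f"implied steady output: {implied_gw:.2f} GW")  # 0.83 GW
print(f"plants of that size needed: {plants_needed}")  # 80
```

In other words, covering the projected demand would take around 80 plants of that size, each running flat out all year.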

With so much capital now invested in AI, it's not going to disappear without a trace (though that would solve the question of powering it all), so the only way forward for Amazon, Google, Meta, and Microsoft is to build vastly more power stations, to design and build specialised low-energy AI ASICs (much as bitcoin mining moved to ASICs), or, more likely, both.

Which raises another question: where is all the money for this going to come from? Answers on a postcard, please.


Nick, gaming, and computers all first met in the early 1980s. After leaving university, he became a physics and IT teacher and started writing about tech in the late 1990s. That resulted in him working with MadOnion to write the help files for 3DMark and PCMark. After a short stint working at Beyond3D.com, Nick joined Futuremark (as MadOnion had been rebranded) full-time, as editor-in-chief for its PC gaming section, YouGamers. After the site shut down, he became an engineering and computing lecturer for many years, but he missed writing. Cue four years at TechSpot.com covering everything and anything to do with tech and PCs. He freely admits to being far too obsessed with GPUs and open-world grindy RPGs, but who isn't these days?
