Life after Moore's Law

Where are our performance gains going to come from now?

This article was originally published in PC Gamer UK 347.

Way back in 2012, you could buy a quad-core, eight-thread CPU with a 3.5GHz base clock and a 3.9GHz turbo. In 2018, you could also buy a CPU with a 3.5GHz base clock and a 3.9GHz turbo, but this time it had 16 cores and processed 32 threads simultaneously. It was a Threadripper 2950X, and it cost more than twice as much as 2012's Core i7-3770K.

What's going on there? Moore's Law, first formulated in 1965 and revised in 1975, states that the number of transistors in a dense integrated circuit doubles about every two years. Shouldn't that have ensured that 2018's CPU was running at 28GHz? Sure, you can pick up a 5GHz i9, but Intel's 2020 Core i3-10100 manages four cores/eight threads at 3.6/4.3GHz, still rather close to the 2012 i7's specs.
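Where does 28GHz come from? It's a deliberately naive extrapolation: treat clock speed as if it doubled every two years, so 2012 to 2018 gives three doublings, and 3.5GHz x 2^3 = 28GHz. The short Python sketch below just does that sum; the function name `naive_moore_clock` is our own, and the premise is the tongue-in-cheek one above, since Moore's Law itself is about transistor counts, not clock speeds.

```python
def naive_moore_clock(base_ghz: float, start_year: int, end_year: int,
                      doubling_years: float = 2.0) -> float:
    """Clock speed if it had doubled every `doubling_years` (a naive, hypothetical model)."""
    doublings = (end_year - start_year) / doubling_years  # 2012 -> 2018 is 3 doublings
    return base_ghz * 2 ** doublings


if __name__ == "__main__":
    print(naive_moore_clock(3.5, 2012, 2018))  # 28.0
```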

In practice, Moore's Law has meant computing performance doubling every two years or so, though it's really more of a guideline: Moore's Guideline, if you like. And we're certainly not seeing gains at that rate any more. So why has performance stagnated, and where can we expect future increases in computing performance to come from?

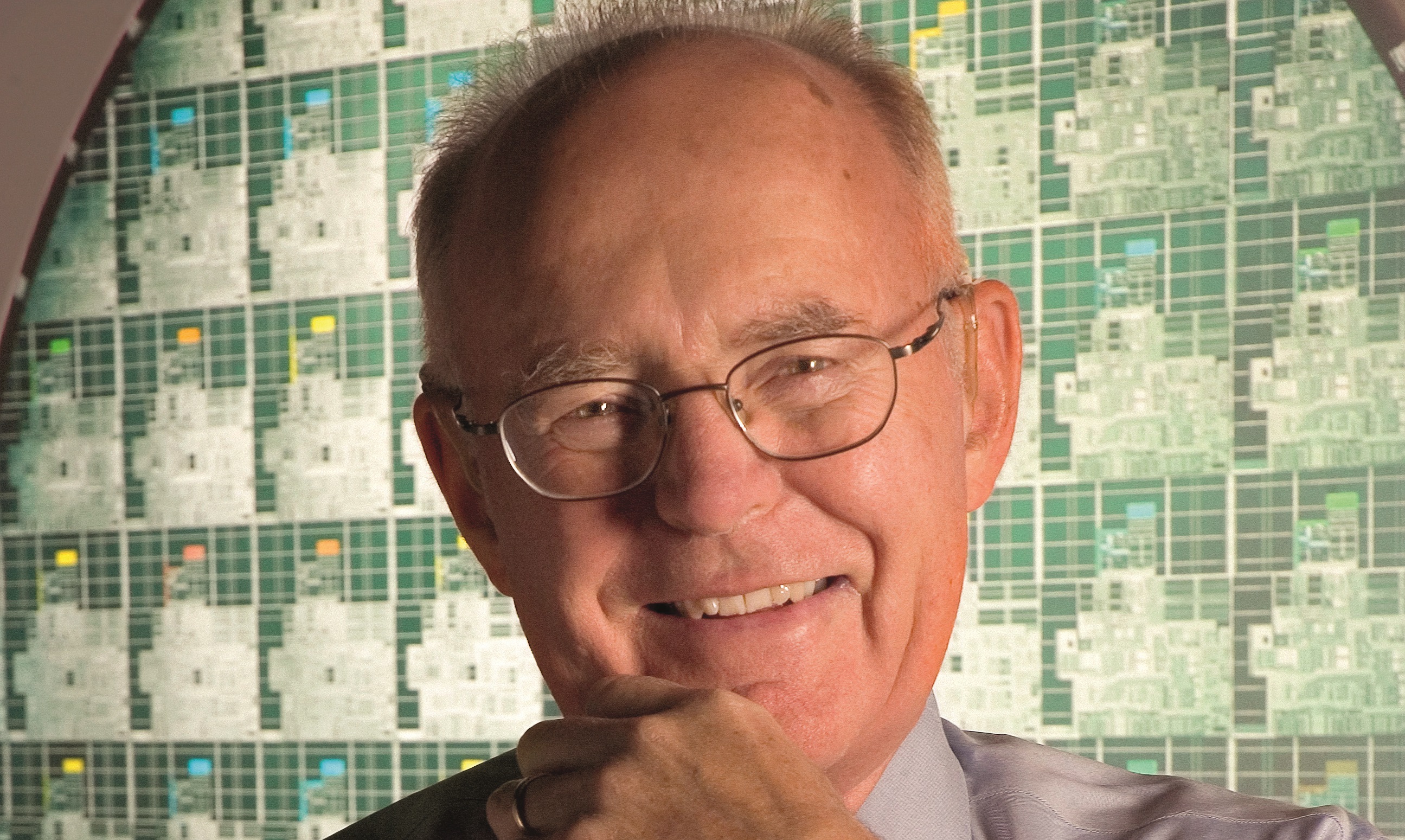

This problem has exercised some of the gigantic brains at MIT, leading to a paper in the June edition of Science: 'What will drive computer performance after Moore's law?' by, among others, Dr Neil C Thompson of MIT's Computer Science and Artificial Intelligence Lab.

The authors point to Intel's reliance on its 14nm process since 2014, but don't lay all the blame there. Miniaturisation, they say, was coming to an end anyway. "Although semiconductor technology may be able to produce transistors as small as 2nm, as a practical matter, miniaturisation may end around 5nm because of diminishing returns," the authors write in their paper. As you get closer to the atomic scale (a silicon atom measures around 0.146nm), the cost of manufacturing rises significantly.

But what about core count? That's been rising steadily over the past few years, largely thanks to AMD. Can more cores make up the difference? "We might have a little bit of an increase in the number of cores but not that much," says Thompson. "And that's just because it is hard for pieces of software to effectively use lots and lots of cores at the same time." That applies to home computers; servers, especially those involved in cloud computing and search engines, will continue to increase their core counts.
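One standard way to see why extra cores stop paying off is Amdahl's law (our illustration, not something Thompson is quoted on here): if only a fraction p of a program's work can run in parallel, the best possible speedup on n cores is 1 / ((1 - p) + p/n). This minimal Python sketch assumes a hypothetical workload that is 90% parallelisable; real software varies enormously, and the function name `amdahl_speedup` is ours.

```python
def amdahl_speedup(parallel_fraction: float, cores: int) -> float:
    """Ideal speedup when only `parallel_fraction` of the work can be spread over `cores`."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if cores < 1:
        raise ValueError("cores must be at least 1")
    serial = 1.0 - parallel_fraction  # this part always runs on a single core
    return 1.0 / (serial + parallel_fraction / cores)


if __name__ == "__main__":
    # Hypothetical workload: 90% of the work parallelises, 10% does not
    for cores in (4, 8, 16, 32, 64):
        print(cores, round(amdahl_speedup(0.9, cores), 2))
```

Under that assumption, going from 16 to 32 cores lifts the ideal speedup only from 6.4x to about 7.8x, and no number of cores can push it past 10x, because the serial 10% never gets any faster.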


Moore or less

The thing holding CPUs back, according to Thompson, is their general-purpose nature. Specialised hardware is already creeping into our PC cases, much of it aimed at graphics and video. There are GPUs of course, and the Quick Sync block in Intel processors, which does nothing but encode and decode video. Dedicated physics processing cards were sold for PhysX-enabled games before Nvidia bought Ageia, the company behind PhysX, and moved the functionality onto its own GPUs. Apple Mac Pro customers can add an Afterburner card if they want hardware acceleration of ProRes video codecs, and specialised ASICs (application-specific integrated circuits) have cornered the market in Bitcoin mining.

"I think one of the things that we're going to do quite a bit more of is design chips that are specialised for particular types of application," says Thompson, "and use those to get some speed-ups. But it isn't a replacement for the general purpose CPU which can give a lot of different things. If you look at chips, you'll see that they'll have a little specialised piece of circuitry on the chip that handles encryption, so when you're on the internet and you're trying to make a transaction secure, there could be a lot of computation involved there, but a little specialised chip does it."

Then there's software. Performance gains here will come through efficiency, as a quick experiment in Thompson et al.'s paper shows: a very hard sum (the multiplication of two 4096x4096 matrices) was coded in Python and took seven hours to complete on a modern computer, using only 0.0006% of the machine's peak performance. The same very hard sum coded in Java ran 10.8x faster, and in C it ran 47x faster than the Python code. By tailoring the code to exploit the test PC's 18-core CPU and its Intel-specific hardware features, the very hard sum could be completed in 0.41 seconds. That's more than 60,000 times faster than the original Python version.
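To get a feel for that gap on your own machine, here's a minimal Python sketch (not the paper's code) that does the same multiplication twice: once as a textbook triple loop over Python lists, and once with NumPy's `@` operator, which hands the work to a compiled, optimised BLAS library. The helper names `matmul_naive` and `compare` and the default 128x128 size are our own illustrative choices. The ratio you see will depend on your CPU and NumPy build, and won't match the paper's 60,000x figure, which came from hand-tuned parallel, vectorised code.

```python
import time

import numpy as np


def matmul_naive(a, b):
    """Textbook triple-loop matrix multiply over plain Python lists."""
    n, m, p = len(a), len(b), len(b[0])
    c = [[0.0] * p for _ in range(n)]
    for i in range(n):
        for j in range(p):
            s = 0.0
            for k in range(m):
                s += a[i][k] * b[k][j]
            c[i][j] = s
    return c


def compare(n=128, seed=0):
    """Time the naive loops against NumPy on the same random n x n matrices."""
    rng = np.random.default_rng(seed)
    a = rng.random((n, n))
    b = rng.random((n, n))

    t0 = time.perf_counter()
    c_slow = matmul_naive(a.tolist(), b.tolist())
    t1 = time.perf_counter()
    c_fast = a @ b  # dispatched to NumPy's compiled linear-algebra backend
    t2 = time.perf_counter()

    # Same answer either way; only the efficiency differs
    assert np.allclose(c_slow, c_fast)
    return t1 - t0, t2 - t1


if __name__ == "__main__":
    slow, fast = compare()
    print(f"naive loops: {slow:.3f}s, NumPy: {fast:.5f}s, "
          f"ratio ~{slow / max(fast, 1e-9):.0f}x")
```

Both versions compute the same result; the difference is entirely in how well the code uses the hardware underneath, which is the paper's point.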

All of the above ran on a general-purpose processor. Combine optimised code with a processor that has specialised hardware for matrix multiplication, and you could go faster still. In this post-Moore era, we may see apps and operating systems getting smaller as they become more efficient, rather than bloating all over our solid state drives and random access memory.

Say you want to send an email, and Outlook (we know, but it's a good example for this) gives you a 'Send, yes or no?' prompt. How could the computer handle that? "One thing you can do is design it from scratch," says Thompson. "You could go and find examples of people saying yes or no, and try to write a program that would recognise those things. Or you could say 'there are things like Siri and Google Assistant out there, that recognise not just yes or no but a million different things'. It probably doesn't take me very much work to write a little program that, when it hears the user say something, sends it to Google for computation and then back to me. That's a super efficient way to write your code, but a very inefficient way to do the computation, because a full voice recognition system is very complicated, and you already know it's going to be a yes or a no."

In this way, with manufacturing no longer delivering the performance gains it once did, hardware and software will need to work together to ensure that the PC of the future is faster, even if it still runs at 3.5GHz.

Ian Evenden has been doing this for far too long and should know better. The first issue of PC Gamer he read was probably issue 15, though it's a bit hazy, and there's nothing he doesn't know about tweaking interrupt requests for running Syndicate. He's worked for PC Format, Maximum PC, Edge, Creative Bloq, Gamesmaster, and anyone who'll have him. In his spare time he grows vegetables of prodigious size.