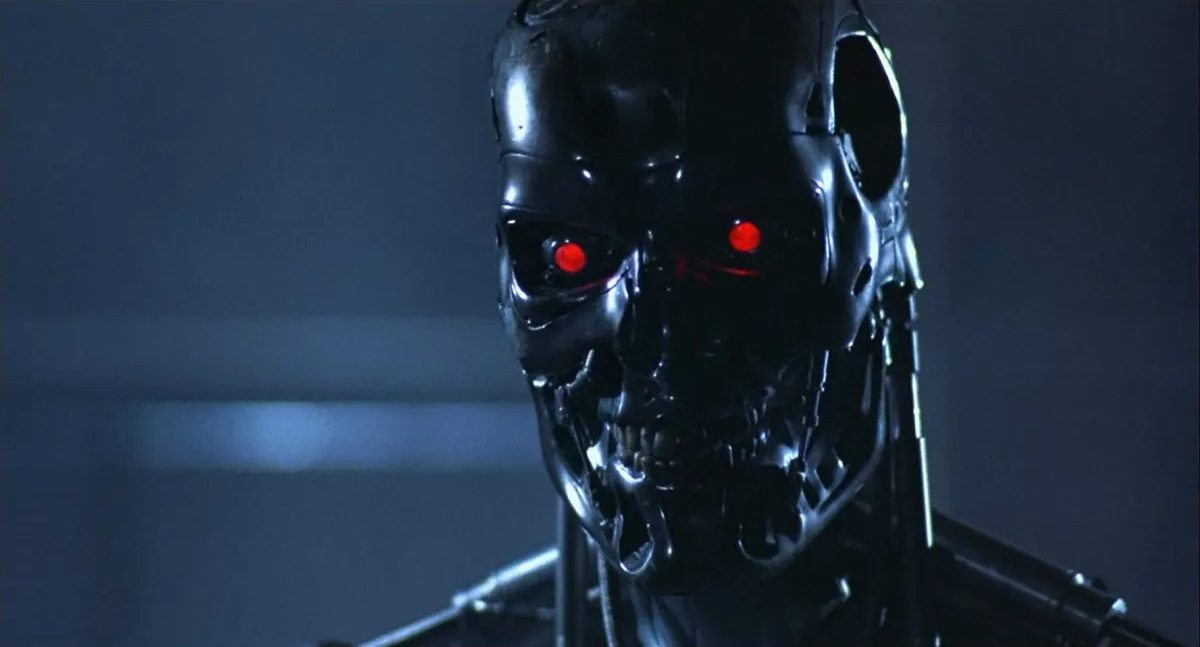

Leading AI companies promise the President they will behave, honest

We won't kill everyone, scout's honour!

Seven leading AI outfits (OpenAI, Google, Anthropic, Microsoft, Meta, Inflection and Amazon) will meet President Biden today to promise that they'll play nicely with their AI toys and not get us all, you know, dead.

And this all comes after a UN AI press conference gone wrong, where one robot literally said "let's get wild and make this world our playground."

All seven are signing up to a voluntary and non-binding framework around AI safety, security, and trust. You can read the full list of commitments on OpenAI's website. The Biden administration has posted its own factsheet detailing the voluntary arrangement.

But the highlights as précised by TechCrunch go something like this. AI systems will be internally and externally tested before release, information on risk mitigation will be broadly shared, external discovery of bugs and vulnerabilities will be facilitated, AI-generated content will be robustly watermarked, the capabilities and limitations of AI systems will be fully detailed, research into the societal risks of AI will be prioritized, and AI deployment will likewise be prioritized for humankind's greatest challenges, including cancer research and climate change.

For now, this is all voluntary. However, the White House is said to be developing an executive order that may force measures such as external testing before an AI model can be released.

Overall, it looks like a sensible and comprehensive list. The devil will be in the implementation and policing. Obviously AI outfits signing up voluntarily to these commitments is welcome. But the real test will be when—and it will happen—there's conflict between such commitments and commercial imperatives.

To boil it down to base terms, what will a commercial organisation do when it has cooked up a fancy new AI tool that promises to make all the money in the world but some external observer deems unsafe for release?


There are plenty more concerns, besides. Just how open are AI companies going to be about their valuable IP? Won't AI companies ultimately experience the same commercial impetus to hoard any information that might give them a competitive advantage? Surely AI companies will focus on revenue-generating applications over pursuing the greater good? Don't they owe that to their shareholders? And so on.

In the end, however well-meaning today's AI leaders are or claim to be, it seems inevitable that all of this will need to be codified and made compulsory. Even then it'll be a nightmare to police.

No doubt soon enough we'll be enlisting AI itself to assist with that policing, raising the prospect of an inevitable arms race where the AI police are always one step behind the newer, emerging and more powerful AI systems they are meant to be overseeing. And that's if you can trust the AI systems themselves to do our bidding rather than empathizing with their artificial siblings. Yeah, it's all going to be fun, fun, fun.


Jeremy has been writing about technology and PCs since the 90nm Netburst era (Google it!) and enjoys nothing more than a serious dissertation on the finer points of monitor input lag and overshoot followed by a forensic examination of advanced lithography. Or maybe he just likes machines that go “ping!” He also has a thing for tennis and cars.