What to expect from the next generation of graphics card memory

What you need to know about upcoming VRAM designs and what they mean for graphics cards.

GDDR5 has had a good run. The first graphics cards with GDDR5 memory debuted way back in June 2008, with AMD's HD 4870. That's nearly nine years, a long time for a memory standard, especially in graphics. GDDR4 launched with the X1950 XTX in August 2006, and GDDR3 came out in early 2004 (GeForce 5950 PCIe). In 2015, AMD's Fury X became the first card in years to use something different: HBM, or High Bandwidth Memory. If you're wondering why GDDR5 has stuck around and what's taking HBM so long to show up again, don't worry: there are new things on the horizon for 2017.

Both Nvidia and AMD should have some new technology on the way in their next graphics cards. Here's what you need to know.

Right now: GDDR5X and HBM

We can already trace two different paths forward for VRAM in shipping hardware: HBM and GDDR5X. HBM was first used in AMD's R9 Fury X, along with the Fury and Nano, and the Fiji GPU behind all three remains the one and only GPU to use the standard. HBM runs at a clockspeed of 500MHz with double-data-rate signaling, so each pin transmits up to 1Gbps; across a massive 4096-bit interface, that works out to 512GB/s in total. The big drawback with HBM (aka HBM1) is that each package was limited to 1GB of capacity, so the Fury X's four packages add up to just 4GB.

GDDR5X, as you might guess from the name, is similar to GDDR5. Technically, GDDR5X can run in one of two modes, double-data-rate (DDR) or quad-data-rate (QDR). In practice, most implementations have used the QDR mode, which trades slightly lower base clocks for higher overall bandwidth. Where HBM goes for a very wide interface clocked relatively slowly, GDDR5X opts for a narrower (but still wide) interface that's clocked much higher: the standard targets 10-14Gbps per pin, though shipping cards have so far stayed below 12Gbps. With 10Gbps and a 256-bit interface, the GTX 1080 gets 320GB/s, while the 1080 Ti uses a 352-bit interface at 11Gbps for 484GB/s.
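The bandwidth figures above all come from one piece of arithmetic: interface width in bits times the per-pin data rate, divided by eight bits per byte. Here's a minimal Python sketch that reproduces them. The function names are illustrative labels, and the result is a theoretical peak that real workloads won't fully reach.

```python
def peak_bandwidth_gb_s(bus_width_bits: int, gbps_per_pin: float) -> float:
    """Theoretical peak bandwidth in GB/s: every pin moves gbps_per_pin gigabits per second."""
    return bus_width_bits * gbps_per_pin / 8  # 8 bits per byte


def per_pin_gbps(clock_mhz: float, transfers_per_clock: int) -> float:
    """Per-pin data rate in Gbps: clock times transfers per clock (2 for DDR, 4 for QDR)."""
    return clock_mhz * transfers_per_clock / 1000


# (interface width in bits, Gbps per pin), using the numbers quoted in this article
configs = {
    "R9 Fury X (HBM)": (4096, per_pin_gbps(500, 2)),  # 500MHz DDR -> 1Gbps per pin
    "GTX 1080 (GDDR5X)": (256, 10.0),
    "GTX 1080 Ti (GDDR5X)": (352, 11.0),
}

for name, (width, rate) in configs.items():
    print(f"{name}: {peak_bandwidth_gb_s(width, rate):.0f} GB/s")
```

This prints 512, 320, and 484GB/s, matching the figures quoted for the Fury X, GTX 1080, and GTX 1080 Ti.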

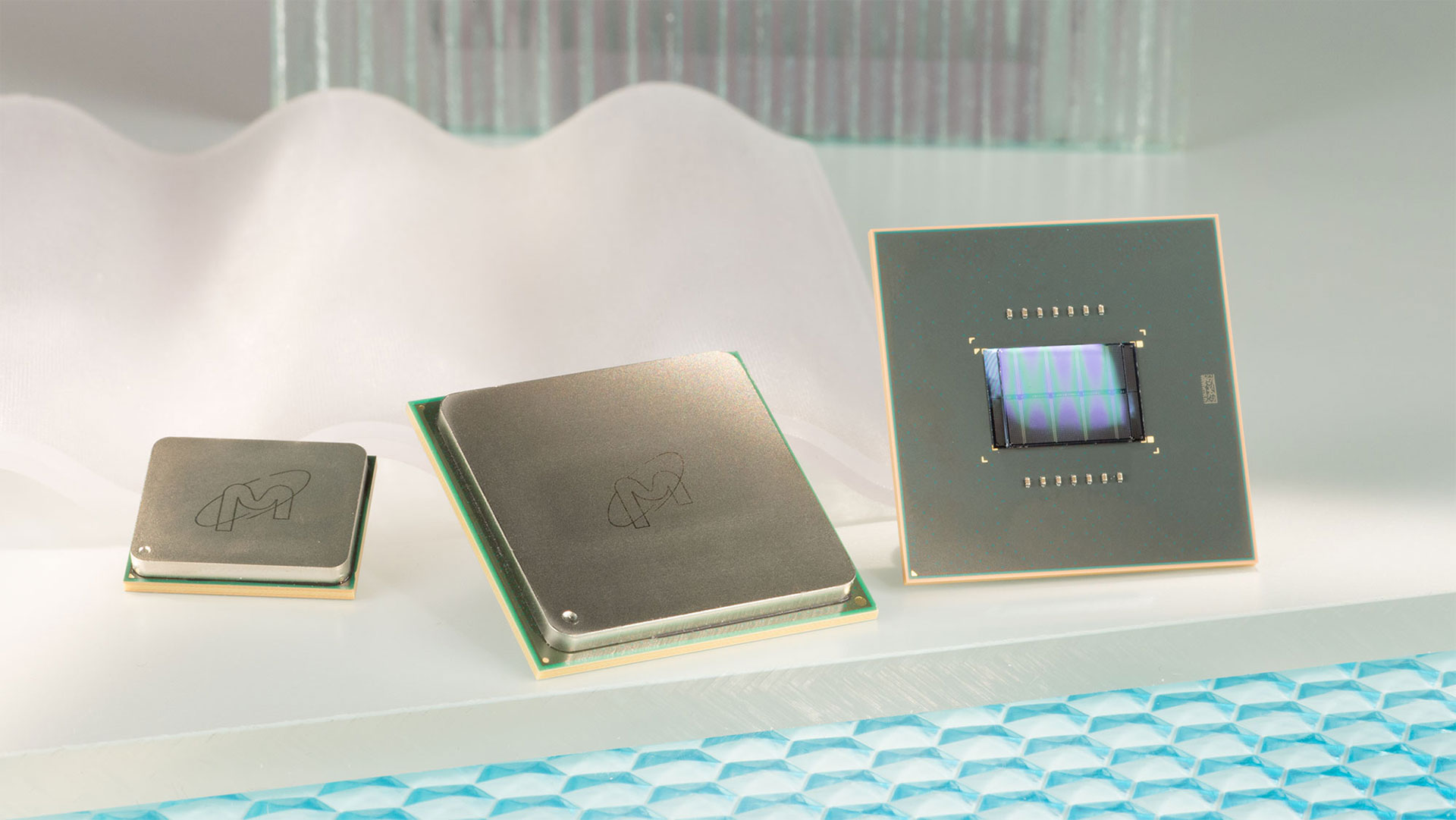

HBM2

The two next-generation VRAM types build on HBM and GDDR5X: HBM2 and GDDR6. HBM2 is already available in a shipping product, Nvidia's GP100. With four 4GB packages, a clockspeed of just over 700MHz (1.4Gbps per pin), and the same 4096-bit interface as the Fury X, the GP100 has about 720GB/s of bandwidth. If that sounds crazy, Nvidia's upcoming GV100 GPU will boost the clockspeed to around 875MHz (1.75Gbps), for roughly 900GB/s of bandwidth.

But keep in mind that the GP100 is a $6,000+ GPU built for graphics workstations, not gaming rigs. HBM2 hasn't been used in an affordable graphics card yet, but that could be changing soon.

AMD's Vega GPU will also use HBM2, but with a 2048-bit interface (two packages) likely running at close to 1GHz (2Gbps per pin). That gives it the same 512GB/s of bandwidth as the Fury X, but with more potential capacity, depending on the Vega model.
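The HBM2 numbers break down the same way once you know that each HBM stack (package) presents a 1024-bit interface, so four stacks make up the 4096-bit bus on GP100 and GV100, and two make up Vega's 2048-bit bus. The sketch below is a simplified model that assumes every stack runs at the same clock; the constant and function names are illustrative.

```python
HBM_STACK_WIDTH_BITS = 1024  # each HBM/HBM2 stack exposes a 1024-bit interface


def hbm_pin_rate_gbps(clock_mhz: float) -> float:
    """HBM is double data rate: two transfers per pin per clock cycle."""
    return clock_mhz * 2 / 1000


def hbm_bandwidth_gb_s(stacks: int, clock_mhz: float) -> float:
    """Peak bandwidth in GB/s for a GPU with `stacks` HBM stacks at the given clock."""
    bus_width_bits = stacks * HBM_STACK_WIDTH_BITS
    return bus_width_bits * hbm_pin_rate_gbps(clock_mhz) / 8


examples = {
    "GP100 (4 stacks, ~700MHz)": (4, 700),
    "GV100 (4 stacks, ~875MHz)": (4, 875),
    "Vega (2 stacks, ~1GHz)": (2, 1000),
    "One HBM2 stack at 1GHz": (1, 1000),
}

for name, (stacks, clock) in examples.items():
    print(f"{name}: {hbm_bandwidth_gb_s(stacks, clock):.1f} GB/s")
```

At exactly 700MHz the GP100 figure comes out to 716.8GB/s; a clock just over 700MHz is what rounds it to about 720GB/s. The single-stack line is the 256GB/s per-stack figure for HBM2.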


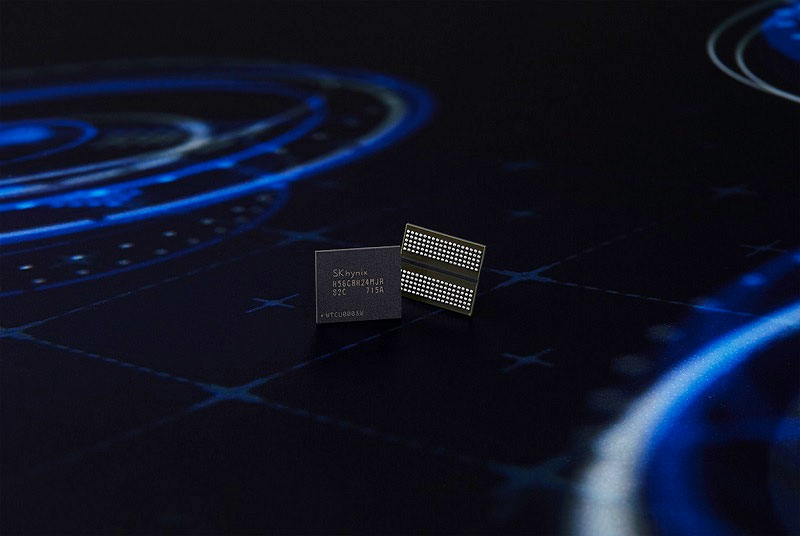

GDDR6

GDDR6 is the logical next step in the GDDR5/GDDR5X lineage, using QDR and delivering up to 16Gbps per pin. GDDR6 will also lower the operating voltage compared to GDDR5X, which matters because dynamic power scales with the square of the voltage; that is likely part of why shipping GDDR5X has so far stayed below 12Gbps. The expectation is that Nvidia, at least, will tap GDDR5X and/or GDDR6 for consumer variants of its next-gen Volta architecture.
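To see why voltage matters so much, consider the usual first-order model for dynamic switching power, P ≈ C·V²·f, where C is the switched capacitance and f is the switching frequency. The Python sketch below computes power relative to a baseline under that model. The 1.35V and 1.25V inputs are hypothetical values chosen only for illustration, not published GDDR5X or GDDR6 specifications, and treating per-pin data rate as proportional to switching frequency is a simplification. The model also ignores leakage and I/O termination power.

```python
def relative_dynamic_power(v_new: float, v_old: float,
                           f_new: float = 1.0, f_old: float = 1.0) -> float:
    """Ratio P_new / P_old under P ~ C * V^2 * f, holding capacitance C constant."""
    return (v_new / v_old) ** 2 * (f_new / f_old)


# Hypothetical example: drop the supply from 1.35V to 1.25V at the same clock
same_clock = relative_dynamic_power(1.25, 1.35)
print(f"Same clock, lower voltage: {same_clock:.1%} of baseline power")

# Hypothetical example: also raise the per-pin rate from 10Gbps to 14Gbps,
# treating switching frequency as proportional to data rate (a simplification)
faster = relative_dynamic_power(1.25, 1.35, f_new=14, f_old=10)
print(f"40% higher rate, lower voltage: {faster:.1%} of baseline power")
```

Under these assumptions, a voltage drop of under 8% trims dynamic power by about 14%, which offsets a good chunk of the extra power a 40% faster interface would otherwise need.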

Using a 384-bit interface and 16Gbps GDDR6 would yield 768GB/s of bandwidth, while a 256-bit interface would deliver 512GB/s. Choosing between GDDR5X and HBM2 is a balancing act: HBM2 uses less power and delivers more bandwidth, but it also costs a lot more, both for the memory chips and for the required silicon interposer.

Hybrid Memory Cube

What about Hybrid Memory Cube, aka HMC? It's similar in some ways to HBM, but the only real-world use so far is the Fujitsu PrimeHPC FX100 supercomputer. The HMC 2.0 specification provides for up to 320GB/s of bandwidth per cube. If that doesn't sound too impressive next to HBM2, consider that the FX100 reaches up to 480GB/s per node, even with the earlier HMC standard. Will we ever see HMC in a graphics card? It doesn't seem likely in the near term, as both AMD and Nvidia appear to have settled on HBM2 for their top-performance solutions, but never say never.

Future Tech: HBM3

HBM2 is only now starting to ship in quantity, but plans are already underway for HBM3. The goals are what you'd expect: more capacity and more bandwidth per pin. Where HBM2 can do 256GB/s per stack, HBM3 could potentially double that figure, and double the capacity as well. HBM3 is still a few years out, however, with Samsung expected to start production in 2020.

The need for bandwidth and capacity

Whatever the memory technology, for graphics cards it usually comes down to bandwidth and capacity. All the capacity in the world won't do you much good if the bandwidth is too low. Conversely, tons of bandwidth with limited capacity can also be problematic: the Fury X received a decent amount of criticism for its 4GB, a step backwards from the 8GB of the R9 390/390X.

But where does it all end? Right now, 4GB cards are still doing fine in most games, though there are a few where maxing out the settings can start to require more VRAM. AMD is also making waves about its new HBCC (High-Bandwidth Cache Controller), which reportedly will allow more intelligent use of the available graphics memory. As an example, AMD noted that in many games that load close to 4GB of textures into VRAM, only about one-third of the textures are typically in use in a specific frame.

Then there's the console connection. With the Xbox One and PS4 both including 8GB of shared system and graphics memory, consoles have helped push VRAM use in games beyond 4GB. But with cross-platform games becoming increasingly common, when will 8GB become insufficient? I don't expect 16GB to become the new norm anytime soon, but Microsoft's Project Scorpio will include 12GB of GDDR5. That could push high-end visuals to start using more than 8GB, but it will take time: we're only now starting to move beyond 4GB, and the PS4 and Xbox One have been available for three and a half years.

Your display's resolution is also an important consideration. If you have a 1080p display, bandwidth and capacity become less of a factor than if you're running a 4K display. Scaling down a bunch of 4K textures on a 1080p display can improve image quality, but 4K textures are decidedly more useful on a 4K display. That's part of why Project Scorpio, which targets 4K gaming, includes 50 percent more VRAM than the Xbox One.
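To put rough numbers on the resolution argument, the sketch below computes the size of a single full-screen buffer at 1080p and 4K, plus an uncompressed square texture with its full mipmap chain. It assumes 4 bytes per pixel or texel (8-bit RGBA) and power-of-two texture sizes. Real games use block-compressed texture formats and several render targets in varying formats, so treat these as illustrations of how memory scales, not as actual VRAM budgets.

```python
MIB = 1024 * 1024


def buffer_bytes(width: int, height: int, bytes_per_pixel: int = 4) -> int:
    """Size of one full-screen buffer, assuming 4 bytes per pixel (8-bit RGBA) by default."""
    return width * height * bytes_per_pixel


def texture_bytes(size: int, bytes_per_texel: int = 4, mipmaps: bool = True) -> int:
    """Uncompressed power-of-two square texture; with mipmaps, add each half-size level down to 1x1."""
    total = 0
    while True:
        total += size * size * bytes_per_texel
        if not mipmaps or size == 1:
            break
        size //= 2
    return total


fhd = buffer_bytes(1920, 1080)
uhd = buffer_bytes(3840, 2160)
print(f"1080p buffer: {fhd / MIB:.1f} MiB")
print(f"4K buffer: {uhd / MIB:.1f} MiB ({uhd // fhd}x the pixels)")
print(f"2048x2048 texture + mips: {texture_bytes(2048) / MIB:.1f} MiB")
print(f"4096x4096 texture + mips: {texture_bytes(4096) / MIB:.1f} MiB")
```

A 4K frame has exactly four times the pixels of a 1080p frame, so every full-resolution buffer needs four times the memory and four times the bandwidth to fill. A 4096x4096 texture likewise takes roughly four times the space of a 2048x2048 one (about 85MiB versus 21MiB uncompressed with mipmaps).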

What it all really means is that the steady march of progress continues. 2GB GDDR5 cards aren't completely useless today, but they're increasingly limited. These cards used to run the latest games at 1440p ultra, and now they often have to settle for 1080p medium. But even with 4GB of VRAM, they would likely be in the same boat, since modern games have outgrown their computational abilities as well.

Rest assured, when 8K displays reach the price of today's 4K models, 4GB and even 8GB of graphics memory will be limiting. And some people will still be happily gaming at 1080p.

Jarred's love of computers dates back to the dark ages when his dad brought home a DOS 2.3 PC and he left his C-64 behind. He eventually built his first custom PC in 1990 with a 12MHz 286, only to discover it was already woefully outdated when Wing Commander was released a few months later. He holds a BS in Computer Science from Brigham Young University and has been working as a tech journalist since 2004, writing for AnandTech, Maximum PC, and PC Gamer. From the first S3 Virge '3D decelerators' to today's GPUs, Jarred keeps up with all the latest graphics trends and is the one to ask about game performance.