Generation Proc

Bridge of Size

Vining agrees, particularly when it comes to music. “Repetitive music drives me nuts; once you’ve listened to Dungeons of Dredmor’s entire soundtrack, good as it is, you’ve heard it all. We tried to modulate this in Clockwork Empires by having the soundtracks adjust themselves as your mood changed, and while it does work pretty well, it doesn’t work as well as it could if we iterated on the technology for a few more rounds.

“The problem is that you have to have somebody who is a musician and a programmer, and you have to build tools. It’s a very complex process that requires specific sorts of individuals. How do you teach a computer about orchestration?” Despite that, we have taught computers about things like image recognition (see Dreams of the Deep, below).

One of the most interesting benefits of procedural generation has to do with file size. Today, we’re growing accustomed to an endless increase in our computers’ storage capacity; 1TB drives are now commonplace. But where space is constrained, procedural generation can massively reduce file sizes, and even eliminate the need for stored in-game assets.

When Naked Sky Entertainment’s game RoboBlitz launched on PC and Xbox 360 in 2006, Microsoft had decided that the largest downloadable game it would allow on the console was a tiny 50MB, so that it would fit on one of its (tiny for the time) memory sticks. Yet RoboBlitz was the first game to run the then-revolutionary Unreal Engine 3. As Naked Sky’s chief technical officer Joshua Glazer told us, they used lots of tricks to get the game down to under 50MB, procedural generation among them.

Minecraft’s genius is in combining a procedural world with user customization.

“All those levels would have loaded a lot faster if it weren’t for Microsoft’s 50MB requirement, coupled with the requirement that we couldn’t have any kind of ‘install’ process that unpacked the game after download,” says Glazer. “We procedurally generated the textures for everything except Blitz (the hero) and the baddies. Pretty much every single square inch of the levels and most of the props were procedurally textured using Allegorithmic’s cool procedural texture-gen tools. Also, all the animation was procedurally driven by physics. We also procedurally modified our sound effects on the fly so that we could get more use out of the samples we were able to squish in the package. The levels were not procedurally generated—we didn’t experiment with that until our 2010 game, Microbot.”

Naked Sky’s latest game, Scrapforce, has mostly avoided procedural generation, save for an interesting-sounding stochastic AI system. And RoboBlitz isn’t the smallest game made using procedural techniques: that prize almost certainly goes to .kkrieger, a 2004 shooter that’s only 96KB, but is very impressive for its size.
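To see why generating assets can save so much space, here is a minimal Python sketch. It is not Allegorithmic’s tooling or anything Naked Sky shipped; it is a simple stand-in technique (bilinearly interpolated random values, often called value noise), and the function name `make_texture` and its parameters are our own invention. The point is that the whole image is rebuilt from a single seed number at load time, so only the seed and the code need to be shipped.

```python
import random
from typing import List


def make_texture(seed: int, size: int = 64, cells: int = 8) -> List[List[int]]:
    """Build a size x size grayscale texture (values 0-255) from a seed alone."""
    rng = random.Random(seed)  # same seed -> same sequence -> same texture
    # A coarse lattice of random brightness values; the texture is a smooth
    # blend between neighbouring lattice points.
    lattice = [[rng.random() for _ in range(cells + 1)] for _ in range(cells + 1)]
    step = size / cells
    texture = []
    for y in range(size):
        gy = int(y / step)          # lattice row above this pixel
        ty = y / step - gy          # how far down towards the next row (0..1)
        row = []
        for x in range(size):
            gx = int(x / step)      # lattice column left of this pixel
            tx = x / step - gx      # how far across towards the next column
            top = lattice[gy][gx] * (1 - tx) + lattice[gy][gx + 1] * tx
            bottom = lattice[gy + 1][gx] * (1 - tx) + lattice[gy + 1][gx + 1] * tx
            row.append(int((top * (1 - ty) + bottom * ty) * 255))
        texture.append(row)
    return texture


if __name__ == "__main__":
    tex = make_texture(seed=2006)
    print(len(tex) * len(tex[0]), "pixels regenerated from one integer seed")
```

Stored raw, this 64x64 texture would take 4,096 bytes; regenerated, it costs one integer plus a few lines of code. The trade-off is the one Glazer describes: the work moves from the download to the loading screen.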

Hard Limitations

Despite all these positives, there are limits to procedural generation. Firstly, it can be processor intensive. Dwarf Fortress can take several minutes to get a game going, despite only displaying its graphics in ASCII. Gamers who are used to the rapid loading of mobile games might find this frustrating. And it’s power-hungry, too, as Cook explains. “If you’re generating things like stories, or special abilities for an RPG, you want to know that they make sense, that they’re balanced, that they’re fun. That’s an extremely difficult thing to test, and it often needs a lot of playtesting. That means more computing power.”

Another problem is that procedural generation works best in clearly delineated areas. Because it’s so hard to define things like "beauty" or "fun," procedural generation often stays in areas where it knows it can perform. In those areas, it’s commonplace. “Procedural art systems for trees and rocks are really popular—you probably don’t even notice the games they’re used in half the time,” says Cook. “People don’t really notice if a branch is slightly out of place, and trees follow quite clear natural rules that we can give to a computer, so it’s a perfect use-case.” For example, SpeedTree, the games industry standard tree generator, has appeared in nearly every AAA game for the last few years: The Witcher 3, Shadow of Mordor, Destiny, Far Cry 4….
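Cook’s point about trees following “clear natural rules” can be illustrated with an L-system, a classic rewriting technique for plant-like shapes. To be clear, this is not how SpeedTree works internally (we make no claim about that); it is a textbook sketch, and the rule set and the `expand` function are our own illustrative choices. Each `F` means “grow a segment”, `[` and `]` open and close a side branch, and `+` and `-` turn left and right.

```python
from typing import Dict


def expand(axiom: str, rules: Dict[str, str], generations: int) -> str:
    """Apply the rewriting rules to every symbol, once per generation."""
    current = axiom
    for _ in range(generations):
        # Symbols without a rule (brackets, turns) are copied unchanged.
        current = "".join(rules.get(symbol, symbol) for symbol in current)
    return current


# Hypothetical rule: every segment sprouts one left and one right side branch.
TREE_RULES = {"F": "F[+F]F[-F]F"}

if __name__ == "__main__":
    for generation in range(3):
        plant = expand("F", TREE_RULES, generation)
        print(generation, plant.count("F"), "segments")
```

One short rule turns a single segment into 5, then 25, then 125, which is why a tiny rule set can fill a forest. Feeding the resulting string to a drawing routine, with a little randomness in the angles, is what gives each tree its slightly different silhouette.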

This is not a photo, but an example of how real SpeedTree’s tech can look.

Cook explains that when using “generative software in music, art, or films, we mostly use it to target things where imperfections are hard to see (abstract art instead of portraiture), things that aren’t the focus of attention (crowd scenes instead of big centerpieces), and preferably things where there are clear patterns and rules (I think electronic music benefits here).” Games, however, often allow procedural content to be closely scrutinized, increasing the quality threshold hugely.

The game combining all these elements is Dwarf Fortress, currently the high point of procedurally generated games. “One of the strengths of Dwarf Fortress—a huge inspiration for Clockwork Empires—was that everything is procedurally generated,” says Vining. “History, terrain, monsters, gods. The newer stuff in the upcoming Dwarf Fortress patch, with procedurally generated libraries full of procedurally generated books and poetic forms, is completely insane in the best way possible.”

The Apex

Developed by two brothers, Tarn and Zach Adams, Dwarf Fortress procedurally generates almost everything you could imagine. According to Tarn, in their previous game, Slaves to Armok, “You could zoom in on your character, and it’d tell you how curly his leg hairs were, and the melting and flash points of various materials. It was insane.” Dwarf Fortress goes further.

The entire world and its history are generated, epoch by epoch, before you play, with kingdoms rising, gods falling, and magical items being lost for centuries. Then it generates the terrain you’re in, the dwarves you’re nominally in command of, the local flora and fauna, and a hundred other things, including art and poetry that reference in-game events. Dwarf Fortress is so impressive and rigorous, but also insane, that people have built working computers inside it. That’s right, a virtual machine running inside a game.

Michael Cook’s Angelina simulation can’t claim to be as complex as Dwarf Fortress, but it’s increasing comprehensiveness in a different direction. That’s because Angelina (www.gamesbyangelina.org) is an experiment in computers designing and evaluating video games autonomously. “The games Angelina produces aren’t procedural,” he explains. “All the generation happens during the design of the game, but Angelina releases static games like you or I might if we made a game. It’s all about how we can procedurally generate a game design, ideas, art, mechanics, music, and put it all together, rather than generating what happens in the player’s computer when they’re playing.”

Sir, You Are Being Hunted used procedural generation to replicate the British countryside… most of the time.

“Angelina is interested in generating everything in a game, which means we have to tackle the hardest problems, like aesthetics and emotions, as well as the more rule-based stuff like mazes and rocks. I want Angelina to generate program code, and game mechanics, and systems of meaning, so it can tell you a story with its games or make you think.”

These two types of comprehensiveness—Dwarf Fortress’s obsessive inclusiveness and Angelina’s procedural game design—are core to the story here. Games, and their successor, VR (see Generating Escapism, below), are a universal experience. They can contain everything else in the world, so they take in every other part of generative art, if everything’s not to be handcrafted at great expense. The only way to do that, without us all becoming developers, is procedural generation.

Generating Escapism

Procedural game design academic Michael Cook said something very interesting. “Depending on how VR designers want to take the tech, procedural generation could be the defining tech of the VR age.” As we’ve explored in recent months, AR and VR are taking lessons from games and growing them into whole new fields. Oculus is even employing ex-Pixar staff to make VR kids' movies.

Of course, like AAA games, VR has to be convincing to work, and the hardware can’t do it alone. It needs tons of content—a lot more than any game, because it has to be all around the player and in great detail. Procedural generation might not just be helpful, it seems essential.

Valve and HTC’s Vive system adds even more complexity, given that it uses extra cameras to map the dimensions of the room. That means shifting the size and shape of the rooms you’re experiencing through the device, which means the designers will have to create the game with variable locations for cues and objects. Your fantasy tavern might just be a few feet across and need a keg in the middle to hide your coffee table, or it might be very big and absolutely clear.

Cook has ideas on how this will be dealt with. “The way we’ll solve these problems, I believe, is by having procedural generation systems that are intelligent enough to redesign games to fit inside your room. So, the game designer specifies what needs to be in this tavern—a bar, a table, a door—and then your HTC Vive automatically detects where these things should go.

“It generates the layout of the room to make sure you don’t walk into that vase you have in the corner, or accidentally put your knee through the TV. To me, VR is an amazing new environment to do procedural generation in.”
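Cook’s tavern example can be sketched in a few lines of Python. This is only a toy under heavy assumptions: the room is a grid of floor cells, real-world obstacles are a set of blocked cells, and the function `place_items` is hypothetical. It uses no actual Vive or VR API, and a real system would also care about reachability, facing, and aesthetics. The designer lists what the scene needs; the placer finds spots that avoid whatever is really in your room.

```python
from typing import Dict, List, Set, Tuple

Cell = Tuple[int, int]           # (x, y) position on the floor grid
Item = Tuple[str, int, int]      # (name, width, depth) in grid cells


def place_items(width: int, height: int, blocked: Set[Cell],
                items: List[Item]) -> Dict[str, Cell]:
    """Greedily put each item at the first free spot, scanning row by row."""
    occupied = set(blocked)      # real-world obstacles count as taken
    layout: Dict[str, Cell] = {}
    for name, w, h in items:
        spot = next(
            ((x, y)
             for y in range(height - h + 1)
             for x in range(width - w + 1)
             if all((x + dx, y + dy) not in occupied
                    for dx in range(w) for dy in range(h))),
            None,
        )
        if spot is None:
            raise ValueError(f"no room for {name!r}")
        x, y = spot
        # Reserve the item's footprint so later items cannot overlap it.
        occupied.update((x + dx, y + dy) for dx in range(w) for dy in range(h))
        layout[name] = spot
    return layout


if __name__ == "__main__":
    vase = {(0, 0)}  # the vase in the corner of a 4x3 room
    tavern = [("bar", 2, 1), ("table", 1, 1), ("door", 1, 1)]
    print(place_items(4, 3, vase, tavern))
```

The same designer list produces a different tavern in every living room, which is the sense in which the game is “redesigned” to fit the space.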

Dreams of the Deep

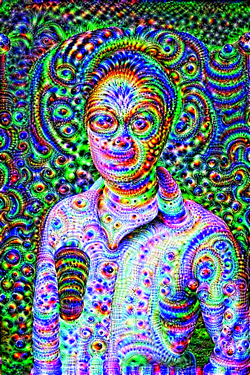

There are ways other than procedural generation and handcrafting to generate content. The most spectacular in recent months has been the frankly terrifying and hallucinatory images coming out of the Google Deep Dream project.

This project works by starting with a neural network. These are typically piles of 10–30 stacked layers of artificial neurons. The team starts training the neural network, using millions of examples, and then adjusts the network parameters gradually over time so that fidelity isn’t lost, but random noise is.

Each layer of the network extracts higher-level features than the previous one, until the final layer decides what the image looks like. So, the first layer might detect edges, then intermediate layers might detect recognizable shapes, while the final layers assemble them into complete interpretations. This is exactly how Google Photos works, too.

The reason these horror pictures have been going around, of people with hundreds of dogs’ eyes making up their faces, is that this particular neural network, called Deep Dream, has been fed data from ImageNet, a database of 14 million human-labeled images. But Google only uses a small sample of the database, which contains “fine-grained classification on 120 dog sub-classes.”

It’s the opposite of Mandelbrot’s images. Instead of seeing the same pattern as we go deeper into the image, the computer sees the same pattern everywhere it looks. Sadly, the pattern happens to be that of a dog.

Proc-Gen Media

Fiction book: Life, A User’s Manual

Written by Georges Perec, in line with the Oulipo manifesto, this book isn’t strictly speaking programmatic, but Perec wrote it in line with formulaic constraints that meant he had to write about a certain room, in a certain building, with certain people and objects interacting in every chapter. Perhaps because of that, it comes across as a weird jigsaw puzzle of a book. And somewhat long-winded.

Fact book: Philip M. Parker

Professor Philip M. Parker has patented a method that automatically produces books from a set template, which is then filled with data from Internet searches. He claims to have produced over 200,000 books, ranging from medical science to dictionaries about just one word, with most of them being print-on-demand only… he’s also started generating factual books for under-served languages, in collaboration with the Bill & Melinda Gates Foundation.

Music: Brian Eno

Back in 1996, Brian Eno used Intermorphic’s SSEYO Koan software to create his album Generative Music 1, which was in fact a piece of software itself. The music ran off a floppy disk and "improvised" within the 150 parameters that Eno had set to create a different track every time. He has continued to experiment throughout his life.

Film: WETA and Massive

The armies in the film adaptation of Tolkien’s trilogy were generated by a collaboration between WETA and a special effects firm called Massive, which created convincing representations of thousands of actors, unless you looked closely and noticed they all looked like Andy Serkis (joke).

Cartoon: Toy Story 3

It might seem old-school now, but Toy Story 3 used a ton of groundbreaking procedural generation techniques to animate large-scale scenes. The scene where the toys find themselves in a garbage incinerator? Most of the trash was procedurally generated from the other trash around it. The same goes for the plastic bags wafting through the wasteland, and for the scene in which Barbie tears up Ken’s vintage clothing collection.

Game: No Man's Sky

Yes, it’s a game, but Hello Games’s No Man’s Sky plans to procedurally generate an entire universe, from the flora and fauna, to the planets and stars. Then it generates alien landscapes and lets you land on them and walk around. Amazing.