The coolest tech demos from SIGGRAPH 2017

See the cutting edge of computer graphics and simulation.

SIGGRAPH is one of my favorite yearly events: five days of computer graphics and interactivity demonstrations that often baffle me on a technical level, but never fail to be extremely cool. Tell me you don't want to see what a 'multi-scale model for simulating liquid-hair interactions' looks like. You can't, because you definitely do. Everyone wants to.

The research papers and demos I've collected here come from the latest conference, which ended just a few days ago (though some of these submissions were originally posted a few months ago). Not everything at SIGGRAPH has to do with gaming or real-time graphics specifically, but I've included anything I thought was cool. Just because our games don't currently include 'multi-species simulation of porous sand and water mixtures' doesn't mean they won't in the future. In fact, I demand that they do. I'm tired of sub-par porous sand and water mixtures in games and I think it's about time someone said it.

Anisotropic elastoplasticity for cloth, knit and hair frictional contact

Chenfanfu Jiang, Theodore Gast, Joseph Teran

Fabric is made of math. We all knew this. But this is some of the most impressive fabric math I've ever seen, especially when you get to the simulations of knitted fabric at the end.

ClothCap

Gerard Pons-Moll, Sergi Pujades, Sonny Hu, Michael J. Black

Sometimes fabric is made of math captured from the real world, as with ClothCap, which uses a 4D scanner to capture clothing in motion, and then segments the garments and allows for all their folding and bunching to be mapped onto 3D models.

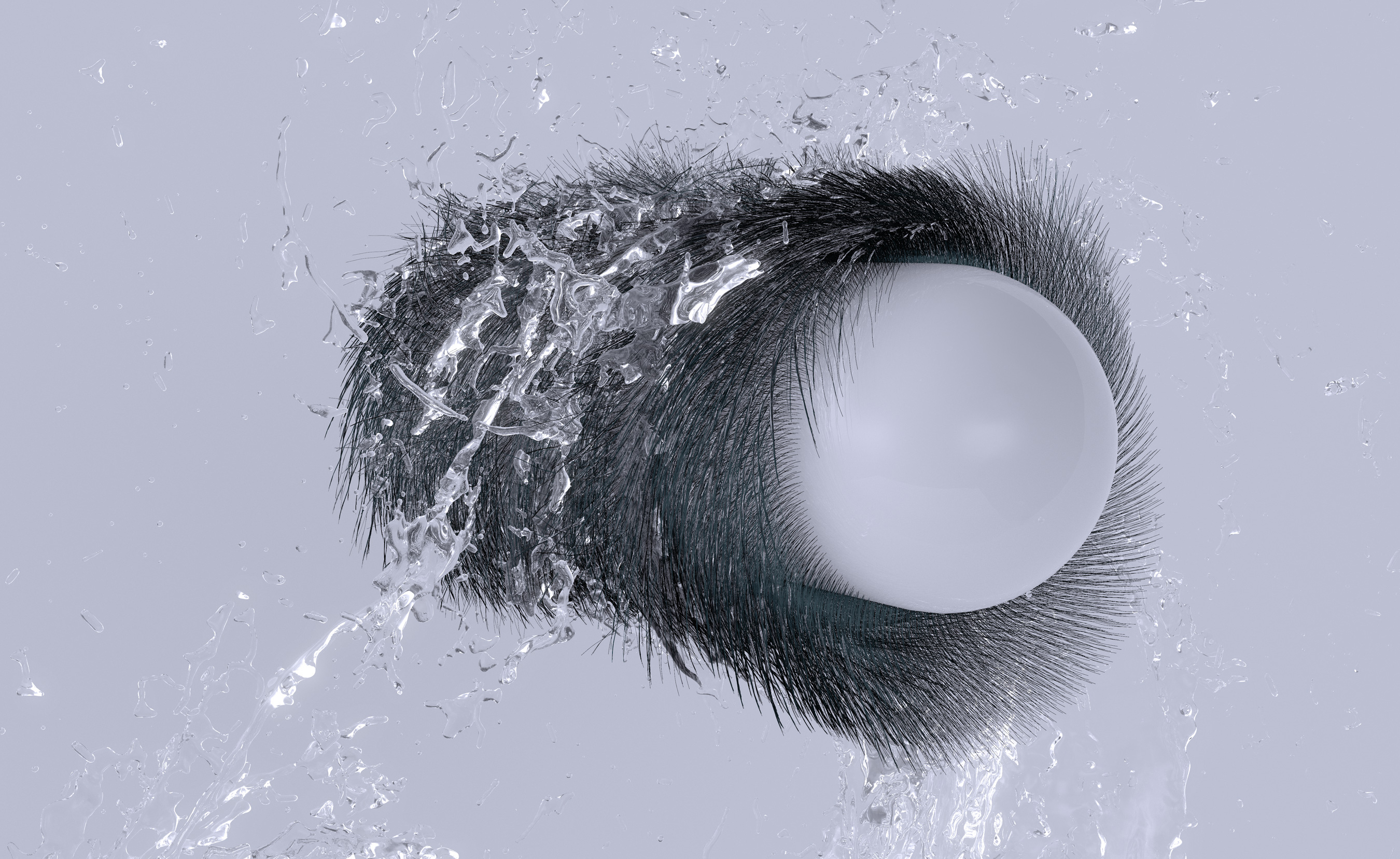

Multi-scale model for simulating liquid-hair interactions

Yun (Raymond) Fei, Henrique Teles Maia, Christopher Batty, Changxi Zheng, and Eitan Grinspun


A reminder of just how much stuff there is to simulate in the physical world, this open-source project simulates the way water clings to and drips off of hair. Skip to near the end for the best part of the demo, a shaking 'dog' (actually a nub covered with hair) spraying water at what are presumably a bunch of off-screen nub people sitting by the pool.

Multi-species simulation of porous sand and water mixtures

Andre Pradhana Tampubolon, Theodore Gast, Gergely Klár, Chuyuan Fu, Joseph Teran, Chenfanfu Jiang, and Ken Museth

I promised multi-species simulation of porous sand and water mixtures in the introduction, and I wasn't going to let you down. This is one of the most visually impressive demonstrations from this year's event, and it's sand getting wet.

DeepLoco

Xue Bin Peng, Glen Berseth, KangKang Yin, Michiel van de Panne

Remember when 'pathfinding' consisted of a bundle of pixels constantly getting stuck on rock sprites? We've come a long way. DeepLoco proposes 'dynamic locomotion skills using hierarchical deep reinforcement learning.' In other words, check out this simulation of a legged torso kicking a soccer ball around.

Phase-functioned neural networks for character control

Daniel Holden, Taku Komura, Jun Saito

In another movement-based paper, 'phase-functioned neural networks' are put to use to generate smooth and realistic walking and running animations in real time. Impressive stuff, though I wonder if I'll miss hopping up the sides of sheer cliffs Skyrim-style when advanced character simulation like this is the norm.

A deep learning approach for generalized speech animation

Sarah Taylor, Taehwan Kim, Yisong Yue, Moshe Mahler, James Krahe, Anastasio Garcia Rodriguez, Jessica Hodgins, Iain Matthews

Thanks to Mass Effect: Andromeda, the topic of speech animation has been popular this year. In massive RPGs, how do you animate hours upon hours of dialogue? The answer is: procedurally, and the results aren't always great. But automated speech animation is coming along, as evidenced by this Disney project to generate believable lip-syncing from audio alone. Another possible use: animating player characters in VR in real time as the players speak.

Animating elastic rods with sound

Eston Schweickart, Doug L. James, Steve Marschner

This is my favorite demo of the bunch. The somewhat confusing title seems to suggest they're using sound to create animations, but the research paper is about the opposite: simulating sounds. Imagine a future with no more canned sound effects, but actual simulation based on materials colliding and interacting. This method deals specifically with 'deformable rods'—a classic rod subgroup—and the many sounds they can be made to produce with "a generalized dipole model to calculate the spatially varying acoustic radiation."

You can find out more about SIGGRAPH's technical papers and watch the livestreamed sessions from this year's conference online. The Technical Papers Fast Forward offers a quick look at these projects and others.

Tyler grew up in Silicon Valley during the '80s and '90s, playing games like Zork and Arkanoid on early PCs. He was later captivated by Myst, SimCity, Civilization, Command & Conquer, all the shooters they call "boomer shooters" now, and PS1 classic Bushido Blade (that's right: he had Bleem!). Tyler joined PC Gamer in 2011, and today he's focused on the site's news coverage. His hobbies include amateur boxing and adding to his 1,200-plus hours in Rocket League.