Processor Architecture 101 – the heart of your PC

A short primer on CPU architecture fundamentals.

I was recently writing about AMD's new Zen architecture (now Ryzen), and Intel's Kaby Lake chips are coming out soon. I throw around a lot of technical jargon that can come off sounding like insane gobbledygook if you don't have a Master's in computer engineering. In fact, one of my fellow editors highlighted a paragraph, sent it over to me in Slack, and basically said, "I have no idea what this means. Can you give me the condensed version?" The ultra-condensed version is that the new architecture is better. But that's a bit too simplistic. The "why" is the important part. And to make that "why" easier to understand, I decided to put together a short primer on how CPUs work.

The whole point of any processor is to get stuff done. The faster you can get work done, the better. The problem is that all the electricity flowing around inside a chip has to take a long and winding road from start to finish, which can slow things down—at least insofar as the speed of light is 'slow.'

Consider for a moment that light in a vacuum travels 3.0×10^8 meters per second, while each clock cycle on a 4GHz processor lasts just 0.25 nanoseconds, or 2.5×10^-10 seconds. In that time, light in a vacuum covers 3.0×10^8 × 2.5×10^-10 = 0.075m, or 7.5cm. Electricity moving through a wire (the energy wave) is slower than light in a vacuum, about 0.7 to 0.9c depending on the material used, so in a single clock cycle on a 4.0GHz processor it can travel only about 5-7cm down a wire. Given that chips are around 1-2cm on a side, that might not seem too bad, but there are also delays inside the chip to deal with, as charges propagate through billions of transistors and voltages stabilize. Timing considerations are way beyond the scope of this article, but here's a 256-page book that digs deeper into such matters.
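The cycle-time arithmetic above is easy to check. Here is a minimal Python sketch, assuming the signal moves at a constant fraction of c (the 0.7 to 0.9 range comes from the paragraph; the helper names are made up for illustration):

```python
C = 3.0e8  # speed of light in a vacuum, meters per second


def cycle_time_s(clock_hz: float) -> float:
    """Length of one clock cycle, in seconds."""
    return 1.0 / clock_hz


def distance_per_cycle_cm(clock_hz: float, velocity_factor: float = 1.0) -> float:
    """Centimeters a signal covers in one cycle when moving at velocity_factor * c."""
    return C * velocity_factor * cycle_time_s(clock_hz) * 100


clock = 4.0e9  # 4GHz
print(f"cycle time: {cycle_time_s(clock) * 1e9:.2f}ns")
for vf in (1.0, 0.9, 0.7):
    print(f"at {vf:.1f}c: {distance_per_cycle_cm(clock, vf):.2f}cm per cycle")
```

The real budget is smaller still, because the signal also has to pass through transistors that take time to switch.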

Basically, modern processors are clocked so fast that it's extremely difficult to make everything work; it's practically voodoo. This is a peek under the witch's hat to talk a bit about how the magic happens. I'm going to briefly discuss the core concepts for processor pipelines, branch prediction, superscalar designs, and a few other items of interest, giving a high-level overview of what each means and why it's useful.

Disclaimer: processors are really complex. Most processors have hundreds if not thousands of extremely bright engineers working on them. Massive dissertations have been written on how processors work. A short introduction can't possibly cover all the details, but if you're still scratching your head over something after reading this primer, ask in the comments or send me an email.

The classic assembly line analogy

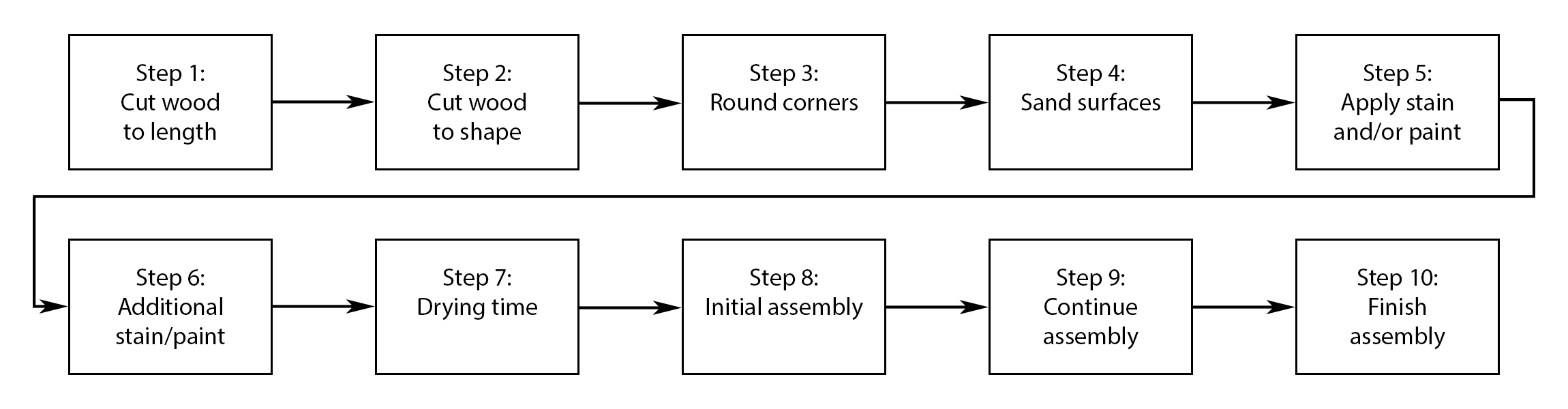

No one can do everything at once, and processors are the same. Things have to be ordered with a clear start and finish, with interim stages that each do part of the work. This is what is known as the processor pipeline, and it has some well-known similarities to a factory assembly line. Understanding how assembly lines improve efficiency is one element to understanding how processors work. (Note: don't get hung up on the numbers or other factors in this analogy, as they're merely for illustration. The core idea is what's important, and we could use clothing, cars, etc. as the product being produced. I'm using furniture.)

Imagine a factory putting together furniture. In the 'good old days,' a bunch of people would hand-build each piece and it might take a day or two. Then they'd build the next piece and so on. Each part was effectively hand-made, which meant no two tables or chairs were exactly alike. Hypothetically, let's say it takes 20 combined hours for a team of five people to assemble one complete china cabinet, or four hours from start to finish, so one eight-hour day of work for the team yields two cabinets. Doubling the number of people working on a single cabinet would be difficult, as they would start bumping into each other and create other problems, but you could have two teams working in parallel to build four cabinets per day. In other words, ten people yield four cabinets per day.


One of Henry Ford's insights in the automotive world was that the process of building a car could be greatly streamlined with an assembly line, which is how most factories now run. Instead of building one piece of furniture at a time, start to finish, use a series of assembly stages, such as 'cut the wood to length/shape,' 'round corners and sand surfaces,' 'apply paint/stain,' and 'assemble all pieces.' Instead of having teams of five working on each step together, two people can handle each step.

Make each step take the same amount of time and labor, giving five steps that take 0.8 hours each. Stage one does the first step, then the parts of the cabinet move on to stage two while stage one starts a new cabinet. Next, stage two finishes and moves on to the second cabinet, stage one starts a third cabinet, and stage three works on the first cabinet. And so on, until all five stations are working on five cabinets in different states of assembly.

Ten people are working on building furniture, just like before. It still takes four hours after starting for the first cabinet to be finished, but once the full assembly line is active, production is greatly improved. Now ten people produce ten cabinets per day instead of four. Or maybe you have ten stages with one person on each stage taking 0.4 hours, for a rate of twenty cabinets per day.

In some modern assembly lines, there might be hundreds of 'stages' (many handled by robots/machinery). Imagine 480 stages that each take one minute. The first unit has to go through every stage and requires eight hours, but the second is finished one minute later, and the factory can churn out 480 units daily. Of course, a 480-stage assembly line also requires a lot of other extras. There might be a conveyor system, workstations for 480 stages, and supply lines for whatever parts are needed at each stage. Assembly lines require more room, but the efficiency gains make them worthwhile.
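The throughput numbers in the furniture example follow from two rules: the first unit has to pass through every stage, and after that one unit leaves the line per stage time. A small Python sketch of that arithmetic, working in minutes so the numbers stay exact (the function names are illustrative, not from any real tool):

```python
WORKDAY_MIN = 8 * 60  # one eight-hour working day, in minutes


def unpipelined_per_day(teams: int, minutes_per_unit: int) -> int:
    """Each team builds one unit at a time, start to finish."""
    return teams * (WORKDAY_MIN // minutes_per_unit)


def first_unit_latency_min(stages: int, stage_min: int) -> int:
    """The first unit must pass through every stage before it is finished."""
    return stages * stage_min


def pipelined_per_day(stage_min: int) -> int:
    """Once the line is full, one unit leaves it every stage_min minutes."""
    return WORKDAY_MIN // stage_min


def finish_times_min(n_units: int, stages: int, stage_min: int) -> list[int]:
    """Completion time of each unit when a new unit enters every stage_min minutes."""
    first = first_unit_latency_min(stages, stage_min)
    return [first + i * stage_min for i in range(n_units)]


print("two teams of five, 4h per cabinet:", unpipelined_per_day(2, 240), "per day")
for stages, stage_min in [(5, 48), (10, 24), (480, 1)]:
    hours = first_unit_latency_min(stages, stage_min) / 60
    print(f"{stages} stages: first unit after {hours:g}h, "
          f"then {pipelined_per_day(stage_min)} per day")
```

Latency (time to the first unit) barely changes, while throughput is set entirely by how short each stage is.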

There is a potential problem, however: what if the factory produces multiple types of furniture, like cabinets, tables, chairs, desks, and beds? There are similarities in how each would be assembled, but they don't all use the same steps or parts, so the assembly line has to be reconfigured when changing to a new type of furniture. The hypothetical factory starts on a cabinet, there's a one-day ramp-up time, and then it churns out 480 cabinets per day. Then management decides that making tables is a better bet right now, so all the cabinet parts are swapped for table parts, stages are reconfigured, and there's another one-day ramp-up time.

In a worst-case scenario where only one of each furniture type was made before switching, this would be terribly inefficient. It's possible to work around this by doing batches, though: "Make 4,000 cabinets, then retool and make 4,000 tables, then retool and make 16,000 chairs, etc." Reconfiguring each stage can also start as soon as the last part of the batch has left it, which would reduce the one-day ramp-up time. But this is all hypothetical anyway, so let's move on.
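Batching helps because the one-day ramp-up is paid once per batch, no matter how big the batch is. A rough Python model, assuming total time is simply the ramp-up plus the batch size divided by the 480-per-day rate from the example (it ignores the overlap a real line would get by reconfiguring early, and the function name is hypothetical):

```python
def batch_efficiency(batch_size: int, per_day: int = 480, ramp_days: float = 1.0) -> float:
    """Fraction of elapsed time spent producing at full speed for one batch."""
    producing_days = batch_size / per_day
    return producing_days / (ramp_days + producing_days)


for batch in (1, 480, 4_000, 16_000):
    print(f"batch of {batch:>6}: {batch_efficiency(batch):.1%} of the time at full rate")
```

A batch of one spends almost all of its time ramping up, while a batch of 16,000 spends about 97 percent of its time at full speed.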

There are several issues apparent in our furniture assembly line at this point. First, how many stages can you break the process into before it becomes ludicrous? More complex assembly lines (e.g., automobiles) might have hundreds of stages, but a table with four legs is a different matter—a few dozen stages would probably be sufficient, although there would likely also be a holding area where stained/painted pieces would wait for a few hours to dry. Reconfiguring for different types of furniture is also problematic as some items are easier to build—tables versus cabinets, for instance.

Instead of having just one assembly line in a factory doing one type of furniture, why not have five or ten production lines, each tailored to a different complexity of furniture? And each line could be tuned to meet market demand—so the factory might do four times as many chairs per day as tables, but a similar number of tables and cabinets. If there's a sudden spike in demand for one particular item (say, desks), all of the appropriate lines could switch to that furniture type for a while.

This analogy is sounding incredibly messy, even in my head, but once I bring in the CPU equivalent a lot of this will make more sense.

The processor pipeline equivalent

All of this assembly line stuff might sound strange when talking about processor architectures, but while assembly lines probably aren't going to switch production types on a regular basis, the way CPUs handle instructions actually has a lot in common with this hypothetical factory.

CPUs used to do basically one instruction at a time (in the 386/286 era and earlier), and the whole chip was far less complex. Because each instruction used all resources, chips were often limited by the most complex instructions that could be run, and some complex instructions might take many clock cycles. Clock speeds topped out at 30-50MHz in the 386 era, and 12-16MHz in the 286 era. The 486 was the first tightly pipelined x86 processor, which dramatically improved clock speed scaling and overall performance compared to the 386.

(Note that technically earlier chips including the 8086 through 386 were 'loosely' pipelined, which means there was overlap in the fetching, decoding, and execution of instructions. Tight pipelining in the 486 and later chips generally means each stage is designed to take the same amount of time and reduces the reliance on buffers and other techniques. Combined with a fast local data/instruction cache, the 486 effectively doubled the performance of the 386 at the same clock speed.)

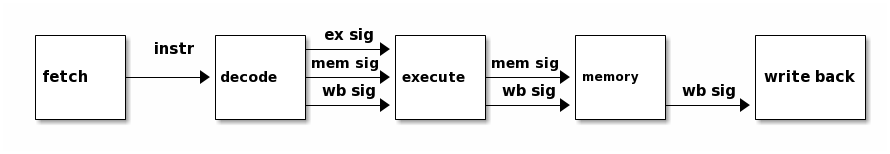

In today's processors, like in a factory assembly line, handling each instruction now happens in stages. The classic 5-stage pipeline consists of fetch, decode, execute, memory, and write-back stages. Each of those stages can be subdivided into additional steps, which is precisely what modern processors like Intel's Kaby Lake and AMD's Ryzen do—along with every other Intel/AMD processor going back at least 20 years. The complexities of modern designs and the competitive market make it difficult to compare implementations of AMD and Intel directly, but the fastest x86 processors typically have in the neighborhood of 20 stages, while some of the slower models (Intel's Atom and AMD's Puma/Jaguar) might have closer to 10 stages.
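To visualize the classic five-stage pipeline, here is a small Python sketch that prints which stage each instruction occupies on each cycle. It assumes an ideal pipeline with no stalls, where a new instruction enters fetch every cycle; the stage abbreviations are the common textbook ones and the function name is illustrative:

```python
STAGES = ["IF", "ID", "EX", "MEM", "WB"]  # fetch, decode, execute, memory, write-back


def pipeline_diagram(n_instructions: int) -> list[str]:
    """One text row per instruction showing the stage it occupies on each cycle."""
    total_cycles = n_instructions + len(STAGES) - 1
    rows = []
    for i in range(n_instructions):
        cells = []
        for cycle in range(total_cycles):
            stage = cycle - i  # instruction i enters fetch on cycle i
            cells.append(STAGES[stage] if 0 <= stage < len(STAGES) else "")
        rows.append(f"i{i}: " + " ".join(f"{c:>3}" for c in cells))
    return rows


for row in pipeline_diagram(4):
    print(row)
```

Four instructions finish in 4 + 5 - 1 = 8 cycles instead of the 20 they would need one at a time, and once the pipeline is full, one instruction completes every cycle.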

Where CPU pipelines differ from assembly lines is that, unlike switching from one furniture type to another, microprocessors running code have frequent branch instructions that change what the processor is working on. Branches are important because programs are full of comparisons with the next step depending on the result. Every if-then-else statement like "if x < 5 then … else …" involves a branch or two. Every loop of code, like "for(i=0;i<1000;i++)", will have a branch at the end of the loop. Every function/subroutine call involves a branch at the start and end. Without branching instructions, our PCs wouldn't be able to do most of the tasks that make them useful.

The problem with pipelines is that each time a branch occurs, the pipeline can be forced to start from scratch. In a worst case where every fifth instruction is a branch, roughly half of the time a 5-stage pipeline would be empty or 'stalled.' But there are ways to work around this, the biggest being branch prediction. This is akin to knowing what comes next in a factory running batches of production, but there's no way to know with certainty the size of the current batch of instructions, or what the next batch will entail.
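The 'roughly half' figure can be reproduced with a crude model. This Python sketch assumes no branch prediction at all, that every non-branch instruction completes in one cycle, and that each branch leaves the pipeline empty for a fixed number of cycles; with every fifth instruction a branch and four bubbles per branch (one fewer than the five stages, a worst-case assumption), the pipeline does useful work only a little over half the time:

```python
def busy_fraction(instructions_per_branch: int, penalty_cycles: int) -> float:
    """Share of cycles that complete an instruction when every branch stalls the pipeline.

    Each group of `instructions_per_branch` instructions (ending in a branch)
    takes one cycle per instruction plus `penalty_cycles` empty cycles.
    """
    useful = instructions_per_branch
    return useful / (useful + penalty_cycles)


print(f"busy {busy_fraction(5, 4):.0%} of the time")
```

Branch prediction attacks the penalty term: a correct guess means no bubbles at all.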

Branch prediction in a nutshell

Branch instructions typically have two potential targets—either you take the branch or you don't. (There are other types of branches, but that's a more advanced topic.) When a branch comes down the pipeline, one option is to just wait until the 'execute' stage to find out whether a branch is taken or not. However, doing nothing is a waste, so instead of waiting, every time a branch instruction comes along, processors try to determine which of the two potential targets to take and then speculatively execute that code. This is known as branch prediction.

The difficulty with branch prediction is that if the CPU guesses wrong, the pipeline needs to be cleared of the speculative instructions and begin fetching and running the correct instructions. This adds complexity and delays, and it gets worse with a longer pipeline. If you have a five stage pipeline and have a branch misprediction, three or four cycles of instructions end up being wasted (the same as if the pipeline had just 'done nothing'), but in a 25-stage pipeline there might be 20-22 cycles/stages of invalid instructions. In other words, longer pipelines (more stages) make predicting branches accurately extremely important.

There are various forms of branch prediction (Wikipedia has a decent summary), the goal being to balance the accuracy of predictions against speed and storage requirements. There's even a recurring Championship Branch Prediction competition, run by JILP (the Journal of Instruction-Level Parallelism), to design the best branch predictor. Because branch prediction is so important, companies like Intel and AMD don't often provide much detail, but whatever the type of branch prediction, remember that it's still only part of the overall picture. To really determine CPU performance, you need to know the branch prediction accuracy, the branch misprediction penalty (number of cycles lost if the pipeline needs to be restarted), and the processor clock speed.
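Intel and AMD don't publish their predictors, but one of the simplest textbook schemes, a saturating counter, shows the basic idea. This Python sketch is a teaching example, not any shipping CPU's design: it tracks a single branch, where a 1-bit counter simply repeats the last outcome, while a 2-bit counter needs two wrong guesses in a row to change its mind:

```python
def predictor_accuracy(outcomes: list[bool], counter_bits: int) -> float:
    """Accuracy of an n-bit saturating counter predicting one branch."""
    max_state = (1 << counter_bits) - 1
    threshold = (max_state + 1) // 2  # states at or above this predict "taken"
    state = threshold                 # start in the weakest "taken" state
    correct = 0
    for taken in outcomes:
        prediction = state >= threshold
        correct += prediction == taken
        # Move one step toward the actual outcome, saturating at both ends.
        state = min(state + 1, max_state) if taken else max(state - 1, 0)
    return correct / len(outcomes)


# A ten-iteration loop run three times: its closing branch is taken nine times
# (jump back to the top), then not taken once (fall out of the loop).
loop_branch = ([True] * 9 + [False]) * 3
for bits in (1, 2):
    print(f"{bits}-bit counter: {predictor_accuracy(loop_branch, bits):.1%}")
```

The 2-bit version survives the single loop exit without forgetting that the branch is usually taken, so it mispredicts once per pass through the loop instead of twice (90 percent versus about 83 percent here).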

Of course, when you're using a computer, you won't actually 'feel' pipeline stalls—everything happens too fast. A 24-cycle delay might sound like a lot (and it is), but at 4GHz that's only 6ns. Instead, it's the overall performance that gets affected by all of this stuff. Some hypothetical examples here should help explain this better.

Assume the branch instruction rate is 10 percent (which is pretty typical), meaning 10 percent of all instructions that a CPU has to handle are some type of branch instruction. A processor with a 20-stage pipeline, 16-cycle misprediction penalty, and 93 percent accurate branch prediction would be about 89 percent efficient—meaning, due to branch mispredicts that occur, it loses 11 percent of its performance potential. Improving the same chip to a 97 percent accurate branch predictor would increase efficiency to 95 percent. In contrast, a processor with a 25-stage pipeline, 21-cycle misprediction penalty, and only 90 percent accurate branch prediction would only be 79 percent efficient.

All other things being equal (which they never are in the real world), if the first chip ran at 4.0GHz, the second chip would deliver about the same performance at 3.7GHz, and the third chip would need to run at 4.5GHz to match the first chip.
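The efficiency figures above can be reproduced with a simple model in which each mispredicted branch throws away a fixed number of cycles, so the fraction of performance lost is branch rate × misprediction rate × penalty. A Python sketch of that arithmetic (the model is a simplification inferred from the numbers in the text, and the names are illustrative):

```python
def efficiency(branch_rate: float, accuracy: float, penalty_cycles: int) -> float:
    """Fraction of ideal performance kept after branch mispredictions."""
    lost = branch_rate * (1 - accuracy) * penalty_cycles
    return 1 - lost


chips = {
    "20 stages, 93% accurate": efficiency(0.10, 0.93, 16),
    "20 stages, 97% accurate": efficiency(0.10, 0.97, 16),
    "25 stages, 90% accurate": efficiency(0.10, 0.90, 21),
}
baseline = chips["20 stages, 93% accurate"]
for name, eff in chips.items():
    # Clock speed needed to match the first chip running at 4.0GHz.
    matching_ghz = 4.0 * baseline / eff
    print(f"{name}: {eff:.1%} efficient, matches chip one at {matching_ghz:.2f}GHz")
```

A more conventional way to model this adds the lost cycles to the cycles per instruction, giving an efficiency of 1 / (1 + lost); that yields about 90, 95, and 83 percent for the three chips, which changes the details but not the ranking.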

Superscalar, or going wide

Remember when I talked about having multiple assembly lines that each do a different type of product? Processors do that as well; among x86 processors, it started with Intel's Pentium (P5). The basic idea is that instead of executing a single pipeline of instructions, you have multiple pipelines and effectively double throughput (at least in ideal conditions). Having multiple pipelines in a processor makes the design a superscalar architecture, and (again, since the late 90s) most modern processors use this technique.

Intel's P5 architecture duplicated most of the integer execution pipeline, with two paths, U and V. U could handle all instructions while V only worked on relatively common simple instructions—and there was also a separate floating point pipeline. (Note: most computers use a lot of integer calculations, meaning whole numbers like -2, -1, 0, 1, 2, etc. Floating point numbers with a decimal point, like 3.14159, are more complex, but they're used in a lot of scientific calculations, as well as in graphics.) In many cases, the P5 could execute two instructions per clock cycle.

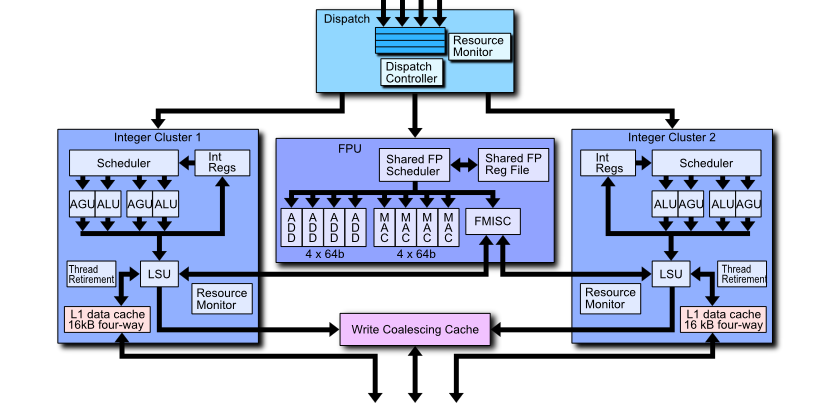

More recent CPU architectures include even more pipelines, and these have become even more specialized in terms of which instructions they handle—WikiChip has a nice diagram of Skylake as an example. Or here's a slightly older block diagram of AMD's Bulldozer:

In order to feed all of the pipelines, processors started fetching multiple instructions each cycle. Pentium (P5) processors fetch two instructions per clock, making them a '2-wide' architecture. The P6 architecture (Pentium Pro through Pentium III) was a 3-wide design, while its successor, NetBurst (Pentium 4/Pentium D), was 2-wide again, but far more complex in many other areas and designed for higher clock speeds. The Core series and AMD's Bulldozer family were 4-wide designs that stuck around for a long time, but Skylake and now AMD's Ryzen are both moving to (sort of) 6-wide designs.

The difficulty with going wider is that instructions in each pipeline need to be independent of each other. If you have three instructions, C=A+B, F=D+E, and G=C+F, the third instruction requires the results of the first two operations, so it can't run alongside them. Checking for instruction dependencies and determining what order to run the instructions is yet another task most processors perform, and the complexity grows rapidly with width, roughly with the square of the number of instructions examined together. The result is that sometimes pipelines end up with an empty 'bubble' where no work is assigned.
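Here is a minimal Python sketch of that dependency check, using the C=A+B, F=D+E, G=C+F example. It assumes an in-order machine that packs instructions into same-cycle groups and only looks for true (read-after-write) dependencies; real out-of-order cores also handle other hazards and use register renaming. Because each new instruction is compared against every instruction already in its group, the number of checks grows roughly with the square of the width. The `Instr` type and `issue_groups` function are hypothetical names:

```python
from typing import NamedTuple


class Instr(NamedTuple):
    dest: str               # register written
    srcs: tuple[str, ...]   # registers read


def issue_groups(program: list[Instr], width: int) -> list[list[Instr]]:
    """Greedily pack instructions, in order, into groups that can issue in one cycle.

    A new group starts when the current one is full, or when an instruction
    reads a register written by an instruction already in the current group.
    """
    groups: list[list[Instr]] = []
    current: list[Instr] = []
    for ins in program:
        written = {i.dest for i in current}
        if len(current) == width or any(s in written for s in ins.srcs):
            groups.append(current)
            current = []
        current.append(ins)
    if current:
        groups.append(current)
    return groups


program = [Instr("C", ("A", "B")), Instr("F", ("D", "E")), Instr("G", ("C", "F"))]
for cycle, group in enumerate(issue_groups(program, width=4)):
    print(f"cycle {cycle}:", [f"{i.dest}={'+'.join(i.srcs)}" for i in group])
```

On a 4-wide machine, these three instructions need two cycles and fill only 3 of the 8 available slots, which is the 'bubble' problem in miniature.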

For any given superscalar architecture, there will be an overall typical efficiency. A 4-wide architecture might on average only fill three of the four execution slots, making it 75 percent efficient. But the same basic design with a 3-wide architecture wouldn't suddenly be 100 percent efficient; instead, it might be 85 percent efficient. Even a 2-wide architecture wouldn't be 100 percent efficient, but it's easier to fill a few execution slots in a superscalar design than it is to fill many. For most tasks there's a limit to how wide an architecture can go before it sees no more benefit, and 6-wide is likely close to that practical limit with today's technology.

Simultaneous Multi-Threading to the rescue

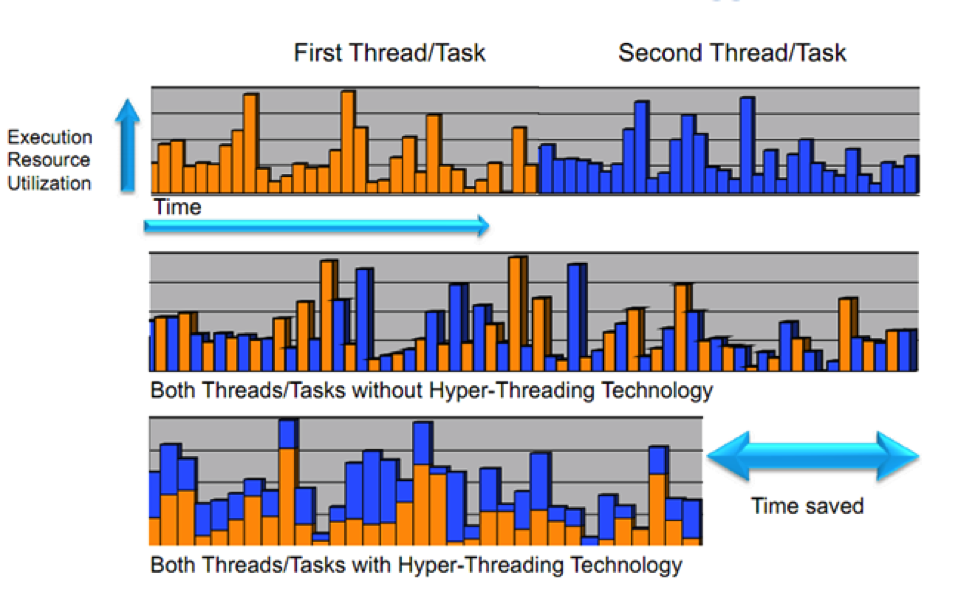

Dependencies are a real-world problem: superscalar processors basically never fill every execution slot on each cycle because of them, and searching for dependencies among instructions is complex. One potential workaround is to include instructions from more than one stream (thread) of instructions, and that is also the one way to guarantee instructions are completely independent: use instructions from different threads.

Think of it like browser tabs: what happens in your PCGamer.com tab has nothing to do with what's going on in your banking tab. Or think of applications, where the instructions coming from Chrome are completely independent of the instructions coming from Slack, and both are also independent of Steam, which is also independent of VLC, etc. Simultaneous Multi-Threading (SMT) allows a superscalar processor core to pull from two independent program threads in order to fill up all the available pipelines.
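A toy Python model shows why mixing threads helps. It assumes a hypothetical 4-wide core and invented per-cycle counts of ready, independent instructions for two threads; it ignores leftover work carrying over between cycles and any fighting over shared resources, so treat it as an illustration rather than a simulation:

```python
WIDTH = 4  # execution slots per cycle in this hypothetical core

# Invented counts of ready, independent instructions each thread offers per cycle.
thread_a = [2, 3, 1, 4, 2, 1, 3, 2]
thread_b = [1, 2, 3, 1, 2, 3, 1, 2]


def slot_utilization(*threads: list[int]) -> float:
    """Share of execution slots filled when the core issues from all given threads."""
    cycles = len(threads[0])
    filled = sum(min(WIDTH, sum(t[c] for t in threads)) for c in range(cycles))
    return filled / (WIDTH * cycles)


print(f"thread A alone: {slot_utilization(thread_a):.0%}")
print(f"thread B alone: {slot_utilization(thread_b):.0%}")
print(f"A and B with SMT: {slot_utilization(thread_a, thread_b):.0%}")
```

Neither thread runs any faster here; the core simply finishes more total work per cycle, because one thread's empty slots get filled by the other.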

Intel first introduced SMT with their Pentium 4 line and called it Hyper-Threading. The Core 2 processors then shipped without SMT, but Core i7 brought it back. AMD's Ryzen will be their first SMT processor. Again, there's compromise involved, using transistors to support SMT instead of something else, but with all the 'easy' performance gains already used, SMT is one more tool in the architecture's utility belt of tricks.

SMT isn't a magical fix for performance. Each core has to share resources between two threads, which isn't always a good thing. There are times where SMT causes a (slight) drop in performance, for example if two threads end up fighting for the same resources. SMT also doesn't do much when a system isn't running lots of demanding threads (programs or portions of a program), as there may not be enough work to fill up all the pipelines. However, in many situations SMT can be beneficial, and Intel has been using it with reasonable success since around 2009 on all their high-end parts.

Somewhat related to SMT is the number of CPU cores, but this is pretty straightforward. Early on, I mentioned doubling the number of people working on assembling furniture by having two teams instead of one. This is the equivalent of a dual-core processor, where each 'core' is a team of five people in this case. For actual processors, take all the pipeline and buffers and add some gate logic to have the cores talk with each other, and the potential performance improves linearly with core count. (In practice, there's a limit to how many cores you can add before you hit scaling limits, but again that's beyond the scope of this article.)

Putting it all together

Okay, I admit, this wasn't really brief or simple. There are a ton of other factors that also affect performance that I haven't even mentioned. Cache sizes, the type of cache (L1/L2/L3 as well as the set associativity), and cache latency are important. In-order vs. out-of-order execution, bus interface, memory technology, and various buffers like the reorder buffer, TLB and BTB all impact performance.

The main lesson here is that pipelines are a big part of allowing CPUs to run at higher clock speeds, and branch prediction helps to mitigate the problems associated with longer pipelines. All other aspects being equal, a processor with a 5-stage pipeline won't clock nearly as high as one with a 20-stage pipeline. But that doesn't mean all processors need to have the same complexity.
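A common back-of-the-envelope model explains why deeper pipelines clock higher: the work for one instruction takes a fixed amount of logic delay, splitting it into N stages divides that delay by N, but each stage adds a fixed overhead for the latches that hold results between stages. The delay numbers in this Python sketch are made up purely for illustration:

```python
LOGIC_DELAY_NS = 5.0      # hypothetical total logic delay for one instruction
LATCH_OVERHEAD_NS = 0.05  # hypothetical per-stage latch/register overhead


def max_clock_ghz(stages: int) -> float:
    """Highest clock the pipeline supports: one cycle must fit one stage plus overhead."""
    cycle_ns = LOGIC_DELAY_NS / stages + LATCH_OVERHEAD_NS
    return 1.0 / cycle_ns


for stages in (5, 10, 20, 40):
    print(f"{stages:>2} stages: {max_clock_ghz(stages):.2f}GHz")
```

In this model, quadrupling the depth from 5 to 20 stages raises the clock about 3.5x rather than 4x, and every extra stage also lengthens the branch misprediction penalty, one reason the gains eventually stop paying for themselves.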

All of the stuff involved in a 20-stage pipeline may allow for high clock speeds and high performance, but it also uses a lot of power. ARM processors as an example generally have shorter pipelines and fewer resources, trading clock speed and complexity for efficiency and simplicity—you wouldn't want to try running an i7-6950X in your smartphone.

Going back to my starting point, the paragraph that kicked off this article was this one from the Zen/Ryzen overview:

"Zen has an improved 'perceptron' branch prediction algorithm, now decoupled from the fetch stage, which again helps performance. We don't actually know the pipeline length for Zen (Bulldozer is estimated at a 20-stage pipeline), but better branch prediction can help mitigate having more stages. Notice for example that Intel's NetBurst pipeline was nominally a 20-stage design, which was 'too long' back in the day, and yet all of Intel's designs going back at least to Sandy Bridge are around the same length. And not to downplay these aspects, but Zen also features larger load, store, and retire buffers, along with improved clock gating."

I haven't covered every facet of that paragraph, but hopefully it now makes a little more sense. In effect, AMD has improved their branch prediction in Ryzen, using a form of neural network. That should help a lot relative to their previous architecture, and combined with other factors like a wider superscalar design and SMT, it's not too difficult to see how AMD might improve IPC by 40 percent. But higher IPC doesn't guarantee better performance, because clock speed still matters. We've also reached the point where making use of all the performance potential in a modern PC can be difficult, which is why Intel's mainstream parts have been relatively similar in performance for the past six years.
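AMD hasn't published how Zen's perceptron predictor works, but the general idea comes from academic work by Daniel Jiménez and Calvin Lin: keep a small set of weights, multiply them by the recent history of branch outcomes, and predict 'taken' if the sum is non-negative. The Python sketch below is a simplified, single-branch version of that idea; the class name, history length, and training threshold are arbitrary choices, not AMD's. On a strictly alternating branch, which a simple 2-bit counter gets right no better than about half the time, it quickly learns the pattern:

```python
class PerceptronPredictor:
    """Single perceptron over global branch history (simplified, illustrative)."""

    def __init__(self, history_len: int = 8, threshold: int = 16) -> None:
        self.weights = [0] * (history_len + 1)  # weights[0] is the bias weight
        self.history = [1] * history_len        # +1 = taken, -1 = not taken; newest first
        self.threshold = threshold              # keep training until |output| exceeds this

    def output(self) -> int:
        return self.weights[0] + sum(w * h for w, h in zip(self.weights[1:], self.history))

    def predict_and_train(self, taken: bool) -> bool:
        """Predict, learn from the real outcome, and return True if the guess was right."""
        y = self.output()
        prediction = y >= 0
        t = 1 if taken else -1
        # Train on a misprediction, or when the output was not yet confident.
        if prediction != taken or abs(y) <= self.threshold:
            self.weights[0] += t
            for i, h in enumerate(self.history):
                self.weights[i + 1] += t * h
        # Shift the actual outcome into the history.
        self.history = [t] + self.history[:-1]
        return prediction == taken


predictor = PerceptronPredictor()
pattern = [i % 2 == 0 for i in range(200)]  # taken, not taken, taken, ...
hits = [predictor.predict_and_train(taken) for taken in pattern]
print(f"first 10 branches: {sum(hits[:10])}/10 correct")
print(f"last 100 branches: {sum(hits[-100:])}/100 correct")
```

After a couple of early misses, the weight on the most recent outcome goes strongly negative ('do the opposite of last time'), and from then on every prediction is right.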

When you consider the factory and assembly line analogy, hopefully it gives you a better sense of the difficulties involved in making a processor. During the past 40 years, processors have gone from small, single-building factories with teams of a few people handling instructions to massive, sprawling complexes that are comparatively the size of a large city. These 'factories' now have to deal with roads and other infrastructure that was never a consideration when everything was housed in a single room. Real factories don't normally get that large, but the world of silicon microchips is a technological marvel.

Put in different terms, the relative performance of today's processors is close to a million times faster than the original 16-bit 8086 chip of the late 70s. Or if you want to think of it in terms of size, the original 8086 was manufactured on a 3.2 micron—3,200nm—process. It had around 29,000 transistors and measured just 33mm^2, or a bit more than 1/8 the size of a dime. In contrast, an i7-6950X is manufactured on a 14nm process, has around 3.2 billion transistors, and measures 246mm^2 (about the area of a dime, but in a square shape). The i7-6950X has around 110,000 times more transistors, and manufacturing Broadwell-E using the same technology as the 8086 would require a chip measuring nearly 2m per side—obviously impossible to do, but fun to visualize.
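The comparison above is easy to double-check. This Python sketch uses only the figures from the paragraph and assumes, as the 'nearly 2m' estimate appears to, that Broadwell-E's transistors would be packed at the 8086's transistor density:

```python
import math

# Figures quoted in the paragraph above.
I8086_TRANSISTORS = 29_000
I8086_AREA_MM2 = 33
BDW_E_TRANSISTORS = 3.2e9

transistor_ratio = BDW_E_TRANSISTORS / I8086_TRANSISTORS

# Lay out Broadwell-E's transistor count at the 8086's density (transistors per mm^2).
density_8086 = I8086_TRANSISTORS / I8086_AREA_MM2
area_needed_mm2 = BDW_E_TRANSISTORS / density_8086
side_m = math.sqrt(area_needed_mm2) / 1000  # square die; millimeters to meters

print(f"about {transistor_ratio:,.0f}x more transistors")
print(f"8086 density: {density_8086:.0f} transistors per mm^2")
print(f"die needed at that density: {side_m:.2f}m per side")
```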

It's becoming increasingly difficult to improve performance with our processors, but there are still plenty of ways forward. Kaby Lake and Zen are mere stepping stones along the path, and the next architectural updates are already well under way, with Cannonlake and Ice Lake (and maybe Coffee Lake as well) next on Intel's timeline, and Zen+ coming from AMD. Whatever the codename, you can rest assured that the new parts will be superscalar, hyper-pipelined, branch predicting monsters. I wouldn't have it any other way.

Jarred's love of computers dates back to the dark ages when his dad brought home a DOS 2.3 PC and he left his C-64 behind. He eventually built his first custom PC in 1990 with a 286 12MHz, only to discover it was already woefully outdated when Wing Commander was released a few months later. He holds a BS in Computer Science from Brigham Young University and has been working as a tech journalist since 2004, writing for AnandTech, Maximum PC, and PC Gamer. From the first S3 Virge '3D decelerators' to today's GPUs, Jarred keeps up with all the latest graphics trends and is the one to ask about game performance.