Nvidia CloudLight: using cloud computing to deliver faster lighting

Remember Microsoft making some noises about the Xbox One's cloud-rendering power? To partly offset the fact that they're putting a weaker GPU into their gaming slab than Sony is putting into the PlayStation 4, Microsoft is employing 300,000 servers to bolster processing of “latency-insensitive computation”. And now Nvidia has just announced CloudLight, something which sounds more than a little bit similar.

Nvidia has released a technical report on CloudLight on its website, outlining what it could mean for games. They call it a system “for computing indirect lighting in the Cloud to support real-time rendering for interactive 3D applications on a user's local device.” In practice, this means Nvidia can use GeForce GRID servers to compute a game engine's global illumination, easing the load on your gaming device of choice.

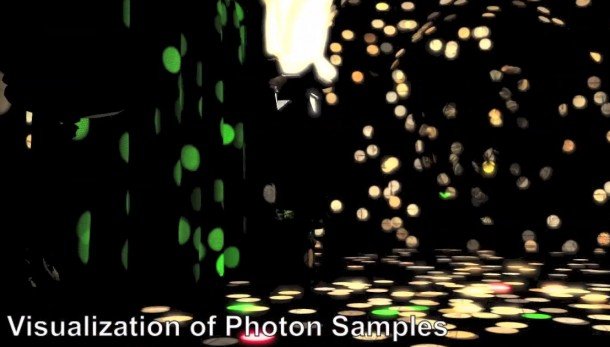

And that seems to be key here: CloudLight is being designed to run on tablets, laptops and desktop gaming rigs, adapting the way it works to the device you're using. A gaming rig, for example, would use photons to represent global illumination, which is more hardware-intensive than the irradiance maps Nvidia uses for tablets. Both would still rely on cloud-based computing to help light a level, but the higher-quality option would require a more powerful PC.

Lighting is a useful process to move to the cloud, because lag in lighting is far less noticeable than lag in other game elements such as sound or physics. In Nvidia's video presentation they show the impact of latency on moving light sources, and it's not until they get above the 500ms mark that the lag becomes an issue.

“We found, empirically, that only coarse synchronisation between direct and indirect light is necessary and even latencies from an aggressively distributed Cloud architecture can be acceptable,” the technical report states.

One of the big worries about this sort of tech is what happens when our connection to the cloud drops out, as it inevitably will - I'm looking at you [Dave's ISP redacted]. Nvidia claims that as indirect illumination is view-independent, it's robust enough to stand up against short network outages. “In the worst case,” the report claims, “the last known illumination is reused until connectivity is restored, which is no worse than the pre-baked illumination found in many game engines today.”
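To illustrate the fallback the report describes, here's a minimal Python sketch, assuming a client that simply caches whatever indirect lighting the server last sent and keeps rendering with it. The names `IrradianceFrame`, `IndirectLightingClient` and `sequence` are hypothetical and don't come from Nvidia's report; this shows only the "reuse the last known illumination" idea, not how CloudLight actually streams or applies its lighting.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class IrradianceFrame:
    """Hypothetical container for one batch of indirect lighting sent by the server."""
    sequence: int       # increasing update number, used to spot stale packets
    data: List[float]   # placeholder for the actual lighting data


class IndirectLightingClient:
    """Hypothetical client that keeps the most recent indirect lighting it has received."""

    def __init__(self) -> None:
        self._last_known: Optional[IrradianceFrame] = None

    def on_server_update(self, frame: IrradianceFrame) -> None:
        # Only accept newer updates, so a late, out-of-order packet
        # never replaces more recent lighting.
        if self._last_known is None or frame.sequence > self._last_known.sequence:
            self._last_known = frame

    def lighting_for_render(self) -> Optional[IrradianceFrame]:
        # Connected or not, render with the last lighting received. During an
        # outage no updates arrive, so this behaves like static, pre-baked lighting.
        return self._last_known


if __name__ == "__main__":
    client = IndirectLightingClient()
    client.on_server_update(IrradianceFrame(sequence=1, data=[0.2, 0.3]))
    client.on_server_update(IrradianceFrame(sequence=2, data=[0.25, 0.35]))
    # The connection drops here: nothing new arrives, so frame 2 keeps being used.
    print(client.lighting_for_render().sequence)  # prints 2
```

The design choice is deliberately dumb: because indirect light doesn't depend on where the camera is pointing, stale lighting still looks plausible, so the client needs no prediction or interpolation to survive a short dropout.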

It sounds like a rather neat way for Nvidia to get around their current hardware's limitations when it comes to processing global illumination, especially for rigs with weaker graphics hardware. But quite what it's going to be like for developers to code for - and whether those Nvidia cloud services will still be serving your games a few years down the line - is still up for debate.


I've got my own questions for Nvidia about this, but what would you like to know about CloudLight?

Dave has been gaming since the days of Zaxxon and Lady Bug on the ColecoVision, and code books for the Commodore VIC-20 (Death Race 2000!). He built his first gaming PC at the tender age of 16, and finally finished bug-fixing the Cyrix-based system around a year later. When he dropped it out of the window. He first started writing for Official PlayStation Magazine and Xbox World many decades ago, then moved onto PC Format full-time, then PC Gamer, TechRadar, and T3 among others. Now he's back, writing about the nightmarish graphics card market, CPUs with more cores than sense, gaming laptops hotter than the sun, and SSDs more capacious than a Cybertruck.