Intel's chip foundry keeps losing billions of dollars but CEO Gelsinger says this is it, this year is 'the trough'

Internal restructuring and foundry investments are the first steps back to profitability.

As part of Intel's internal reshuffling, which separates its chip-making facilities into Intel Foundry and everything else into Intel Products, the company filed a Form 8-K with the United States Securities and Exchange Commission. This document gives the full picture of how the foundry business has been performing. With operating losses of $7 billion in 2023, nearly $2 billion worse than the previous year, it's clear that Intel has a lot of work to do to turn things around.

Intel's CEO Pat Gelsinger went through the details of it all in an earnings call (transcript via SeekingAlpha), pointing out that one significant mistake Intel made was taking far too long to switch to EUV (extreme ultraviolet) lithography, the method used to fabricate all of the latest CPUs and GPUs. Although Gelsinger doesn't mention this, it's worth noting that Intel's foundries have traditionally only made chips for Intel itself. Without orders from a wide range of fabless companies, such as AMD or Nvidia, there was little call for the company to innovate or to maintain its older manufacturing lines.

TSMC and Samsung not only pour billions of dollars into building cutting-edge fabrication plants, but they also keep their foundries for older process nodes up and running. The order books for those older nodes are just as healthy as they are for the latest 3 or 4 nm ones.

The 8-K does show that Intel Products, as a division, is doing very well. In the earnings call, CFO David Zinsner stated that its operating margins are consistently strong, despite decreases in revenue. Intel has long-term targets of 60% and 40% for its gross and operating margins, respectively.

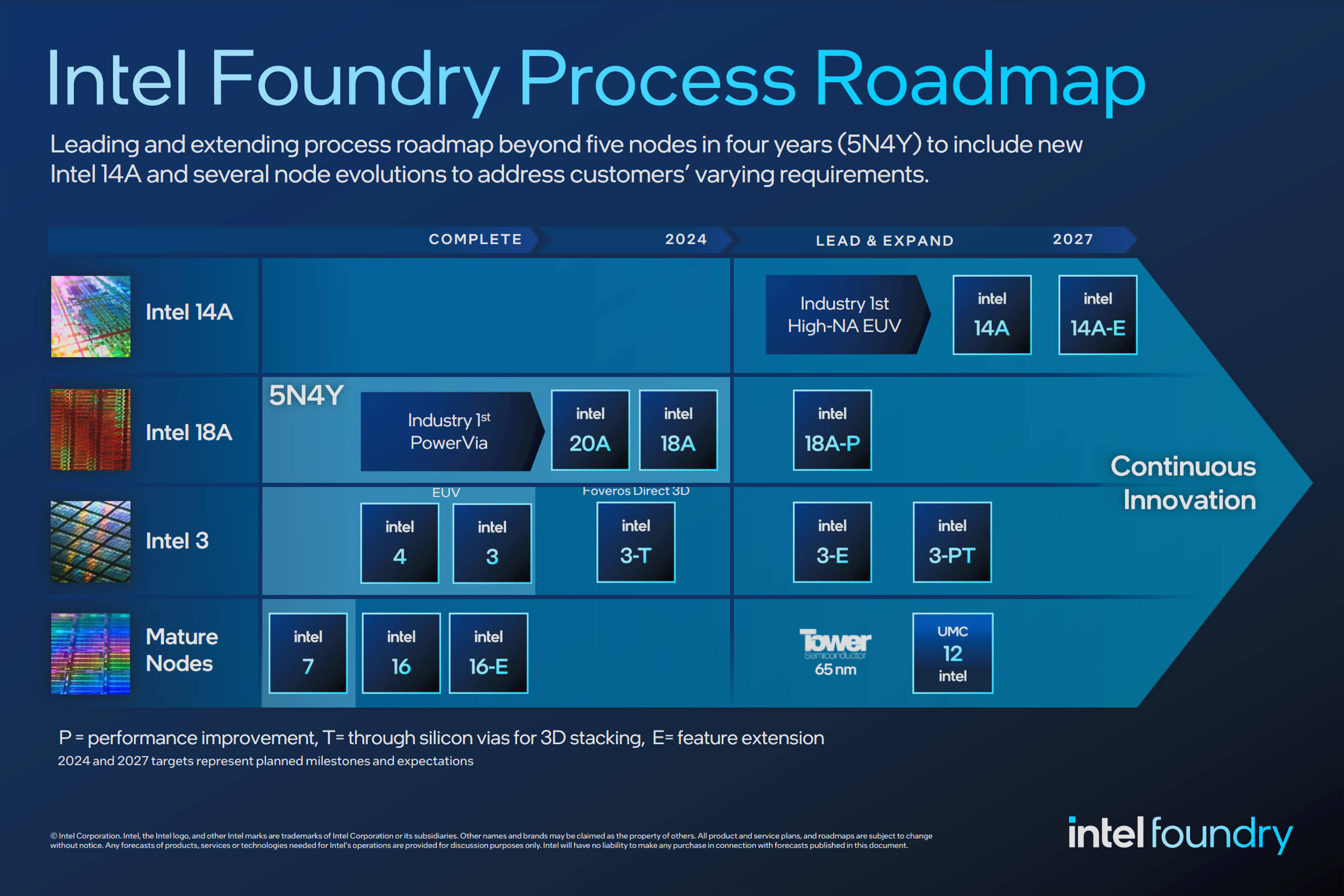

However, with Intel Foundry losing money all over the place, its targets are far more modest, at 40% and 30% for the gross and operating margins by 2030. Right now, Intel is just aiming to get the division to break even by 2027. That is why it has been accruing financial aid in the billions: to ensure that its multi-process-node plan sticks to its deadlines and that it can manufacture chips in sufficient quantities.

Gelsinger confirmed this in the call, stating that "in '25, we'll start ramping Intel 3. In 26, we'll start ramping 18A. And when we get to 18A, we believe that we have competitiveness and the cost structure."

Turning Intel Foundry into a TSMC/Samsung-like business is going to be no small feat. It's not just about having sufficient investment; it's about having the same tools, resources, and services on offer as the competition. When you've spent almost 50 years making chips almost entirely for yourself, there's little need to make even basic documentation widely accessible or to hold it to a consistent standard.

With the changes it's making to its foundries, Intel expects to reduce its dependency on TSMC for wafers. At the moment, roughly 30% of all the wafers it requires are fabricated externally, and Gelsinger said that Intel is hoping to reduce this to 20% over time. Exactly how long this will take isn't clear, but the company is probably looking to achieve this by 2030 at the latest.

Splitting the foundries off into what is almost a separate business is a shrewd decision because, as AMD showed many years ago, if things go badly and the money begins to dry up, the division can be sold off relatively easily. If that comes to pass, there will be almost nothing to stop TSMC and Samsung from dominating the chip fabrication industry.

However, Intel has managed to secure a healthy sum of cash, in the form of grants and loans, via the US Chips Act. No administration is going to hand over billions of dollars without some securities in place, either from Intel or the US government. If everything goes to plan, Intel could well displace Samsung as the second-largest supplier of large-scale chips in the world.

Nick, gaming, and computers all first met in the early 1980s. After leaving university, he became a physics and IT teacher and started writing about tech in the late 1990s. That resulted in him working with MadOnion to write the help files for 3DMark and PCMark. After a short stint working at Beyond3D.com, Nick joined Futuremark (MadOnion rebranded) full-time, as editor-in-chief for its PC gaming section, YouGamers. After the site shutdown, he became an engineering and computing lecturer for many years, but missed the writing bug. Cue four years at TechSpot.com covering everything and anything to do with tech and PCs. He freely admits to being far too obsessed with GPUs and open-world grindy RPGs, but who isn't these days?