Battlefield 1 performance analysis

The pseudo-historical "greatest war" simulation is a finely tuned killing machine.

Battlefield has been a cross-platform series since Bad Company 2, and Battlefield 1 is still carrying that torch. But this time around Dice dropped the PS3 and Xbox 360 from the list of supported platforms, and though it sticks with Frostbite 3 as the engine, the graphics have received a nice upgrade since Battlefield 4.

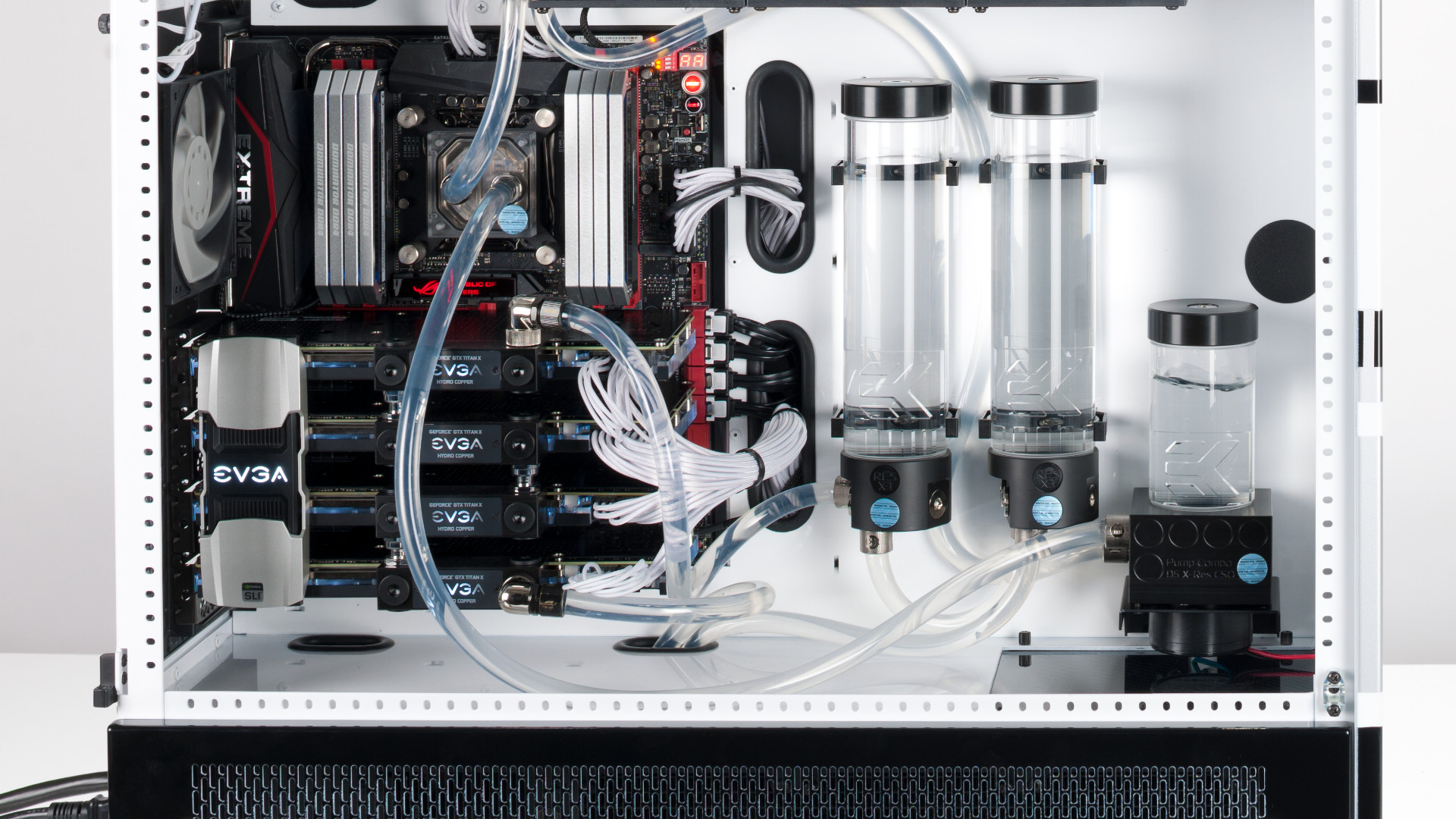

Frostbite 3 is interesting because it supports both DX11 and DX12 APIs, and Dice has been working with low-level APIs longer than just about anyone else in the business—Battlefield 4 was the first major game to get patched with support for AMD's now-defunct Mantle API (Mantle having effectively been rendered moot by the Vulkan API). Despite the change in API from Mantle to DX12 for low-level support, BF1 should in theory be a good test-case for DX12. There's one small hiccup, however: BF1 in DX12 mode doesn't support multiple GPUs right now. Par for the course, really, but high-end gamers with multiple GPUs in CrossFire or SLI will want to stick with DX11. I'll look at the performance implications of this in a bit.

Let's start first with our standard PC feature checklist for Battlefield 1, move on to the available settings and what they mean, and wrap up with a look at performance.

Battlefield 1 ends up looking a lot like Gears of War 4 when it comes to PC-centric features. All the core graphics and hardware support is there, but modding is one area where the Battlefield series has always come up short. Dice bluntly stated that Battlefield 4 wouldn't have mod support, and as far as I can tell, nothing has changed since then. And it's not like having no official mod support ends up being a boon to the playerbase, as cheating remains a serious problem. But this is EA/Dice we're talking about, so this is pretty much expected behavior—the game server rentals at launch don't even have a kick/ban option yet, which makes about as much sense as putting framerate caps on games.

Graphics settings and impressions

Battlefield 1 is a nice looking game, with a few levels that are simply amazing. The combination of semi-destructible environments, terrain deformation, and lighting effects—along with plenty of skill on the part of the level designers and artists—results in a visually impressive game. I'm also one of the people that find the move from modern combat to WWI helps make the game a lot more enjoyable than the last couple Battlefields.

BF1 includes a decent number of settings to tweak, in addition to the presets. Rather than providing extensive control over every aspect (a la Gears 4), however, many of the settings group similar features. The settings also describe what each affects, which is a step in the right direction, but there aren't any details on the performance impact. That's why I'm here.

The overall graphics quality controls all the sub-settings by default, and you need to set it to 'custom' if you want to tweak things. Most of the sub-settings have low/medium/high/ultra values that correspond to the overall setting, with antialiasing post and ambient occlusion being the only exceptions.

Checking out the four presets, there's more of a distinct progression in quality than in some games. Low quality has little in the way of dynamic lighting, ambient occlusion, and other effects, and it also has significantly lower texture quality. Medium quality provides a good boost to overall image fidelity and is what I'd target on lower end hardware. High quality improves shadows and textures, with ultra providing the usual incremental improvements over high.

While the above still images show a clear progression in image quality, I'd also point out that in motion, differences are both more and less severe. I didn't really notice the drop in texture quality or lighting, but aliasing (jaggies) on the ground and other objects was very noticeable and distracting at the low and medium presets. TAA (temporal antialiasing) is one setting I'd try to enable if at all possible.

As far as the performance impact of the presets goes, running at low vs. ultra will typically double your fps (and more if you happen to be VRAM limited). Medium is likewise a substantial 40-50 percent bump in performance over ultra, but the high preset only yields 8-10 percent more performance. Digging into the customizable settings helps explain where the biggest gains come from, and I performed some quick testing using the GTX 1060 3GB and R9 380 4GB to check the impact of the various options.
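To put those percentages in concrete terms, here is a rough sketch that scales an ultra-preset framerate by the approximate gains quoted above. The 60 fps baseline is a hypothetical input and the midpoints of the quoted ranges are assumptions, so treat the output as a ballpark rather than a measurement.

```python
# Approximate gain of each preset relative to ultra, taken from the text.
# Midpoints of the quoted ranges are used; these are rough assumptions.
PRESET_GAIN_OVER_ULTRA = {
    "ultra": 0.00,
    "high": 0.09,    # "8-10 percent"
    "medium": 0.45,  # "40-50 percent"
    "low": 1.00,     # "typically double"
}


def estimate_fps(ultra_fps: float, preset: str) -> float:
    """Estimate the framerate at a preset from an ultra-preset framerate."""
    return ultra_fps * (1.0 + PRESET_GAIN_OVER_ULTRA[preset])


if __name__ == "__main__":
    baseline = 60.0  # hypothetical ultra result, not a measured number
    for preset in ("ultra", "high", "medium", "low"):
        print(f"{preset:>6}: {estimate_fps(baseline, preset):.1f} fps")
```

A VRAM-limited card can gain more than this from the low preset, which the simple multipliers do not capture.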

Resolution scale does exactly what you'd expect: it renders offscreen at anywhere from 25 percent to 200 percent of the current resolution, and scales the result to your selected resolution. If you have spare graphics horsepower, you can opt for a value greater than 100 to get supersample antialiasing, though the demands for that are quite high. Going below 100 is less useful, unless you're already at your display's minimum resolution, and upscaling anything below 50 percent tends to look pretty awful.
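The cost of supersampling is easier to see with numbers. The sketch below assumes the scale percentage is applied to each axis (width and height), which the game's menu does not spell out; under that assumption the pixel count grows with the square of the scale. The function names are illustrative only.

```python
from typing import Tuple


def render_resolution(width: int, height: int, scale_percent: int) -> Tuple[int, int]:
    """Internal render size, assuming the scale is applied to each axis."""
    if not 25 <= scale_percent <= 200:
        raise ValueError("resolution scale must be between 25 and 200 percent")
    factor = scale_percent / 100.0
    return round(width * factor), round(height * factor)


def pixel_load(scale_percent: int) -> float:
    """Rendered pixel count relative to 100 percent scale (per-axis assumption)."""
    return (scale_percent / 100.0) ** 2


if __name__ == "__main__":
    for scale in (50, 100, 150, 200):
        w, h = render_resolution(1920, 1080, scale)
        print(f"{scale:>3}%: {w}x{h}, {pixel_load(scale):.2f}x the pixels")
```

If the assumption holds, 200 percent at 1080p means rendering as many pixels as native 4K, which is why the demands climb so quickly.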

GPU memory restriction is designed to help the game engine avoid using more VRAM than you actually have available. I don't know what exactly gets downgraded or changed, but on both GPUs I tested, turning it on actually helps performance to the tune of around five percent. That's partly because I'm starting at the ultra preset as my baseline, but it still appeared to give a small boost even when starting from the medium preset on a 4GB card. Grabbing a couple of screenshots, this appears to impact the amount and quality of shadow maps used, but it ends up being a very small change in image quality.

Texture quality on the 3GB and 4GB cards I checked has very little performance impact, so this one is best left at ultra, or at least high. The drop in quality at low and medium is too great to warrant the 2-4 percent increase in performance. Texture filtering also has a negligible impact on performance (2-3 percent going from ultra to low), though the visual impact is less. I'd leave both on ultra unless you're severely VRAM limited.

Not surprisingly, lighting quality is one of the settings that has the biggest impact on performance. This is mostly for dynamic lighting and shadows, and turning this to low improved performance by around 15 percent. But lighting and shadows do make a visible difference, so I wouldn't necessarily turn it to low unless you absolutely need to.

Effects quality isn't really explained well—does this mostly deal with explosions, or something else? In my limited testing, I saw no difference in performance between low and ultra settings, so I'd leave this on ultra.

Post processing in many games tends to be undemanding, but it all depends on what the game engine is doing. In the case of Battlefield 1, post process quality ends up being the second most demanding setting next to lighting, and turning it to low improved performance by nearly 15 percent. I couldn't tell just looking at the game while running what was changed, but after grabbing a couple of screenshots, this appears to include reflections. That explains both the high impact and negligible change in image quality, so turn this down as one of the first options if you want higher framerates.

Mesh quality, terrain quality, and undergrowth quality all appear to affect geometric detail, perhaps with some tessellation being used. Terrain and undergrowth quality didn't change performance much at all, while mesh quality could add up to five percent higher fps when set to low.

Frostbite 3 is a deferred rendering engine, so doing traditional multi-sample antialiasing is quite difficult and performance intensive. In place of this, most modern games are turning to various forms of post-processing like FXAA, SMAA, and TXAA (though the names may differ). BF1 lumps these under antialiasing post, and has options for off, FXAA medium/high, and TAA. FXAA doesn't work very well in my experience, while TAA does a good job while introducing a bit of blurriness. Given the amount of aliasing otherwise present, I'd set this to TAA, but you can improve performance by 7-8 percent by turning this off.

Finally, ambient occlusion is another setting that relates to shadows, with three options: off, SSAO, and HBAO. Turning this off can add about 10 percent to fps, but it does tend to make the game look a bit flat. SSAO is a middle ground that adds some shadows back in with about half the performance impact.
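For a rough idea of what several of these tweaks add up to, the sketch below compounds the individual gains quoted above. It assumes the gains are independent and multiply together, which is a simplification: each figure was measured by changing one setting from the ultra baseline, and real-world results will overlap. Midpoints are used where a range was given.

```python
from math import prod

# Approximate fps gains quoted above for changing a single setting from the
# ultra preset, as fractions. Midpoints of ranges are assumptions.
SETTING_GAINS = {
    "lighting quality -> low": 0.15,
    "post process quality -> low": 0.15,
    "ambient occlusion -> off": 0.10,
    "antialiasing post -> off": 0.075,  # "7-8 percent"
    "mesh quality -> low": 0.05,        # "up to five percent"
}


def combined_gain(gains) -> float:
    """Compound individual gains, assuming they are independent."""
    return prod(1.0 + g for g in gains) - 1.0


if __name__ == "__main__":
    total = combined_gain(SETTING_GAINS.values())
    print(f"Estimated combined gain over ultra: {total:.0%}")
```

Dropping only post process quality and ambient occlusion is a reasonable first experiment, since those two cost the least in visible image quality for the framerate they return.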

Battlefield 1 Performance

I mentioned earlier that Battlefield 1 and the Frostbite 3 engine support both DirectX 11 and DX12. In many games, that has resulted in a decent boost to AMD GPU performance. I'm not going to bother with a graph here, because in Battlefield 1, DX12 does almost nothing for performance on the cards I tested—and in several cases it made things substantially worse in the form of periodic choppiness.

This happened with both AMD and Nvidia GPUs, and while DX12 didn't usually drop performance much (maybe five percent at most), for most users it's not going to be a huge boon. A few AMD cards, like the R9 380, did show a slight (1-2 percent) improvement with DX12 in some situations, but the Fury X and RX 480 performed worse. Given the lack of CrossFire support under DX12 and the apparent non-changes to performance, I didn't do extensive testing across all AMD hardware.

If that seems unusual, my results aren't the only numbers showing little to no benefit with DX12. AMD provided some performance results with DX11 and DX12 figures, and in many cases they also reported slightly lower performance under DX12. Props to AMD here for actually showing the performance loss caused by the API they normally champion; I appreciate the honesty at least. The bottom line is that DX12 at present isn't really helpful for most GPUs in Battlefield 1. We'll have to see if a future patch changes the situation.

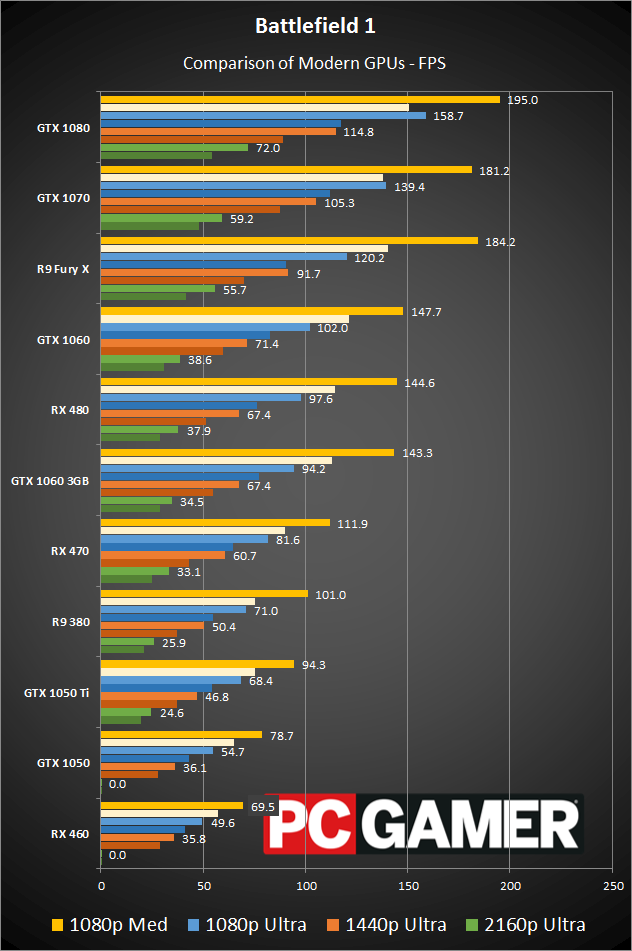

For testing Battlefield 1, my primary benchmarks all use the single player War Stories campaign (to be precise, the final act from the Mud and Blood campaign where you're in Black Bess facing off against a pair of A7V's and two FT light tanks). This involves plenty of explosions and represents a better view of performance than a sequence with no combat, but even so it's not quite the same as the multiplayer experience; more on this below. I use four test settings: 1080p medium quality, and 1080p/1440p/4K ultra quality. Note also that last minute driver updates from both AMD and Nvidia delayed my reporting; all of the results were tested with Nvidia's new 375.63 and AMD's Crimson 16.10.2 drivers.

The 1080p medium preset can be a huge help to slightly older GPUs, but in the case of Battlefield 1, you'll need to be reaching pretty far down before you have to drop that far. Most cards are well above 60 fps, and while hardware from three or more years back will need help, anything less than two years old should be fine. Even the ultra-budget GTX 1050 and RX 460 are easily above 60 fps at 1080p medium, and you could turn up a few settings without too much harm, though multiplayer performance will be lower than what I'm showing here.

There's some concern for those who have hardware too slow to handle medium quality, because even dropping to the low setting probably won't help enough. Frostbite and Battlefield games have typically been designed to deliver striking visuals across a wide range of hardware, but the minimum system requirements tend to be higher than other games. (In other words, integrated graphics users aren't invited to this party.)

Moving to 1080p ultra, the GTX 960 and 1050 Ti, along with the R9 380, are all above 60 fps, and you could easily add another 20-30 percent with a few tweaked settings. 1440p ultra drops a few of the least potent cards from the 60 fps club, but the 1060 3GB and the RX 470 4GB are still doing okay. As usual, 4K ultra ends up being too tall a mountain to climb for most single GPUs. The GTX 1080 still hits 72 fps, and the 1070 and Fury X aren't far off the mark, but if you're serious about 4K ultra at 60+ fps, you'll want a pair of high-end graphics cards. GTX 1070 SLI, for example, managed 85 fps at 4K ultra, while RX 480 CrossFire is a less extreme (and less expensive) solution that barely squeaked by with 62 fps.

If you're wondering about older Nvidia GPUs, I did do some testing of GTX 980 and GTX 960 4GB as well, though those aren't in the charts. The 960 ends up trailing the 1050 Ti by a few percent while the 980 leads the 1060 6GB by a few percent, across all settings. In effect, last year's $500 and $200 cards have become this year's $250 and $140 cards.

A quick note on multi-GPU testing: while Nvidia's SLI mode worked fine in my testing, CrossFire had sporadic issues. These were most pronounced at medium quality, and if I had to venture a guess, the current 16.10.2 hotfix driver was only tuned for ultra quality in BF1 when running CrossFire. Specifically, 1080p ultra actually runs substantially better on RX 480 CF than 1080p medium, with minimum fps that's more than double. CrossFire actually reduces performance at 1080p medium compared to a single RX 480. There were also rendering errors at 1080p medium, and while these weren't consistently a problem, it points to a hastily created CrossFire profile.

Checking CPU scaling by simulating a Core i3-4360 (dual-core, Hyper-Threading, and 3.7GHz) and Core i5-4690 (quad-core, no Hyper-Threading, and 3.9GHz), Battlefield 1 definitely makes use of more than two CPU cores. On high-end graphics cards you can lose a fair amount of performance, though I need to qualify that statement a bit. The War Stories are far less taxing on the CPU, and Core i3 only showed about a 15 percent drop in performance at 1080p ultra, with Core i5 showing almost no change. Multiplayer is a different beast.

Additional performance testing was done using multiplayer servers with 24 and 64 players active, and the CPU requirements are substantially higher. Compared to the 6-core 4.2GHz i7-5930K, the Core i5 dropped performance in multiplayer by 15 percent, while the Core i3 performance was down 35 percent. As another test, I locked the CPU clock to 3.6GHz and tested 2-core + Hyper-Threading, 4-core without HT, and 6-core with HT. The multiplayer performance results with those CPUs are around 80/50 fps (avg/min) with 2-core, 100/75 fps with 4-core, and 125/90 fps with 6-core. That's all at 1080p ultra with a single 1070 card, and as usual 1440p and 4K become more GPU limited; however, running 1070 SLI moves the bottleneck back to the CPU.
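The locked-clock results are easier to compare as percentage drops relative to the 6-core configuration. This short sketch does that arithmetic on the approximate figures quoted above; since those numbers are rounded, the percentages are only indicative.

```python
# (average fps, minimum fps) at 1080p ultra with the CPU locked to 3.6GHz,
# using the approximate multiplayer figures quoted in the text.
MULTIPLAYER_FPS = {
    "2-core + HT": (80, 50),
    "4-core": (100, 75),
    "6-core + HT": (125, 90),
}


def percent_drop(value: float, reference: float) -> float:
    """How far value falls below reference, as a percentage of reference."""
    return (reference - value) / reference * 100.0


if __name__ == "__main__":
    ref_avg, ref_min = MULTIPLAYER_FPS["6-core + HT"]
    for name, (avg, minimum) in MULTIPLAYER_FPS.items():
        print(
            f"{name:>11}: avg -{percent_drop(avg, ref_avg):.1f}%, "
            f"min -{percent_drop(minimum, ref_min):.1f}%"
        )
```

Note that on the 2-core configuration the minimum framerate falls by a larger share than the average.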

Ultimately, even if it's not as capable and not officially within the system requirements, a Core i3 processor should be fine for most single GPU users. Not that I'd necessarily recommend going that route, since it will always be the scapegoat if you encounter problems, but if you already have one you should be fine. In my limited testing it didn't appear to be a serious concern for budget and mainstream graphics cards—which is precisely where I'd expect to see Core i3 used.

Battlefield 1's true system requirements

If you're just looking to get into a Battlefield 1 match and maintain decent framerates, our best budget gaming PC ends up being a great fit for 1080p ultra, perhaps with one or two tweaks to keep things solidly above 60 fps. Our mid-range gaming PC build (listed above) easily handles 1440p ultra and will be a great fit for a 144Hz display if you've got one—it can even make a good run at 4K ultra, though you'll probably want to tweak a few settings (e.g. post process quality). And finally, for silky-smooth 4K ultra, our high-end gaming PC will get you there and more—GTX 1070 SLI manages over 80 fps, and 1080 SLI should improve on that by close to 20 percent.

So what might you want to change from our normal builds, if you're specifically interested in Battlefield 1? The budget build is the only system that might need some tweaking, and upgrading the CPU to a Core i5-6400, Core i5-6500, or better might be good. But until you get to higher-end graphics cards the Core i3-6100 shouldn't be a serious bottleneck. The graphics cards are all fine, and with the current state of DX12 support, Nvidia basically wins in Battlefield 1 at every price point, the possible exception being the RX 470 4GB, which now costs less than the 1060 3GB. For BF1, the 1060 3GB is still clearly better, but long-term there are other games where the extra VRAM could prove useful.

Final analysis

I have to hand it to Dice on the performance front. Battlefield 1 is a great looking game, and it runs well on a large variety of hardware—including CPUs that are well below their "minimum" requirement. By continuing to iterate on the Frostbite 3 engine, Dice simplifies a few variables and it's mostly about creating new maps, weapons, vehicles, and sounds, all of which come together nicely.

The odd man out here is DirectX 12, and much like DX12 support in Rise of the Tomb Raider, it feels like more of an afterthought than an integral part of the engine. There's definitely room for a future patch to help the situation, and as a game that AMD is actively marketing, the lack of clear performance improvements here is a bit of a surprise. There are a few cases where it helps AMD, but never by more than a few percent, and some of that might just be margin of error.

Not even multiplayer seems to benefit from DX12; I ran a collection of benchmarks with the RX 470 at 1080p ultra with DX11 vs. DX12, on the Ballroom Blitz map, sticking mostly to the open areas. The results put DX11 at 76.8 fps vs. DX12 at 76.3 fps, but minimum fps favors DX11 at 60 fps vs. 49 fps. I'd also like to see Dice add DirectX 12's multi-adapter support for GPUs, but given SLI is working great under DX11 this is only important and useful if they can make the DX12 mode run better.
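Converting those RX 470 figures to percentages and frame times shows why the minimums matter more than the averages. The sketch below uses only the numbers quoted above; the helper names are illustrative.

```python
def frame_time_ms(fps: float) -> float:
    """Time spent on one frame, in milliseconds, at a given framerate."""
    return 1000.0 / fps


def percent_change(old: float, new: float) -> float:
    """Change from old to new as a percentage of old."""
    return (new - old) / old * 100.0


if __name__ == "__main__":
    # RX 470, 1080p ultra, Ballroom Blitz: (average fps, minimum fps)
    dx11 = (76.8, 60.0)
    dx12 = (76.3, 49.0)
    for label, a, b in (("average", dx11[0], dx12[0]), ("minimum", dx11[1], dx12[1])):
        print(
            f"{label}: DX12 is {percent_change(a, b):+.1f}% vs. DX11 "
            f"({frame_time_ms(a):.1f} ms -> {frame_time_ms(b):.1f} ms per frame)"
        )
```

The average barely moves, while the slowest frames under DX12 take several milliseconds longer, which lines up with the periodic choppiness described earlier.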

None of this affects Battlefield 1 as a fun game, however, and for a multi-platform release it's the way we like to see things done. BF1 will utilize high-end hardware if you have it, but it will run just fine on mainstream and even budget systems. Unless you're truly running a dinosaur, any decent PC coupled with a good graphics card should prove more than sufficient for Battlefield 1.

Jarred's love of computers dates back to the dark ages when his dad brought home a DOS 2.3 PC and he left his C-64 behind. He eventually built his first custom PC in 1990 with a 286 12MHz, only to discover it was already woefully outdated when Wing Commander was released a few months later. He holds a BS in Computer Science from Brigham Young University and has been working as a tech journalist since 2004, writing for AnandTech, Maximum PC, and PC Gamer. From the first S3 Virge '3D decelerators' to today's GPUs, Jarred keeps up with all the latest graphics trends and is the one to ask about game performance.