What Microsoft's DirectX Raytracing means for gaming

Physically based graphics rendering could simplify and improve the way games look.

Anyone who follows computer graphics technology knows where we eventually want to end up. Our demands aren't much: we simply want a perfect rendition of the world around us, or at least the option to create one. The theory behind doing that has existed for decades, and it's called raytracing (or path tracing, if you prefer the variant that's most often used today).

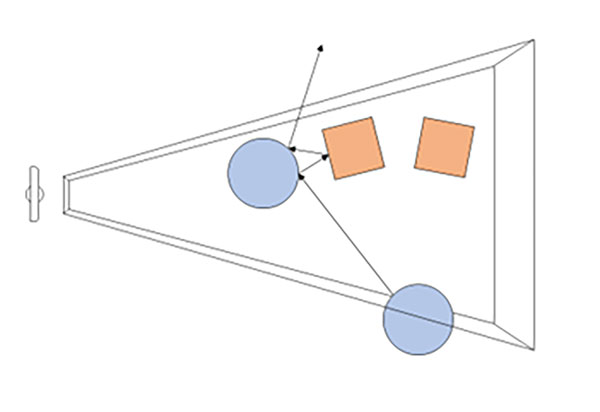

At a high level, think of computer graphics as an attempt to recreate the world. Photons are emitted by light sources like the sun, lightbulbs, and our monitors, and they bounce off the objects around us. Our eyes face in some direction, and what we 'see' is effectively the collection of all photons hitting the receptors in our eyeballs, which our brain interprets as an image.

The problem is that this all happens at an analog level: photons are always being emitted, not necessarily at a uniform rate, and at different wavelengths. At a rough estimate (thanks to this insanely detailed paper), a billion photons reach your eyes every second, while a single lightbulb in a room emits somewhere on the order of 8×10¹⁸ photons per second. Simulating every photon bouncing around a room would be impractical, but what if we just simulate the photons that hit our eyes? A billion photons per second might be a tractable number… maybe.
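To put those numbers in perspective, here is a quick back-of-the-envelope calculation. The two figures are hard-coded from the rough estimates above and are not measurements. It shows how many photons a lightbulb emits for every one that actually reaches the viewer:

```python
# Rough order-of-magnitude figures quoted above (assumptions, not measurements)
PHOTONS_EMITTED_PER_SECOND = 8e18  # a single lightbulb in a room
PHOTONS_SEEN_PER_SECOND = 1e9      # photons reaching your eyes

# Emitted photons per photon that actually reaches the viewer
ratio = PHOTONS_EMITTED_PER_SECOND / PHOTONS_SEEN_PER_SECOND
print(f"{ratio:.0e} photons emitted per photon seen")
```

Simulating only the light that reaches the eye cuts the work by roughly a factor of eight billion, and that saving is the whole motivation for tracing backwards from the viewer.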

And that's the essence of raytracing. Start with your computer display, say one running at 1920x1080. Now reverse-project a path from each of those pixels out into a model of an environment, like an office or a house. Bounce a bunch of these paths (rays) around, and you get a fairly good approximation of what a real, physically modeled view of the environment should look like.
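The snippet below is a minimal Python sketch of that reverse projection. It makes several simplifying assumptions: a pinhole camera at the origin, one ray per pixel, a single sphere standing in for the "environment," and no bounces at all. The helper names are illustrative and don't come from any engine. Each pixel fires one ray, and the code records whether that ray hits the sphere:

```python
import math

def sub(a, b):
    return (a[0] - b[0], a[1] - b[1], a[2] - b[2])

def dot(a, b):
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]

def normalize(v):
    length = math.sqrt(dot(v, v))
    return (v[0] / length, v[1] / length, v[2] / length)

def hit_sphere(origin, direction, center, radius):
    """Distance along a unit-length ray to the nearest sphere hit in front of it, or None."""
    oc = sub(origin, center)
    b = dot(oc, direction)
    c = dot(oc, oc) - radius * radius
    disc = b * b - c
    if disc < 0:
        return None  # the ray misses the sphere entirely
    root = math.sqrt(disc)
    for t in (-b - root, -b + root):  # try the near intersection first
        if t > 1e-6:
            return t
    return None

def render(width, height):
    """Cast one ray per pixel from a pinhole camera at the origin looking down -z."""
    eye = (0.0, 0.0, 0.0)
    center, radius = (0.0, 0.0, -3.0), 1.0
    rows = []
    for j in range(height):
        row = ""
        for i in range(width):
            # Map the pixel centre onto an image plane at z = -1 spanning [-1, 1]
            x = (i + 0.5) / width * 2 - 1
            y = 1 - (j + 0.5) / height * 2
            direction = normalize((x, y, -1.0))
            row += "#" if hit_sphere(eye, direction, center, radius) is not None else "."
        rows.append(row)
    return rows

for line in render(24, 12):
    print(line)
```

A real raytracer would shade each hit and spawn further rays for bounces. This sketch stops at the first intersection, which is enough to show the per-pixel structure.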

If you've ever run a benchmark like Cinebench, you should have a good idea how long all of these computations take—about 60 seconds on a faster CPU to render a single 1280x720 image for Cinebench 15. In the professional world of movies, rendering the images that go into the latest Pixar film can be far more complex—higher resolution displays, higher resolution textures, and a far more detailed model of the world being rendered. It often takes many hours to render each frame of a CGI movie.
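The gap between offline and real-time rendering is easy to quantify. The sketch below rests on illustrative assumptions: one primary ray per pixel at 1920x1080, no bounces or extra samples, and a 60fps target. It compares the per-frame time budget with the roughly 60-second Cinebench frame mentioned above:

```python
# Illustrative assumptions: one primary ray per pixel, no bounces, 60fps target
WIDTH, HEIGHT = 1920, 1080
TARGET_FPS = 60
CINEBENCH_SECONDS_PER_FRAME = 60  # rough figure quoted above for a 1280x720 frame

frame_budget_ms = 1000 / TARGET_FPS
primary_rays_per_second = WIDTH * HEIGHT * TARGET_FPS
# How much faster each frame must be, ignoring that 1080p has 2.25x the pixels of 720p
speedup_needed = CINEBENCH_SECONDS_PER_FRAME * TARGET_FPS

print(f"Frame budget: {frame_budget_ms:.1f} ms")
print(f"Primary rays per second: {primary_rays_per_second:,}")
print(f"Speedup needed vs. Cinebench: {speedup_needed:,}x")
```

Even under these generous assumptions, a real-time renderer has about 16.7ms per frame, thousands of times less than the offline render gets. Real raytracers also need many rays per pixel, which widens the gap further.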

Playing games at 10 hours per frame wouldn't be much fun, obviously, so we need a way to approximate everything and get it running in real time. That approach is rasterization, which projects the triangles that make up a scene onto the screen and shades the pixels they cover, and it's how graphics in games work today. It works pretty well, and it looks better with every passing year. But to approximate effects like reflections and indirect lighting, rasterization has come to rely on quite complex techniques, including screen space reflections, global illumination, environment mapping, and more.
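Rasterization works the other way around. Raytracing sends rays out from each pixel, while rasterization projects each triangle onto the screen and asks which pixels it covers. The sketch below shows the common edge-function coverage test. It assumes the triangle has already been projected to 2D pixel coordinates, and it leaves out depth buffering, clipping, fill rules, and shading:

```python
def edge(a, b, p):
    """Edge function: twice the signed area of triangle (a, b, p).
    Its sign tells which side of the line a->b the point p lies on."""
    return (b[0] - a[0]) * (p[1] - a[1]) - (b[1] - a[1]) * (p[0] - a[0])

def rasterize(tri, width, height):
    """Return the (x, y) pixels whose centres lie inside a 2D screen-space triangle."""
    a, b, c = tri
    area = edge(a, b, c)
    if area == 0:
        return set()  # a degenerate (zero-area) triangle covers nothing
    covered = set()
    for y in range(height):
        for x in range(width):
            p = (x + 0.5, y + 0.5)  # sample at the pixel centre
            weights = (edge(b, c, p), edge(c, a, p), edge(a, b, p))
            # Inside when every edge function agrees in sign with the triangle's area
            if all(w * area >= 0 for w in weights):
                covered.add((x, y))
    return covered

pixels = rasterize(((1, 1), (8, 1), (1, 8)), 10, 10)
for y in range(10):
    print("".join("#" if (x, y) in pixels else "." for x in range(10)))
```

This test only answers "which pixels does this triangle touch?" Anything involving light arriving from elsewhere in the scene, like reflections and indirect light, has to be layered on with separate techniques, and that is where rasterization's complexity comes from.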

The difficulty with all of the rasterization techniques is that they also require a lot of time, only here it's often the time of artists, level designers, and software developers. If the end goal is still to get the best approximation of the way things look in the real world, we're eventually going to end up going back to raytracing in some form.


Today at GDC, Microsoft is announcing a new addition to its DirectX 12 API, called DirectX Raytracing (DXR). The new API is intended to bridge the gap between rasterization and raytracing, allowing for new and increasingly realistic renderings to take place. You can read about the details on Microsoft's DirectX blog, but let's talk about what this means for games in practice.

First, DXR doesn't actually require radically different hardware. DXR workloads can run on existing DirectX 12 GPUs because raytracing is fundamentally a compute workload: the same capabilities that let your GPU mine cryptocurrencies can be put to work rendering games. Long-term, our graphics chips are becoming increasingly general purpose and programmable, with more and more fixed-function units being replaced by general-purpose hardware running shader programs.
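DXR adds new HLSL shader stages, including ray generation, closest-hit, and miss shaders, which are connected through a `TraceRay()` call and run on the GPU like any other shader program. The Python below is only a conceptual model of that flow, built on heavy assumptions. The function names echo the stage names but are hypothetical stand-ins, not the DXR API. A brute-force loop over spheres also replaces the acceleration structure a real implementation would traverse:

```python
import math
from dataclasses import dataclass

SKY = (0.5, 0.7, 1.0)
# Hypothetical scene of (center, radius, albedo); DXR would use an acceleration structure
SCENE = [((0.0, 0.0, -3.0), 1.0, (1.0, 0.2, 0.2))]

@dataclass
class Payload:
    """Per-ray data that the stages read and write (modelled on DXR's ray payload)."""
    color: tuple = (0.0, 0.0, 0.0)

def intersect(origin, direction, center, radius):
    """Nearest positive hit distance along a unit-length ray, or None."""
    oc = tuple(o - p for o, p in zip(origin, center))
    b = sum(x * y for x, y in zip(oc, direction))
    c = sum(x * x for x in oc) - radius * radius
    disc = b * b - c
    if disc < 0:
        return None
    root = math.sqrt(disc)
    for t in (-b - root, -b + root):
        if t > 1e-6:
            return t
    return None

def miss_shader(payload):
    payload.color = SKY  # nothing was hit: shade with the background

def closest_hit_shader(payload, albedo):
    payload.color = albedo  # nearest surface found; a real shader would light it here

def trace_ray(origin, direction, payload):
    """Stand-in for TraceRay(): find the nearest hit, then dispatch the matching stage."""
    nearest = None
    for center, radius, albedo in SCENE:
        t = intersect(origin, direction, center, radius)
        if t is not None and (nearest is None or t < nearest[0]):
            nearest = (t, albedo)
    if nearest is None:
        miss_shader(payload)
    else:
        closest_hit_shader(payload, nearest[1])

def ray_generation_shader(width, height):
    """One invocation per pixel; on a GPU each would run as an independent thread."""
    image = []
    for j in range(height):
        row = []
        for i in range(width):
            x = (i + 0.5) / width * 2 - 1
            y = 1 - (j + 0.5) / height * 2
            length = math.sqrt(x * x + y * y + 1.0)
            payload = Payload()
            trace_ray((0.0, 0.0, 0.0), (x / length, y / length, -1.0 / length), payload)
            row.append(payload.color)
        image.append(row)
    return image

image = ray_generation_shader(4, 4)
```

The key point is that every pixel's ray is independent of every other. That is exactly the kind of massively parallel work that today's programmable GPUs already handle.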

What does this mean for games? Initially, don't expect games to suddenly transition to full raytracing—we're still a way off from that being practical. However, DXR will allow new rendering techniques that should improve image quality, and over time things like screen space reflections and true global illumination should shift to the new API. Even further out, raytracing could replace rasterization as the standard method for generating 3D worlds, though we may need new display technologies before leaving rasterization fully in the past becomes practical.
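Reflections show what the new API makes possible. Screen space reflections can only reuse pixels that are already on screen, but a reflection ray can find objects anywhere in the scene, including behind the camera. This is a minimal sketch under stated assumptions: the surface point and normal would come from a rasterized pass in a hybrid renderer, but they are hard-coded here, and a single sphere (a hypothetical `SPHERE` constant) stands in for the rest of the scene:

```python
import math

SKY = (0.5, 0.7, 1.0)
# Hypothetical scene object (center, radius, color), placed behind the camera
SPHERE = ((0.0, 0.0, 3.0), 1.0, (0.9, 0.8, 0.1))

def dot(a, b):
    return sum(x * y for x, y in zip(a, b))

def normalize(v):
    length = math.sqrt(dot(v, v))
    return tuple(x / length for x in v)

def reflect(d, n):
    """Mirror direction d about unit normal n: r = d - 2(d.n)n."""
    k = 2 * dot(d, n)
    return tuple(di - k * ni for di, ni in zip(d, n))

def hit_sphere(origin, direction, center, radius):
    """Nearest positive hit distance along a unit-length ray, or None."""
    oc = tuple(o - p for o, p in zip(origin, center))
    b = dot(oc, direction)
    disc = b * b - (dot(oc, oc) - radius * radius)
    if disc < 0:
        return None
    root = math.sqrt(disc)
    for t in (-b - root, -b + root):
        if t > 1e-6:
            return t
    return None

def reflection_color(point, normal, view_dir):
    """Trace one reflection ray from a surface point (e.g. read from a G-buffer)."""
    n = normalize(normal)
    r = reflect(normalize(view_dir), n)
    # Nudge the origin off the surface so the ray doesn't immediately re-hit it
    origin = tuple(p + 1e-4 * ni for p, ni in zip(point, n))
    center, radius, color = SPHERE
    return color if hit_sphere(origin, r, center, radius) is not None else SKY

# A mirror facing the camera reflects the sphere sitting behind the viewer
print(reflection_color((0.0, 0.0, -3.0), (0.0, 0.0, 1.0), (0.0, 0.0, -1.0)))
```

In this setup a screen space technique would have nothing to sample, because the sphere is behind the camera and never drawn to the screen. The reflection ray finds it anyway.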

The good news is that DXR will run on currently existing hardware, so you don't have to ditch your new RX Vega or GTX 1080 just to experience DXR. However, future hardware will be built to better handle the new possibilities, and Nvidia, at least, is already talking about how its Volta architecture can enable better performance with DXR. Nvidia is already taking things a step further with RTX, which is built on DXR but includes additional hooks that require Volta hardware.

AMD for its part has issued the following statement: "AMD is collaborating with Microsoft to help define, refine and support the future of DirectX12 and ray tracing. AMD remains at the forefront of new programming model and application programming interface (API) innovation based on a forward-looking, system-level foundation for graphics programming. We’re looking forward to discussing with game developers their ideas and feedback related to PC-based ray tracing techniques for image quality, effects opportunities, and performance."

What's great about DXR is that raytracing isn't some crazy idea that game developers don't want and will never use. If you go up to any 3D engine developer and say, "We've got this new tool that allows you to combine many of the benefits of raytracing into a traditional game engine, and still get the whole thing running in real time at 60fps (or more)," every one of them would (or at least should) be interested. And Microsoft is already announcing support from EA's Frostbite and SEED, Futuremark, Epic Games and its Unreal Engine, and Unity 3D with its Unity Engine.

If/when we eventually reach the point where everything is being fully simulated with raytracing (and we're running around the Holodeck), from the developer standpoint things also get simplified somewhat. Instead of spending time and resources generating shadowmaps and calculating various hacks to simulate the way things should look, raytracing can just do all the heavy lifting. You want accurate lighting, shadows, and reflections? You're covered. So the artists can all focus on creating a great model of their world and let the engine do the rendering. That's the idea, anyway.
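In a raytraced renderer, a shadow comes down to one question asked per point: does anything block the path to the light? The sketch below shows that shadow-ray test. It assumes point lights and spheres are the only geometry, whereas real engines trace against triangle meshes through an acceleration structure. The `SPHERES` list and `is_lit` helper are hypothetical. Shadow mapping, by contrast, needs an extra render pass from the light's point of view, plus tuning of shadow map resolution and depth bias to avoid artifacts:

```python
import math

# Hypothetical occluders as (center, radius) spheres
SPHERES = [((0.0, 1.0, -3.0), 1.0)]

def hit_sphere(origin, direction, center, radius):
    """Nearest positive hit distance along a unit-length ray, or None."""
    oc = tuple(o - p for o, p in zip(origin, center))
    b = sum(x * y for x, y in zip(oc, direction))
    disc = b * b - (sum(x * x for x in oc) - radius * radius)
    if disc < 0:
        return None
    root = math.sqrt(disc)
    for t in (-b - root, -b + root):
        if t > 1e-6:
            return t
    return None

def is_lit(point, normal, light_pos):
    """Cast a shadow ray from a surface point toward a point light (normal assumed unit length)."""
    to_light = tuple(l - p for l, p in zip(light_pos, point))
    dist = math.sqrt(sum(x * x for x in to_light))
    direction = tuple(x / dist for x in to_light)
    origin = tuple(p + 1e-4 * n for p, n in zip(point, normal))  # avoid self-shadowing
    for center, radius in SPHERES:
        t = hit_sphere(origin, direction, center, radius)
        if t is not None and t < dist:
            return False  # something sits between the point and the light
    return True

# A floor point directly under the sphere is shadowed; one off to the side is lit
print(is_lit((0.0, -1.0, -3.0), (0.0, 1.0, 0.0), (0.0, 5.0, -3.0)))
print(is_lit((3.0, -1.0, -3.0), (0.0, 1.0, 0.0), (0.0, 5.0, -3.0)))
```

There is no shadow map to allocate and no bias to tune. The ray either reaches the light or it doesn't, and that is the sense in which raytracing "does the heavy lifting."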

My understanding is that we should be able to see actual live demos at GDC this week, so the early fruits of DXR may arrive sooner rather than later. We're not going to be playing games with the full quality of Pixar's Coco just yet, but real-time Toy Story? Yeah, that's getting tantalizingly close.

Jarred's love of computers dates back to the dark ages when his dad brought home a DOS 2.3 PC and he left his C-64 behind. He eventually built his first custom PC in 1990 with a 286 12MHz, only to discover it was already woefully outdated when Wing Commander was released a few months later. He holds a BS in Computer Science from Brigham Young University and has been working as a tech journalist since 2004, writing for AnandTech, Maximum PC, and PC Gamer. From the first S3 Virge '3D decelerators' to today's GPUs, Jarred keeps up with all the latest graphics trends and is the one to ask about game performance.