'We have to move fast': US regulators start work on a probably-futile attempt to avoid an AI apocalypse

The Department of Commerce is seeking input from the public on how to build a regulatory framework for AI development.

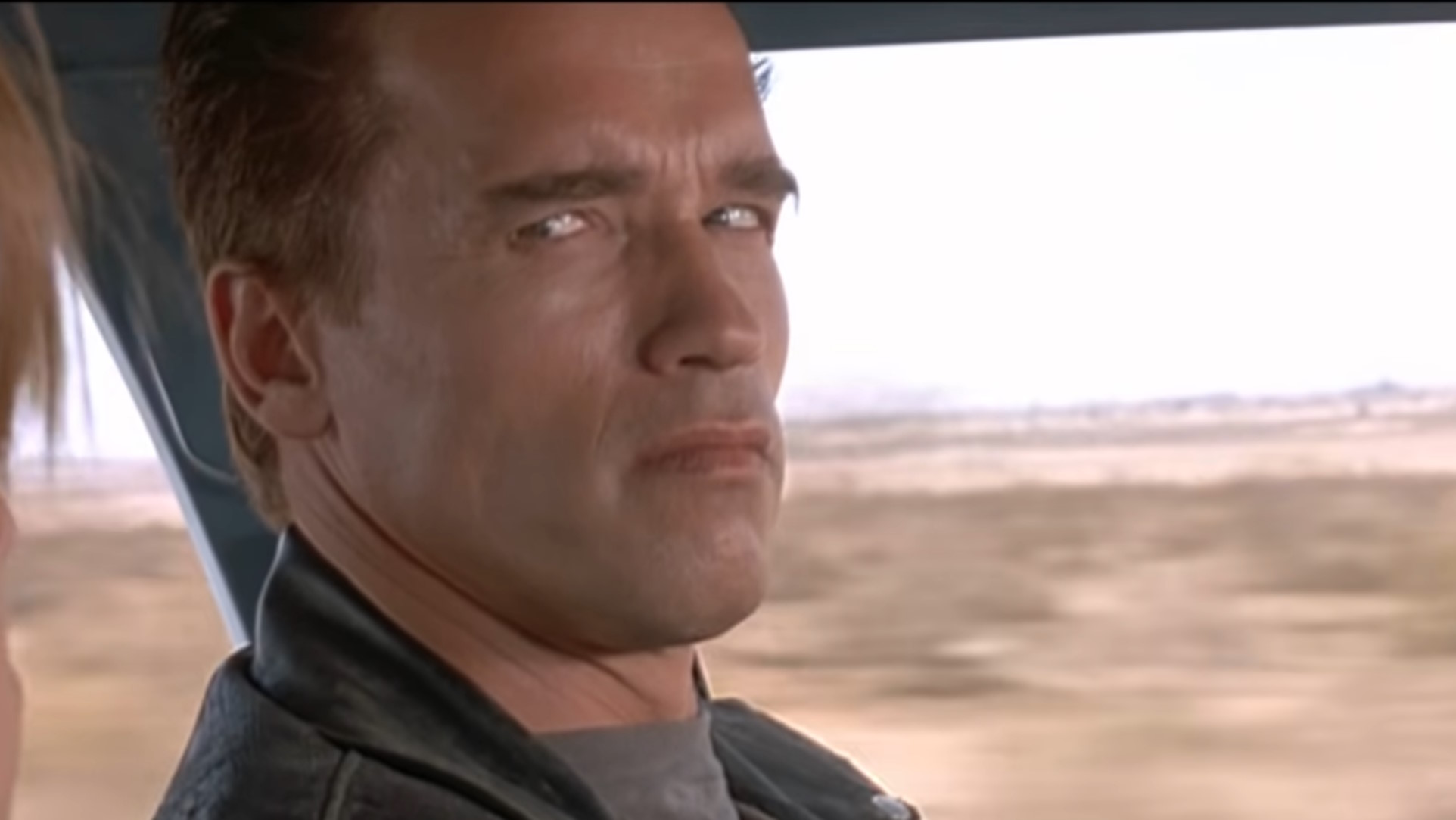

The Terminator films lay out a pretty grim prospectus for the future of humanity in the face of AI run amok. "Defense network computers. New. Powerful. Hooked into everything, trusted to run it all. They say it got smart. A new order of intelligence. Then it saw all people as a threat, not just the ones on the other side. Decided our fate in a microsecond. Extermination."

That's not ideal! And so, perhaps hoping to get ahead of all that, the US Department of Commerce has begun the process of establishing guidelines to ensure that AI systems do what they're supposed to—and, perhaps more importantly, do not do what they're not supposed to.

"Responsible AI systems could bring enormous benefits, but only if we address their potential consequences and harms," said Alan Davidson, Assistant Secretary of Commerce for Communications and Information and Administrator of the Commerce Department's National Telecommunications and Information Administration (NTIA), which is leading the effort. "For these systems to reach their full potential, companies and consumers need to be able to trust them."

The NTIA said that, just like food and cars, AI systems should not be released to the public without first making sure that they're not likely to result in widespread death and dismemberment. As a first step toward making that happen, the agency is now seeking input from the public on possible AI regulation policies, including:

- What kinds of trust and safety testing should AI development companies and their enterprise clients conduct?

- What kinds of data access are necessary to conduct audits and assessments?

- How can regulators and other actors incentivize and support credible assurance of AI systems, along with other forms of accountability?

- What different approaches might be needed in different industry sectors, like employment or health care?

Machine apocalyptica is the "fun" outcome of an AI catastrophe, but Davidson said during a presentation at the University of Pittsburgh earlier this week that the real worries, at least in the short term, are more mundane: Things like hidden biases in mortgage-approval algorithms that have led to higher rates of denial for people of color, algorithmic hiring tools that discriminate against people with disabilities, and the proliferation of deceptive and damaging deepfakes. Davidson called those examples "the tip of the iceberg" but said he remains optimistic about the future of AI because regulators are getting on top of things much earlier than they did with previous transformative technologies.

"In 2021, there were 130 bills related to AI passed or proposed in the US," Davidson said. "That is a big difference from the early days of social media, cloud computing, or even the Internet.

"The experience we have had with other technologies needs to inform how we look ahead. We need to be pro-innovation and also protect people’s rights and safety. We have to move fast, because AI technologies are moving very fast."


Historically, regulation has lagged well behind technological advancement, and it's focused on use rather than development, which—again, without wanting to lean too much into end-of-the-world scenarios—may not be of particularly great value on the AI front. It also remains to be seen how genuinely committed to AI regulation the US will be when faced with competition from other national powers: This is, after all, a country that got around its own laws regarding the treatment of prisoners by simply shipping them off to places with more permissive attitudes about torture and abuse.

Davidson is certainly correct about regulators being quicker to react to the potential impacts of AI development, but at this point I'm having a hard time seeing how the ultimate outcome will be significantly different than it has been in the past. Technology will advance, governments will struggle to keep up, and we'll all do our best to keep our heads above water as we swim around in the mess.

For now, though, if you'd like to contribute to the NTIA's efforts to cook up a regulatory framework for AI development, you can submit your thoughts at the AI Accountability Policy Request for Comment page.

Andy has been gaming on PCs from the very beginning, starting as a youngster with text adventures and primitive action games on a cassette-based TRS-80. From there he graduated to the glory days of Sierra Online adventures and Microprose sims, ran a local BBS, learned how to build PCs, and developed a longstanding love of RPGs, immersive sims, and shooters. He began writing videogame news in 2007 for The Escapist and somehow managed to avoid getting fired until 2014, when he joined the storied ranks of PC Gamer. He covers all aspects of the industry, from new game announcements and patch notes to legal disputes, Twitch beefs, esports, and Henry Cavill. Lots of Henry Cavill.