Most of The Witcher 3's dialogue scenes were animated by an algorithm

The Witcher 3 is a massive game. It packs in 35 hours of dialogue, each line of which was voice acted and motion captured. If I had been in charge of orchestrating all the moving parts of the game's development, I would've had a breakdown a month in and the dialogue system would've ended up more like Façade. Thankfully, the much more capable Piotr Tomsinski was in charge, and he gave an enlightening talk at GDC on Friday about how much work went into making the characters move and speak so naturally.

The problem going into The Witcher 3 was obvious: they were making a vast, non-linear, fully-voiced RPG. CD Projekt wanted decisions in The Witcher 3 to feel meaningful, and for them to feel meaningful, players needed to form emotional attachments to the characters. They wanted to sell drama by showing it, not by telling you up front that a scene was supposed to be emotional. Writing 101, essentially.

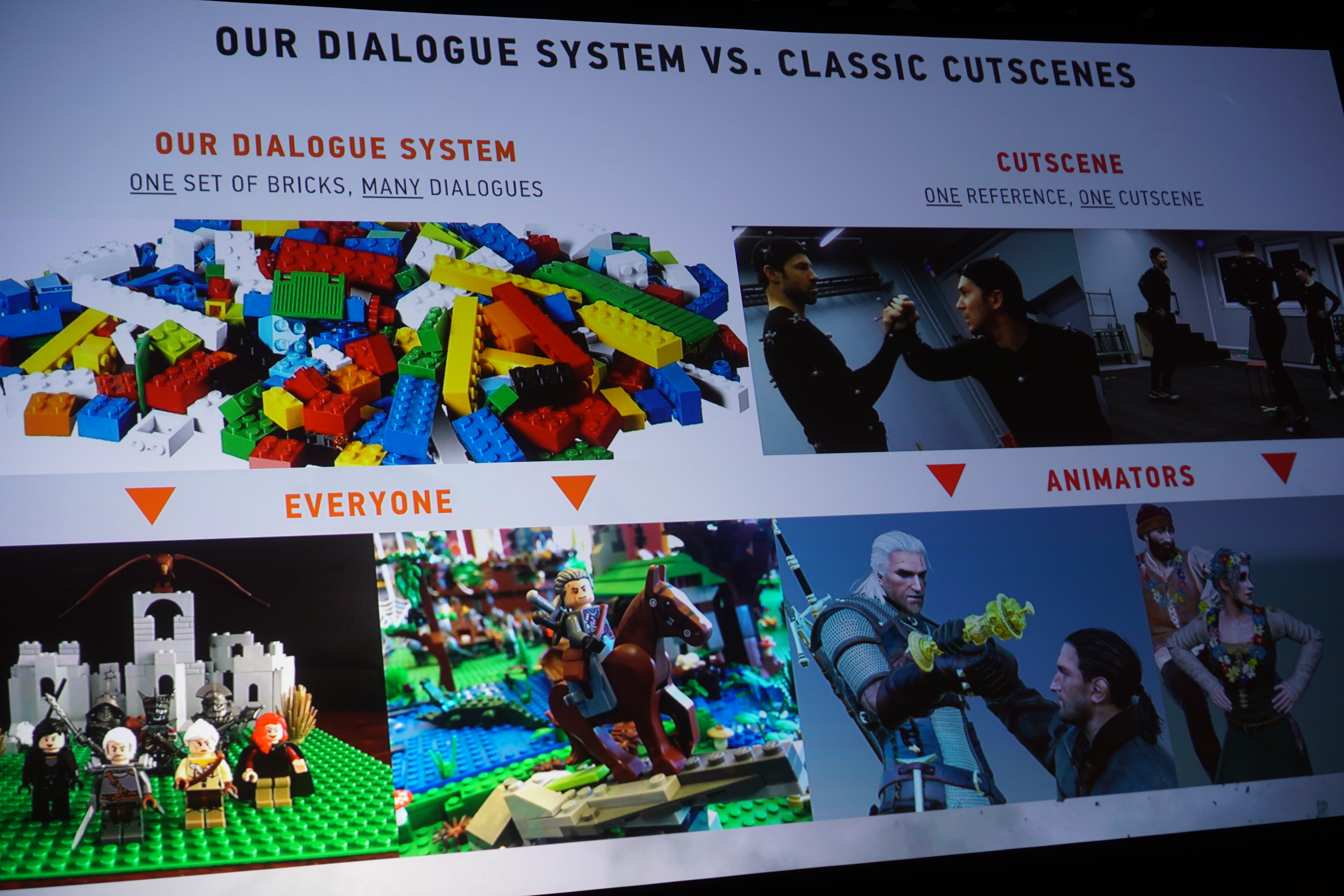

Doing individual motion capture work for every dialogue scene and then animating each one by hand would've been impossible, or at least would have eaten up ridiculous resources. Tomsinski showed that a team of only 14 worked on the cinematic dialogue system, including programmers, animators, and QA; other hands likely pitched in, but that seems to have been the core team. So CD Projekt built a number of systems, plus a huge library of reusable and easily modified animations, that could be combined to create The Witcher 3's dialogue scenes.

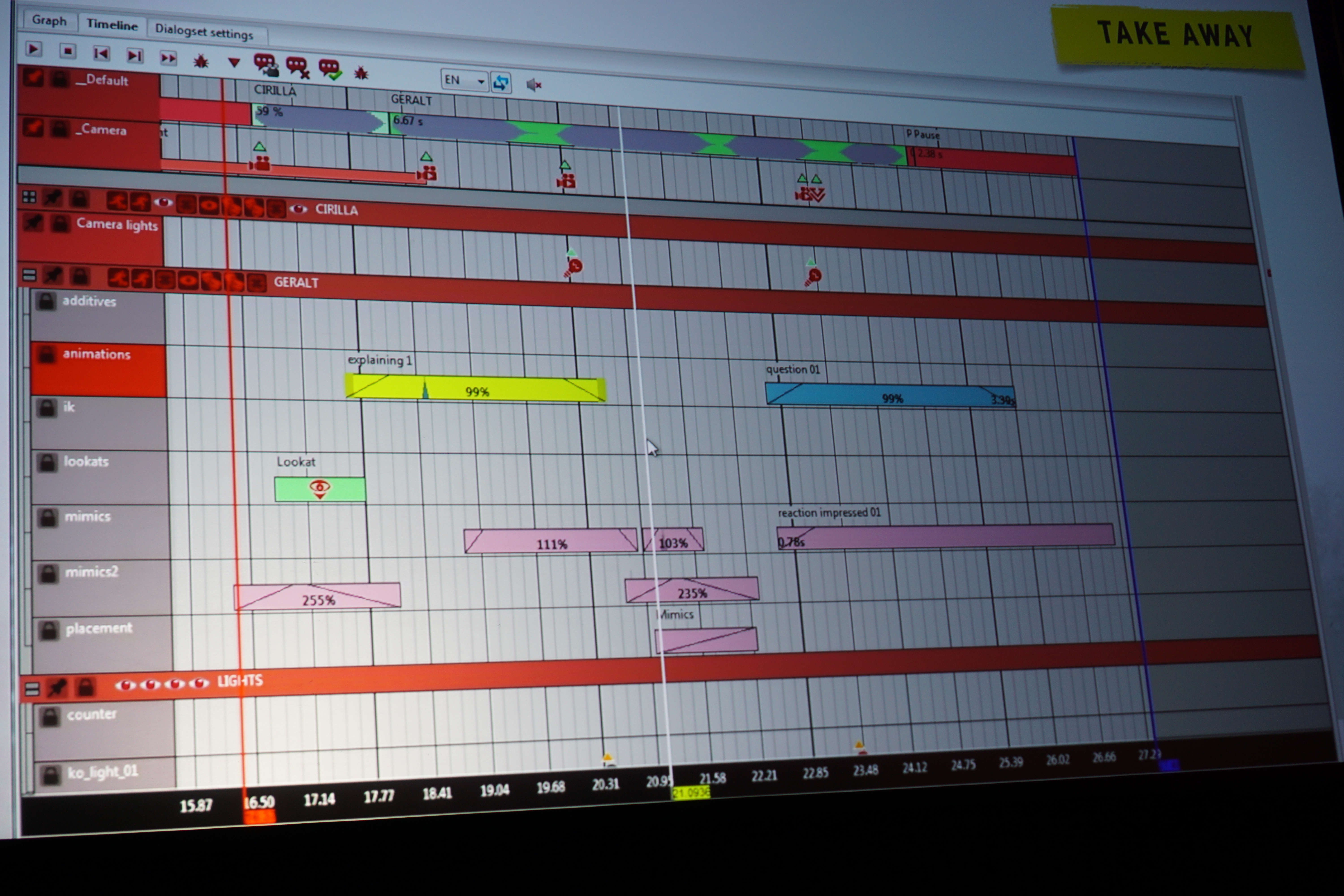

With the systems they created, designers could make their own dialogue scenes without needing to pull models into a tool like Maya to do heavy duty animation. When he first showed off their Timeline tool, it looked overwhelmingly complicated—like a more complex version of Logic Pro or Adobe Premiere. But it's actually not so bad: there are different rows for animations, 'lookats' (which is where the characters in the scene are looking), placement (location in 3D space), and a few other elements.

The real magic comes in how they generated the dozens of hours of dialogue scenes using an algorithm, and then went into the timeline to hand-tune each one instead of building it from scratch.

"It sounds crazy, especially for the artist, but we do generate dialogues by code," Tomsinski said. "The generator's purpose is to fill the timeline with basic units. It creates the first pass of the dialogue loop. We found out it's much faster to fix or modify existing events than to preset every event every time for every character. The generator works so well that some less important dialogues will be untouched by the human hand."

That's right: a bunch of math determined how most of the dialogue in The Witcher 3 was arranged and animated. So how did it work?

"The generator requires three different types of inputs: information about the actors, [some cinematic instructions], and finally the extracted data from voiceovers. We use an algorithm to generate markers, or accents, from the voiceovers, so later we can match the events in animation with the sound. It generates camera movement and placement, facial animation, body animations, and the lookats."
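
Tomsinski didn't say how the generator finds those markers. One plausible way to pull "accents" out of a voiceover is simple peak picking on its loudness envelope, and the sketch below assumes exactly that. The function names, window size, and threshold are hypothetical, not CD Projekt's.

```python
import math
from typing import List


def rms_envelope(samples: List[float], window: int) -> List[float]:
    """Root-mean-square energy of consecutive, non-overlapping windows."""
    env = []
    for start in range(0, len(samples) - window + 1, window):
        chunk = samples[start:start + window]
        env.append(math.sqrt(sum(s * s for s in chunk) / window))
    return env


def find_accents(samples: List[float], sample_rate: int,
                 window: int = 441, threshold_ratio: float = 1.5) -> List[float]:
    """Return times (in seconds) of local energy peaks that are louder than
    threshold_ratio times the clip's mean energy. 441 samples is 10 ms at 44.1 kHz."""
    env = rms_envelope(samples, window)
    if not env:
        return []
    mean = sum(env) / len(env)
    accents = []
    for i in range(1, len(env) - 1):
        is_peak = env[i] > env[i - 1] and env[i] >= env[i + 1]
        if is_peak and env[i] > threshold_ratio * mean:
            # Report the centre of the peak window, converted to seconds.
            accents.append((i * window + window / 2) / sample_rate)
    return accents
```

The resulting times are the kind of marker a generator could snap gestures or cuts to, so animation lands on stressed words rather than at arbitrary points.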

The Witcher 3 has some of the best-looking character interaction in any game, and most of that started with procedural generation. If the animators weren't happy with a scene, they could simply press a button to regenerate it, and the algorithm would conjure up something new with a slightly altered mix of camera movements and animations. Tomsinski showed off some side-by-side examples, and it was easy to see the small distinctions between them: subtle differences in head and body movements, and in the pauses between movements.
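
To make the "press a button to regenerate" idea concrete, here is a toy first-pass generator. It assumes a scene is just a list of voiced lines with accent times, draws on made-up gesture and shot libraries, and uses a seeded random number generator so each seed yields a different but reproducible mix. None of these names come from CD Projekt's tools.

```python
import random
from dataclasses import dataclass, field
from typing import List

# Hypothetical asset libraries, referenced by name only.
GESTURES = ["nod", "open_palm", "point", "shrug"]
SHOTS = ["medium", "close_up", "over_shoulder"]


@dataclass
class Line:
    speaker: str
    start: float                                          # seconds into the scene
    accents: List[float] = field(default_factory=list)    # accent times, seconds


@dataclass
class Event:
    track: str    # "camera", "lookat" or "animation", like rows on a timeline
    time: float
    actor: str
    value: str


def generate_first_pass(actors: List[str], lines: List[Line], seed: int) -> List[Event]:
    """Fill a timeline with basic events; a different seed gives a new variation."""
    rng = random.Random(seed)
    events: List[Event] = []
    for line in lines:
        # Cut to the speaker at the start of each line with a random framing.
        events.append(Event("camera", line.start, line.speaker, rng.choice(SHOTS)))
        # Everyone else looks at whoever is talking.
        for other in actors:
            if other != line.speaker:
                events.append(Event("lookat", line.start, other, line.speaker))
        # Land gestures on roughly half the accents so motion follows the voice.
        for t in line.accents:
            if rng.random() < 0.5:
                events.append(Event("animation", t, line.speaker, rng.choice(GESTURES)))
    return sorted(events, key=lambda e: (e.time, e.track))
```

Calling it again with the same seed reproduces a pass exactly, while a new seed reshuffles shots and gestures, which is roughly the behavior the regenerate button is described as having. Everything it produces is meant to be edited by hand afterwards.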

Of course, they didn't just let the algorithm run and call it a day. What both generated versions had in common was that they looked a bit amateurish: like awkward actors stumbling through a scene in a film, or the not-quite-natural animation of the games that first started to explore cinematic character interactions (i.e. almost everything pre-Mass Effect). Most of the time, the animators would take what the generator had created and go into the timeline to tweak it by hand, which could deliver a much better scene in just a few minutes. In some cases, they'd add more elaborate camera movements, reposition characters, adjust facial expressions, and so on, but they already had a solid, if unpolished, base to work from.

The finished example Tomsinski showed added a lingering camera shot to the end of the scene for a more cinematic transition, and the character Geralt had been talking to made a subtle facial expression as the witcher walked away. It doesn't sound like much, but it's amazing how much more life that gave the scene.

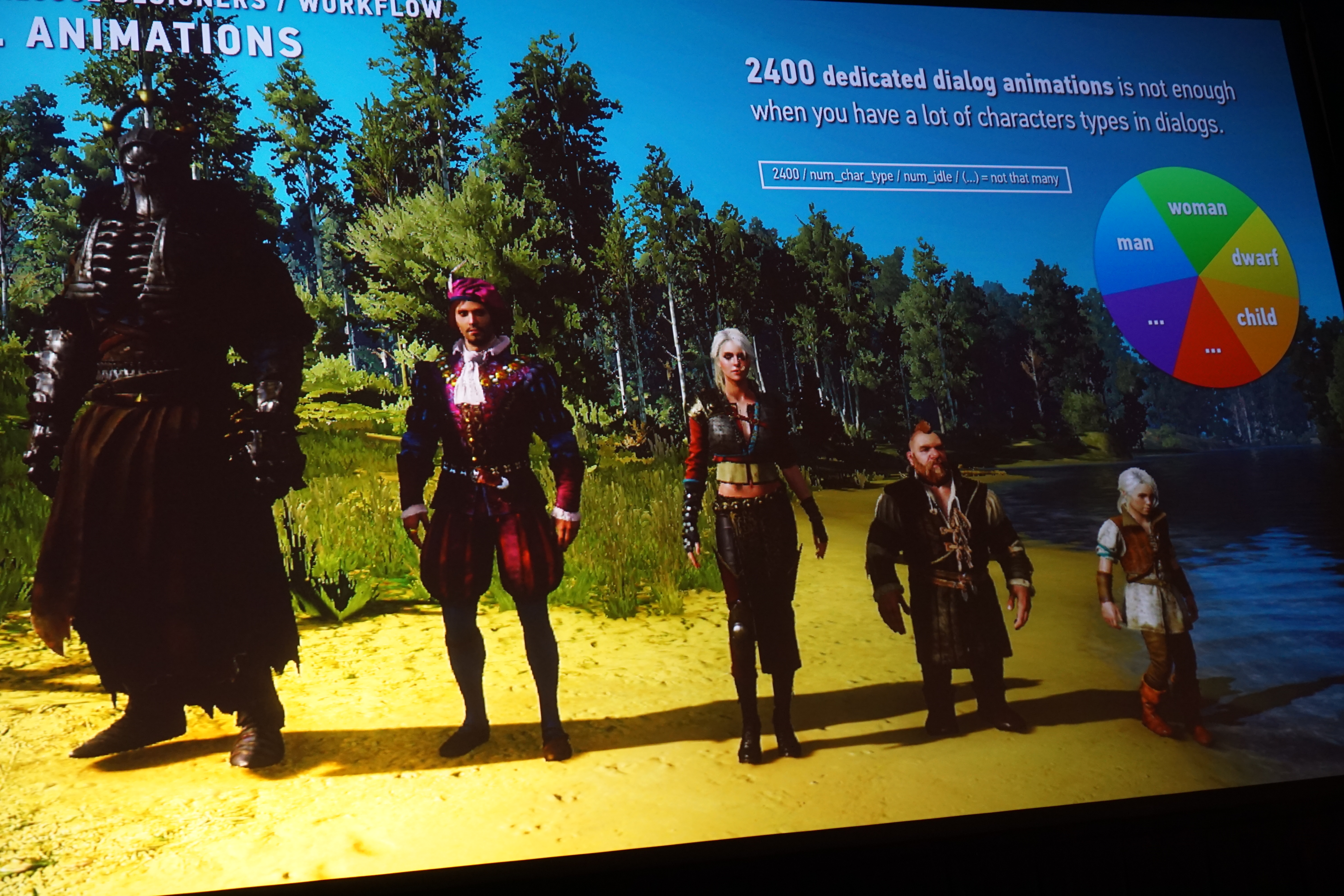

The building blocks for all those scenes were a set of 2,400 dialogue animations. That sounds like a lot, but once it's divided between the various types of characters (men, women, dwarves, elves, children, etc.) and different poses (standing, kneeling, and sitting), the number gets significantly smaller, so the animations needed to be reusable.

Tomsinski gave an example: a simple gesture Geralt makes with his hand while standing. What if they wanted Geralt to make that gesture while sitting? They could try adding that animation to the timeline after putting Geralt in a sitting pose, but that doesn't work: he suddenly pops up into a standing pose and waves. So they created a system for additive animations, where only the key part of the body moves (in this case, his arm), allowing animations to be layered on top of one another. Bam! Geralt is sitting down, but making the same gesture. Other tools, like masking, let them further tweak the movement of specific limbs. In this example, they made sure his legs looked natural as he moved.
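
Additive animation is a general technique, and the core idea fits in a few lines. This sketch simplifies a pose to a single angle per bone (real engines blend full joint transforms, usually with quaternions), and the bone names, poses, and numbers are invented for illustration rather than taken from The Witcher 3.

```python
from typing import Dict, Set

Pose = Dict[str, float]  # bone name -> joint angle in degrees (simplified)


def make_additive(gesture: Pose, reference: Pose) -> Pose:
    """Store a gesture as an offset from the pose it was authored in."""
    return {bone: gesture[bone] - reference[bone] for bone in gesture}


def apply_additive(base: Pose, additive: Pose, mask: Set[str], weight: float = 1.0) -> Pose:
    """Layer the masked offsets on top of any base pose; unmasked bones keep the base."""
    result = dict(base)
    for bone, delta in additive.items():
        if bone in mask:
            result[bone] = base[bone] + weight * delta
    return result


# Hypothetical poses: the gesture was captured standing, with a slight hip shift.
standing = {"hip": 0.0, "knee": 0.0, "shoulder": 10.0, "elbow": 5.0}
standing_wave = {"hip": 5.0, "knee": 0.0, "shoulder": 80.0, "elbow": 45.0}
sitting = {"hip": 90.0, "knee": 90.0, "shoulder": 15.0, "elbow": 20.0}

wave = make_additive(standing_wave, standing)
sitting_wave = apply_additive(sitting, wave, mask={"shoulder", "elbow"})
```

Playing `standing_wave` directly would snap the hips and knees back to standing, which is the failure the paragraph above describes. Applying only the arm offsets keeps the sitting legs and reproduces the gesture, and the mask stops the captured hip shift from leaking into the seated pose.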

There were other key elements to the system, like how they designed the lookat animations with attached poses, so characters would lean on one arm when looking in a certain direction, and how the timeline could dynamically scale for localization to account for longer or shorter dialogue in different languages. But to recap: holy cow, the cinematic dialogue in The Witcher 3 is amazing, and now we know why.
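
Tomsinski didn't detail how the timeline rescales for other languages, but one simple assumption is a piecewise-linear remap: pin event times to the start and end of each voiceover line, then stretch or squeeze everything in between to match the localized recording. The function and the timings below are hypothetical.

```python
from bisect import bisect_right
from typing import List


def remap(t: float, orig_knots: List[float], loc_knots: List[float]) -> float:
    """Piecewise-linear remap of one event time.

    orig_knots and loc_knots are matching, strictly increasing boundary times
    (e.g. the start and end of every voiceover line) in the original and the
    localized recordings. Times outside the knots are shifted, not scaled."""
    if t <= orig_knots[0]:
        return loc_knots[0] + (t - orig_knots[0])
    if t >= orig_knots[-1]:
        return loc_knots[-1] + (t - orig_knots[-1])
    # Find the segment [orig_knots[i], orig_knots[i + 1]) containing t.
    i = bisect_right(orig_knots, t) - 1
    span_o = orig_knots[i + 1] - orig_knots[i]
    span_l = loc_knots[i + 1] - loc_knots[i]
    return loc_knots[i] + (t - orig_knots[i]) * span_l / span_o


# Hypothetical timings: two lines at 0-2 s and 3-5 s in one language,
# 0-3 s and 4-7 s in a wordier translation.
english = [0.0, 2.0, 3.0, 5.0]
german = [0.0, 3.0, 4.0, 7.0]
events = [1.0, 2.5, 4.0, 6.0]   # mid-line gesture, pause, gesture, lingering shot
localized = [remap(t, english, german) for t in events]
```

Pinning to line boundaries keeps gestures inside the line they belong to, and treating the tail after the last line as a fixed offset means a lingering closing shot keeps its original length instead of being stretched.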

Update 3/23/2016: As I wrote above, the algorithmic generation was a starting point for The Witcher 3's dialogue scenes, which designers then turned into better scenes. Piotr Tomsinski sent over a note elaborating on that process.

At CD PROJEKT RED we strongly believe in a hands-on, custom approach to content creation. I'm sure you can tell this from the way the world Geralt traverses was designed, and our interactive cinematic sequences (dialogues, as we call them) are no different. It's not true that "a bunch of math determined how most of the dialogue in The Witcher 3 was arranged and animated".

What does creating a dialogue scene like this look like from a cinematic designer's point of view? First of all, it's not only animating. In fact, there's very little animating at all. Animations are delivered to the animation library, a huge set of gestures, moves and facial animations. A cinematic designer working on a scene simply uses those libraries, crafting an actor's performance from pre-made animation blocks. And it's something no algorithm can do. Does the woman who lost everything in the fire make this gesture or that one? Should it happen while she speaks or during a pause? In what pose does she stand? Should she look away? For how long? Should she be expressive or hide her feelings?

We would like to emphasize that creating a compelling scene is more than just “animating”, which can be seen as the process of constructing the acting. Creating a compelling scene is in fact editing, preparing cinematography, staging and applying other cinematic means of expression. The algorithm didn't compose our shots so that they have depth and balance. The algorithm didn't decide when to cut the camera to show the NPC's reaction or when to move from a medium shot to a close-up. It didn't decide when characters moved or changed poses. It didn't tell us whether a scene should be fast-paced with wide-angle shots or slow with medium lenses.

The “algorithm”, or “generator” as we call it, was used only as a solid base for further development of the scene. It was a shortcut, a tool, but never a goal; more of a production-related thing. It created a rough first pass through a scene, which was always tweaked and adjusted by hand, in all 1,463 dialogues. In many, the algorithm wasn't used at all, as they demanded a custom approach from the very beginning.

Every cinematic dialogue was approached with the same care, attention and goal: to create the most compelling and emotional scene for a given quest and story. Only this way can the characters ring true, and only then will players want to invest in them, to understand them, to help or condemn them: when the characters act like humans, not voiceover-delivery machines. Achieving this is a deliberate, careful process. Procedural doesn't get you this. A designer with empathy does. Because you have to put your heart into something to move someone else's.

Wes has been covering games and hardware for more than 10 years, first at tech sites like The Wirecutter and Tested before joining the PC Gamer team in 2014. Wes plays a little bit of everything, but he'll always jump at the chance to cover emulation and Japanese games.

When he's not obsessively optimizing and re-optimizing a tangle of conveyor belts in Satisfactory (it's really becoming a problem), he's probably playing a 20-year-old Final Fantasy or some opaque ASCII roguelike. With a focus on writing and editing features, he seeks out personal stories and in-depth histories from the corners of PC gaming and its niche communities. 50% pizza by volume (deep dish, to be specific).