How VR Is Resurrecting 3D Audio

Sound off

Your brain is amazing. With two holes and some cartilage on the sides of your head, you can precisely pinpoint the position of an object in 3D space purely based on auditory cues, a process known as localization. Stop for a moment. Listen to the sounds around you. No, really. Stop. Listen. Even if the sounds are outside your field of vision, can you place where they are? Ah, the sweet sound of localization. This is an incredibly useful phenomenon we often take for granted, helping us accomplish an array of activities ranging from safely crossing the street, to escaping overprotective dogs, to creating immersive video games.

Making sense of it all

In real life we have five senses (maybe six if you’re in an M. Night Shyamalan movie): touch, taste, smell, sight, and sound. When it comes to video games and virtual reality, though, only two of these are at our disposal: sight and sound.

In some ways, that’s for the best (smell in a zombie game, anyone?), yet with only two senses to play off of, crafting a truly immersive experience means fully appealing to both. That boils down to high-caliber 3D graphics and truly 3D audio.

While the field of graphics has made near continual strides, the history of PC audio is more tumultuous, ranging from rapid innovation to stalled progress to outright regression. Finally, with the dawn of virtual reality, truly 3D audio is again gaining traction and prominence. By taking lessons from the days of yore coupled with new innovations, virtual reality is pushing for a more immersive auditory experience than ever before.

In need of resurrection

3D audio is ill. Not in the dope rapper sense. Correctly implemented 3D audio is pretty sick, but for nearly the last decade, the overall health of 3D audio has been less than fine. Not to be melodramatic, but in order to understand how virtual reality is resurrecting 3D audio, it’s important to understand why it needs resurrection in the first place.

Three-dimensional sound implies x,y,z coordinates in space for every sound source and its listener, yet most modern games confine sounds to the horizontal plane, with minimal elevation and distance cues. That means sound is essentially stuck in a stationary hula hoop around the listener, allowing for only an anemic, pseudo-3D effect.

The sound of progress?

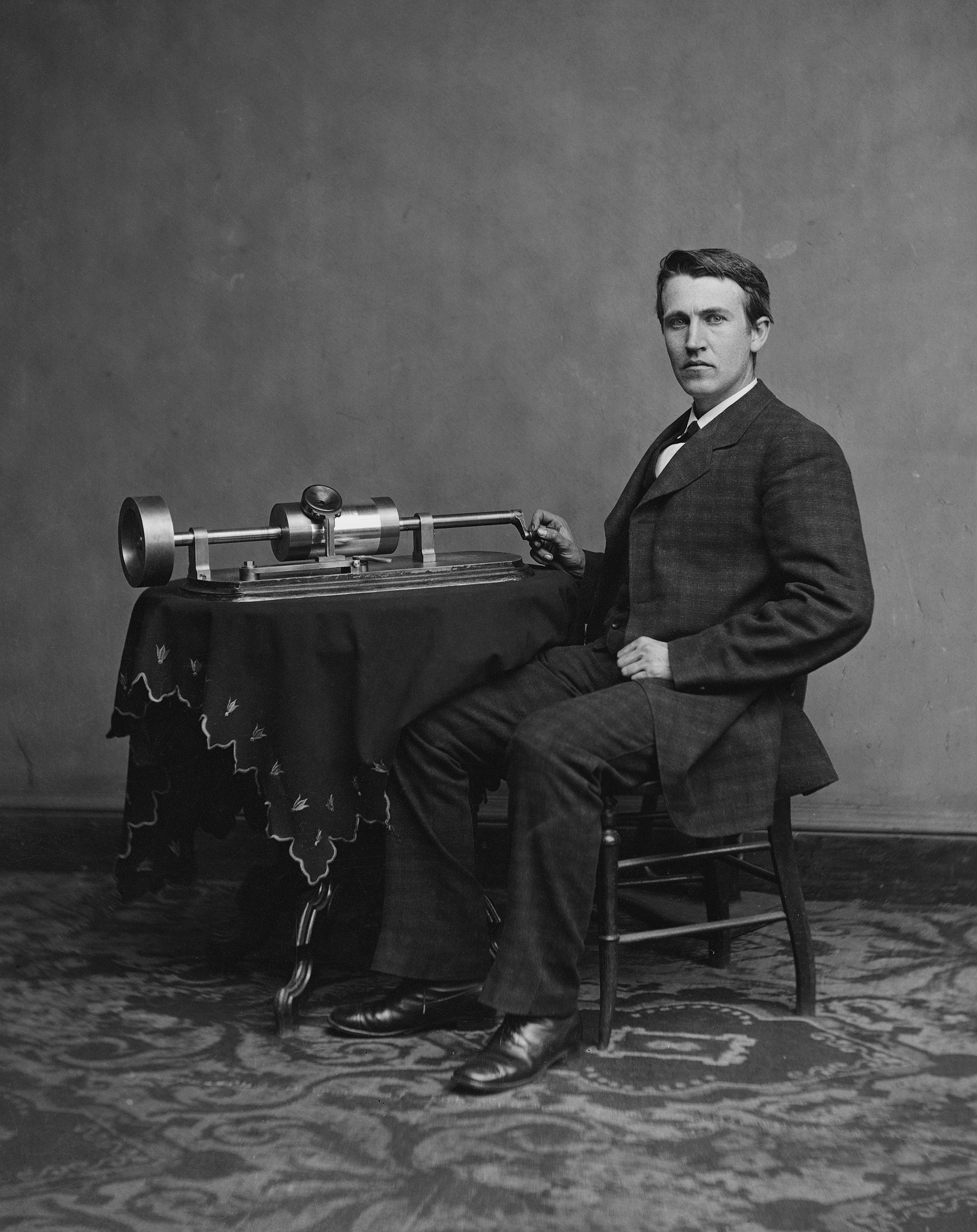

In some ways, sound has improved tremendously over the past several decades, most notably in terms of fidelity and signal-to-noise ratio. We’ve come a long way since Thomas Edison first jumped for joy at the playback of sound on his phonograph in the late 1800s. However, while the overall fidelity of prerecorded sound has, for the most part, consistently improved, the real-time modeling of sound in a 3D space has experienced less sure footing.

So, what’s so hard about making good 3D audio in games anyway, and why isn’t it in my game yet? Well, reproducing high-fidelity sound itself isn’t that hard. However, reproducing the dynamic behavior of sound in a 3D space in real time is a tougher nut to crack.

Space jam

First, we start with a prerecorded sound effect: a sample. Maybe it’s a zombie ralphing. Maybe it’s a gun firing. Maybe it’s your companion’s footsteps crunching in the snow. Whatever the sound effect, it comes from a source and is heard by a listener.

Both the sound source and listener have to be “rendered” or “placed” in a 3D space, a process known as spatialization. Essentially, this means both sound source and the listener have full and dynamic x,y,z coordinates, left-right, back-forth, and up-down. As their positions change, the way that prerecorded audio sample sounds must also change to reflect its position. There are fancy Scrabble words for this, words like azimuth (left-right, forward-back), elevation (up and down), and distance. Though spatialization is incredibly important for auditory immersion, it’s also just the tip of the iceberg. Before the sound even arrives at the listener, it has to take an often convoluted trip through space to get there.
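To make those Scrabble words a little more concrete, here is a minimal Python sketch that turns a listener position and a source position into azimuth, elevation, and distance. The function name and the axis convention (+x right, +y up, +z forward, listener facing +z) are assumptions made for illustration; real engines pick their own conventions.

```python
import math

def spherical_from_positions(listener, source):
    """Return (azimuth_deg, elevation_deg, distance) of source relative to listener.

    Assumed convention (not from the article): +x is right, +y is up,
    +z is forward, and the listener faces +z with no head rotation.
    """
    dx = source[0] - listener[0]
    dy = source[1] - listener[1]
    dz = source[2] - listener[2]
    distance = math.sqrt(dx * dx + dy * dy + dz * dz)
    if distance == 0.0:
        # Source sits exactly on the listener: direction is undefined, report zeros.
        return 0.0, 0.0, 0.0
    # 0 degrees = straight ahead, +90 = hard right, -90 = hard left.
    azimuth = math.degrees(math.atan2(dx, dz))
    # Clamp guards against tiny rounding errors pushing the ratio past +/-1.
    ratio = max(-1.0, min(1.0, dy / distance))
    # +90 degrees = directly overhead, -90 = directly below.
    elevation = math.degrees(math.asin(ratio))
    return azimuth, elevation, distance
```

Feed it a source one unit to the listener's right and it reports an azimuth of about 90 degrees, zero elevation, and a distance of 1. Every time either position changes, those three numbers change with it, and the audio has to follow.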

Much like light, sound rarely travels unaltered from point A to point B. Instead it can undergo a myriad of alterations based on the surrounding environment. Like light, sound can be reflected (early or late reflections), absorbed (absorption/dampening) or blocked entirely (sound occlusion). It can also echo (convoluted reverberations) as it fills a space, all depending on the environment through which it travels. Together, these environmental effects create what is commonly referred to as audio ambience.
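As a very rough illustration of that trip, the Python sketch below mixes a direct path (quieter with distance, quieter still when occluded) with a handful of delayed, attenuated reflections. Everything here is an assumption made for the example: the function name, the inverse-distance rolloff clamped at one meter, and the idea of treating occlusion as a simple 0-to-1 gain reduction. Real engines model far more than this.

```python
def propagate(sample, distance_m, reflections, occlusion=0.0, sample_rate=44100):
    """Crude propagation sketch: direct path plus a few discrete reflections.

    sample      -- list of floats, the dry prerecorded sound
    distance_m  -- source-to-listener distance in meters
    reflections -- list of (delay_seconds, gain) pairs, one per echo path
    occlusion   -- 0.0 for a clear path, 1.0 for a fully blocked direct path
    """
    # Inverse-distance rolloff, clamped so sounds closer than 1 m don't blow up.
    direct_gain = 1.0 / max(distance_m, 1.0)
    # Occlusion only dims the direct path in this toy model.
    direct_gain *= (1.0 - occlusion)

    offsets = [int(round(delay * sample_rate)) for delay, _ in reflections]
    out = [0.0] * (len(sample) + max(offsets, default=0))

    # Direct sound arrives first.
    for i, s in enumerate(sample):
        out[i] += direct_gain * s

    # Each reflection is a delayed, quieter copy of the dry sample.
    for offset, (_, gain) in zip(offsets, reflections):
        for i, s in enumerate(sample):
            out[i + offset] += gain * s
    return out
```

Even this toy version hints at the cost: every extra reflection path is another pass over the audio, for every source, many times a second.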

Accounting for all these environmental effects on the sound waves before they even arrive at the listener’s ear, and in real time, quickly becomes computationally intensive. As a gross simplification, this is basically like adding another physics engine, acoustic raytracing if you will, to your game. It’s also one of the best arguments for hardware-accelerated sound. At any rate, most games today take neither spatialization nor ambience into full account.

The Aureal deal

Now, all this can be a bit hard to wrap your head around, so instead, wrap your head in a set of headphones and take a listen to this. Seriously. Listen to it. This is a real-time 3D audio technology that briefly existed in the late 1990s. Yes, that’s right. Nearly two decades ago the technology to create immersive, lifelike, positional 3D audio was alive and well. The technology was called A3D 2.0, and it implemented many of the above-mentioned effects in real time. The company responsible for this technology was Aureal.

Much of this technology relied on head-related transfer functions (or HRTFs), mathematical filters that describe how sound from a 3D source reaches each ear, shaped along the way by the head, outer ears, and upper body. This essentially helps replicate the auditory cues that allow us to pinpoint, or localize, where a sound is coming from. Again, this was achieved in the late 1990s.
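For the curious, the core trick can be sketched in a few lines of Python. An HRTF has a time-domain twin, usually called a head-related impulse response (HRIR), and filtering a mono sound through a left-ear and a right-ear HRIR yields a two-channel signal for headphones. The sketch below is not A3D's implementation, which the article doesn't detail; the function names are hypothetical and the HRIR values are toy numbers, whereas real ones are measured for many directions around the head.

```python
def convolve(signal, kernel):
    """Plain time-domain convolution of two lists of floats."""
    out = [0.0] * (len(signal) + len(kernel) - 1)
    for i, s in enumerate(signal):
        for j, k in enumerate(kernel):
            out[i + j] += s * k
    return out

def render_binaural(mono, hrir_left, hrir_right):
    """Filter one mono sound through a per-ear impulse response pair."""
    return convolve(mono, hrir_left), convolve(mono, hrir_right)

# Toy HRIR pair (made up for illustration) for a source on the listener's
# right: the right ear hears it immediately at full level, the left ear
# hears it two samples later at half level.
hrir_right = [1.0, 0.0, 0.0]
hrir_left = [0.0, 0.0, 0.5]

left, right = render_binaural([1.0, 0.5], hrir_left, hrir_right)
# left  -> [0.0, 0.0, 0.5, 0.25]
# right -> [1.0, 0.5, 0.0, 0.0]
```

The small delay and level difference between the ears are exactly the kind of cues your brain uses for localization. Measured HRIRs add the subtler spectral coloring that helps with elevation and front-versus-back.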

At this point, if you’ve listened to the above link and feel a little short-changed right now, that’s OK. You probably should. If this technology existed so long ago, why on earth don’t games today sound at least this good? In a word, competition.

The caveat of competition

Ideally, competition is a beautiful thing, bringing the cream of the crop to the top, like when little Johnny decides to run faster as Sally passes him in a foot race. Unfortunately, competition also has the potential to do the opposite and shove the cream to the bottom of the barrel, like when Johnny decides to push Sally as she passes him. Sometimes companies behave the same way.

Aureal was one of the first companies to pioneer 3D audio. To say the least, its sound tech was impressive, especially considering the date it was achieved. Then, Creative, Aureal’s nearest competitor, sued them for patent infringement. Even though it’s widely accepted that Aureal had the superior audio technology, the cost of legal action left Aureal too crippled to proceed. In short, these two companies didn’t play nice, and audio technology stumbled as a result. The unhealthy competition between them not only disrupted the development of 3D audio, it did so at the expense of the consumer, who wound up with an inferior product in the end.

For a while, Creative continued to innovate into what could be considered the golden age of 3D audio. Most of this innovation was built on the backbone of DirectSound and DirectSound3D, two pivotal technologies offered by Microsoft.

The exes

First, let’s clarify a colloquialism. You may have heard the term DirectX tossed around once or twice, probably in reference to some shiny-new graphics feature. Although DirectX is most commonly associated with 3D graphics, it’s actually a whole slew of multimedia APIs (application programming interfaces), essentially layers of software that help powerful hardware hook up with power-hungry software in a standardized way.

The graphics API of DirectX is Direct3D. This is what most people typically refer to when they say DirectX. DirectSound, on the other hand, is (or rather, was) the corresponding audio API. There was also a little extension to DirectSound called DirectSound3D.

DirectSound (and by extension DirectSound3D) did two key things. First, it created a standardized, unified environment in which 3D audio could grow as a technology and be easily utilized by software developers. Second, it allowed for the hardware acceleration of 3D sound, which can be a computationally intensive task. Up until 2006, DirectSound and DirectSound3D were the backbone of many audio applications. Then Vista happened.

The axes

With the release of Windows Vista, Microsoft axed DirectSound3D, pulling the rug out from under Creative and years of audio development as a whole. Both the standard audio API and hardware acceleration went to seed. To understand the true tumult of this, imagine if Microsoft suddenly decided to axe Direct3D. Sure, the graphics industry would likely recover, but it would be a huge blow.

The removal of DirectSound and DirectSound3D was advantageous in some regards, but it was a serious setback for the sound status quo. First, Creative slit Aureal’s tires, then Microsoft sucked the wind out of Creative’s sails.

In the wake of DirectSound3D there was much mention of how hardware acceleration wasn’t needed anymore anyway. While there is some truth to this, the fact is that for many years, software implementations in games generally fell flat because they didn’t perform the array of computations needed to create truly 3D audio. For the better part of a decade, 3D audio backpedaled, stumbling to regain its footing as software alternatives fumbled to fill the void.

The aftermath

Though most of the alternatives from Vista onward have been software based, there have been some hardware-accelerated solutions, such as AMD’s TrueAudio technology, where the GPU helps conquer the math needed to create accurate 3D audio. When we recall that sound is a physics-based phenomenon, and consider the GPU’s increased role in physics rendering (Nvidia PhysX for example), it’s not too far-fetched to say GPUs could have the math chops to serve up some accurate, immersive 3D audio as well. However, the 3D audio sector still remains fragmented.

Ultimately, whether the solution is hardware or software is less important. What is important is that the processing for proper spatialization and ambience happens somehow. For nearly a decade, much of that processing has been glossed over. With the advent of virtual reality, the necessity for truly 3D audio is finally coming to a head. Literally, and that head could be yours.

The honor of your presence

Virtual reality is all about immersion. Oculus Rift specifically emphasizes the concept of presence, or the sensation of physically being in an environment. Both sight and sound help solidify this sensation.

In virtual reality, one of the key ways immersion and presence are accomplished graphically is through low-latency head tracking: you turn your head, or even crawl on your hands and knees, while the display matches your field of vision in real time with no perceptible lag. Interestingly enough, head tracking is also one of the key reasons truly 3D audio is so critical.

In real life, we often pinpoint sounds by moving our head slightly, rotating or cocking it while our brain notes the sonic discrepancies. Mouse-look could previously simulate this to some degree, making truly 3D audio preferable, but head tracking practically mandates it.
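Here is a minimal Python sketch of why head tracking and 3D audio are joined at the hip. A sound source stays put in the world, so when the head turns, the direction of that sound relative to the ears has to turn the opposite way. The function name and the sign convention (positive angles to the right, in degrees) are assumptions for the example, and it only handles yaw; a real tracker also reports pitch and roll.

```python
def head_relative_azimuth(source_azimuth_deg, head_yaw_deg):
    """Direction of a world-fixed source as heard by a rotated head.

    Both angles are measured in the world, positive to the right.
    The result is wrapped into the range [-180, 180).
    """
    return (source_azimuth_deg - head_yaw_deg + 180.0) % 360.0 - 180.0
```

A source 90 degrees to the right of where you started ends up dead ahead (0 degrees) once you turn your head 90 degrees to face it. If the audio engine skips this step, the sound swivels with your skull instead of staying pinned to the object making it.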

3D audio: virtually a mandate

Audio can sell or dispel the sensation of presence in virtual reality. Properly implemented, 3D audio solidifies a scene, conveying information about where objects are and what type of environment we are in. Tracking a moving object with your head is one thing, but hearing the sound match its precise location is equally imperative. Both visual and auditory cues amplify each other. If the cues conflict, immersion is lost.

Imagine if you saw an object move above you, but it sounded as if it were beside you? Or imagine you were listening to a character, but no matter where you looked their voice always sounded as if it were in front of you? Immersion would be shattered, and consequently the sensation of presence would be lessened or lost altogether.

3D audio is also important because it can somewhat stand in for the currently absent sensation of touch, such as when we hear the sound of things we feel, like wind rushing in our face, or rain falling around us. Check out this excellent video from the Oculus Connect Conference to learn more about the importance of 3D audio in virtual reality.

But it’s not just that a lack of properly implemented 3D audio can undermine immersion. It’s that truly 3D audio can entirely augment it in a multiplicative fashion. That’s why virtual reality is making such a pronounced push for truly 3D audio that incorporates both spatialization and ambience.

An audio resurrection

Back in 2014, Oculus licensed VisiSonics’ RealSpace3D audio technology, ultimately incorporating it into the Oculus Audio SDK (HTC Vive has been comparatively coy about its 3D audio solution). The tech relies heavily on custom HRTFs to recreate accurate spatialization over headphones, the same principle Aureal pushed nearly two decades ago. Head here to peep the audio technology working its sonic magic on the Rift in real time.

The best part of all this is that Oculus isn’t only incorporating it into their audio SDK. They are offering their audio SDK for free, and not just for VR, but for any platform, including plain-Jane PC games. While there are many other viable third-party audio solutions, this is a great step toward offering an easily accessible, high-quality 3D audio baseline, and perhaps even the start of a standard for truly 3D audio in games, a standard that has been lacking since the demise of DirectSound3D nearly a decade ago.

For some time now, truly 3D audio has been dead, or if you’re an optimist, in serious need of resurrection. Thanks to VR, that need for resurrection is finally gaining attention and traction. 3D audio is no longer viewed as optional seasoning. Instead, truly 3D audio is a key ingredient, one that augments and multiplies the entire VR experience, taking presence and immersion to a height mere graphics never could.