The AMD Ryzen Threadripper 1950X and 1920X Review

AMD lays claim to the fastest consumer CPU on the planet, outside of games.

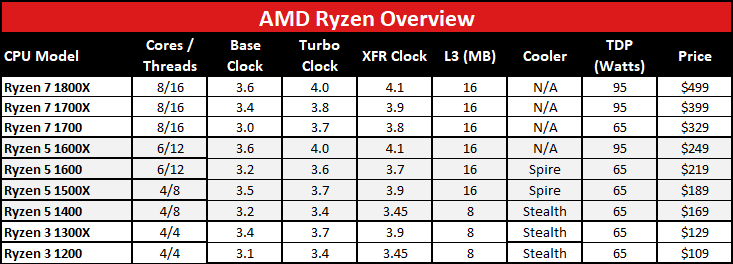

AMD has been on a roll this year, at least in its CPU division, and the positive vibes coming off the people in charge at the recent Tech Day were almost palpable. Ryzen isn't necessarily the fastest CPU around, but for the price, it's damn hard to beat. From the budget-minded Ryzen 3 to the midrange Ryzen 5, and on to the high-end Ryzen 7, there are reasons to consider going with an AMD CPU at every pricing tier. The one exception has been at the ultra-high-end, where halo parts like Intel's i9-7900X and i7-6950X reign as expensive but powerful kings. That changes today.

Threadripper has been an ill-kept secret for the past several months, but from the look of things Intel wasn't really counting on AMD having competitive 16-core products for the enthusiast (and prosumer) sector. The Core i9-7900X was always going to happen, and likely the i9-7920X as well, but if Intel had its druthers both CPUs would be priced substantially higher. Look no further than 2016's Broadwell-E launch, where the new CPUs offered the same core counts as Haswell-E but at higher prices, and the i7-6950X catapulted the Extreme Edition part from $1000 to $1723. But with the threat of a 16-core halo product from AMD, Intel needed something more… only it will still be a month or so before we see the 18-core i9-7980XE, and never mind the $2000 price tag.

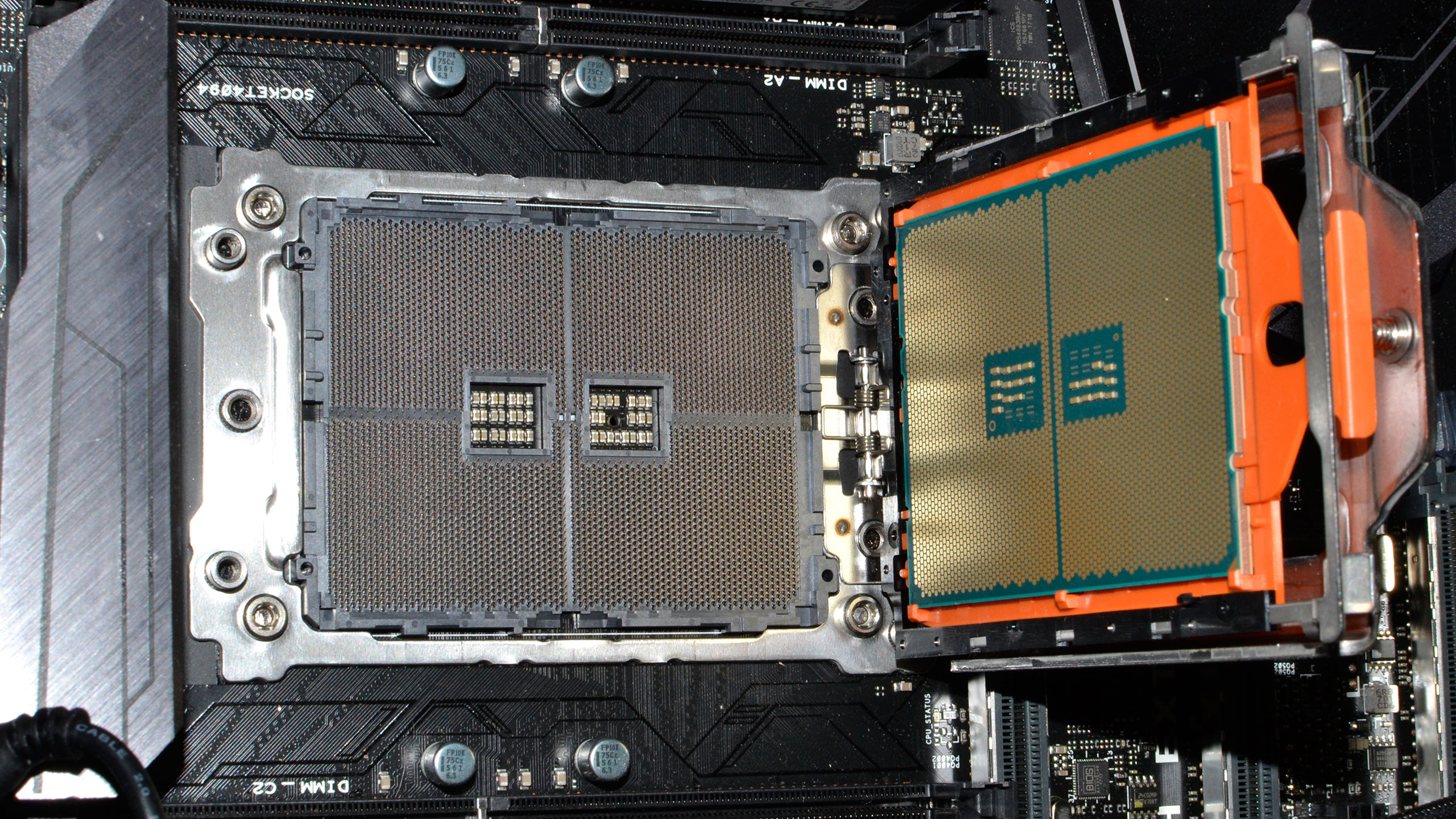

AMD has accomplished this rapid deployment of top-shelf CPU performance by tweaking its server CPU, Epyc. (That should sound familiar to Intel fans, since all the X79, X99, and now X299 enthusiast processors are similarly tweaked versions of Intel's Xeon workstation chips.) While Epyc tops out with a 32-core/64-thread part, complete with 128 PCIe lanes, 8-channel memory, and dual-socket support, AMD basically disabled half of Epyc and modified the socket interface to allow half the cores, threads, PCIe lanes, and memory channels. Welcome to Threadripper and socket TR4.

This is an excellent example of AMD's newfound scalability, which AMD credits to its Infinity Fabric. Intel might poke fun at Epyc and Threadripper "just using consumer CPUs that are glued together," but Intel has done the same thing in the past (e.g., Pentium D and Core 2 Quad). By leveraging the same basic design, AMD has managed to launch a complete top-to-bottom suite of CPUs in less than six months. Meanwhile, Intel launched Kaby Lake earlier this year, which is a modest update to the Skylake architecture that debuted in 2015, and more recently Intel released Skylake-X and Kaby Lake-X, which are also refinements of the Skylake architecture. That's one core architecture, spaced out over a two-year period and counting, as Coffee Lake and Cannon Lake will continue to use much of Skylake's design.

Of course, Intel didn't have a need to radically change its CPU architectures, since its existing Haswell and even Ivy Bridge and Sandy Bridge parts (4th, 3rd, and 2nd Gen Core, respectively) were already outperforming AMD's best CPUs. Skylake and Kaby Lake merely increased the gap between Core i3/i5/i7 and AMD's aging FX-series CPUs, while AMD's APUs were mostly used in low-power devices like laptops, or in budget PCs. In other words, AMD had a lot of catching up to do. The good news is that the Zen architecture is the biggest generational jump in CPU performance we've ever seen from AMD.

The gap isn't gone, however. The Zen architecture is roughly 50 percent faster in IPC (instructions per clock) than the Piledriver architecture used in the FX-8350 (and similar) CPUs. That puts Zen just ahead of Intel's Haswell/Broadwell and slightly behind Skylake/Kaby Lake. But IPC is only part of the equation, the other part being core counts. AMD has offered "more cores for less" going back to the original FX-8150 8-core CPU, but the problem was the cores in Bulldozer (and later Piledriver) weren't particularly potent. Zen is much better, and with 8-core/16-thread CPUs priced around the same level as Intel's 4-core/8-thread and 6-core/12-thread Core i7 parts, AMD has the edge in aggregate CPU performance.

Threadripper takes that same approach and moves in on Intel's X299 enthusiast platform, which for the present still tops out with the 10-core/20-thread i9-7900X. For the same $1,000 asking price, AMD's Ryzen Threadripper 1950X gives you a 16-core/32-thread CPU: 60 percent more cores/threads, balanced against slightly lower clockspeeds. Step down a notch and the $800 Threadripper 1920X has a 12-core/24-thread design going up against Intel's 8-core/16-thread i7-7820X, except that Intel's chip actually costs $200 less than the 1920X. The i7-7820X also offers just 28 PCIe lanes, vs. 60 PCIe lanes on Threadripper, in case you want to add more than just a single GPU and a few other high-performance peripherals.
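To make the cores-for-the-money comparison concrete, here is a rough Python sketch using only the prices and core counts quoted above. The i7-7820X price of $600 is derived from the "$200 less than the 1920X" remark, and the helper names are made up for illustration.

```python
# Prices and core counts as quoted in the review. The i7-7820X price is
# derived from "costs $200 less than the 1920X", not from a price list.
cpus = {
    "Threadripper 1950X": {"cores": 16, "price": 1000},
    "Core i9-7900X": {"cores": 10, "price": 1000},
    "Threadripper 1920X": {"cores": 12, "price": 800},
    "Core i7-7820X": {"cores": 8, "price": 600},
}


def percent_more_cores(a, b):
    """Return how many percent more cores CPU `a` has than CPU `b`."""
    return (cpus[a]["cores"] / cpus[b]["cores"] - 1) * 100


def dollars_per_core(name):
    """Return the asking price divided by the physical core count."""
    return cpus[name]["price"] / cpus[name]["cores"]


for name in cpus:
    print(f"{name}: ${dollars_per_core(name):.2f} per core")

gain = percent_more_cores("Threadripper 1950X", "Core i9-7900X")
print(f"1950X vs. i9-7900X: {gain:.0f} percent more cores")
```

Dollars per core ignores clockspeed and IPC differences, so treat it as a first-pass value metric rather than a performance prediction.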


| Testbed | Motherboard | Memory | SSD | Case | Power supply |
| --- | --- | --- | --- | --- | --- |
| AMD TR4 | Asus X399 Zenith Extreme | 32GB G.Skill TridentZ DDR4-3200 CL14 | Samsung 960 Pro 512GB | Open Bench Table | Thermaltake Grand 1200W |
| Intel X299 | Asus Prime X299-A | 16GB Crucial Ballistix DDR4-2666 CL16 | Samsung 960 Pro 512GB | Open Bench Table | Seasonic Prime 1000W |
| AMD AM4 | Gigabyte Aorus X370 Gaming 5 | 16GB GeIL Evo X DDR4-3200 CL16 | Samsung 960 Evo 500GB | Enermax Ostrog Lite | Enermax Platimax 750W |
| Intel LGA1151 | MSI Z270 Gaming M7 | 16GB GeIL Evo X DDR4-3200 CL16 | Samsung 960 Evo 500GB | Corsair Carbide Air 740 | Corsair HX750i 750W |

Common hardware (all testbeds): GeForce GTX 1080 Ti FE, Samsung 850 Pro 2TB

And that leads us into the main event: a battle of the $1,000 heavyweights from AMD and Intel. Before we hit the benchmarks, I want to make one thing clear: neither the i9-7900X nor the Threadripper 1950X is a gaming CPU. Oh, sure, they can both run games quite well, but there are numerous games where the i7-7700K and even the i5-7600K will beat the heavyweights. Think of it as a fast and agile fighter taking down a lumbering giant: one landed punch might do the plucky featherweight in, but landing that punch can be difficult. Rather than gaming, both AMD and Intel are pushing heavy multitasking workloads as a primary focus, and content creators in particular will find plenty of ways to put the extra cores to use, like playing a game while encoding an HEVC video in the background, or setting up the next frame for a 3D rendering while the current frame render is going on.

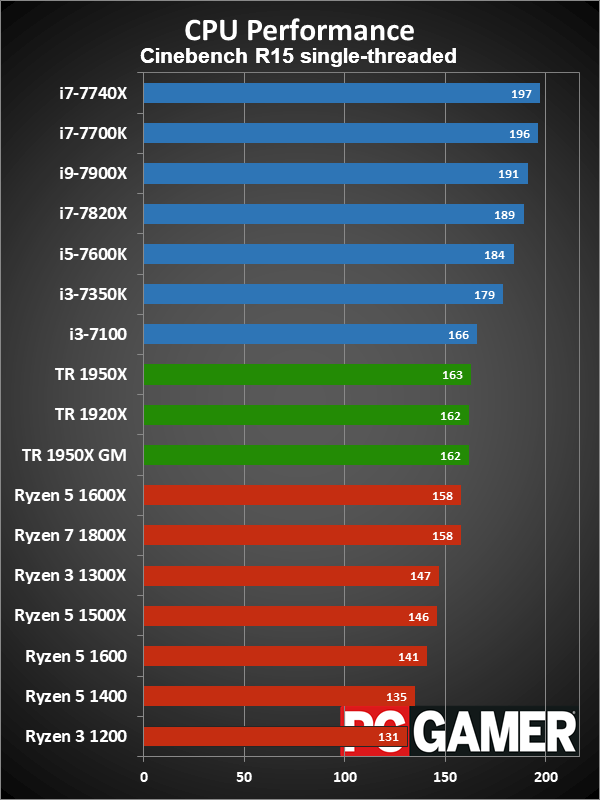

If you're wondering why the higher core counts don't usually help gaming performance, and in some cases hurt it, it goes back to the architectural designs, specifically the L2/L3 cache and memory latencies. Threadripper and Core i9 both have more cache and twice the theoretical memory bandwidth of the mainstream consumer parts (all the socket AM4 and LGA1151 CPUs). The problem is, accessing all that memory and cache actually takes longer. If you're running a workload that can leverage all 32 threads, the slight memory performance deficit isn't a big deal… but most games don't fall into that category. AMD says Threadripper's 4-channel memory controller on average has 87ns of memory latency, compared to 72ns for Ryzen 7, along with higher L3 cache latency (and slower L2 latency when accessing data in a different core's cache). The same thing is true for Intel's X299 processors. Combine that with slightly lower clockspeeds (relative to the i7-7700K at least), and the many-core chips often fall behind in gaming workloads.

What if you sometimes want to play games on Threadripper, and you don't like losing performance compared to the Ryzen 7 1800X? AMD offers a solution: Game Mode. Using AMD's Ryzen Master software, enabling Game Mode disables half the cores and tells Windows to function in NUMA (Non-Uniform Memory Access) mode. NUMA tells the OS to use memory closer to the CPU cores first, the low-latency RAM, and while all four memory channels are still enabled, only workloads that overflow the first two channels (16GB in our test system) will end up in the 'slower' RAM. The problem with Game Mode: you'll have to reboot to enter/exit it, and you're losing half of your CPU cores.
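As a toy illustration of that local-first behavior, the Python sketch below splits a workload's memory between the "near" and "far" halves of the RAM and estimates a blended latency. The 16GB local capacity comes from our test system; the latency figures in the example call are placeholders rather than measured values, and the model assumes accesses are spread evenly across the allocated memory, which real workloads rarely do.

```python
def split_allocation(working_set_gb, local_capacity_gb=16.0):
    """Return (local_gb, remote_gb) for a local-first NUMA allocation."""
    local = min(working_set_gb, local_capacity_gb)
    remote = max(working_set_gb - local_capacity_gb, 0.0)
    return local, remote


def average_latency_ns(working_set_gb, local_ns, remote_ns, local_capacity_gb=16.0):
    """Blend local and remote latency, weighted by where the memory lives.

    Assumes every allocated gigabyte is accessed equally often.
    """
    local, remote = split_allocation(working_set_gb, local_capacity_gb)
    total = local + remote
    if total <= 0:
        raise ValueError("working set must be larger than zero")
    return (local * local_ns + remote * remote_ns) / total


# Hypothetical latencies, purely to show the shape of the curve.
LOCAL_NS = 70.0
REMOTE_NS = 110.0

for size_gb in (8, 16, 24, 32):
    latency = average_latency_ns(size_gb, LOCAL_NS, REMOTE_NS)
    print(f"{size_gb}GB working set: about {latency:.1f}ns average")
```

The point of the sketch is that anything fitting in the first 16GB never pays the remote penalty, which is why a typical game sees the benefit.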

I can't really see many people buying Threadripper and then using Game Mode, and even AMD admits it's more of a feature for the few who might want it. Still, I tested with Game Mode on the 1950X, just to show you how it affects performance. You might expect the resulting CPU to be pretty similar to the 1800X, and you'd be right, but apparently binning and some other refinements in the chipset allow the 1950X at least to beat the 1800X in most scenarios.

One final tidbit before we get to the benchmarks. All of the AMD Ryzen CPUs were tested with DDR4-3200 memory, though the Threadripper chips were tested with higher quality CL14 kits. The mainstream CPUs were all tested with DDR4-3200 CL16 dual-channel kits, while the Intel X299 system used DDR4-2666 CL15 memory. (It's what Intel shipped the kit with, and I haven't had a chance to retest using the faster RAM yet.) All systems were also equipped with SSDs, with games loaded from a 2TB SATA SSD while the OS boots from a fast M.2 NVMe SSD. The full details of the test systems can be seen in the (friggin' huge!) boxout above.

Ryzen Threadripper CPU performance

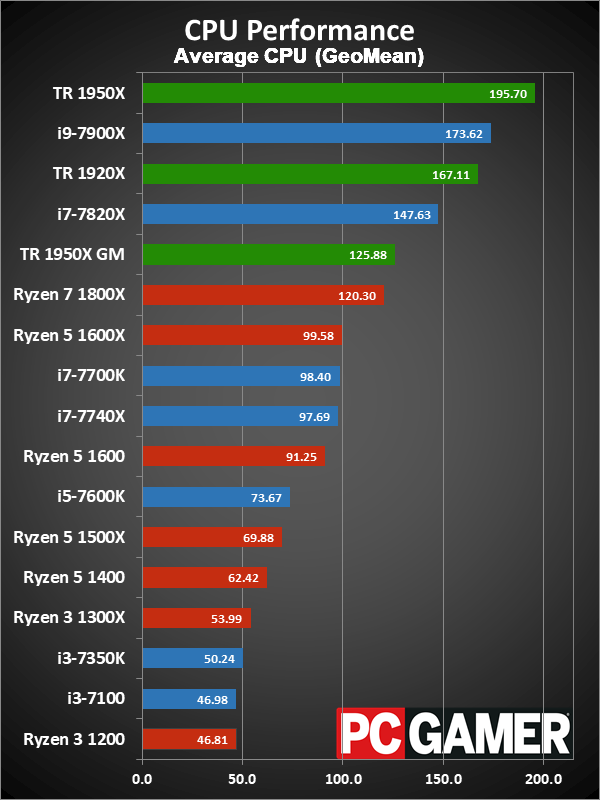

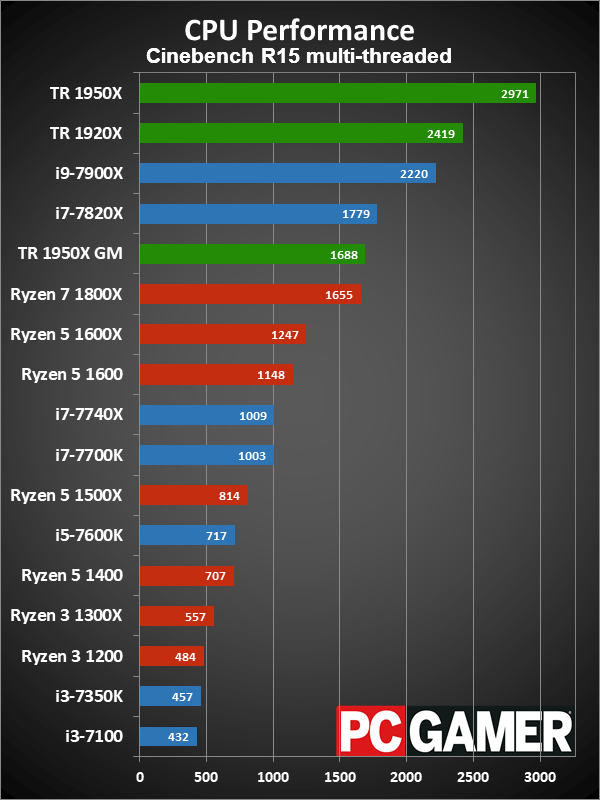

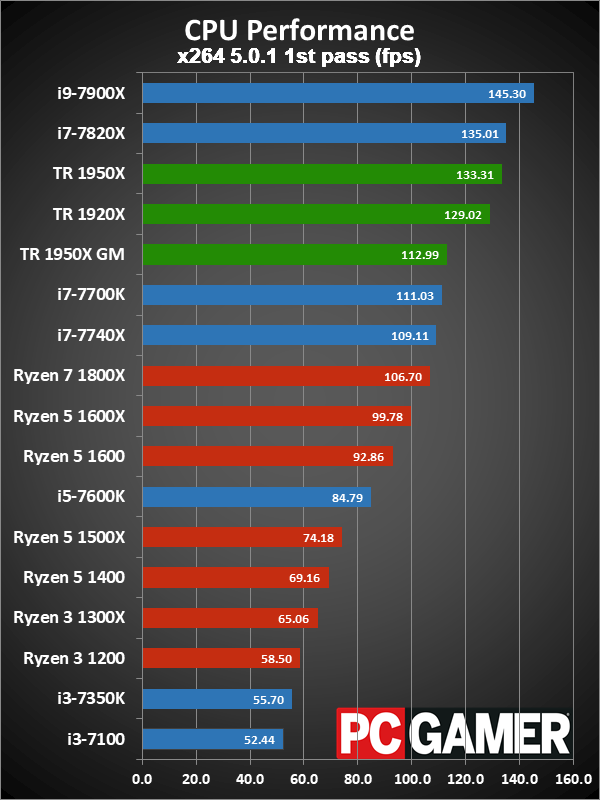

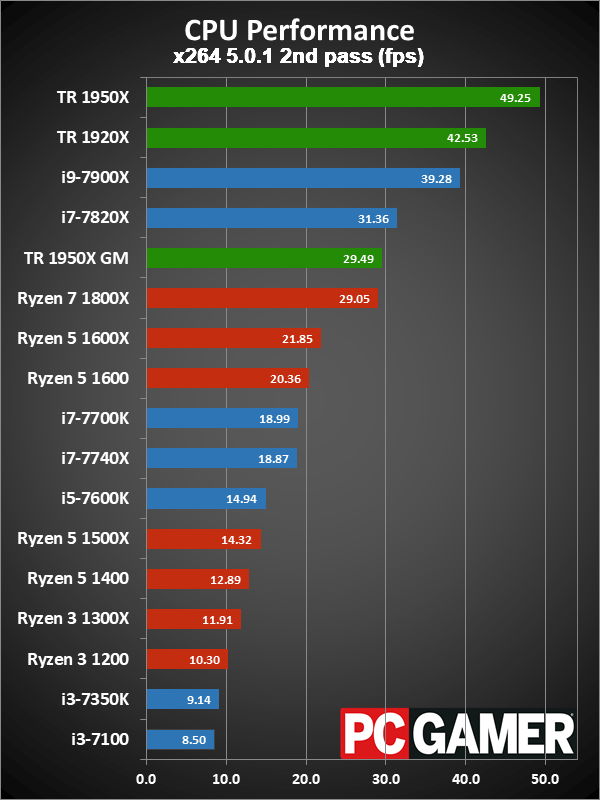

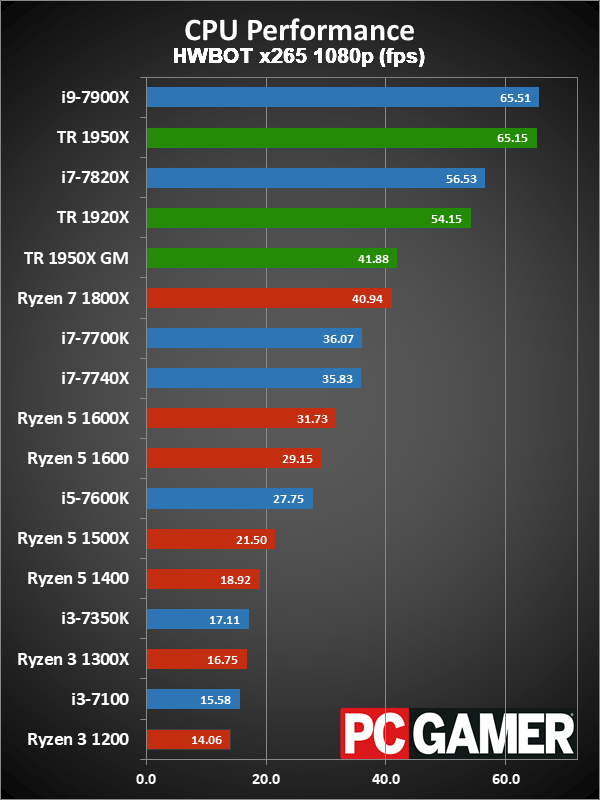

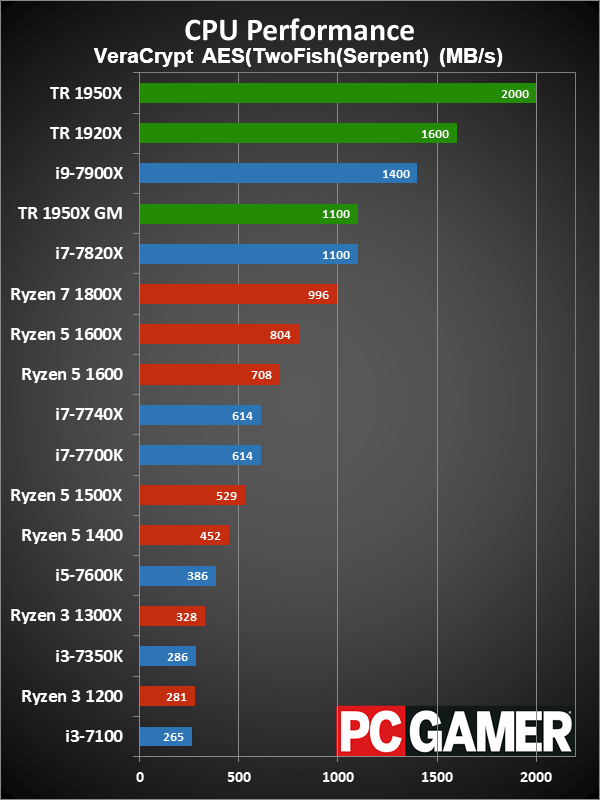

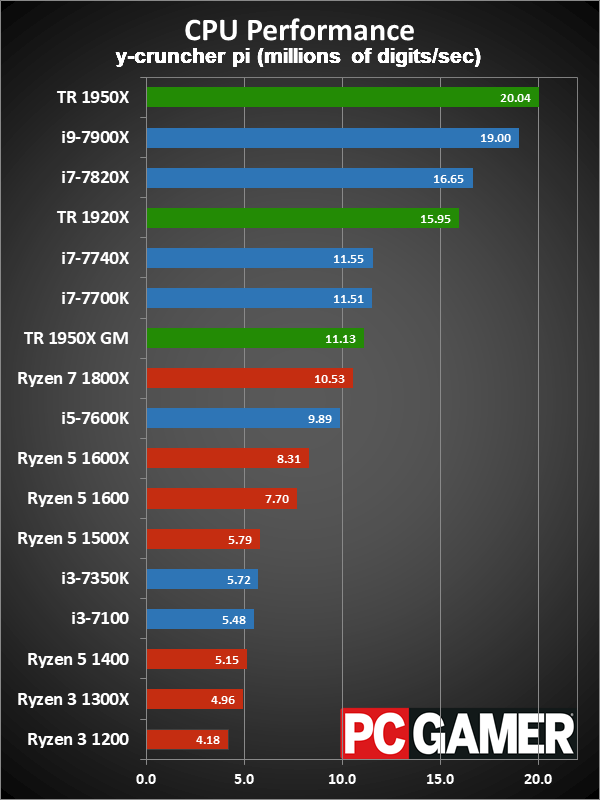

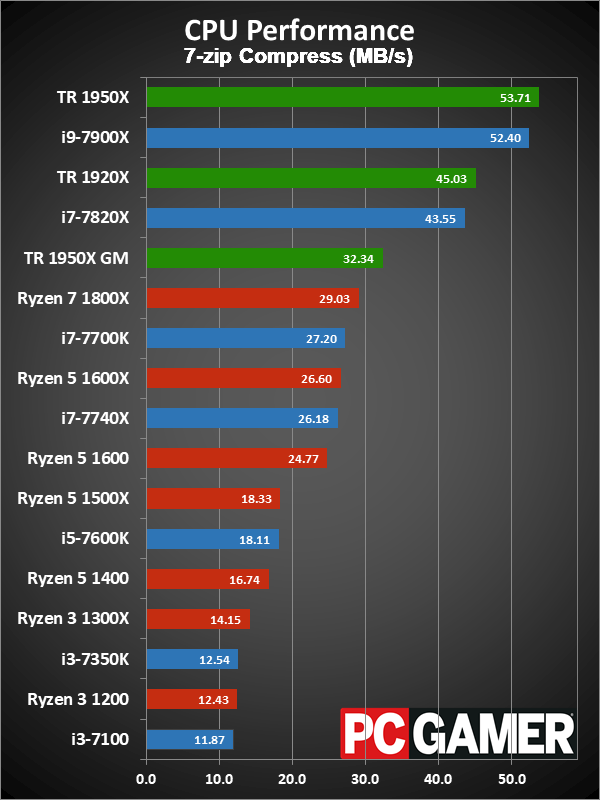

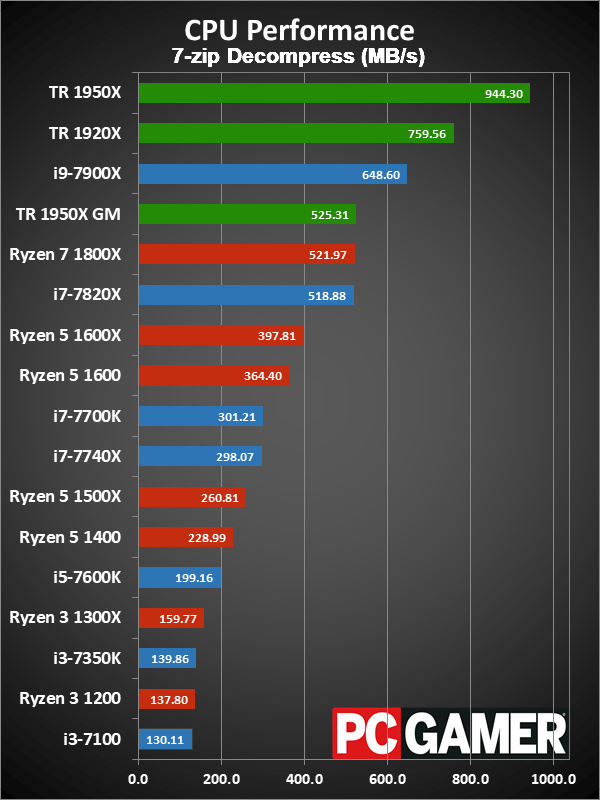

I know, I know—it's PC Gamer, where the hell are the gaming benchmarks!? As I said already, Threadripper is not a gaming-centric CPU, and to drive that point home, I'm starting with the CPU benchmarks. Threadripper has 32 and 24 threads, on the two models I'm testing, and if you're in the market for a high-end desktop, you better be doing more than just playing Overwatch. I've included one single-threaded workload (Cinebench), along with several other multi-threaded workloads that should scale well with increased core counts.

Given the lead-in, obviously Threadripper is going to be potent, but it sure is nice to see an AMD CPU topping the performance charts for a change. In aggregate, the 1950X is 13 percent faster than the i9-7900X, and several tests show the raw power of Threadripper, with VeraCrypt and Cinebench being particularly strong points. If you run an application that can leverage all 32 threads in the 1950X, prepare to be impressed. The 1920X also does well, often beating the i9-7900X, though it trails in the overall metric.

I have to say that several of the benchmarks are probably doing the chip a disservice. x264 for example is several years old, and while I've updated to a newer build, I'm looking to retire the test. Sometime in the coming months, I'll move to Handbrake AVC and HEVC encodes most likely, as that's a more agnostic setup. Still, for applications that can make use of AVX (like y-cruncher and x265), Intel isn't entirely out of the running.

A few other points that aren't covered in the benchmarks gallery are worth mentioning. First, despite having a 180W TDP compared to 140W TDP on Intel, power draw in most cases is very similar—I measured a CPU load of 280W in Cinebench on the i9-7900X compared to 266W with the 1950X and 263W on the 1920X. Idle power on the other hand definitely favors Intel, 68W for X299 compared to 106W with Threadripper. But saving a bit of power at idle isn't really the point of a HEDT build.

The other item I want to mention is that I retested the i9-7900X, and added the i7-7820X, using an Asus motherboard, as the Gigabyte board I used in my earlier testing tended to auto-overclock. The Asus board on the other hand is running full stock clocks, so this is basically the minimum performance you should expect from an X299 system. And yes, I still need to retest some of the other Ryzen 7 chips, along with the old FX-8370 I have stored in the garage. Time is a harsh mistress….

Ryzen Threadripper gaming performance

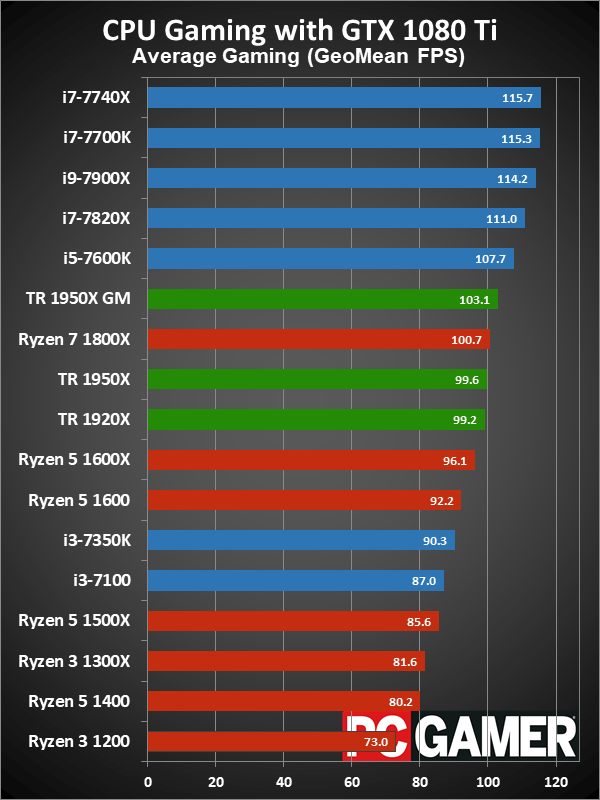

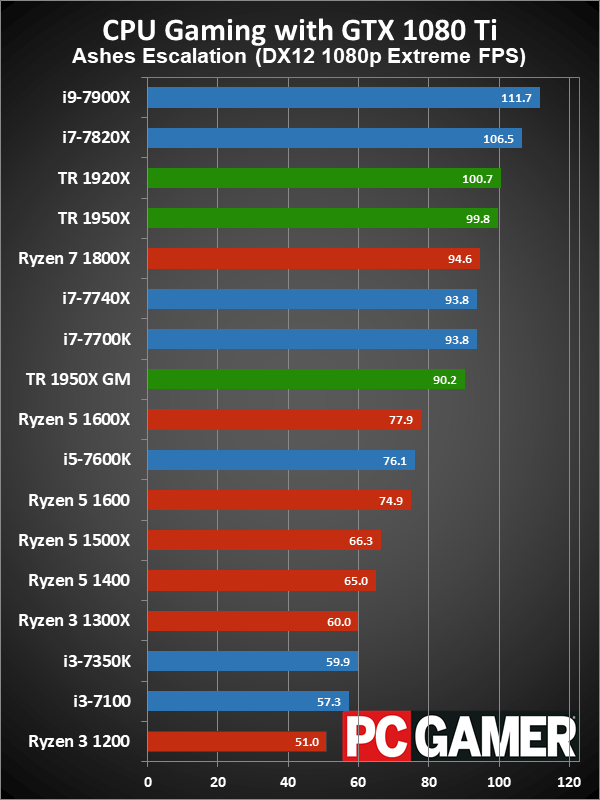

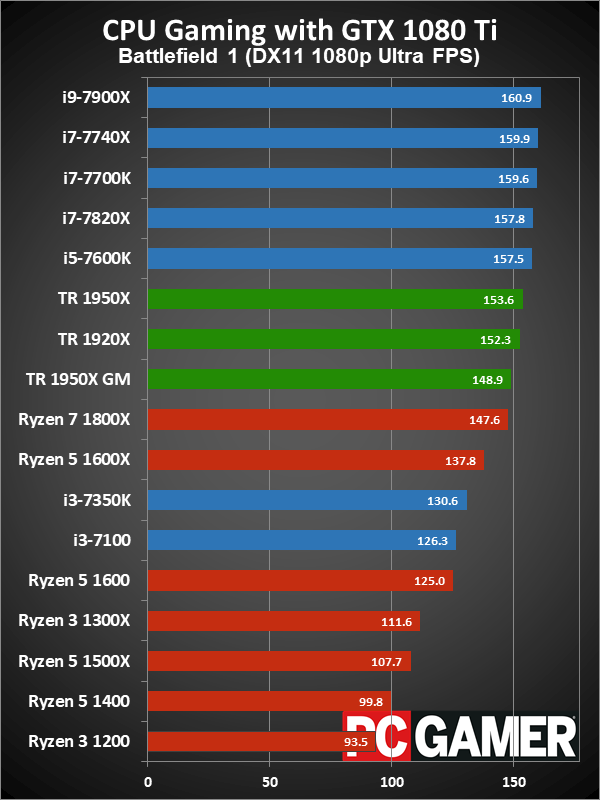

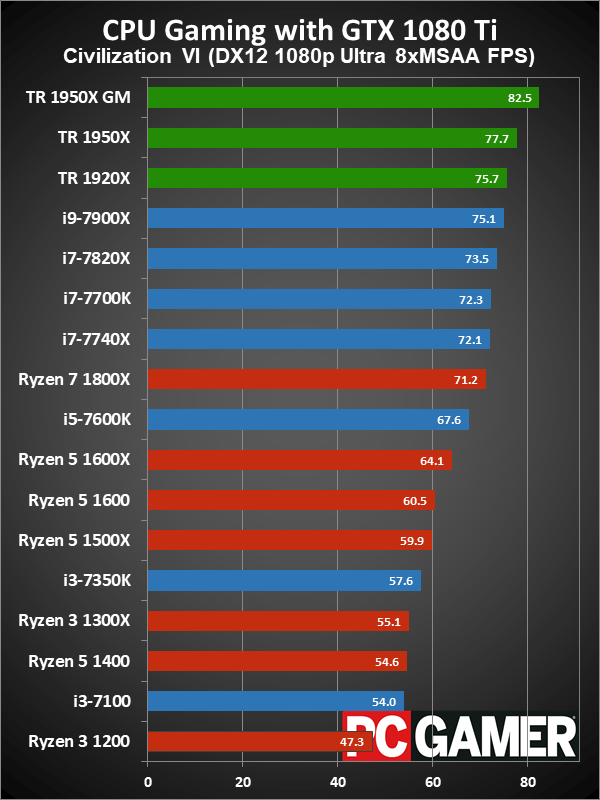

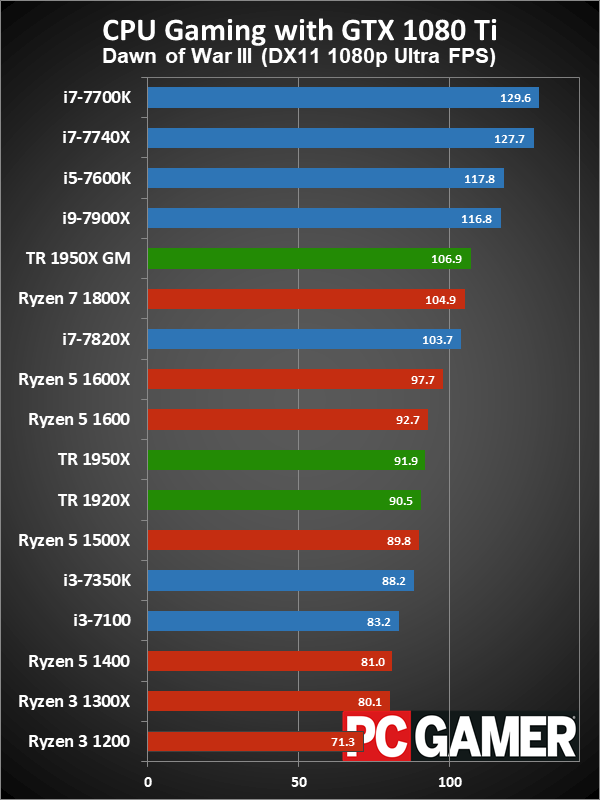

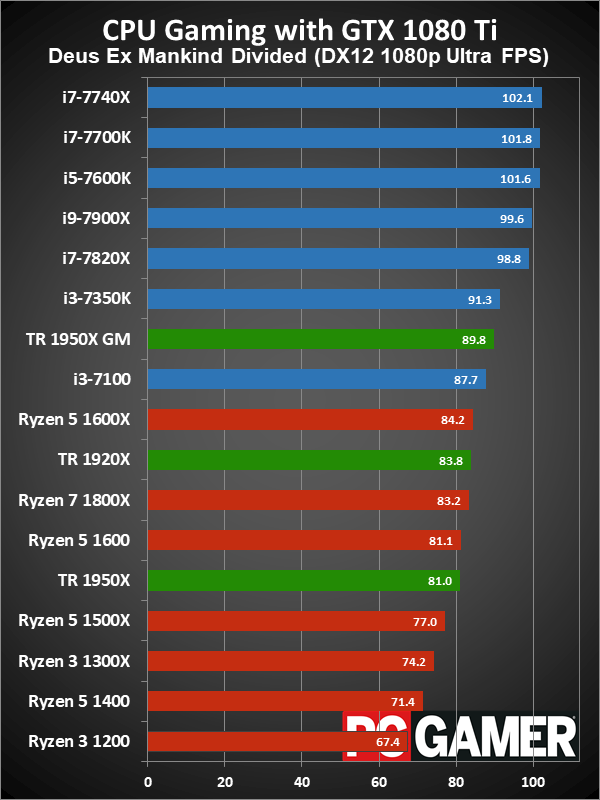

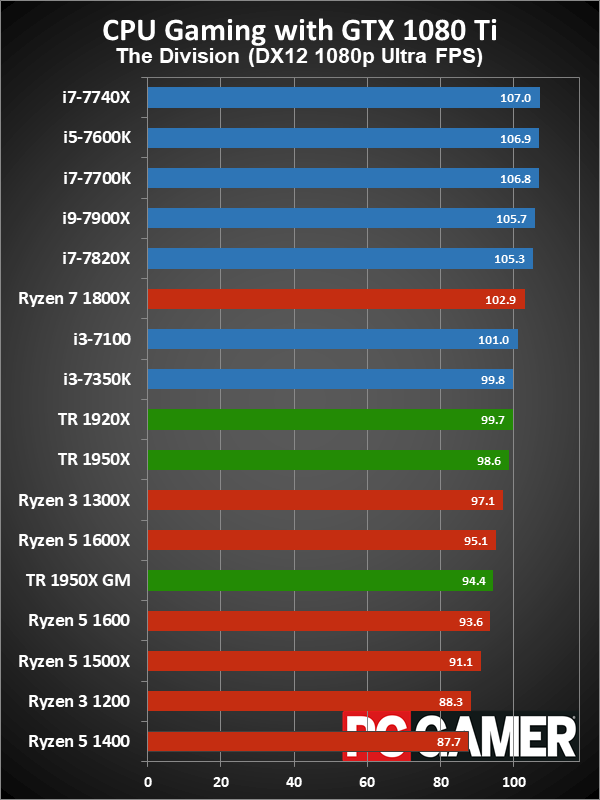

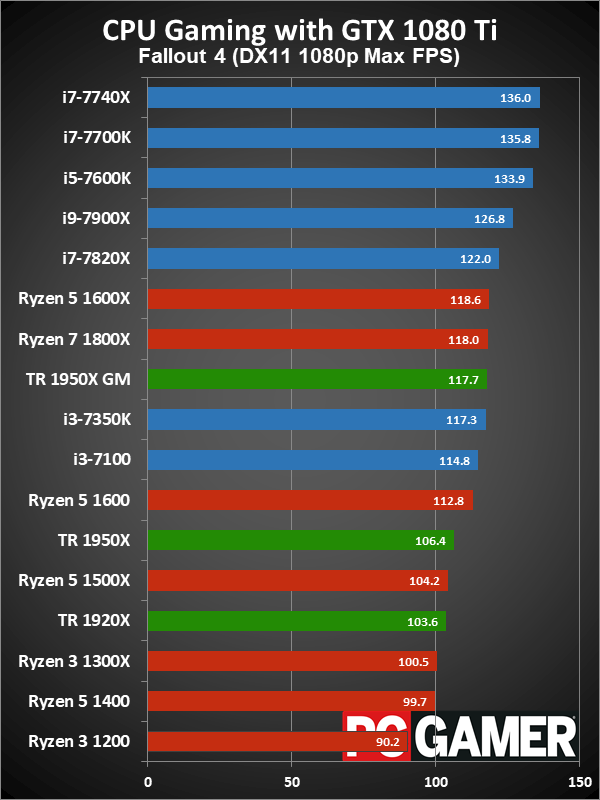

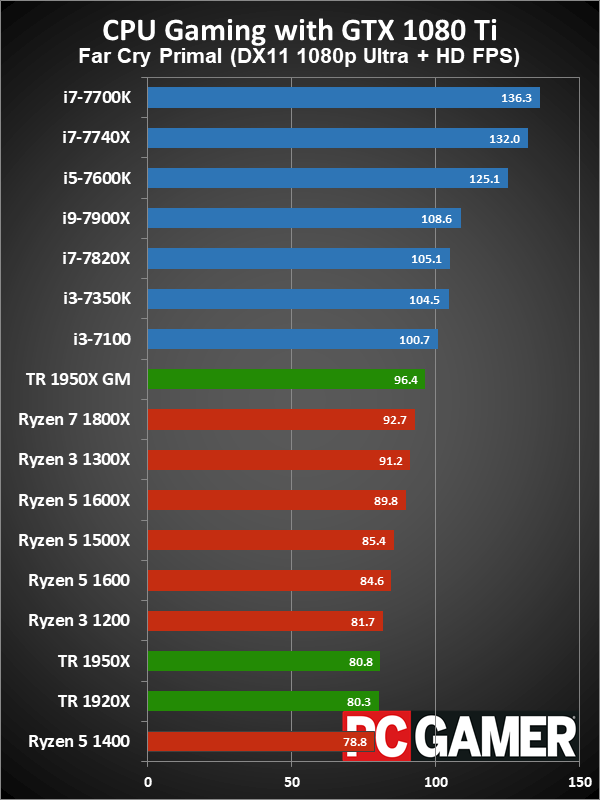

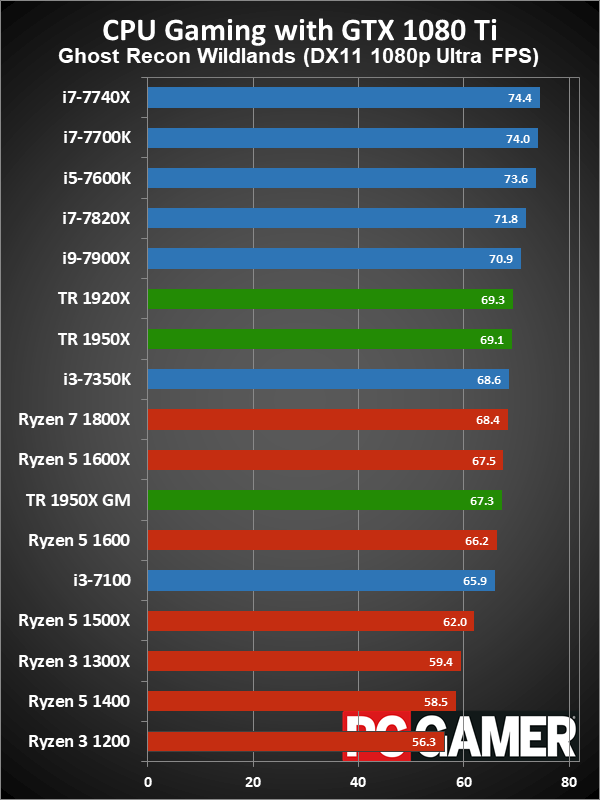

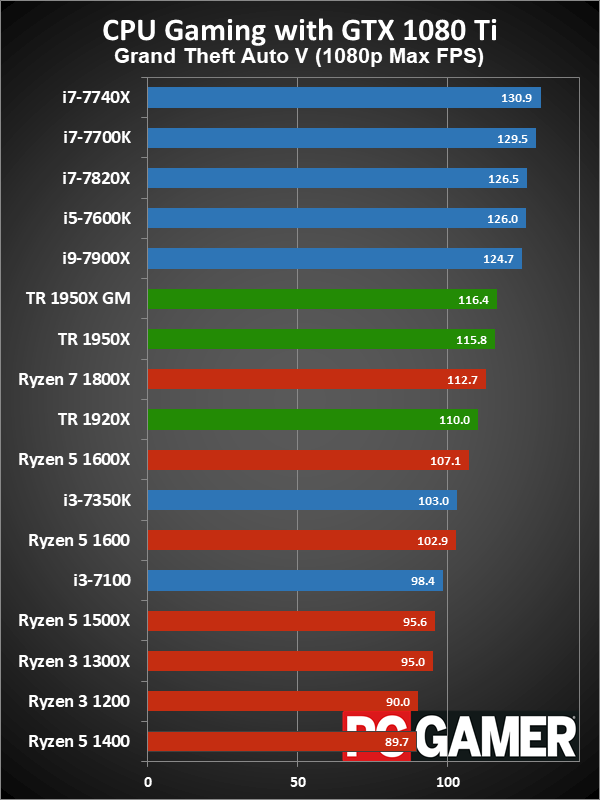

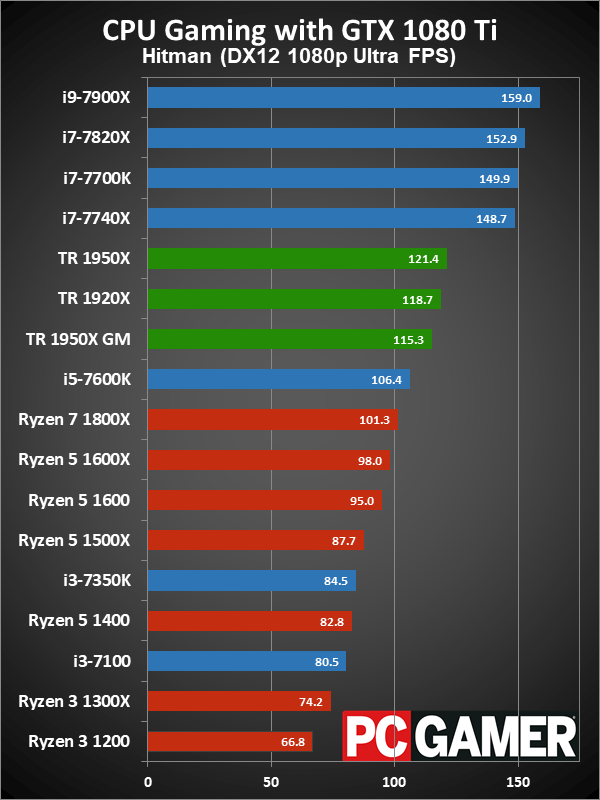

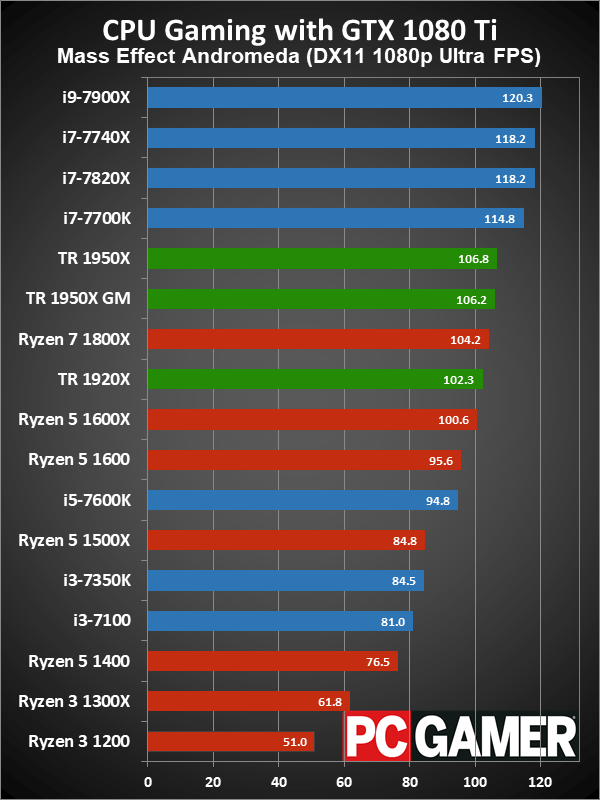

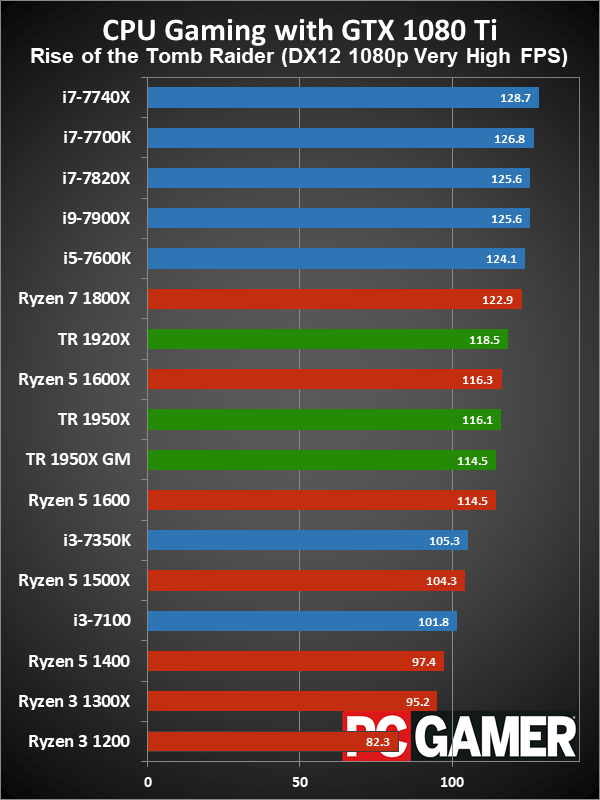

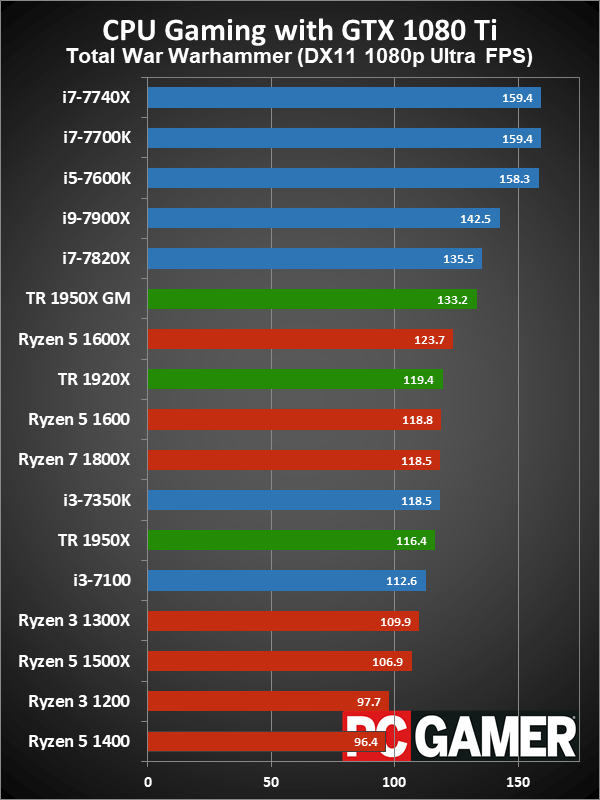

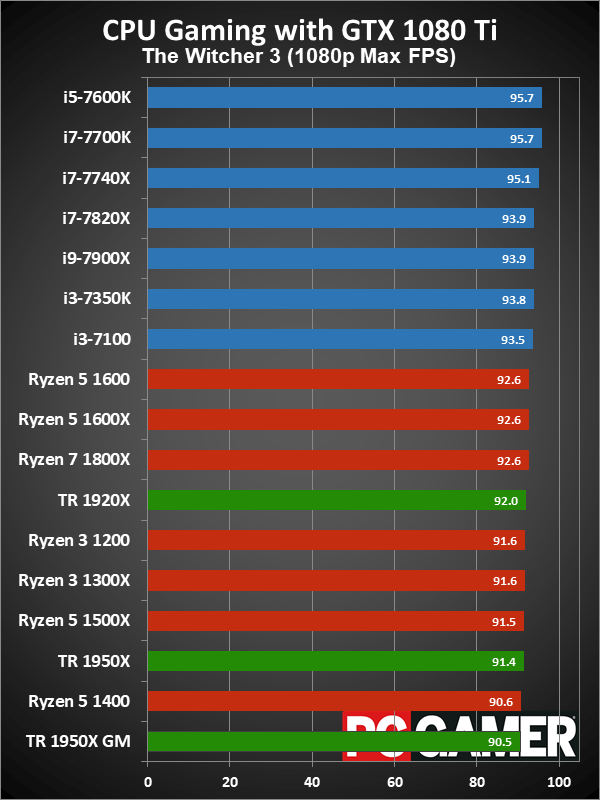

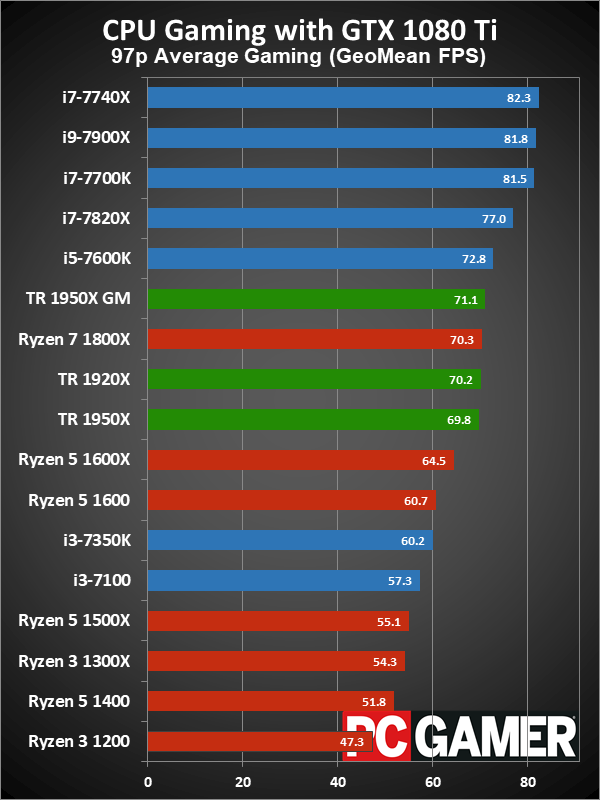

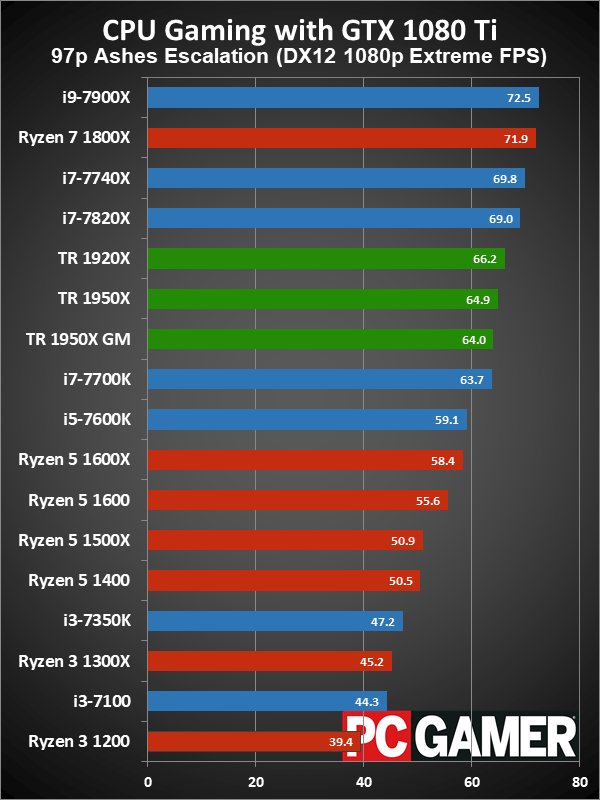

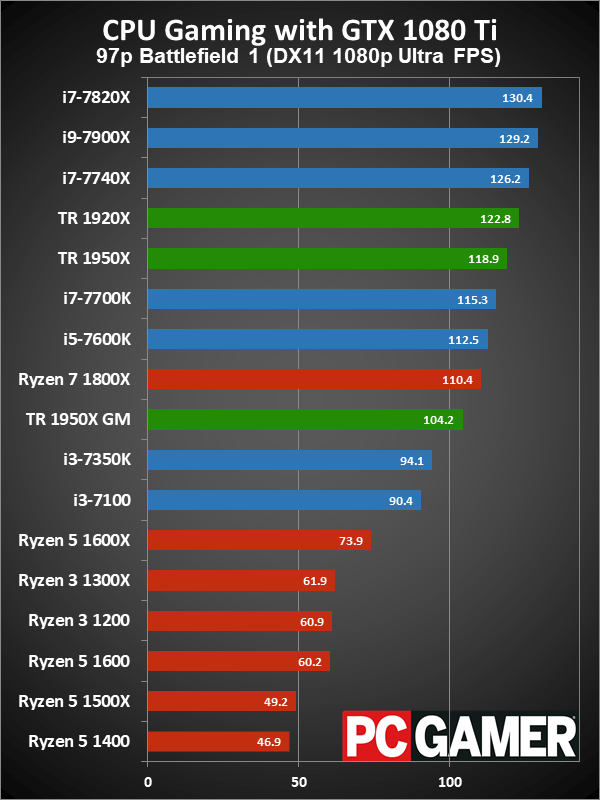

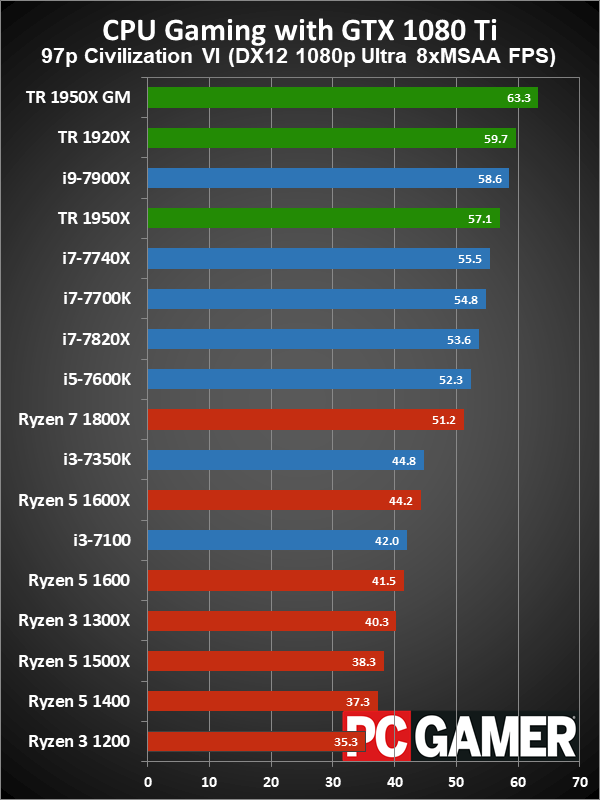

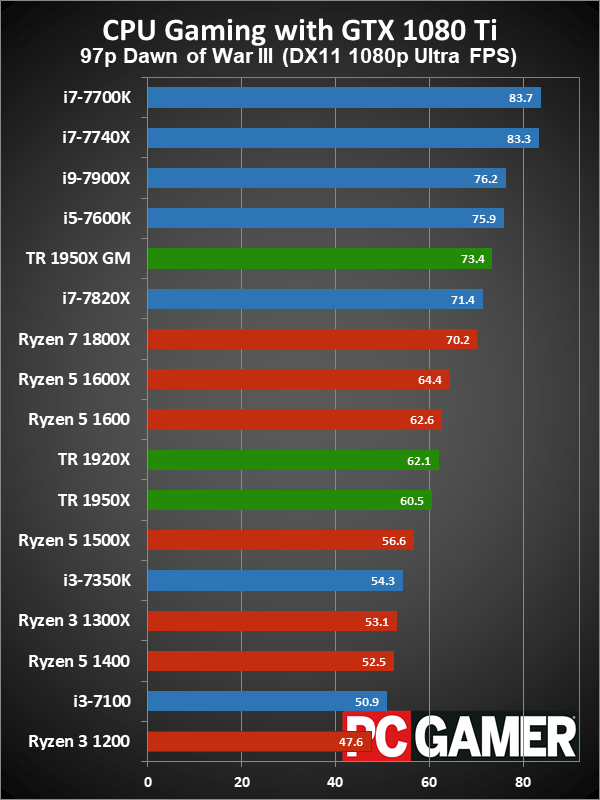

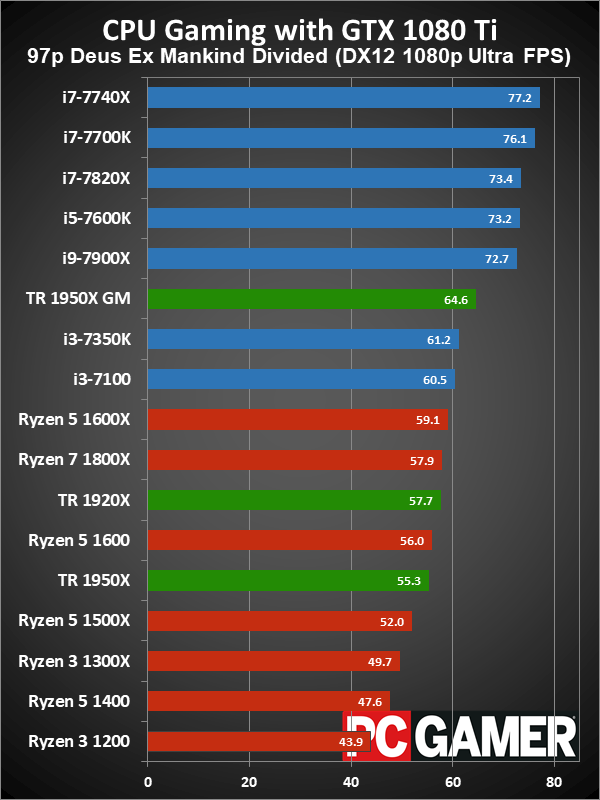

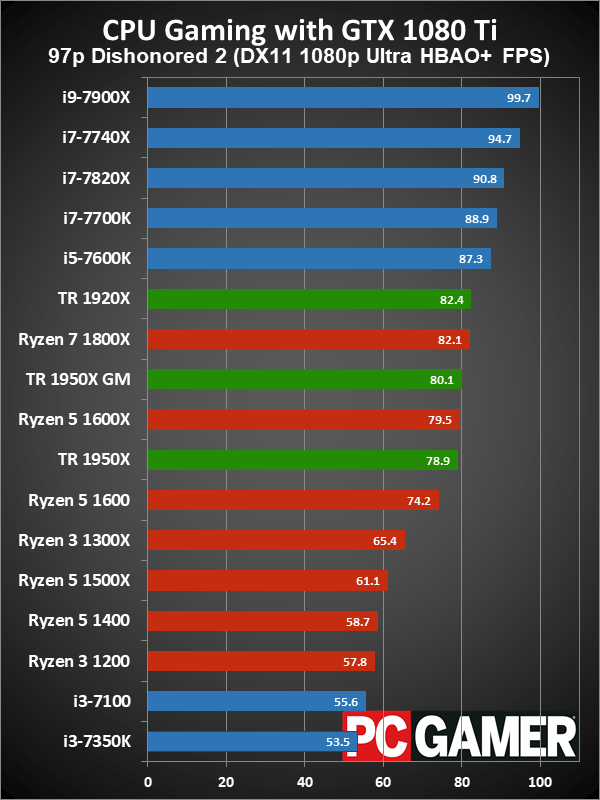

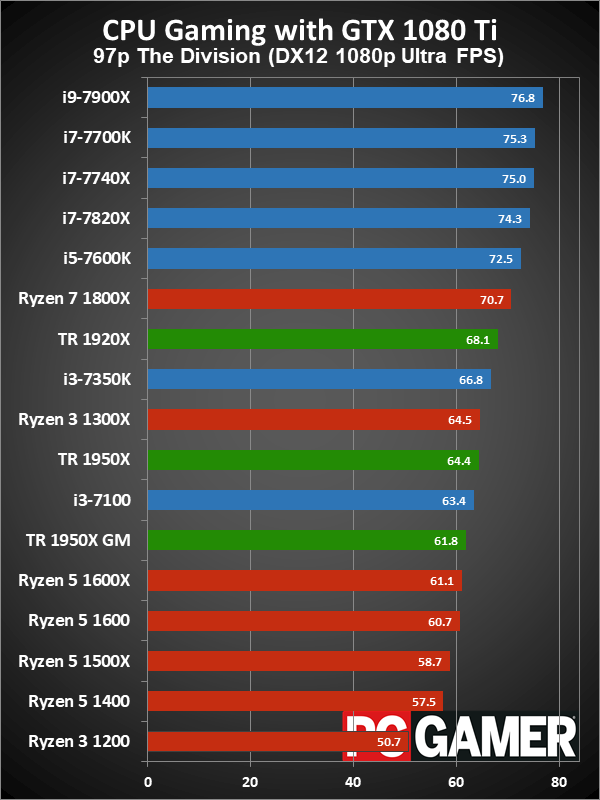

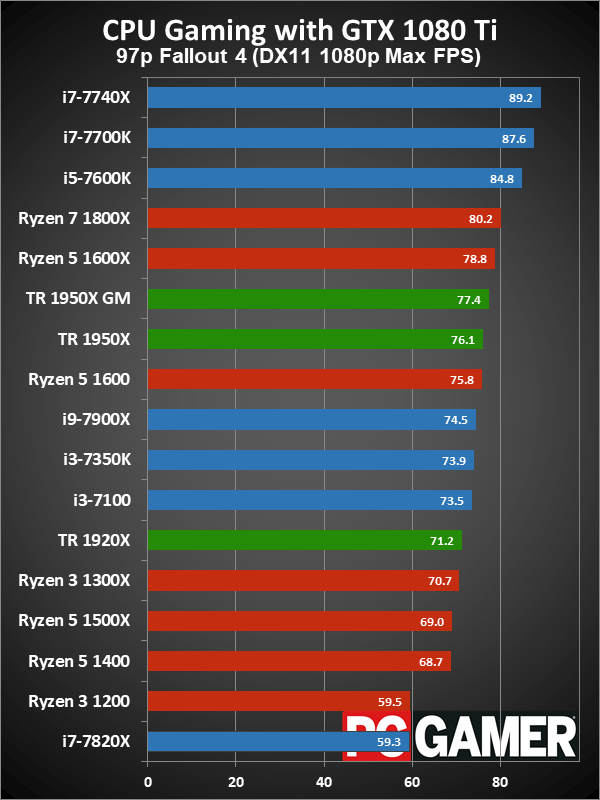

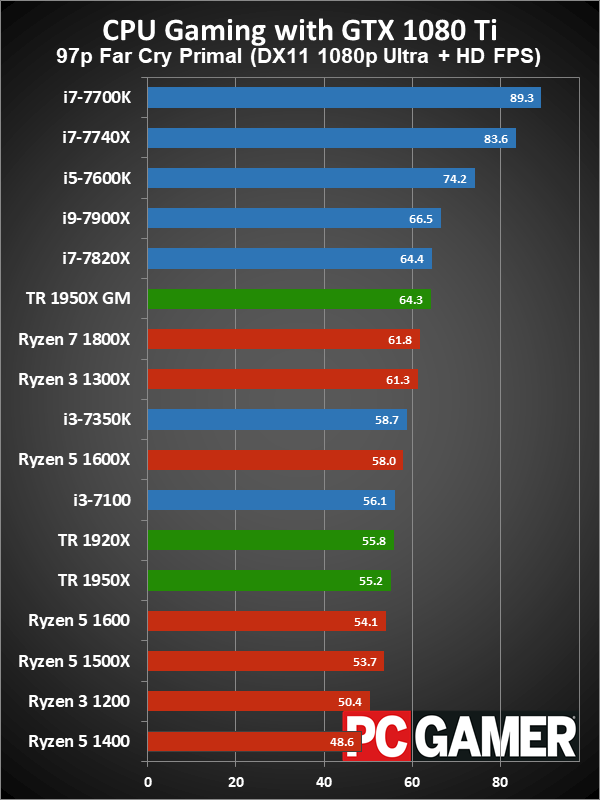

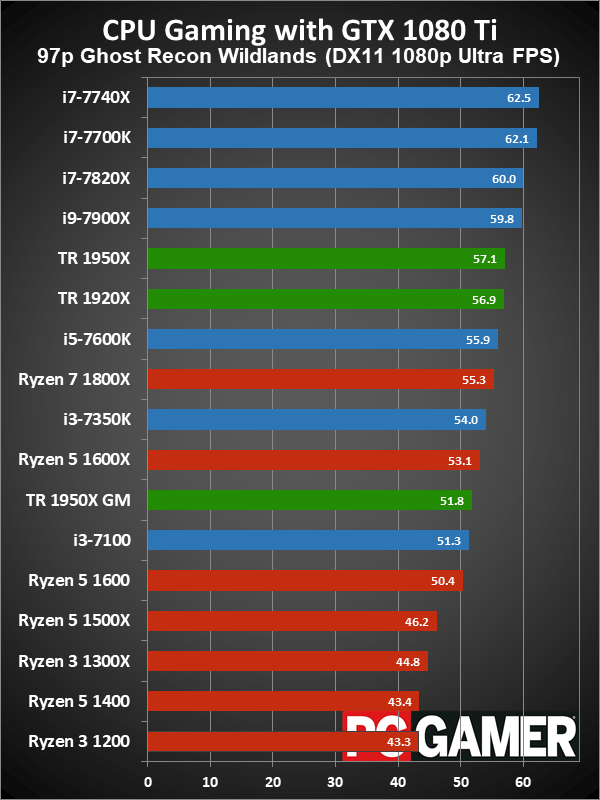

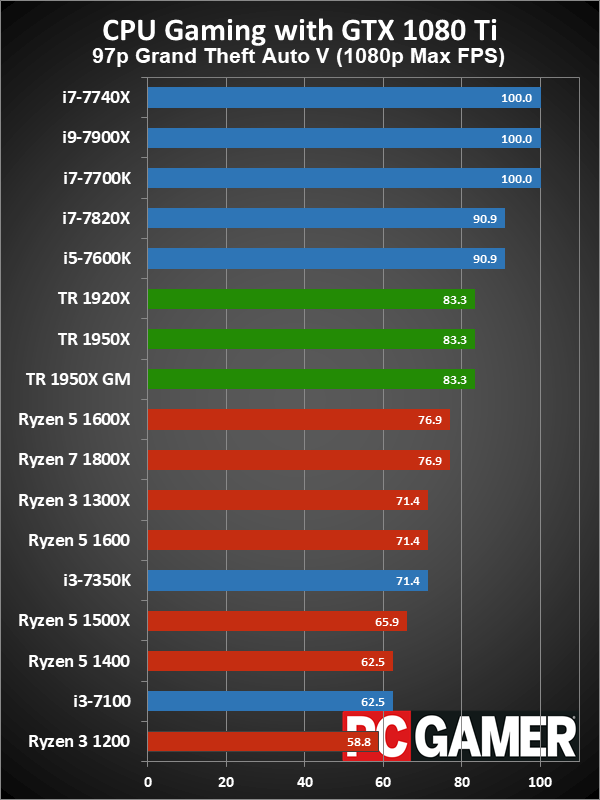

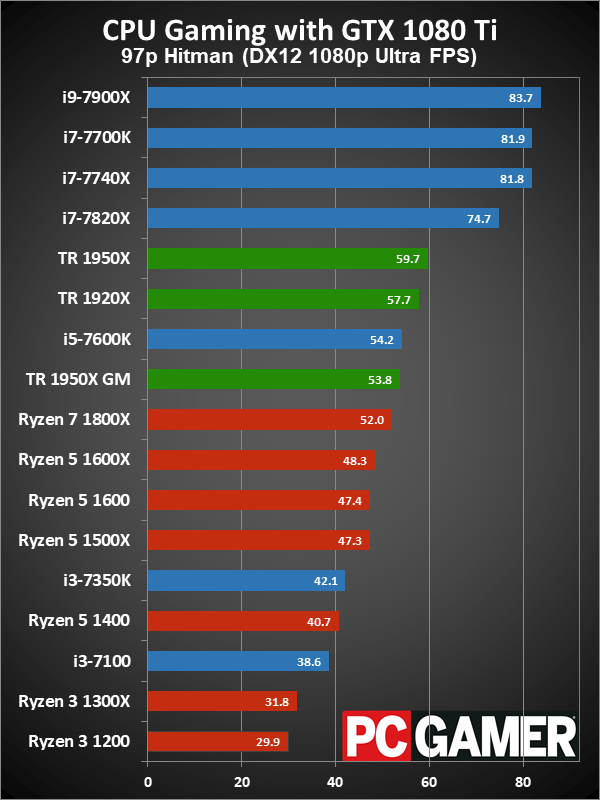

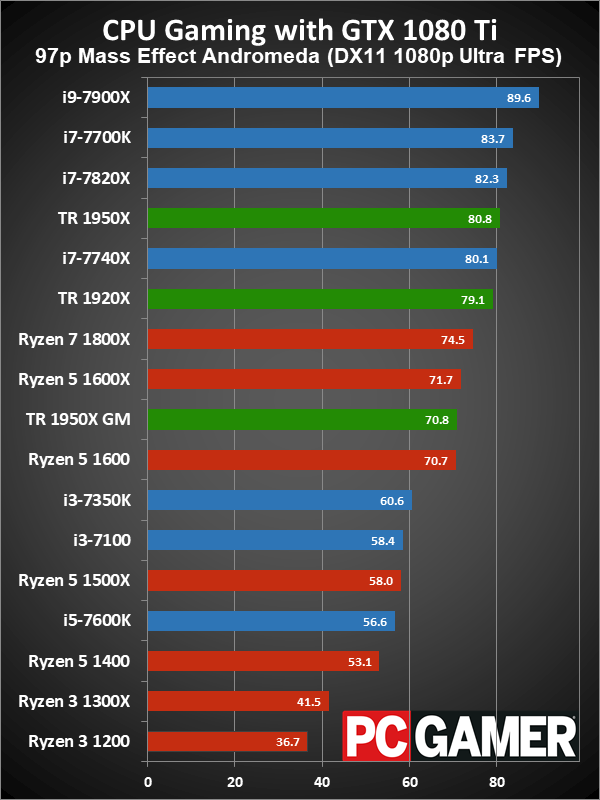

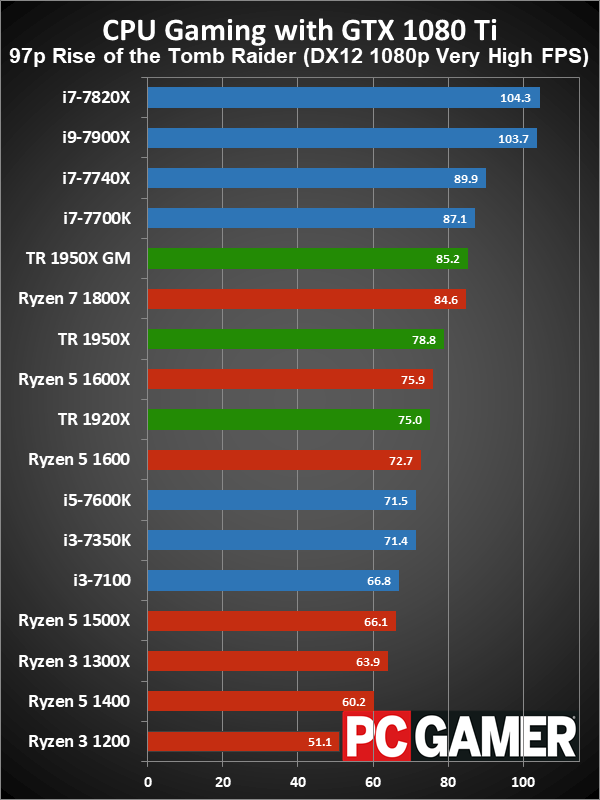

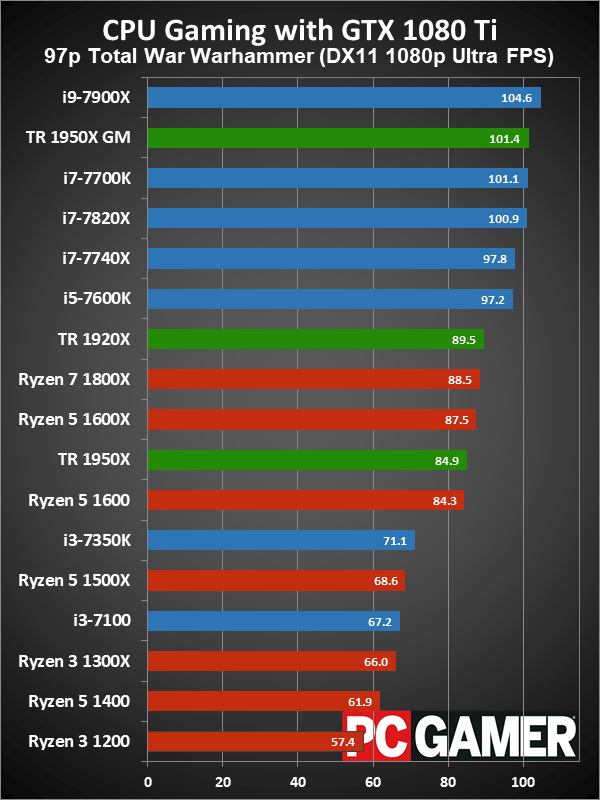

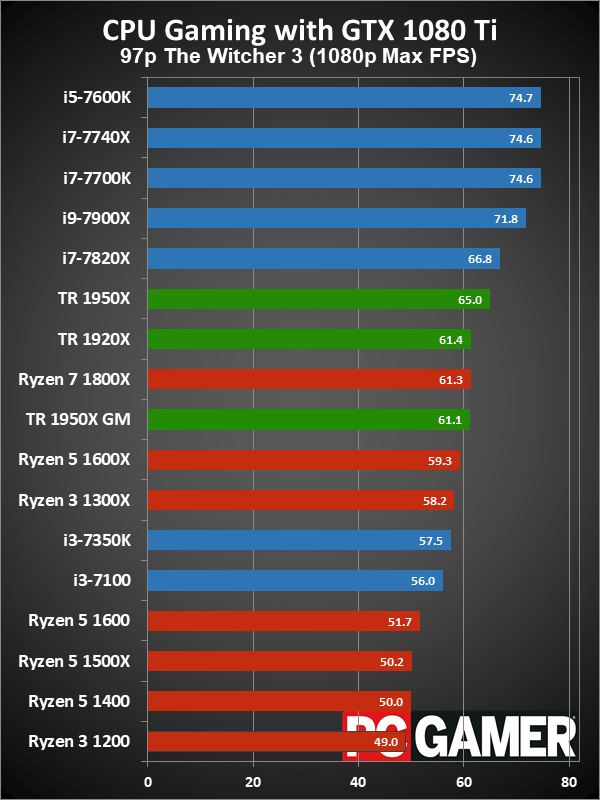

For all the good stuff we saw on the CPU-centric testing, if you've read all the earlier Ryzen coverage, you can probably already guess that Threadripper doesn't really change the formula. Other than a few games now having tuned builds that run better on Ryzen, in general Intel wins the gaming tests. Of course, this is at 1080p ultra quality, using a GTX 1080 Ti—which honestly isn't that far off what I'd expect any gamer considering Threadripper or Core i9 to be running. Anyway, a slower GPU would show far less difference, and running at 1440p or 4K would also narrow the gap. But if you want maximum gaming performance, AMD still has some work to do.

The good news is that Threadripper isn't really any slower than the Ryzen 7 1800X in overall gaming performance. And if you want to enable Game Mode (why!?), the 1950X actually edges past the 1800X. But in pure framerates, Intel is about 10 percent faster. It's not just about average fps either, as the 97th percentile minimum fps also favors Intel, and by a slightly wider margin in many cases.
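For anyone who wants to compute a similar pair of numbers from their own frametime logs, here is a minimal Python sketch. It uses one plausible definition of a 97th percentile minimum (the average framerate across the slowest three percent of frames), which is an assumption for illustration and not necessarily the exact method behind the charts. The function name and sample data are made up.

```python
def fps_summary(frame_times_ms, worst_fraction=0.03):
    """Return (average_fps, minimum_fps) from per-frame render times in ms.

    minimum_fps is the average framerate over the slowest `worst_fraction`
    of frames, so a single hitch cannot dominate the result.
    """
    if not frame_times_ms:
        raise ValueError("need at least one frame time")

    average_fps = 1000.0 * len(frame_times_ms) / sum(frame_times_ms)

    # Longest frame times first: these are the slowest frames.
    slowest_first = sorted(frame_times_ms, reverse=True)
    count = max(1, round(len(slowest_first) * worst_fraction))
    worst = slowest_first[:count]
    minimum_fps = 1000.0 * len(worst) / sum(worst)

    return average_fps, minimum_fps


# Synthetic run: 97 smooth frames at 10ms plus three 20ms stutters.
sample = [10.0] * 97 + [20.0] * 3
avg, low = fps_summary(sample)
print(f"average: {avg:.1f} fps, 97th percentile minimum: {low:.1f} fps")
```

Averaging frame times before converting to fps (rather than averaging per-frame fps values) keeps long frames weighted by how long they actually stayed on screen.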

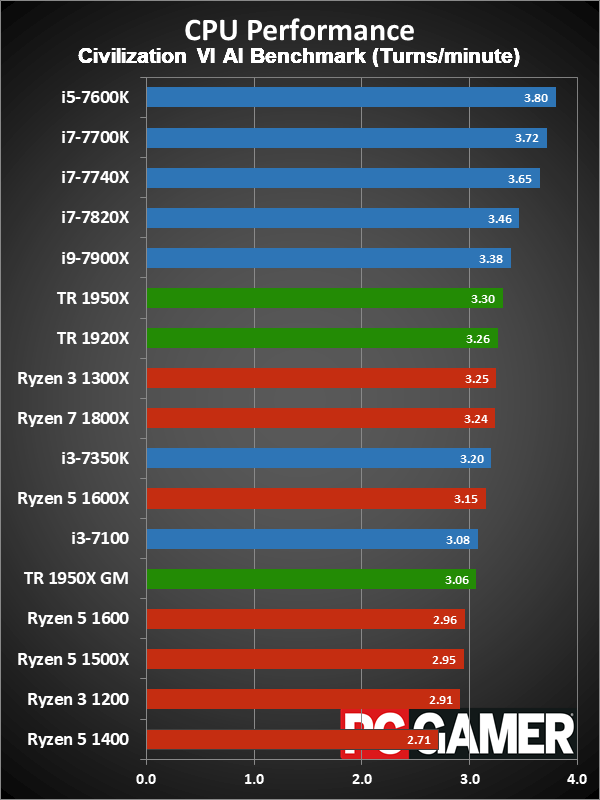

Only one game gives the lead to Threadripper right now, and not surprisingly it's sporting an AMD Gaming Evolved logo. Civilization VI has received quite a few patches since it launched, and some of the latest updates specifically targeted Ryzen performance. Where most of the Intel i7/i9 CPUs end up around 72-75 fps, the 1950X scores 82 fps. Unfortunately, the latest update was only run on the i9-7900X, i7-7820X, and Threadripper—so the other Ryzen chips would probably improve in Civ6 performance as well.

On the other end of the spectrum, Far Cry Primal continues to favor Intel CPUs by a wide margin. Interestingly, FCP is also one of the few games that has a hard-coded thread limit of 24, so I couldn't run it natively on the 1950X—I had to disable SMT to get it to run! Total War: Warhammer also favors Intel quite a bit, and it's one of the best showcases for the Game Mode inclusion.

In terms of bang for the buck, the Core i5-7600K still continues to impress in overall gaming performance, but that's only if you're not doing anything else while playing games. Streamers will definitely want at least a Core i7, or one of the 6-core Ryzen 5 chips. I've used the 6-core i7-5930K for several years now in my GPU testbed, and it's hard going back to a system with only four cores now. You can get away with things on 6-core systems (like leaving a bunch of extra apps running without a second thought), where Core i5 really does best if you're not doing much multitasking.

Ryzen Threadripper overclocking

Yesterday, we had a news post where someone claimed to hit 4.1GHz on the 16-core Threadripper 1950X. I have to admit I was highly skeptical, and after seeing the Cinebench result I'm even more doubtful that the overclock was actually stable. The report gave a Cinebench 15 result of 3,337, which is pretty good… except I was able to score 3,336, with my Threadripper 1950X running at just 3.9GHz. Hmmm.
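The reason for the raised eyebrow is easy to check with a little arithmetic. This Python sketch assumes Cinebench scores scale linearly with clockspeed, which is an idealization (real scaling is usually a bit worse), and projects my 3.9GHz score up to 4.1GHz.

```python
def expected_score(base_score, base_ghz, target_ghz):
    """Scale a benchmark score linearly with clockspeed (idealized)."""
    return base_score * target_ghz / base_ghz


measured_at_3_9 = 3336  # my Cinebench 15 result at 3.9GHz
claimed_at_4_1 = 3337   # the reported result at a claimed 4.1GHz

projected = expected_score(measured_at_3_9, 3.9, 4.1)
shortfall = projected - claimed_at_4_1

print(f"Projected score at 4.1GHz: {projected:.0f}")
print(f"Claimed result falls short by about {shortfall:.0f} points")
```

A five percent higher clock that yields essentially the same score suggests the chip was throttling or otherwise not holding 4.1GHz under load.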

Basically, in my testing, overclocking of Threadripper was about the same as other Ryzen chips. The 1950X could probably do 4.0GHz if I had better cooling, but 3.9GHz and 1.375V was at the ragged edge of stability, and in CPU intensive workloads the power draw routinely hit over 400W. Temperatures at 3.9GHz also got above 90C, which is way more than I'd want to run on a routine basis. Dropping down to 3.8GHz, I was able to complete all of my benchmarks with only 1.25V, and no intermittent crashing.

Overall, running at 3.9GHz on all 16 cores improved performance in CPU testing by about six percent, with a power increase of over 50 percent. Yeah. Gaming performance on the other hand didn't change too much—only about two percent faster, which is practically margin of error. To put things in perspective, using Game Mode actually gave a bigger improvement (for gaming) than overclocking.

The 1920X fared a bit better, probably because it only has 12 cores to worry about cooling. I hit 4.0GHz with 1.35V and completed all of my benchmarks. Performance was only up about two percent in games, but CPU-centric workloads improved by eight percent. Power draw was also far lower, at just 310W for Cinebench. You'll definitely want liquid cooling at a minimum if you plan on overclocking Threadripper, though.
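To put the overclocking tradeoff in performance-per-watt terms, here is a rough Python sketch. It pairs the stock Cinebench power figures from earlier (266W for the 1950X, 263W for the 1920X) with the overclocked numbers above, using 400W as a floor for the 1950X since it "routinely hit over 400W." Mixing overall CPU-suite gains with Cinebench power readings is an approximation, so the results are ballpark only.

```python
def efficiency_change(perf_gain_pct, stock_watts, oc_watts):
    """Return (power_increase_pct, perf_per_watt_change_pct) for an overclock."""
    power_ratio = oc_watts / stock_watts
    perf_ratio = 1 + perf_gain_pct / 100
    power_increase_pct = (power_ratio - 1) * 100
    perf_per_watt_change_pct = (perf_ratio / power_ratio - 1) * 100
    return power_increase_pct, perf_per_watt_change_pct


# (CPU-suite gain in percent, stock watts, overclocked watts)
overclocks = {
    "1950X @ 3.9GHz": (6, 266, 400),
    "1920X @ 4.0GHz": (8, 263, 310),
}

for name, (gain, stock, oc) in overclocks.items():
    power_pct, eff_pct = efficiency_change(gain, stock, oc)
    print(f"{name}: power {power_pct:+.1f} percent, perf per watt {eff_pct:+.1f} percent")
```

By this estimate the 1950X gives up close to a third of its efficiency for a single-digit speedup, while the 1920X pays a much smaller penalty.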

As with other Ryzen chips, overclocking was underwhelming. Maybe my 1950X chip isn't as good as others, and it's summertime which means higher ambient temperatures as well, but for the small gains I'd suggest anyone running Threadripper just stick with stock operation. AMD has done a good job of extracting almost all the performance from the chips at stock clocks, and the process as a whole seems to run into a clockspeed wall of around 4.0GHz, unless you use liquid nitrogen.

Is Ryzen Threadripper the new king of the CPU hill? In some areas, yes, absolutely. Run a heavily threaded workload like video encoding and Threadripper flies, easily besting Intel's current-fastest i9-7900X. Of course, Intel has its response coming in the form of the i9-7920X, i9-7940X, i9-7960X, and i9-7980XE, though every one of those chips will cost more than Threadripper, and only the 7960X and 7980XE will actually offer 16 or more cores. If gaming is a major consideration, in most cases Intel's CPUs are still faster. But you shouldn't be looking at either the X299 or X399 platform if you're just playing games, because these CPUs are designed to do far more.

I love that AMD is bringing the fight to Intel, hitting it in the fat HEDT wallet. Enthusiasts have been stuck with only one compelling option for far too long on the CPU side of things. Now, even though Intel has plenty of areas where it can beat Threadripper, for anyone in the market for a high-performance CPU—for software development, content creation, virtualization, and more—AMD is worth a serious look. And the competition will only continue to benefit consumers, as it will mean faster CPUs at lower prices, and hopefully larger performance gains with the next generation of architectures.

Again, Threadripper isn't about playing games. It's complete overkill if that's all you're looking to do. Game developers on the other hand could definitely get some mileage out of the chips. Think about it this way for a moment. Ryzen 5 1500X is a decent CPU that costs around $190. Threadripper 1950X stuffs four of those into a single package, with higher clockspeeds as a bonus. If you can't think of anything useful to do with four PCs' worth of power, by all means stick with the AM4 and LGA1151 offerings. But if you've ever wondered what it's like to have a server as your main desktop, Threadripper is basically that, minus the noisy fans.

Jarred's love of computers dates back to the dark ages when his dad brought home a DOS 2.3 PC and he left his C-64 behind. He eventually built his first custom PC in 1990 with a 286 12MHz, only to discover it was already woefully outdated when Wing Commander was released a few months later. He holds a BS in Computer Science from Brigham Young University and has been working as a tech journalist since 2004, writing for AnandTech, Maximum PC, and PC Gamer. From the first S3 Virge '3D decelerators' to today's GPUs, Jarred keeps up with all the latest graphics trends and is the one to ask about game performance.