The RTX 4060 Ti highlights where the limitations lie with Nvidia's Frame Generation technology

My testing highlights the fact that instead of giving extra performance, sometimes Frame Generation can slow your games down.

Nvidia's Frame Generation technology is magic. It delivers extra gaming performance with seemingly no downside. But I had no real understanding of its limits until I plugged in my RTX 4060 Ti and saw behind the curtain.

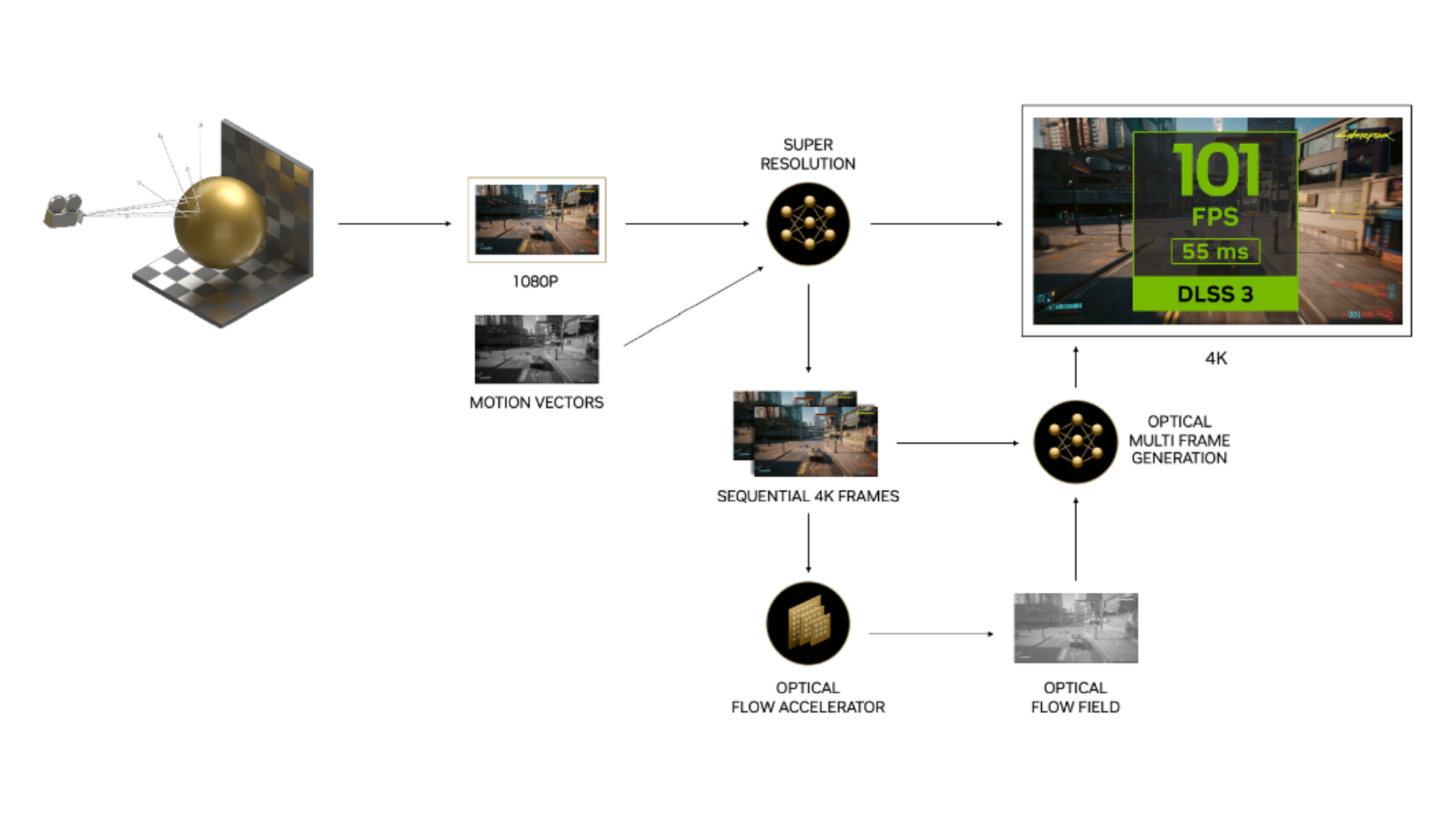

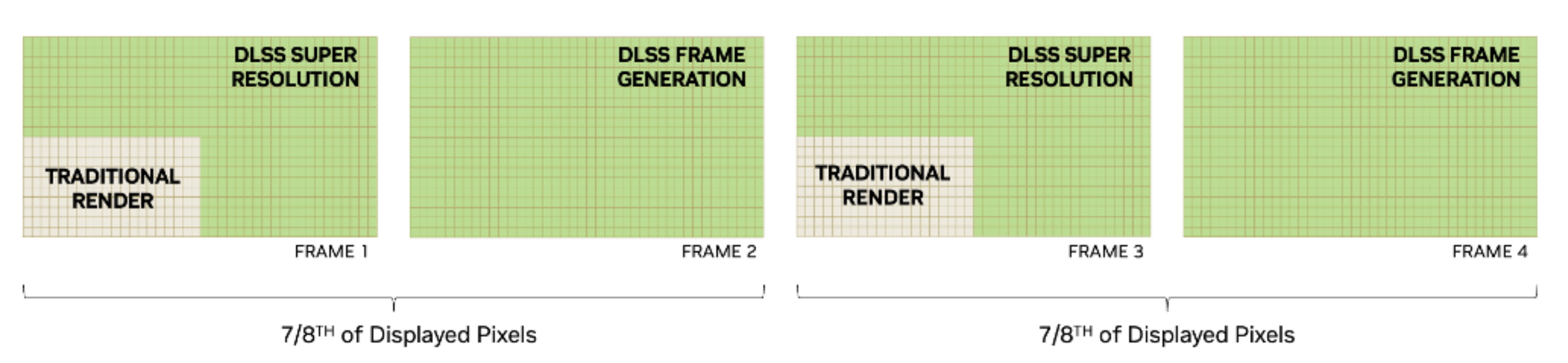

Among the shining lights of Nvidia's RTX 40-series have been the twin benefits of DLSS 3 and its nascent Frame Generation technology. When Nvidia launched the RTX 4090, itself a card with stellar performance gains, it introduced us to a world where seven-eighths of a game's pixels are created by AI rather than rendered by the GPU hardware.
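That seven-eighths figure comes from simple arithmetic. The sketch below assumes DLSS Performance mode at 4K, which renders internally at 1080p (half the resolution on each axis, so a quarter of the pixels), plus one generated frame for every rendered one. The function name and parameters are hypothetical, for illustration only.

```python
from fractions import Fraction


def ai_pixel_share(upscale_per_axis: int, generated_per_rendered: int) -> Fraction:
    """Share of displayed pixels the GPU did not render natively (simplified model).

    upscale_per_axis: output/input resolution ratio on each axis (2 for 1080p -> 4K).
    generated_per_rendered: AI-generated frames shown for every rendered frame.
    """
    # Upscaling by N on each axis means only 1 in N*N output pixels is rendered.
    rendered_pixels_per_frame = Fraction(1, upscale_per_axis ** 2)
    # With frame generation, only 1 in (1 + generated) displayed frames is rendered at all.
    rendered_frames = Fraction(1, 1 + generated_per_rendered)
    return 1 - rendered_pixels_per_frame * rendered_frames


# Hypothetical case: 1080p -> 4K upscaling plus one generated frame per rendered frame.
print(ai_pixel_share(2, 1))  # 7/8
```

Upscaling alone accounts for three-quarters of the pixels; frame generation halves what is left to render natively.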

The interpolation magic of Frame Generation seemed like a universal cure for poor frame rates on any RTX 40-series GPU it was deployed on. The only limitations were that it could run only on a graphics architecture with the updated Optical Flow Accelerator hardware, and that developers had to integrate it on a per-game basis.

But when it's supported, oh boy, does Frame Generation deliver the goods, and it does so without any appreciable loss of image fidelity or weird graphical artefacts from the interpolated frames.

Frame Generation uses the updated Optical Flow Accelerator hardware baked into the Ada GPU silicon. It's a part of Nvidia's graphics chips that's been in place since Ampere, but with the Ada architecture that silicon has been improved to the point that it's twice as fast as the previous generation.

That raw speed is necessary to generate entire frames in real time, and it's also why Frame Generation isn't currently available outside of Nvidia's RTX 40-series GPUs.

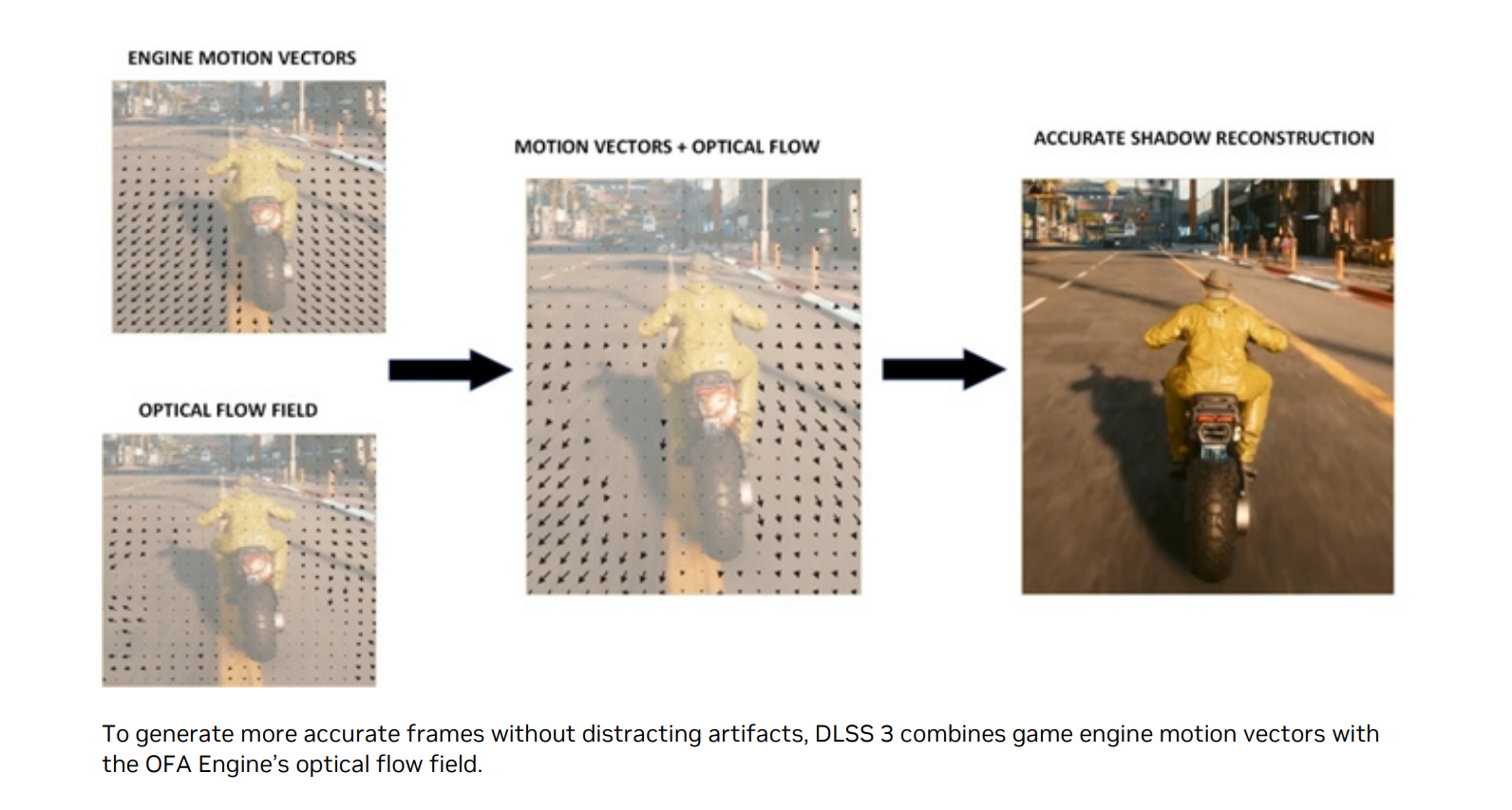

Optical flow is about estimating the direction and speed of pixel motion between consecutive frames, and outside of gaming it's used in image stabilisation and autonomous navigation. In games, the Optical Flow Accelerator compares consecutive rendered frames to estimate where their pixels ought to be in an in-between frame, and that data is then combined with the game engine's own motion vectors. Together, that gives the GPU enough information to generate an entire intermediate frame to drop in between the rendered ones without visual anomalies.
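As a toy illustration of motion-compensated interpolation (not Nvidia's algorithm, which combines hardware optical flow, engine motion vectors, and a neural network), the sketch below builds an in-between row of pixels by pushing each pixel of the earlier frame halfway along its motion vector. The function name, the one-dimensional frames, and the "faster pixel wins" occlusion rule are all assumptions made for the example.

```python
def interpolate_midframe(prev_row, next_row, flow):
    """Build the row halfway (t = 0.5) between two consecutive frames.

    prev_row, next_row: pixel intensities for one row of two consecutive frames.
    flow: per-pixel horizontal motion (in pixels) from prev_row to next_row, standing
          in for the combined optical-flow and motion-vector estimate.
    """
    width = len(prev_row)
    mid = [None] * width
    strength = [-1.0] * width  # |motion| of the pixel currently written at each slot
    for x, value in enumerate(prev_row):
        # Forward warp: a pixel that moves flow[x] pixels sits halfway along at t = 0.5.
        target = round(x + 0.5 * flow[x])
        # Crude occlusion rule (an assumption): faster-moving pixels win, standing in for depth.
        if 0 <= target < width and abs(flow[x]) > strength[target]:
            mid[target] = value
            strength[target] = abs(flow[x])
    # Disoccluded slots (nothing landed there) fall back to the next frame's pixel.
    return [next_row[x] if v is None else v for x, v in enumerate(mid)]


# A bright pixel moving four pixels right between frames lands two pixels along.
print(interpolate_midframe([0, 0, 9, 0, 0, 0, 0, 0],
                           [0, 0, 0, 0, 0, 0, 9, 0],
                           [0, 0, 4, 0, 0, 0, 0, 0]))  # [0, 0, 0, 0, 9, 0, 0, 0]
```

If the motion estimate is wrong, the pixel lands in the wrong place, which is exactly the kind of visual anomaly good flow data is meant to prevent.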

The spectacular performance benefits and added smoothness it offers to the likes of an RTX 4090 or RTX 4080 are hugely welcome, delivering 4K gaming performance that far outstrips any previous GPU generation. But you're already getting great frame rates with those high-end graphics cards, which makes the lower-spec cards of the RTX 40-series the GPUs that could ultimately benefit most from something that generates extra frames out of thin air.

But there are limits, and those have been highlighted recently in my testing of the RTX 4060 Ti, because those extra frames aren't generated out of thin air, and aren't actually free.

Admittedly, I only ran into issues with Frame Generation on the RTX 4060 Ti when I was being naughty and making it run games at 4K. The card isn't designed to be used at this level: its frame buffer is too small, its memory bandwidth too low, and its GPU too weak. So I shouldn't be surprised that even Frame Generation couldn't rescue it. But I didn't really expect it to harm my frame rate.

Using the technology at 1080p or 1440p certainly delivered the fps increase I was expecting. At 4K, however, there are times when turning on Frame Generation actually decreases performance compared with DLSS alone.

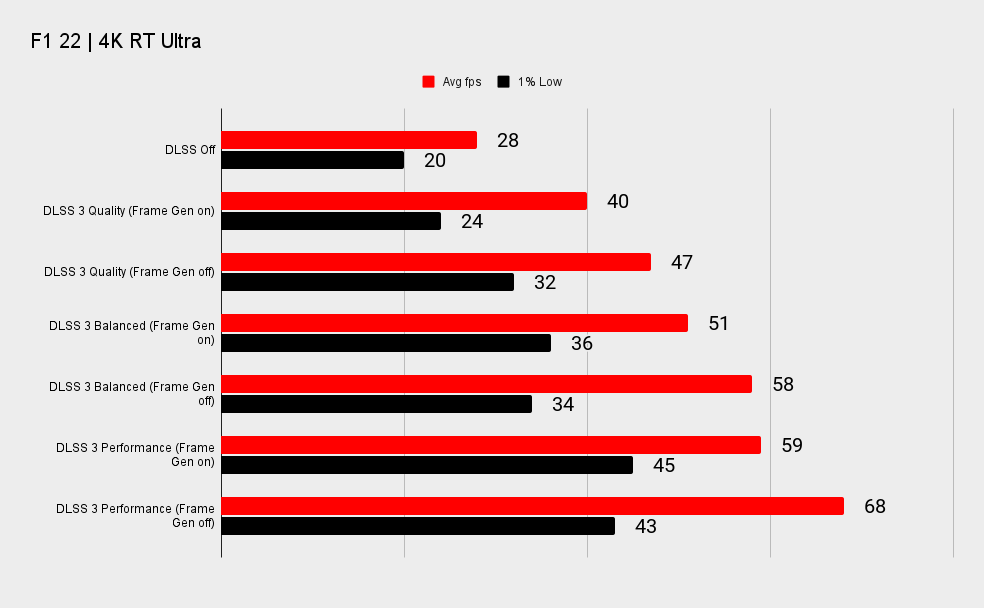

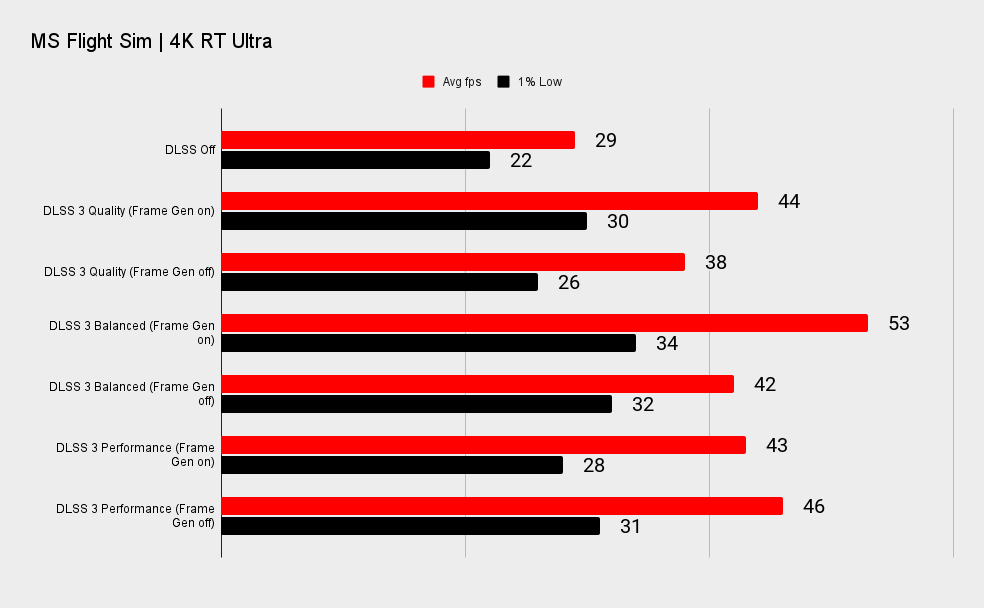

Across the board in F1 22, turning Frame Generation on lowers performance compared with running DLSS on its own. The issue surfaces again in MS Flight Sim, the poster child for Frame Gen, but only at DLSS's Performance setting, where enabling Frame Generation eats into the fps boost that DLSS delivers by itself.

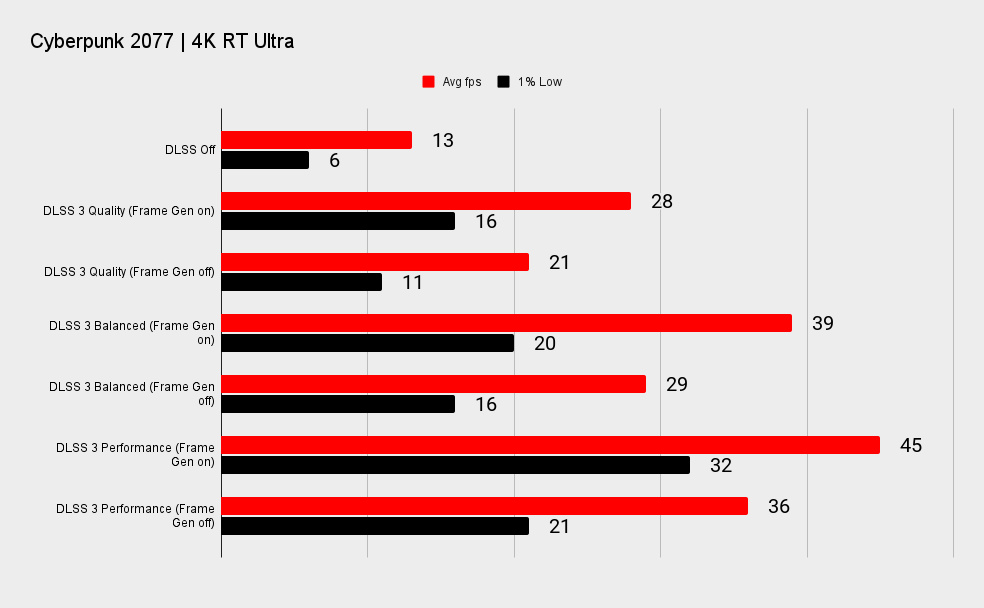

Cyberpunk 2077, however, shows that even on the RTX 4060 Ti, with everything turned on at 4K, Frame Gen can still sometimes deliver a game-changing performance uplift.

Where it is an issue, you'll usually just see it slowing things down compared with running DLSS on its lonesome. In one case, however, I ended up with artefacts in The Witcher 3 that made all the NPCs rubber-band all over the place, bouncing around Oxenfurt like glitching puppets on strings. Which, I guess, is essentially what they are anyway, but hopefully you get what I mean.

Because an Ada GPU needs time to create its interpolated frames, that can hurt your overall frame rate if generating one takes longer than it takes the GPU to actually render a frame. And if DLSS has lowered the input resolution of your rendered frames, then at an otherwise GPU-bound resolution such as 4K, DLSS is going to finish its frames before the AI-generated ones are ready.
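That break-even point can be written down with a deliberately simple model. It assumes the GPU is fully busy and cannot overlap rendering with frame generation. Here $t_r$ is the time in milliseconds to render (and upscale) one frame, and $t_g$ is the time to generate one in-between frame. Both symbols are introduced for illustration and are not Nvidia's figures.

```latex
\begin{aligned}
\text{fps}_{\text{DLSS}} &= \frac{1000}{t_r} \\
\text{fps}_{\text{FG}} &= \frac{2 \times 1000}{t_r + t_g} \\
\text{fps}_{\text{FG}} > \text{fps}_{\text{DLSS}}
  &\iff 2\,t_r > t_r + t_g \iff t_g < t_r
\end{aligned}
```

With hypothetical numbers of $t_r = 12$ ms and $t_g = 14$ ms, DLSS alone gives about 83.3 fps, while Frame Generation gives $2000/26$, or about 76.9 fps. The faster DLSS makes each rendered frame, the more likely it is that generation becomes the slower step.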

Your queue of visible frames is then held up so the game can slot the AI-generated frame in at the right place, and your overall frame rate drops while it waits. My guess is that in The Witcher 3 I was seeing those AI frames being dropped in out of order, which is why those pseudo-medieval scoundrels seemed to be phasing between timelines.

Take Frame Generation out of the equation and DLSS has free rein to render its low input resolution frames, upscale them, and fire them out at your monitor as and when they're ready to roll without anything else getting in the way.

Nvidia has explained that this is all expected behaviour when taking Frame Generation out of its comfort zone. That's what I was doing with the RTX 4060 Ti, but it highlights the limits of the technology. When you're heavily GPU bound in a game, and there isn't enough performance in your card's tank to cope with its graphical demands, Frame Generation and DLSS aren't going to scale optimally. And when you're that GPU bound, Frame Generation could actually end up reducing your frame rate.

I was told by an Nvidia representative that: "DLSS 3 works great both in CPU and GPU bound scenarios. DLSS 3 Super Resolution offers highest scaling when it's GPU bound, while DLSS 3 Frame Generation offers highest scaling when it's CPU bound."

In CPU-limited situations, as highlighted by Microsoft Flight Sim, DLSS alone can't deliver a particularly impressive performance uplift. Throw Frame Gen into the mix, though, and it absolutely can, because the graphics card has resources to spare. But when your performance is completely limited by the GPU hardware, the card doesn't have the headroom to give you the extra frame rate you might otherwise expect.
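A toy frame-rate model makes Nvidia's point about CPU- and GPU-bound scaling concrete. This is an illustrative assumption, not Nvidia's actual pipeline: the slower of the CPU and GPU sets the pace, DLSS only shortens the GPU's render time, and Frame Generation turns each CPU-prepared frame into two displayed frames at the cost of extra GPU time. The function name, parameters, and all millisecond figures are hypothetical.

```python
def displayed_fps(cpu_ms: float, render_ms: float, gen_ms: float = 0.0,
                  frame_gen: bool = False) -> float:
    """Toy frame-rate model; an illustrative assumption, not Nvidia's pipeline.

    cpu_ms:    time the CPU/game engine needs to prepare each rendered frame.
    render_ms: GPU time to render and upscale one frame (DLSS lowers this).
    gen_ms:    extra GPU time to build one generated frame.
    """
    if not frame_gen:
        # The slower of the CPU and GPU sets the pace; DLSS only shrinks render_ms.
        return 1000.0 / max(cpu_ms, render_ms)
    # Each CPU-prepared frame now yields two displayed frames, but the GPU must
    # fit both the rendered frame and the generated one into its time budget.
    return 2 * 1000.0 / max(cpu_ms, render_ms + gen_ms)


# CPU bound: DLSS cutting render time from 15 ms to 8 ms changes nothing...
print(displayed_fps(20.0, 15.0), displayed_fps(20.0, 8.0))  # 50.0 50.0
# ...but Frame Generation doubles the output because the GPU has idle time.
print(displayed_fps(20.0, 8.0, gen_ms=6.0, frame_gen=True))  # 100.0
# GPU bound with an expensive generated frame: Frame Generation now costs frames.
print(round(displayed_fps(8.0, 12.0), 1),
      round(displayed_fps(8.0, 12.0, gen_ms=14.0, frame_gen=True), 1))  # 83.3 76.9
```

The `max()` captures the bottleneck. Once the GPU term dominates, the generated frame competes with rendering for the same time budget.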

That means it's going to be harder going for Frame Generation down at the bottom of the Nvidia GPU stack, arguably where extra gaming performance is needed most. Will an RTX 4060 or RTX 4050 be unable to deliver decent 1440p frame rates using DLSS 3's full feature set, losing some of the green team's extra gaming performance benefits? Will those lower-spec cards struggle to get a boost from Frame Generation even at 1080p with ultra performance settings enabled?

However it shakes out, you'll at least still be able to take advantage of the pure DLSS experience, with upscaling alone always able to deliver higher frame rates, whether or not you're GPU bound. But however enamoured I've been with the tech since first testing it, the magic of Frame Generation has dulled a little for me now that the RTX 4060 Ti has highlighted the limits of its power at the low end.

Dave has been gaming since the days of Zaxxon and Lady Bug on the ColecoVision, and code books for the Commodore VIC-20 (Death Race 2000!). He built his first gaming PC at the tender age of 16, and finally finished bug-fixing the Cyrix-based system around a year later. When he dropped it out of the window. He first started writing for Official PlayStation Magazine and Xbox World many decades ago, then moved onto PC Format full-time, then PC Gamer, TechRadar, and T3 among others. Now he's back, writing about the nightmarish graphics card market, CPUs with more cores than sense, gaming laptops hotter than the sun, and SSDs more capacious than a Cybertruck.