Anthem performance, settings, and system requirements

Anthem is tough on any hardware, but our GPU, CPU and notebook testing will help you go in prepared.

Anthem has had a tough launch for many reasons, some of them at least partially related to PC performance. The VIP test and the beta that followed both struggled to reach 60fps at resolutions above 1080p, and even top-tier CPUs could end up acting as bottlenecks.

Hope remained that the full release would polish things up, but unfortunately, performance gains have been minor or have simply failed to materialize. Compared to what we saw in the demo, you can squeeze out a few more frames here and there, but in most cases not enough to actually notice the difference.

Still, with the full game in hand, plus several patches and driver updates, we (well, Jarred) set about testing the game on a wide range of CPUs and GPUs. Let's start with a look at its major features.

As our partner for these detailed performance analyses, MSI provided the hardware we needed to test Anthem on a bunch of different AMD and Nvidia GPUs, multiple CPUs, and several laptops—see below for the full details, along with our Performance Analysis 101 article. Thanks, MSI!

It's good to see native 21:9 ultrawide support, and even 32:9 resolutions can be enabled without any stretching. BioWare has also added an FOV slider, with five different options depending on whether you're flying, hovering, on the ground, swimming, or zoomed in with a scope. As usual with EA games, especially those running on the Frostbite engine, modding support is nonexistent, though at least the PC version gets full key customization and an unlocked framerate. (There's technically a 200fps cap, though in practice the only way we could get there was with a high-end CPU and GPU, staring at a wall.)

Post-launch, Anthem has also been updated to support DLSS (Deep Learning Super Sampling), Nvidia's fancy supercomputer-assisted anti-aliasing tech. We've already covered it in a separate article, so we won't focus on it here. Essentially, it can boost framerates by anything from 15 to 40 percent, but the supported resolutions are a bit limiting: it only works on RTX cards at 1440p and 4K, not 1080p. Partly that's because 1080p already runs fast enough even on a desktop RTX 2060, though the RTX laptops could use a bit of help at 1080p ultra.

Charts: Nvidia GTX 1060 6GB and AMD RX 580 8GB

Anthem settings overview

Anthem provides a decent selection of advanced settings to play with, and quite a few bits have changed since the game first launched in February. It's now possible to change the severity of screen shake (including disabling it entirely), motion blur has its own toggle instead of being lumped in with post-processing, and just about everything you might want is available.

The settings area consists of multiple screens. Resolution, vsync, HDR, and DLSS are under Display Settings; Motion Blur, Depth of Field, Chromatic Aberration, and Camera Shake are under Graphics Settings; and the main Graphics Quality preset, along with ten other options, is under Advanced Graphics Settings. There's also a separate Field of View tab where you can tweak the five different FOV options. We'll mostly confine our settings discussion to the advanced settings.

Graphics Quality: This is the quick and easy option, with four presets that change the rest of the advanced settings en masse. Naturally, this changes how good the game looks and can have a marked effect on performance: switching from ultra to low can as much as double your framerate, on budget and premium hardware alike. The exception is the jump from high to ultra, which on most GPUs costs less than 10 percent in performance and brings no significant change in image quality. If high already runs well, you can probably crank it up to ultra.

Anti-Aliasing: This smooths out any jagged edges you might see on in-game geometry. Anthem lets you choose between TAA (temporal AA) and ultra, though it's not clear how the latter differs. Turning AA off entirely only seems to grant a tiny performance boost, so it's fine leaving it on.

Ambient Occlusion: This determines the quality of shading in the little nooks, crannies, and crevices you'll find in Fort Tarsis and beyond. As with anti-aliasing, the good news is that the SSAO and HBAO settings have a relatively small impact on performance; even the HBAO Full setting only drops performance by 5 percent.

Texture Quality: This determines the resolution of the textures used in the game, and you'll generally want it as high as possible. 8GB graphics cards can safely use the ultra setting, while 4GB cards should opt for high; only cards with 2GB or less of VRAM need to use the low or medium settings. Higher-resolution textures also carry a modest performance hit: going from ultra to low improves performance by 8-12 percent, at the cost of image quality.
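The VRAM guidance above boils down to a simple lookup. The Python sketch below encodes it; the thresholds come from our testing, the function name is hypothetical, and treating 3GB or 6GB cards as high-capable is an assumption, since we only call out 8GB, 4GB, and 2GB-or-less cards.

```python
def suggested_texture_quality(vram_gb: float) -> str:
    """Map graphics card VRAM (in GB) to a suggested Anthem Texture Quality setting.

    Hypothetical helper based on our testing, not an official BioWare guideline.
    """
    if vram_gb >= 8:
        return "ultra"  # 8GB cards can safely use ultra
    if vram_gb > 2:
        return "high"  # 4GB cards (and, by assumption, 3GB or 6GB cards) should opt for high
    return "low or medium"  # cards with 2GB or less need the lower settings


for vram in (2, 4, 6, 8, 16):
    print(f"{vram}GB -> {suggested_texture_quality(vram)}")
```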

Texture Filtering: If you want textures to stay crisp from as far away as possible, a high texture filtering setting will do the trick. This is yet another option with a modest effect on performance, about 6 percent when dropping from its highest to lowest setting, so lowering it a notch or two may be beneficial on slower GPUs.

Mesh Quality: Turning this up will result in more detailed 3D models, even if it's just a humble pile of stones. However, the difference between ultra and low isn't all that noticeable, and performance only improves about 6 percent in testing.

Lighting Quality: Dropping this self-explanatory setting down to low netted a performance gain of about 10 percent, though this is a big sacrifice; environmental lighting aside, many Javelin abilities have some impressive lighting effects that it'd be a shame to lose. Medium quality can be a good compromise.

Effects Quality: As with lighting, it's worth keeping this high to ensure that Anthem's explosion-heavy combat looks as good as it can, and the performance cost of doing so is smaller, at about 6-8 percent.

Post-Processing Quality: Post-processing is anything that alters the rendered output after the main image is complete. It can include things like bloom, denoising, or other bits, and the effect is often relatively subtle. Using ultra quality compared to low causes a 6 percent performance drop, though it does ensure objects are rendered with a more polished look.

Terrain Quality: Setting this to low helps performance by around 5-7 percent, but since you're going to be looking at an awful lot of terrain when flying around the open world portion of Anthem, don't be afraid to set this to medium or higher.

Vegetation Quality: If you want Anthem's plant life to look its most otherworldly, leave this maxed out. That said, with a 9 percent performance impact compared to its lowest setting, it's also one of the first options you should consider cutting if your framerate is suffering.

Motion Blur: Love it or hate it, motion blur is an option. We did most of our testing with it disabled, but if you like the effect it only causes about a 3 percent drop in performance.

MSI provided all the graphics hardware for testing Anthem, including the latest GeForce GTX and RTX cards. All of the GPUs come with modest factory overclocks, which in most cases improve performance by around 5 percent over the reference models.

My primary testbed uses the MSI Z390 MEG Godlike motherboard with an overclocked Core i7-8700K processor and 16GB of DDR4-3200 CL14 memory from G.Skill. I've also run additional tests on other Intel CPUs, including a stock Core i9-9900K, Core i5-8400, and Core i3-8100. AMD's Ryzen 7 2700X and Ryzen 5 2600X processors (also at stock) use the MSI X470 Gaming M7 AC, while the Ryzen 5 2400G is tested in an MSI B350I Pro AC (because the M7 lacks video outputs). All AMD CPUs also used DDR4-3200 CL14 RAM. The game is run from a Samsung 860 Evo 4TB SATA SSD on desktops, and from the NVMe OS drive on the laptops.

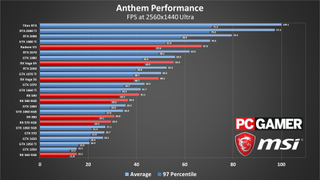

Anthem graphics card benchmarks

Since Anthem doesn't have its own benchmark tool, all of the benchmark data was recorded while playing the game—specifically, flying around a freeplay mission area. While this arguably paints a more accurate, warts-and-all picture of how Anthem performs than a scripted benchmark, there is the issue of the dynamic time of day and weather systems to consider.

As in the beta, weather and time of day change over time, which isn't particularly helpful when you're trying to run tests that are both repeatable and fair. Luckily, time of day appears to have a minor impact on performance, and while "stormy" weather does drop performance up to 10 percent, we avoided using it during benchmarks (which sometimes required exiting and restarting freeplay repeatedly). The results should be relatively consistent, but there's more variation with Anthem benchmarks than in other games.

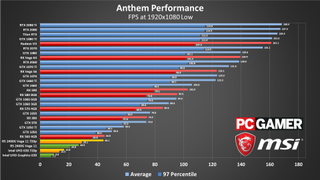

Frankly, even the clearest in-game skies won't prevent Anthem from being a very taxing game. Starting off with the low quality preset and a basic 1080p resolution, you need at least a midrange card like the GTX 1060 (or GTX 970 / R9 390) to get a comfortable 60fps average—budget cards like the GTX 1050 Ti don't even make it to 60fps.

In fairness, none of the dedicated GPUs sink below a 30fps average, so you can get Anthem technically playable on entry-level kit, but smooth framerates will require more capable hardware.

CPU limits do show up, with most GPUs topping out around 165-170 fps. That's at least an improvement over earlier versions of the game, where maximum fps was closer to 140.
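A simplified way to think about these CPU limits: the average framerate you see is roughly capped by whichever stage is slowest, whether that's the GPU, the CPU, or the game's 200fps limit. The Python sketch below models only that idea; the function name and example numbers are hypothetical, and real frame pacing (stutter, minimums) is messier than a single min().

```python
def effective_fps(gpu_limited_fps: float, cpu_limited_fps: float, frame_cap: float = 200.0) -> float:
    """Rough bottleneck model: the slowest of GPU, CPU, and frame cap sets the average fps."""
    return min(gpu_limited_fps, cpu_limited_fps, frame_cap)


# Hypothetical fast GPU at 1080p low: the CPU ceiling is the limit.
print(effective_fps(gpu_limited_fps=220, cpu_limited_fps=168))
# Hypothetical budget GPU: the GPU is the limit, so a faster CPU wouldn't help much.
print(effective_fps(gpu_limited_fps=45, cpu_limited_fps=168))
```

This is why the fastest cards bunch up around the same 165-170 fps at 1080p low: past a certain point, a faster GPU only raises a number that the CPU limit then discards.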

There are GPUs that simply can't handle Anthem, of course, and we're looking specifically at Intel's UHD Graphics 630. Even at 720p, it plugs along at just 17 fps, and 1080p drops framerates into the single digits. AMD's Vega 11 (in the Ryzen 5 2400G) does better as usual, though in this case that means playable at 720p and minimum quality and not much more.

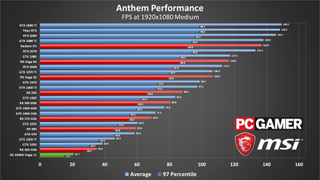

Medium settings are viable for most GPUs too, though the RX 560 and GTX 1050 both dip a little below 30fps at times. You don't need a monster GPU for a firm 60fps (an RX 580 8GB / GTX 1060 6GB or better will generally suffice), but if you want to max out the refresh rate of a 144Hz monitor, you'll be disappointed. G-Sync and FreeSync will help, or you could shoot for 120Hz instead, but the options are already narrowing fast. Thirteen GPUs could exceed 120fps with low settings, but only six can repeat the feat at 1080p medium: the RTX 2070 and above.
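It helps to think of these refresh targets as per-frame time budgets: every frame has to be finished within 1000 / fps milliseconds. This short Python sketch (the helper name is our own) shows why 144Hz is so much harder to hit than 60Hz.

```python
def frame_time_budget_ms(target_fps: float) -> float:
    """Milliseconds available to render each frame at a given framerate target."""
    return 1000.0 / target_fps


for target in (30, 60, 120, 144):
    print(f"{target:>3} fps -> {frame_time_budget_ms(target):.1f} ms per frame")
```

A 144Hz target leaves the game less than 7ms per frame, well under half of the roughly 16.7ms available at 60fps.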

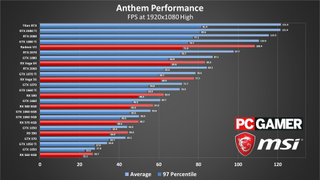

The high preset is where things really start to get dicey, as it's only slightly less stressful for most cards than the ultra preset. In general, the jump from the medium to high preset drops performance around 25 percent, and with the medium preset already pushing mainstream cards to the limit, many fall short. The GTX 1060 and RX 580 can't quite break 60fps averages (never mind minimums), and it's only higher-end GPUs (GTX 1070 Ti / Vega 56 and above) that are generally capable of staying above 60fps.

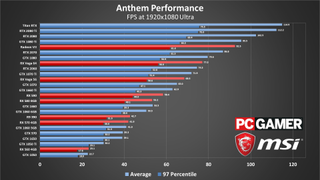

Ultra drops performance another 8-12 percent, with a few cards (oddly, the Radeon VII among them) showing slightly larger drops. It's a shame that not even the mightiest graphics cards can do 144fps at 1080p high, let alone ultra, and even 120fps is mostly out of reach. Again, adaptive refresh rate displays can help, and ultra-high fps certainly isn't required, but there's plenty of minor stuttering in Anthem. Much of that likely goes back to using the Frostbite engine for an open world game, though Anthem in general doesn't feel like it was built with high framerates in mind.
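Because each preset step scales the framerate of the step before it, the drops compound rather than add. The Python sketch below applies the approximate figures from our testing (about 25 percent from medium to high, and 8-12 percent from high to ultra, with 10 percent used here as a midpoint); the 80fps starting point and the function name are hypothetical.

```python
def estimate_preset_fps(medium_fps: float, high_drop: float = 0.25, ultra_drop: float = 0.10) -> dict[str, float]:
    """Estimate high and ultra averages from a measured medium-preset average.

    Each drop is a fraction of the previous preset's framerate, so they multiply.
    """
    high_fps = medium_fps * (1 - high_drop)
    ultra_fps = high_fps * (1 - ultra_drop)
    return {"medium": medium_fps, "high": high_fps, "ultra": ultra_fps}


estimate = estimate_preset_fps(80.0)  # hypothetical card averaging 80fps at 1080p medium
print({preset: round(fps, 1) for preset, fps in estimate.items()})
total_drop = 1 - estimate["ultra"] / estimate["medium"]
print(f"medium -> ultra: {total_drop:.1%} slower")
```

Under these assumptions, a card averaging 80fps at medium lands at 60fps on high and about 54fps on ultra, a combined drop of roughly 32.5 percent rather than the 35 percent you'd get by simply adding 25 and 10.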

Upping the resolution to 1440p rules out a lot of GPUs entirely: the GTX 1060 and RX 580 barely average 30fps, and only the RTX 2070 and Radeon VII and above average 60fps. If you want to keep minimums above 60 as well, only the RTX 2080 Ti will suffice—unless you want to include the "prosumer" Titan RTX. Dropping to the high preset can boost performance 10-15 percent, but medium or low would be required to get most high-end cards up to 60.

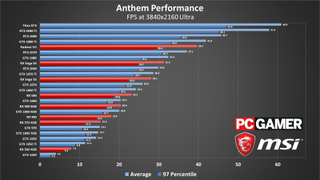

At least a few cards can still push playable framerates at 1440p, though—at 4K, using ultra quality is mostly a pipe dream. The Titan RTX gets there, and the 2080 Ti is close, but even the latter costs as much as a good midrange gaming PC. In fact, a mere smattering of eight cards can make it to 30fps. Realistically, you'd want an RTX 2070 or faster for 4K Ultra.

Using the minimum quality preset can just about double the framerate, or you can use DLSS on the RTX cards for about a 35-40 percent boost, but short of that you're better off with a lower resolution.

Of course, these results are averages, and there are places where Anthem can manage 4K with maxed-out settings and still be above that 60fps mark on lesser GPUs—some of the indoor missions, for example. But considering that Anthem's combat is a combination of explosive abilities, speedy jet maneuvers, and almost constant gunfighting, staying above 60fps is definitely helpful.

Overall, we've seen about a 15-20 percent increase in performance since the pre-release beta testing. While that may disappoint anyone hoping for larger performance improvements, it's not too bad and the game is now playable on most gaming PCs. Considering the overall reception, Anthem is more in need of a major reworking of its core mechanics than slightly better performance.

Anthem CPU benchmarks

The CPU side isn't much better, and there are no magic performance leaps to be found here either. Provided you have at least an Intel 6-core CPU (6th/7th Gen 4-core/8-thread should also suffice) or a 6-core/12-thread Ryzen, 60fps should be within reach. However, even at ultra quality and higher resolutions, having a Core i7-8700K or i9-9900K can help. The CPU tests use an RTX 2080 to highlight the differences between the CPUs as much as possible without resorting to the 2080 Ti.

Starting with Intel, at 1080p low and medium quality the i7-8700K and i9-9900K are up to 50 percent faster than the i3-8100. Move up to 1080p high or ultra quality and the gap narrows to around 30 percent, while at 1440p ultra it's only 10 percent, and finally at 4K ultra it's down to just a few percent at most.

The AMD story starts similarly, with the Ryzen 7 2700X beating the Ryzen 5 2400G at 1080p low/medium by about 40 percent, but unlike on the Intel side, the lead holds at 1080p high/ultra, at 35/45 percent. At 1440p the gap is 25 percent, and at 4K ultra it's 8 percent. Much of the difference appears to come from clockspeeds and cache sizes, and the 2400G's x8 PCIe link may also play a role. The Ryzen 5 2600X, meanwhile, is mostly on par with the 2700X: a few fps lower, but within the margin of error for Anthem testing.
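Percentage gaps like these are easy to misread, because "X percent faster" and "X percent slower" use different baselines. The Python sketch below uses hypothetical framerates (not our measured results) to show the difference; the helper names are our own.

```python
def percent_faster(fast_fps: float, slow_fps: float) -> float:
    """How much faster the quicker result is, relative to the slower one."""
    return (fast_fps / slow_fps - 1) * 100


def percent_slower(slow_fps: float, fast_fps: float) -> float:
    """How much slower the slower result is, relative to the quicker one."""
    return (1 - slow_fps / fast_fps) * 100


# Hypothetical 1080p low averages for a fast CPU and a budget CPU
fast, slow = 150.0, 100.0
print(f"{percent_faster(fast, slow):.0f}% faster")  # fast chip relative to the budget chip
print(f"{percent_slower(slow, fast):.1f}% slower")  # budget chip relative to the fast chip
```

In other words, a chip that is 50 percent faster leaves the other one only about 33 percent slower, not 50 percent.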

Compare the AMD and Intel results with each other and things get a bit messier. The Ryzen 7 2700X, a high-end 8-core/16-thread CPU, tends to be either behind or on even footing with the midrange 6-core/6-thread Core i5-8400. This isn't a clockspeed issue, either—the 2700X runs at 3.7-4.3GHz (typically 4.1GHz in testing), while the Core i5-8400 sits at 3.8GHz in testing. Then again, the Core i5-8400 has long been able to take on more expensive CPUs in games.

None of the chips dropped the RTX 2080 below a 60fps average, even on ultra quality, except for the Ryzen 5 2400G. Again, it's not clear if that's a motherboard issue, or the x8 PCIe link, or something else. The other CPUs should all be fine, though if you're using a 144Hz display you'll likely want to stick with Intel's Core i7 or i9 parts.

Even a lowly Core i3-8100 won't tank performance too much, and most people are unlikely to pair such a CPU with the likes of the RTX 2080. But if you're still running an older Haswell or earlier CPU with a high-end GPU, it's probably time to upgrade.

Anthem notebook performance

We've updated our notebook testing to use MSI's latest models, equipped with GeForce RTX cards. Anthem can stay above 60fps on all three of the notebooks, at least on 1080p low and medium, but you'll need an RTX 2080-equipped laptop like the MSI GE75 (or perhaps a non-Max-Q 2070) to do that at 1080p high and ultra quality.

That all sounds fine and dandy, but there's something a little worrying about these results. Namely, the RTX 2070 Max-Q is hardly any faster than a mobile RTX 2060. Max-Q GPUs are intentionally downclocked, so you should always approach them with the understanding that a degree of muscle has been sacrificed in exchange for efficiency, but the RTX 2060 (non-Max-Q) is basically in the same 80-90W range as the 2070 Max-Q. Considering the 2070 Max-Q laptops cost quite a bit more than the 2060 models, you're likely better off with the 2060.

CPU speed is another factor, as is the cooling setup on a laptop. It's kind of nuts that even the desktop GTX 1060 6GB generally does better than the mobile 2060 and 2070 Max-Q. Of course, ray tracing and DLSS aren't an option on the GTX cards, but since Anthem only supports DLSS at 1440p and 4K, it doesn't really matter here.

Official system requirements

Anthem's official system requirements are as follows.

Minimum:

- OS: 64-bit Windows 10

- CPU: Intel Core i5-3570 or AMD FX-6350

- RAM: 8GB

- GPU: Nvidia GTX 760, AMD Radeon HD 7970 / R9 280X

- GPU RAM: 2GB

- HARD DRIVE: At least 50GB of free space

- DIRECTX: DirectX 11

Recommended:

- OS: 64-bit Windows 10

- CPU: Intel Core i7-4790 3.6GHz or AMD Ryzen 3 1300X 3.5GHz

- RAM: 16GB

- GPU: Nvidia GTX 1060 / RTX 2060, AMD RX 480

- GPU RAM: 4GB

- HARD DRIVE: At least 50GB of free space

- DIRECTX: DirectX 11

While EA gives no indication of what sort of performance you can expect at either level, based on our testing, the minimum requirements should net you 30fps or more at 1080p low or medium, while the recommended setup will handle 1080p medium at 60fps. With an RTX 2060, though, you can comfortably run 1080p ultra at more than 60fps.

Desktop PC / Motherboards / Notebooks

MSI Z390 MEG Godlike

MSI Z370 Gaming Pro Carbon AC

MSI X470 Gaming M7 AC

MSI Trident X 9SD-021US

MSI GE75 Raider 85G

MSI GS75 Stealth 203

MSI GL63 8SE-209

Nvidia GPUs

MSI RTX 2080 Ti Duke 11G OC

MSI RTX 2080 Duke 8G OC

MSI RTX 2070 Gaming Z 8G

MSI RTX 2060 Gaming Z 8G

MSI GTX 1660 Ti Gaming X 6G

MSI GTX 1660 Gaming X 6G

MSI GTX 1650 Gaming X 4G

MSI GTX 1050 Ti Gaming X 4G

MSI GTX 1050 Gaming X 2G

AMD GPUs

MSI Radeon VII Gaming 16G

MSI RX Vega 64 Air Boost 8G

MSI RX Vega 56 Air Boost 8G

MSI RX 590 Armor 8G OC

MSI RX 580 Gaming X 8G

MSI RX 570 Gaming X 4G

MSI RX 560 4G Aero ITX

Closing thoughts

Anthem's troubles are well known and increasingly well documented, and we don't really want this performance analysis to be taken as another pitchfork poking out the top of a braying mob. For one thing, it's not like it runs truly badly (cheaper GPUs and CPUs can handle it at 1080p medium, at the very least), and Anthem often looks quite good, even at modest settings.

That said, it can be a very demanding game, and it's worrying that even midrange stalwart GPUs struggle to keep up with the higher quality settings. The official system requirements ambitiously list the GTX 1060 as one of the 'recommended' graphics cards, but it doesn't even come that close to 60fps at 1080p ultra, and it almost falls apart completely at 1440p.

Anthem's future is going to depend on a lot more than just how well it runs, but the fact that it currently feels at home only on the most expensive kit will make the path forward more difficult. Hopefully BioWare can continue to work on improvements, including better performance, but the delayed feature updates don't bode well for the game.

Jarred's love of computers dates back to the dark ages when his dad brought home a DOS 2.3 PC and he left his C-64 behind. He eventually built his first custom PC in 1990 with a 286 12MHz, only to discover it was already woefully outdated when Wing Commander was released a few months later. He holds a BS in Computer Science from Brigham Young University and has been working as a tech journalist since 2004, writing for AnandTech, Maximum PC, and PC Gamer. From the first S3 Virge '3D decelerators' to today's GPUs, Jarred keeps up with all the latest graphics trends and is the one to ask about game performance.
