10 years later, we can finally run Crysis

But as a performance analysis with 2017 hardware shows, it continues to punish even modern GPUs.

Today marks the 10-year anniversary of the release of Crysis, one of the most demanding games ever to grace a computer screen. Even after all these years, Crysis can still punish high-end PC hardware. What made the game so difficult to run? It was a confluence of many factors, combined with a desire by developer Crytek to push the limits of software and hardware. Back in 2007, no system could possibly run the game smoothly at maximum settings—and that would be true for years.

Crysis was one of the very first PC games to support DirectX 10, and it used the budding API in ways that perhaps weren't quite expected. Roy Taylor (of Nvidia at the time) stated that Crysis uses over 85,000 shaders—small programs designed to generate graphical effects. Using shaders in games is common these days, but in 2007 having that many was bleeding edge stuff. Shaders were also somehow involved with the game's antialiasing support (turning on AA forces Shader Quality to high, which itself causes a large impact to performance), but the complexity goes far beyond antialiasing. Crysis includes volumetric lighting, lush vegetation, and it was the very first game to ever implement screen space ambient occlusion.

Blazing new trails and being first doesn't always lead to a 'best' solution, and that was true of Crysis's SSAO. It looked good, perhaps a bit too good, and the hardware of the day wasn't up to the task. Many benchmarks ended up turning down the shader quality a notch to provide a reasonable balance between performance and quality—and really, Crysis with everything set to medium quality (plus high shaders if you want AA) still looks pretty amazing.

Launching Crysis also gives a few hints at other potential performance factors. You'll see the now-familiar "Nvidia The Way It's Meant To Be Played" promo video, followed by a "Play to win with Intel Core 2 Extreme inside" clip. Those are $1,000 processors that were rarely recommended, and while Core 2 Extreme included quad-core models by 2007, Crysis only ends up using two CPU cores in any meaningful way. Our modern 4-5GHz CPUs are much faster than a Core 2 or Athlon X2 from 2007, but a big part of that comes from increasing the core and thread count, which mostly won't help Crysis.

Crysis hardware in 2007

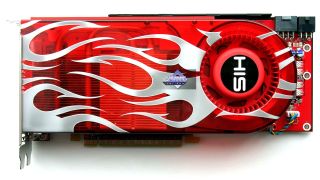

What's particularly shocking to me is just how old Crysis is in terms of the day's hardware. The state-of-the-art Nvidia GPUs at the time of launch were the GeForce 8800 GT/GTX/Ultra cards. The fastest of those is the 8800 Ultra, which delivered a measly 384 GFLOPS of compute performance, with a slightly more impressive 103.7GB/s of memory bandwidth.

AMD's best at the time was the HD 2900 XT, with the HD 3870 arriving one week after Crysis. Those offered 475 and 497 GFLOPS with 128GB/s and 72GB/s of bandwidth, respectively. To put that in perspective, a GTX 1080 Ti has about 30x the computational power and 4.5x the memory bandwidth of the 8800 Ultra, while the RX Vega 64 has about 26x the compute and 4x the memory bandwidth of the HD 2900 XT.
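
For anyone who wants to check those multipliers, here's a minimal Python sketch. The 2007 figures are the ones quoted above; the GTX 1080 Ti and RX Vega 64 numbers are assumed spec-sheet values (peak FP32 at boost clock, rounded) that aren't stated in this article, and the `CARDS` table and `speedup` helper are purely illustrative names.

```python
# (GFLOPS, GB/s) per card. The 2007 entries are quoted in the article.
# The 2017 entries are ASSUMED spec-sheet figures, not taken from the article.
CARDS = {
    "8800 Ultra": (384, 103.7),
    "HD 2900 XT": (475, 128),
    "GTX 1080 Ti": (11_340, 484),  # assumption: ~11.3 TFLOPS, 484GB/s
    "RX Vega 64": (12_660, 484),   # assumption: ~12.7 TFLOPS, ~484GB/s
}


def speedup(new: str, old: str) -> tuple[float, float]:
    """Return (compute ratio, bandwidth ratio) of the new card over the old one."""
    new_gflops, new_bw = CARDS[new]
    old_gflops, old_bw = CARDS[old]
    return new_gflops / old_gflops, new_bw / old_bw


for new, old in [("GTX 1080 Ti", "8800 Ultra"), ("RX Vega 64", "HD 2900 XT")]:
    compute, bandwidth = speedup(new, old)
    print(f"{new} vs {old}: {compute:.1f}x compute, {bandwidth:.1f}x bandwidth")
```

With those assumed inputs the ratios land close to the rounded figures in the text; different boost clocks would shift the compute numbers slightly.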

Now to be fair, the best monitors in 2007 consisted of 30-inch panels running at 2560x1600, but most places didn't even bother testing Crysis at that resolution because it just wasn't feasible. Our modern 4k resolution (3840x2160) is just over twice as many pixels, but with orders of magnitude more computational power, we should be able to take down Crysis with ease, right? Maybe…
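
The "just over twice as many pixels" figure is easy to verify. This is a quick sketch using only the two resolutions mentioned above; the `pixel_count` helper is a made-up name for illustration.

```python
def pixel_count(width: int, height: int) -> int:
    """Total pixels rendered per frame at a given resolution."""
    return width * height


uhd = pixel_count(3840, 2160)    # modern 4k
wqxga = pixel_count(2560, 1600)  # best 30-inch panels in 2007
ratio = uhd / wqxga

print(f"{uhd:,} vs {wqxga:,} pixels: {ratio:.3f}x")
# prints "8,294,400 vs 4,096,000 pixels: 2.025x"
```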

Maxing out Crysis at 4k with 4xAA

What does it take to run Crysis at maximum quality at 4k resolution? First, one small caveat: I couldn't max out antialiasing with 8xAA, which even now proved to be too much for any single GPU. Seriously. Multiple GPUs in SLI or CrossFire could do so, but I wanted to stick with a single graphics card. I did limited testing, mostly because I was only concerned with conquering Crysis, not providing a modern look at the past decade of graphics and processor hardware. As a point of reference, the GTX 1070 Ti only manages 42 fps in limited testing, so that's not going to suffice.

That leaves the obvious choice of the GTX 1080 Ti, which I paired with an i7-8700K. 1080p with Very High quality went down easily, as did 1440p, but 4k at 60+ fps was still just a bit out of reach.

So I overclocked the CPU to 4.8GHz and ran the GPU at around 2000MHz, with a small boost to the VRAM clock, and that did the trick. Mostly. There are a few things to note. First, don't use FRAPS with Crysis—it doesn't appear to like the game these days (in my experience, it caused major stuttering).

Second, you'll see in the video that framerates still dip below 60 fps on occasion. However, I'm recording the video using ShadowPlay (GeForce Experience), which at 4k ends up dropping gaming performance by around 10 percent. I'm also looking at an early portion of the game, and some of the later levels can be a bit more demanding. If I turn off ShadowPlay, average framerates are well above 60 fps, but there are still occasional fps drops.

Crysis settings overview, 10 years later

Beyond the steep system requirements, it's worth pointing out that even a decade later, Crysis is still one of the best-looking games on PC. There are a few shortcuts that might not be needed in a modern implementation—palm trees only break in one of two spots when you cut them down, shadows don't look quite as nice or as accurate as in the latest games, and you won't encounter large groups of NPCs. But other than excessive motion blur (which can thankfully be turned way down or off), there aren't any obvious signs of aging.

I did run through the various settings in Crysis, using a GTX 1070 Ti at 2560x1440, to see which options cause the largest impact to performance. For the baseline, I tested without anti-aliasing, since enabling it requires "high" shaders. Everything else was set to Very High, though it's worth noting that you can manually tweak the configuration files if you want additional control. Here's the quick summary:

For modern GPUs with lots of memory (and relative to 2007's Crysis, 'lots' means 2GB or more VRAM, so everything qualifies), Texture Quality, Shadows Quality, and Physics Quality make no difference in performance. In fact, setting these to Low actually dropped performance slightly, suggesting Nvidia's drivers may have been tuned over the years for the Very High settings.

Surprisingly, as I remember it being different back in the day, Volumetric Effects Quality (1%), Game Effects Quality (1%), Postprocessing Quality (3%), Particles Quality (1%), Water Quality (2%), and Motion Blur (2%) also fail to cause any major change in performance. That leaves just three settings you need to worry about—beyond resolution, naturally.

Anti-Aliasing Quality set to 4x causes a relatively large 15 percent drop in performance, and 8x AA is a 25 percent hit to framerates. Note that this isn't strictly MSAA, nor is it the newer post-processing based FXAA or SMAA. Instead, Crysis uses MSAA in combination with Quincunx AA at the higher levels on Nvidia cards.

Shaders Quality provides a similar hit to performance, and dropping to Low improved framerates by 15 percent—but the impact on visuals is very apparent, not to mention you can't use AA with anything below the High setting.

Objects Quality ends up being the biggest single ticket item in terms of framerates, and you need to exit to the main menu to change this setting. In testing, setting this to Low improves performance by around 25 percent, though it also causes very obvious object and detail 'pop' where things are only visible if they're in range.

While the individual impact of each setting is relatively minor, it's interesting that in combination (i.e., setting everything to Low) the result can be far greater. At 1440p with everything at Low, framerates more than doubled, giving a silky-smooth (and somewhat ugly) 224 fps on the 1070 Ti.
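
To see how much greater, here's a rough Python sketch that multiplies the individual percentages together as if each setting were independent. That independence is an assumption, as is treating the small 1 to 3 percent figures as gains from lowering each setting; the `GAINS` table and `naive_combined_speedup` helper are illustrative names only.

```python
import math

# Approximate per-setting changes quoted above, treated here as the gain
# from dropping each setting from Very High (an assumption for the 1-3% items).
GAINS = {
    "Shaders Quality": 0.15,
    "Objects Quality": 0.25,
    "Volumetric Effects Quality": 0.01,
    "Game Effects Quality": 0.01,
    "Postprocessing Quality": 0.03,
    "Particles Quality": 0.01,
    "Water Quality": 0.02,
    "Motion Blur": 0.02,
}


def naive_combined_speedup(gains: dict[str, float]) -> float:
    """Multiply the individual speedups as if every setting were independent."""
    return math.prod(1.0 + gain for gain in gains.values())


ALL_LOW_FPS = 224  # measured at 1440p with everything at Low

predicted = naive_combined_speedup(GAINS)
# "More than doubled" implies the Very High baseline was below half of 224 fps.
baseline_upper_bound = ALL_LOW_FPS / 2

print(f"naive prediction: {predicted:.2f}x, observed: more than 2x")
print(f"implied Very High baseline: under {baseline_upper_bound:.0f} fps")
```

The naive product comes out a bit under 1.6x, well short of the doubling I actually measured, which suggests the settings interact rather than stacking independently.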

Crysis post-mortem

Crysis spawned a meme that has lasted more than a decade, and we still haven't seen a true successor. Yes, modern games can be more demanding (Ark: Survival Evolved only manages 25 fps on a 1080 Ti at 4k Epic, for example), but the fastest GPUs back in 2007 would likely have pushed low single-digit framerates at 4k in Crysis, if they could even run it at all. And that's not even getting into the debate over graphics quality.

2007 was really an amazing year for PC gaming, including other giants like Valve's Orange Box (Portal, Half-Life 2: Episode 2, and Team Fortress 2), the original BioShock, and Stalker: Shadow of Chernobyl competing for top honors. But I can't name a single game that has pushed the graphics envelope as hard or as far as Crysis. It caused PC gamers everywhere to invest in new hardware, and it continues to stand as a shining example of ambitious PC-focused development.

Yes, we can finally run Crysis, and if you gave it a pass all those years ago due to the steep system requirements, it's still worth revisiting today.

Jarred's love of computers dates back to the dark ages when his dad brought home a DOS 2.3 PC and he left his C-64 behind. He eventually built his first custom PC in 1990 with a 286 12MHz, only to discover it was already woefully outdated when Wing Commander was released a few months later. He holds a BS in Computer Science from Brigham Young University and has been working as a tech journalist since 2004, writing for AnandTech, Maximum PC, and PC Gamer. From the first S3 Virge '3D decelerators' to today's GPUs, Jarred keeps up with all the latest graphics trends and is the one to ask about game performance.
