Nvidia unveils the GeForce GTX 680: massively multicore, insanely powerful

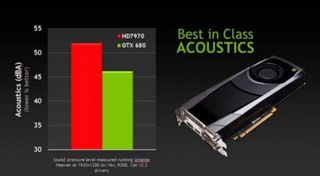

So, it's as powerful as three GTX 580s, yet uses less power, creates less heat, and of course, makes less noise. Nvidia has provided us with this handy image to drive home these points.

Running at 195W (as opposed to the 580's 205W and the 7970's 250W), it requires only two six-pin connectors, which your PSU will thank you for.

Now let's get to the fun stuff, the new stuff, the cool stuff, shall we?

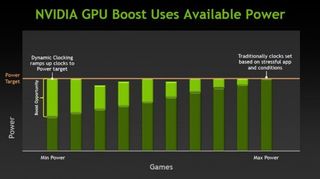

First off is Nvidia's new GPU Boost.

GPU Boost increases GPU performance by dynamically increasing its clock speed. Since it's dynamic, there's no setup or tweaking involved; it's one of those things that just works... or so Nvidia says. Technically, it's a clocking technique that monitors per-app power variation in real time and raises clock speeds accordingly. In other words, it watches your GPU workload and bumps the clock speed whenever there's power headroom to spare. How does it do that? I'm going to guess witchcraft.
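Witchcraft aside, the basic idea can be sketched in a few lines of Python. This is only a toy model of a power-based clock governor, not Nvidia's actual algorithm: the function name, clock limits and step size are all made-up placeholders, and only the 195 W target is borrowed from the power figure quoted earlier.

```python
def next_clock_mhz(current_mhz, board_power_w,
                   power_target_w=195.0,  # power figure quoted in this article
                   base_mhz=1000,         # hypothetical base clock
                   max_mhz=1100,          # hypothetical boost ceiling
                   step_mhz=10):          # hypothetical step size
    """Pick the clock for the next interval from the measured board power."""
    if board_power_w < power_target_w:
        # Power headroom available: step the clock up, capped at the ceiling.
        return min(current_mhz + step_mhz, max_mhz)
    # At or over the power target: step back down, never below the base clock.
    return max(current_mhz - step_mhz, base_mhz)


# A light workload (150 W) lets the clock creep up; a heavy one (200 W) pulls it back.
clock = 1000
for power in (150.0, 150.0, 200.0):
    clock = next_clock_mhz(clock, power)
```

The point of the sketch is simply that the clock is a function of measured power rather than a fixed setting, which is why there's nothing for the user to configure.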

Nvidia also claims that their FXAA (Fast Approximate Anti-Aliasing), which is becoming available in more and more games, is 60% faster than 4x MSAA and provides even smoother visuals.

However, the 680 also features a new type of anti-aliasing that Nvidia is calling TXAA.

TXAA is a combination of hardware anti-aliasing, a higher-quality ("film style") AA resolve, and optional temporal components for increased quality (in TXAA 2). It comes in two flavors: TXAA 1 and TXAA 2.

TXAA 1 comes at a similar cost to 2x MSAA but with edge quality better than 8x MSAA, while TXAA 2 comes at a similar cost to 4x MSAA but with quality that Nvidia says is "far beyond" 8x MSAA. We shall see.

Not every game will support TXAA right off the bat this coming year (Borderlands 2 and MechWarrior Online are among the few that already will), but you can force it via the control panel to see just how it affects your favorite games, such as Arkhamfield Infinite.

Jagged edges be damned, you're not welcome around the GTX 680!

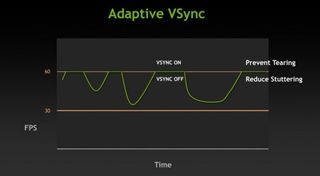

However, while TXAA is certainly interesting, one of the coolest features of the GTX 680, if not THE coolest, has to be Adaptive VSync. It lets your GPU dynamically enable or disable VSync depending on your system performance. When your frame rate meets or exceeds your monitor's refresh rate, the 680 turns VSync on to prevent tearing; when it drops below, the 680 turns VSync off so your frame rate doesn't get knocked down even further. It's that easy!

Nvidia says that this will prevent the halting, disconcerting frame rate stutter that regular VSync can cause, which is nearly as bad as the screen tearing VSync is trying to avoid.
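To see where that stutter comes from, here's a rough Python sketch comparing regular VSync with the adaptive kind. It assumes a simplified double-buffered model in which every frame has to wait for a whole number of screen refreshes; the function names and the 60 Hz default are illustrative assumptions, not anything from Nvidia's drivers.

```python
import math


def classic_vsync_fps(render_fps, refresh_hz=60.0):
    """Regular VSync: each frame is held until the next screen refresh."""
    # A frame that misses one refresh has to wait for the following one.
    refreshes_per_frame = math.ceil(refresh_hz / render_fps)
    return refresh_hz / refreshes_per_frame


def adaptive_vsync_fps(render_fps, refresh_hz=60.0):
    """Adaptive VSync: synced when the GPU keeps up, unsynced when it can't."""
    if render_fps >= refresh_hz:
        return refresh_hz  # VSync on: capped at the refresh rate, no tearing
    return render_fps      # VSync off: native frame rate, some tearing possible


# A GPU managing 50 fps on a 60 Hz monitor:
classic = classic_vsync_fps(50.0)    # 30.0, every frame waits two refreshes
adaptive = adaptive_vsync_fps(50.0)  # 50.0, VSync is switched off instead
```

In this model, dipping just under 60 fps with regular VSync drops you straight to 30, which is the kind of sudden halving that shows up as stutter. The adaptive version trades that for a bit of tearing while the frame rate is low.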

All of these new options are now available through the Nvidia control panel you've all come to know and love. (You know, the one that nags you with Windows popup messages every time new beta drivers are available.)

Last but not least, Nvidia has also proclaimed that the GTX 680 can power four monitors all by itself and has even shown off a single 680 doing so. To really rub it in, they gave the same demo with three of the four monitors running 3DSurround. Why anyone would put a fourth monitor on top of their already insane 3DSurround setup (or how for that matter) is beyond me, but it does make for this awesome image below. Fus Ro Dah indeed.

Oh, the price? Well, you can get the GTX 680 from a variety of Nvidia's partners starting today at $500+. Given that a 580 will run you around $400+ and a dual GPU 590 will run you around $700+, I'd say that's fair, if not downright reasonable.

So, should you go out and buy a GTX 680 today? The early adopter in me says yes, yes, oh hell yes, but the frugal young man in me stops and asks, "Do you need it?" Yes and no. If you've already got a great SLI setup that crushes anything and everything, there's really no need for you to upgrade, unless the thought of dynamic GPU clock boosting and adaptive VSync sounds too good to pass up. However, the fact that this card also runs cooler and quieter than the 580 and uses less power is a huge plus. I've currently got a GTX 590 (akin to two GTX 580s) and a GTX 560 Ti (dedicated to PhysX) in my rig, but after seeing for myself what the GTX 680 can do all by itself, and just how cool, quiet and dynamic it is, I think I might only need one GPU this year: the GTX 680.

Stay tuned for the full review and more.
