Here's what you need to know about Kaby Lake-X

Start small and 'step up' to a faster CPU in the future, but watch out for the pitfalls.

I'll be posting the Skylake-X i9-7900X and Kaby Lake-X i7-7740X reviews in the near future (I just received what I hope will be 'final' firmware for the test board). In the meantime, I wanted to talk specifically about Kaby Lake-X, as it can be a rather confusing part. What is Kaby Lake-X, and do we really need it?

To start, LGA2066 with the X299 chipset is Intel's latest 'enthusiast' platform, offering the potential for up to 18-core processors. (The LGA115x series of sockets has been Intel's 'mainstream' platform for the past eight years.) Previously, the bottom of the enthusiast platform started with 6-core parts (for Haswell-E and Broadwell-E on LGA2011-v3), but Intel also has a couple of lower tier parts this round, the i7-7740X and the i5-7640X. These are both Kaby Lake-X (KBL-X) processors, which end up being quite different from Skylake-X (SKL-X).

For one, this is the first time Intel has had a Core i5 part on its enthusiast platform. It's there primarily as a stepping stone. The idea is that you can buy the appropriate motherboard and other components, but start small on the CPU side of things with a Core i5 or quad-core i7 part. Then, in the future when you're ready to put more money into the system, you can upgrade to the full-fledged Core i9 of your choice. Assuming you ever have the need to move beyond your starting processor, of course.

Here's where things get confusing. LGA2066 has the potential for quad-channel DDR4 memory and up to 44 PCIe lanes, but you only get that on the Skylake-X parts, and only the Core i9 parts get the full 44 PCIe lanes. If you choose to go with one of the quad-core Kaby Lake-X parts, however, you're further restricted to only 16 PCIe lanes from the CPU. The chipset still provides up to 24 additional PCIe lanes, though they all share a single DMI 3.0 connection to the CPU. That's a bit of a concern, but it gets even worse: you lose two of the memory channels.
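To put a rough number on that shared DMI link, here's a back-of-the-envelope sketch. It assumes (this isn't from Intel's documentation as quoted here) that DMI 3.0 behaves like a PCIe 3.0 x4 link, with 8 GT/s per lane and 128b/130b encoding, which works out to roughly 3.9 GB/s each way. The function and constant names are made up for illustration.

```python
# Rough bandwidth arithmetic for chipset lanes that share one DMI 3.0 uplink.
# Assumption (not stated in the article): DMI 3.0 is comparable to a
# PCIe 3.0 x4 link, i.e. 8 GT/s per lane with 128b/130b encoding.

GT_PER_SEC = 8.0        # PCIe 3.0 raw transfer rate per lane, in GT/s
ENCODING = 128 / 130    # 128b/130b line-code efficiency
DMI_LANES = 4           # assumed width of the DMI 3.0 uplink


def lane_gb_per_sec() -> float:
    """Approximate one-direction throughput of a PCIe 3.0 lane in GB/s."""
    return GT_PER_SEC * ENCODING / 8  # divide by 8 bits per byte


def uplink_gb_per_sec() -> float:
    """Approximate one-direction throughput of the DMI uplink in GB/s."""
    return DMI_LANES * lane_gb_per_sec()


def demand_ratio(busy_chipset_lanes: int) -> float:
    """How many times the uplink bandwidth the busy chipset lanes could ask for."""
    return busy_chipset_lanes / DMI_LANES


if __name__ == "__main__":
    print(f"DMI 3.0 uplink: ~{uplink_gb_per_sec():.2f} GB/s each way")
    for lanes in (4, 8, 16):
        print(f"{lanes:2d} busy chipset lanes could request "
              f"{demand_ratio(lanes):.0f}x the uplink bandwidth")
```

In practice chipset devices rarely all run flat out at once, so this is a worst case rather than a typical one.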

What that means in practice is that even though most X299 motherboards will have eight DIMM slots for memory, if you're using a Kaby Lake-X processor, half of those slots are useless. So…

But it's not just memory slots that are disabled with Kaby Lake-X. Depending on the motherboard, you may also find one or more of the PCIe slots (or, alternatively, M.2 slots) disabled. Gigabyte's X299 motherboards, for example, have five (physical) PCIe x16 slots. Slots 1, 3, and 5 come from the CPU, with slots 2 and 4 from the chipset, but slot 5 only works if you have a Skylake-X processor. If you have one of the 44 lane CPUs (Core i9), slots 1 and 3 are x16 connections, and slot 5 is an x8 connection. Use a 28 lane CPU (i7-7820X and i7-7800X) and slot 1 gets an x16 connection with slot 3 as an x8 connection, but if you put something in slot 5 then both slots 1 and 5 become x8 connections. Other motherboard vendors are free to do as they see fit, but basically you need to do some research before buying X299 to know exactly what you're getting.
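The Gigabyte slot rules above are easier to follow as a lookup. This is only a sketch of the behavior as described in this article, not something taken from Gigabyte's manuals. The function name is hypothetical, and because the text doesn't say how slots 1 and 3 are wired with a 16 lane Kaby Lake-X chip, those come back as `None`.

```python
from typing import Dict, Optional


def cpu_slot_widths(cpu_lanes: int,
                    slot5_populated: bool = False) -> Dict[int, Optional[int]]:
    """Map CPU-fed slots (1, 3, 5) to their electrical width.

    0 means the slot is unused or disabled; None means the article
    does not state the width for that configuration.
    """
    if cpu_lanes == 44:
        # Core i9: x16 / x16 / x8
        return {1: 16, 3: 16, 5: 8}
    if cpu_lanes == 28:
        if slot5_populated:
            # Slot 1 drops to x8 so slot 5 can have x8; slot 3 stays x8
            return {1: 8, 3: 8, 5: 8}
        return {1: 16, 3: 8, 5: 0}
    if cpu_lanes == 16:
        # Kaby Lake-X: slot 5 does not work; slots 1 and 3 are not specified
        return {1: None, 3: None, 5: 0}
    raise ValueError(f"unexpected CPU lane count: {cpu_lanes}")


if __name__ == "__main__":
    for lanes, slot5 in ((44, True), (28, False), (28, True), (16, False)):
        print(lanes, slot5, cpu_slot_widths(lanes, slot5))
```

Other vendors will wire things differently, so treat the table as specific to the boards described here.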

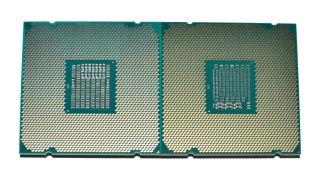

Which brings me back to the actual CPU die. Some have wondered why KBL-X only has a dual-channel memory controller. The reason is simple: the actual silicon chip in the CPU package is none other than Kaby Lake. Meaning, it has four cores, two memory channels, 256kB L2 cache per core, and even a decent chunk of real estate dedicated to integrated graphics. Except, LGA2066 doesn't have any support for integrated graphics, so that part of the chip is completely disabled. Yeah. Put another way, there are 2066 pins on the socket for a reason, but Kaby Lake-X effectively ignores about 1000 of them.

This is obviously a business decision. It's less expensive to repurpose an existing die than to make a new custom die just for Kaby Lake-X, and it creates additional separation between the processor tiers. But going this route makes the various CPUs for LGA2066 very different. We have a wide range of potential configurations, from quad-core/quad-thread with dual-channel memory and 16 PCIe lanes, to 6/8-core designs with quad-channel memory and 28 PCIe lanes, to 10-18 core parts with quad-channel memory and 44 PCIe lanes.
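Those three tiers can be summed up in a small lookup, which also shows why half the DIMM slots go dark on an eight-slot board. This is an illustrative sketch using only the figures in this article. The class and function names are hypothetical, and the even split of slots across channels is an assumption about typical board layouts.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Tier:
    name: str
    min_cores: int
    max_cores: int
    memory_channels: int
    cpu_pcie_lanes: int


# Figures as given in the article for LGA2066 parts.
TIERS = (
    Tier("Kaby Lake-X", 4, 4, 2, 16),
    Tier("Skylake-X (6/8-core)", 6, 8, 4, 28),
    Tier("Skylake-X (10-18 core)", 10, 18, 4, 44),
)


def tier_for(cores: int) -> Tier:
    """Return the platform tier that a given core count falls into."""
    for tier in TIERS:
        if tier.min_cores <= cores <= tier.max_cores:
            return tier
    raise ValueError(f"no LGA2066 tier listed for {cores} cores")


def usable_dimm_slots(tier: Tier, board_slots: int = 8,
                      board_channels: int = 4) -> int:
    """Slots that still work, assuming slots are split evenly across channels."""
    return board_slots * tier.memory_channels // board_channels


if __name__ == "__main__":
    for cores in (4, 8, 10):
        t = tier_for(cores)
        print(f"{cores} cores: {t.name}, {t.cpu_pcie_lanes} lanes, "
              f"{usable_dimm_slots(t)} usable DIMM slots")
```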

This results in additional complexity, which I've encountered in my initial testing of Skylake-X. I've run through three BIOS revisions on one board, and I'm working through the fourth now. The first version was early and not fully optimized in a variety of ways. The second BIOS had wacky turbo modes that ended up overclocking the i9-7900X to 4.5GHz on all 10 cores, which is fast but also out of spec. The third (after I complained to the vendor about the auto-overclocking) fixed things by running the i9-7900X at 4.0GHz. Manually setting the turbo modes on either BIOS resulted in unexpected behavior. I still haven't even gotten around to properly testing the PCIe slots to ensure they're working as expected.

If you're wondering, the actual spec for the i9-7900X is supposed to be up to 4.5GHz on two cores, with in-between turbo states for 3-7 core loads, and 4.0GHz max for 8-10 core loads. This is important for gaming in particular, because while things like video encoding will use all 10 cores, most games will only push 4-6 cores at most. Not to mention running 10 cores at 4.5GHz requires a lot of power. Do a video encode and you should expect 4.0GHz, but play a game and you could see 4.3GHz or even 4.5GHz—not a huge jump, but it does mean Skylake-X parts shouldn't be substantially slower than Skylake when it comes to gaming.
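As a quick way to sanity check a BIOS against that spec, here's a sketch that returns the range the maximum turbo clock should fall in for a given number of loaded cores. The exact in-between bins for 3-7 cores aren't listed here, so the function only reports bounds for them. The function name is hypothetical.

```python
from typing import Tuple


def i9_7900x_turbo_bounds(active_cores: int) -> Tuple[float, float]:
    """Return (low, high) GHz bounds on max turbo for the given core load.

    Based only on the figures quoted in the article: up to 4.5GHz on two
    cores, in-between states for 3-7 cores, 4.0GHz for 8-10 cores.
    """
    if not 1 <= active_cores <= 10:
        raise ValueError("the i9-7900X has 10 cores")
    if active_cores <= 2:
        return (4.5, 4.5)
    if active_cores <= 7:
        # Exact intermediate bins are not given, so only bound them
        return (4.0, 4.5)
    return (4.0, 4.0)


def in_spec(active_cores: int, observed_ghz: float) -> bool:
    """True if an observed clock does not exceed the upper bound for that load."""
    return observed_ghz <= i9_7900x_turbo_bounds(active_cores)[1]


if __name__ == "__main__":
    # An all-core 4.5GHz reading is above the quoted 4.0GHz ceiling
    print(in_spec(10, 4.5))
    print(in_spec(10, 4.0))
```

Running all 10 cores at 4.5GHz fails the check, while 4.0GHz passes.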

All this might sound a bit crazy, considering that X299-based motherboards and processors go on sale starting next week (and are available for pre-order already), but for new platforms this sort of thing is basically the norm. Ryzen motherboards for example had memory support issues that are still being addressed, and at least one motherboard I tested had a secondary x16 PCIe slot that didn't work (which was later fixed with a new BIOS). Kaby Lake's launch didn't have too many issues, mostly because it wasn't all that different from Skylake, but Skylake had a lot of firmware optimizations that had to be worked out. And X99 before that was quite problematic at times. Hell, I have an X99 board where it still auto-overclocks the i7-6950X to 4.0GHz at 'stock' settings (and overheats and throttles on heavier workloads); it never did receive a 'proper' BIOS for Broadwell-E.

What about Kaby Lake-X, though—is it even worth considering? Yes, for a few niche markets. First, the stock clocks are slightly higher than Kaby Lake, so the i5-7640X runs at 4.0GHz base and 4.2GHz turbo, and more importantly it will do all four cores at 4.2GHz running stock. Similarly, the i7-7740X has a 4.3GHz base and 4.5GHz turbo, and will run all four cores at 4.5GHz stock. The change in package also appears to have unlocked a bit more overclocking potential, maybe 100-200MHz extra relative to the i7-7700K. And of course, you can buy an i5-7640X and upgrade to a 10-core or even 18-core processor in the future, plus you get a few benefits from an X299 motherboard that you might not get on a Z270 board. I suspect at least part of the reason KBL-X exists is for OEMs and system integrators who only want to validate a single platform for gaming PCs.

If none of those reasons sound particularly compelling—and for most of you, they shouldn't—Kaby Lake-X is essentially a more expensive option compared to Kaby Lake. The cost isn't in the CPU, though, it's in the rest of the platform—X299 boards will likely start at $200 and up, where decent Z270 boards can be found for under $150. It's not inherently bad, but it only adds confusion to an already complex lineup of processors.

Jarred's love of computers dates back to the dark ages when his dad brought home a DOS 2.3 PC and he left his C-64 behind. He eventually built his first custom PC in 1990 with a 286 12MHz, only to discover it was already woefully outdated when Wing Commander was released a few months later. He holds a BS in Computer Science from Brigham Young University and has been working as a tech journalist since 2004, writing for AnandTech, Maximum PC, and PC Gamer. From the first S3 Virge '3D decelerators' to today's GPUs, Jarred keeps up with all the latest graphics trends and is the one to ask about game performance.
