Call of Duty: Modern Warfare PC settings guide, system requirements, benchmarks, ray tracing, and more

A return to form for both Call of Duty and Intel, though the ray tracing effects and Ryzen performance are lacking.

Call of Duty: Modern Warfare is a surprising reclaiming of the franchise's former glory, marrying modern gameplay and visuals with Modern Warfare's iconic story and settings. These modern visuals can look great on almost any machine, with budget PCs putting out extremely playable framerates even at maxed out settings, though not including ray tracing. Turning on the ray tracing effects with RTX hardware isn't too rough, but GTX graphics cards will struggle mightily.

A notable hardware story with Call of Duty is its odd performance on AMD hardware. Radeon graphics cards put up a good fight against their Nvidia competitors, but the Ryzen processors don't look so hot. It's not that you can't play Modern Warfare on a Ryzen CPU, but the latest flagship Ryzen 9 3900X performs worse than Intel's last-gen Core i5-8400 at 1080p, and even the Core i3-8100 jumps ahead at 1440p and 4K. It's not all smooth sailing for Intel, either, as Hyper-Threading appears to hurt performance. That might explain the Ryzen results as well; either way, the game engine doesn't seem to deal well with high core counts.

As our partner for these detailed performance analyses, MSI provided the hardware we needed to test Call of Duty: Modern Warfare (2019) on a bunch of different AMD and Nvidia GPUs, multiple CPUs, and several laptops. See below for the full details, along with our Performance Analysis 101 article. Thanks, MSI!

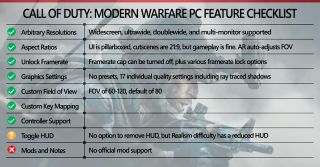

Features are fairly standard for an Activision title these days. Resolutions of all shapes and sizes are supported, including double-wide and multi-monitor setups. In testing, 16:9, 21:9, and even 32:9 look correct, with the latter two giving a much wider field of view by default. The intro movies are also in 21:9, which is a nice touch. Nearly everything else is as expected for a AAA game of this caliber: controller support, a healthy number of graphics settings to play with, and the usual FOV and framerate limits.

CoD:MW does limit the HUD removal options, however. You can't simply turn the HUD off, but instead have to play in Realism mode. In the singleplayer campaign, that's a higher difficulty, while in multiplayer you're left with a small unremovable reticle in the center of the screen and a tiny killstreak pager that beeps once your perks are available. It also heavily buffs weapon damage and lowers defensive stats, making the game much less forgiving. If this style of play is your cup of tea, great! Otherwise, you're stuck with an always-on HUD. There's also Ansel support for capturing images, though only on Nvidia GPUs.

Call of Duty: Modern Warfare system requirements

Infinity Ward built Modern Warfare to be played on modern hardware, though you can certainly get by with much less. Here's the developer's minimum and recommended specs:

Minimum PC specifications:

- OS: Windows 7 64-bit (SP1) or Windows 10 64-bit

- CPU: Intel Core i3-4340 or AMD FX-6300

- Memory: 8GB

- GPU: Nvidia GTX 670/GTX 1650 or AMD HD 7950

- DirectX 12.0 compatible

- Storage: 175GB

Recommended PC specifications:

- OS: Windows 10 64-bit

- CPU: Intel Core i5-2500K or AMD Ryzen R5 1600X

- Memory: 12GB

- GPU: Nvidia GTX 970/GTX 1660 or AMD R9 390/RX 580

- DirectX 12.0 compatible

- Storage: 175GB

Modern Warfare's minimum spec is a bit higher than other games, suggesting current-gen graphics cards. And that's overlooking the mind-boggling 175GB of storage space for the game itself. That size makes even Red Dead Redemption 2 look pint-sized, so I would advise setting aside an afternoon just for the game to download. The game originally launched at 112GB, but two updates have already pushed that to 141GB and future updates are planned.
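
To put "setting aside an afternoon" in numbers, here is a rough back-of-the-envelope sketch. The 175GB figure is the storage requirement listed above (the actual download may be smaller, as the patch sizes suggest), and the connection speeds are purely illustrative assumptions rather than anything we measured. Real downloads will usually take longer because of protocol overhead and server-side limits.

```python
# Rough download-time estimate for the 175GB install listed in the requirements.
# The connection speeds below are assumed examples, not measured values.

GAME_SIZE_GB = 175  # storage figure from the system requirements


def download_hours(size_gb: float, speed_mbps: float) -> float:
    """Return hours needed to download size_gb at speed_mbps (megabits/s).

    Uses decimal units (1 GB = 8000 megabits) and ignores protocol overhead.
    """
    megabits = size_gb * 8000
    return megabits / speed_mbps / 3600


for speed in (25, 100, 500):  # hypothetical line speeds in Mbps
    print(f"{speed:>4} Mbps: {download_hours(GAME_SIZE_GB, speed):.1f} hours")
```

On an assumed 100 Mbps connection that works out to just under four hours, so an afternoon is about right; slower connections are looking at an overnight job.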

The recommended specs aren't much higher, though the fact that a 2nd Gen Intel chip is paired against a Ryzen 5 suggests Infinity Ward knew AMD's CPUs would struggle a bit. Based on our testing and a little bit of number-comparing for the older parts, the minimum spec should have no problem breaking 60fps at 1080p min, while recommended specs would net somewhere around 60 fps at 1080p max.

Call of Duty: Modern Warfare settings overview

Modern Warfare doesn't provide any graphics presets for you to choose from. Instead, it gives you 16 individual graphics quality settings to play around with, and most of these hardly affect performance. Lower-end cards like a GTX 1650 take a hit to performance when going from minimum to maximum, but modern midrange and high-end cards go from extremely playable to slightly less extremely playable at 1080p. Unless you need to make every bit of VRAM count, I'd suggest aiming for maxed out (or nearly maxed out) settings; the game looks significantly better for not much of a hit to performance.

There are two exceptions to the above. First, AMD cards with only 4GB failed to run Modern Warfare at maximum quality—they simply crashed to desktop. Updated drivers or a game patch may fix this, as the GTX 1050 2GB card didn't completely fail to run.

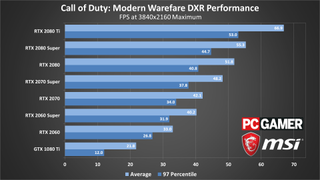

Second, DirectX Raytracing (DXR) is available on Nvidia cards, as Modern Warfare has joined the hall of games with RTX support. But don't expect to see Control levels of graphical improvement; ray tracing on Modern Warfare is almost unnoticeable. The shadows and light casting look better with ray tracing, but not by a significant margin, and after Control, it's odd to not have accurate reflections. For 30 percent lower performance, you're getting very little impact to your viewing experience, so unless you really need to have all settings maxed for cool points, leaving ray tracing turned off isn't a bad idea.

That goes double if you have a GTX graphics card with 6GB or more VRAM. You can technically enable ray tracing, but the GTX 10-series hardware sees framerates cut to one third their non-ray traced levels, while GTX 16-series hardware doesn't quite drop by half.

Call of Duty: Modern Warfare graphics card benchmarks

For testing CoD, we used the singleplayer campaign on the first mission. It was reasonably demanding and, unlike multiplayer, it's possible to roam around without repeatedly dying. Performance can be lower or higher in other maps and game modes, but our test at least gives a baseline level of performance.

For these tests, we only used the minimum (all settings to low) and maximum (all settings to high except DXR) options for 1080p, and full maximum quality at 1440p and 4K. We also tested with DirectX raytracing shadows enabled on many of the GPUs, using the same settings otherwise.

Our GPU testbed is the same as usual, an overclocked Core i7-8700K with high-end storage and memory. This is to reduce the impact of other components, though we'll test CPUs and laptops below.

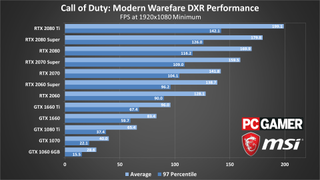

At 1080p minimum, Modern Warfare doesn't really look like Call of Duty. The flat, lifeless colors and low resolution of textures can look blocky and ugly, particularly on some of the effects like fires and lights. The good news is that every graphics card we tested is very playable. Even the RX 560 and GTX 1050 average 60 fps, with occasional dips only going down to 50. Anything faster won't have any problems.

Going up the ladder presents a very familiar graph, with most cards exactly where you'd expect. And in a bit of a win for AMD, the normally lackluster Vega 56 and 64 beat out the GTX 1070 by a solid margin, though the RTX 2060 manages to match them. Meanwhile, every card in the top 10 is pretty much in the same places they take in almost every other hardware test.

Ray tracing (DXR) with everything else turned down doesn't really make much sense, but it does show that it's the DXR calculations causing the massive drop in performance. The 2060 drops from 180 fps to 'only' 128 fps, and the particle effects and textures still look awful.

The GTX 1070 meanwhile plummets from 143 fps without DXR to just 40 fps with DXR. Yikes. The newer Turing architecture GTX 1660 meanwhile goes from 137 fps without DXR to 83 fps with DXR. That concurrent FP + INT hardware in Turing certainly can be useful.
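
For a clearer view of how differently the architectures cope, the quoted averages can be turned into percentage losses. This is only a small sketch using the three pairs of 1080p minimum numbers mentioned in the text; the function name `dxr_cost` is ours, picked for illustration.

```python
# Average fps at 1080p minimum, without and with DXR, as quoted in the text.
results = {
    "RTX 2060": (180, 128),
    "GTX 1070": (143, 40),
    "GTX 1660": (137, 83),
}


def dxr_cost(fps_off: float, fps_on: float) -> float:
    """Return the percentage of performance lost when DXR is enabled."""
    return (1 - fps_on / fps_off) * 100


for gpu, (off, on) in results.items():
    print(f"{gpu}: {off} -> {on} fps ({dxr_cost(off, on):.0f}% slower)")
```

That comes out to roughly 29 percent for the RTX 2060, 39 percent for the GTX 1660, and 72 percent for the GTX 1070, which lines up with the earlier rule of thumb: RTX cards lose about a third, Turing GTX cards a bit under half, and Pascal cards more than two thirds.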

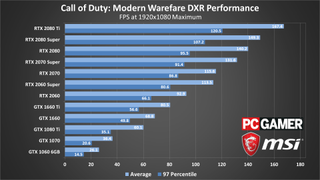

Cranking graphics settings up to maximum, the story largely remains the same. All cards take about a 25% dip in performance, and the only changes are with cards that were already within a handful of frames of each other. The GTX 1050 drops to around console framerates, and anything slower could be deemed unplayable. AMD's 4GB cards also failed to run and aren't present in these charts.

No cards make surprising rises or falls, and curiously, all cards drop at almost the same rate. That means, at least with our overclocked Core i7-8700K test CPU, we're almost purely GPU limited, even with the RTX 2080 Ti. The game also looks much nicer, especially in night missions like "Going Dark" that depend on shadows and reflections to truly stand out.

If you have an RTX GPU, enabling DXR is certainly still possible. Even the RTX 2060 stays above 60 fps on minimums. The GTX 1660 cards also do surprisingly well—again, thanks to the concurrent FP and INT hardware in Nvidia's Turing architecture. A GTX 1080 Ti meanwhile can only just barely average 60 fps, with stutters from minimum fps dropping into the 30s. If you want to try ray tracing, in other words, you really need a Turing GPU.

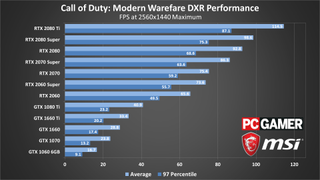

Now the culling begins, with anything short of an RX 580 dropping below 60fps and only cards above the 2060 Super managing to stay above 100fps. For single-player experiences, I would say 30-40fps is playable, but in online titles where your refresh rate, frames per second, and input lag all impact your playing performance, I would avoid dropping below 60fps at all costs. Keep your 1060s set to 1080p, lest your K/D ratio drop to unforgivable lows.

The high-end graphics cards mostly stay in the same positions as 1080p max, with the 1080 Ti and RX 5700 XT swapping spots. Also note that not even a 2080 Super can max out a 144Hz 1440p display, and even the 2080 Ti dips below that on minimums.

DXR naturally makes things even more demanding. For improved shadows that you may or may not notice in the heat of battle, the RTX 2060 goes from buttery smooth to I-can't-believe-it's-not-butter. None of the GTX cards are even worth discussing at 1440p, but the 2070 Super and above keep minimums above 60 as well. But the most competitive gamers are better off with a 144Hz or even 240Hz monitor running at 1080p.
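
The reason high refresh rates are so hard to feed is easier to see as a per-frame time budget. The sketch below is plain arithmetic, not anything taken from the game or our test tools: it converts a refresh rate into the number of milliseconds the PC has to finish each frame.

```python
def frame_budget_ms(refresh_hz: float) -> float:
    """Milliseconds available per frame to keep pace with a given refresh rate."""
    return 1000 / refresh_hz


for hz in (60, 144, 240):
    print(f"{hz} Hz: {frame_budget_ms(hz):.2f} ms per frame")
```

A 60Hz display leaves about 16.7 ms per frame, 144Hz leaves about 6.9 ms, and 240Hz only about 4.2 ms, which is why dropping to 1080p is the practical way to keep those monitors fed.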

The pinnacle of PC gaming, 4K ultra (or maximum, since there is no ultra preset) is surprisingly forgiving to our GPUs. While every card below the GTX 1070 is now borderline unplayable for a multiplayer shooter, everything from the RTX 2070 and up can hit the coveted 60 fps.

For AMD, the Radeon VII and RX 5700 XT trade places, finally favoring the older but theoretically more powerful GPU. Both are right on the 60 fps threshold as well, along with the GTX 1080 Ti. Only the RTX 2070 Super and beyond consistently reach 60 fps, with the 2080 Ti delivering an impressive 94 fps average. While the cost of entry to 4K ultra 60fps here is $499, it's still a wide field of viable cards for peak performance.

Or you can enable DXR and drop the 2080 Ti to the point where it waffles around the 60 fps mark, depending on the scene, and every other GPU falls shy of 60. And no, you can't run with SLI to boost performance with DXR, sorry.

Call of Duty: Modern Warfare CPU benchmarks

Now to address the elephant in the room. For some reason, Ryzen takes a huge performance hit in Call of Duty. The Ryzen 5 3600 and Ryzen 9 3900X fall well behind their usual Intel rivals. The previous generation Ryzen 5 2600 looks even worse. At 1080p, the R9 3900X has trouble keeping up with the i5-8400, and the story only gets worse moving into higher resolutions. Here are the graphs below:

In 1080p min and max, the two Ryzen 3000 series chips we tested fall 30 frames below the i5-8400, with the older Ryzen 5 2600 trailing them by an additional 30 fps at minimum quality. Intel's range of products all fall down the line in order, with the overclock on our i7-8700K barely making any difference versus stock. Only the 4-core/4-thread i3-8100 really falls off the pace.

DXR drops performance on everything, with a much heavier GPU load, but the Ryzen CPUs all continue to trail the Core i5. The faster Intel CPUs (i.e., not the i3) with ray tracing perform almost as well as the Ryzen chips without ray tracing.

When we move up to 1440p or 4K, there's some interesting moving around in the ranks. The i5-8400 jumps up to second place, surpassing both i7-8700Ks. The i3-8100 also makes a surge, joining the rest of Intel handily above the Ryzen family, though minimum fps is still lower at 1440p.

This seeming superiority of the slower i5-8400 processors doesn't necessarily indicate an Intel preference, but rather that the game engine doesn't quite know what to do with higher thread count processors. Having no Hyper-Threading on the i5-8400 overcomes even a 1000MHz deficit relative to the overclocked 8700K. Even though we live in a time where real-time ray tracing exists, we still don't have nice multi-core support.

Call of Duty: Modern Warfare laptop benchmarks

The three laptops MSI provided feature RTX 2080, RTX 2070 Max-Q, and RTX 2060 GPUs. Based on general rules of thumb with laptop GPUs, these shouldn't perform too terribly with Modern Warfare, especially since we're limited to 1080p, though DXR could be a bit of a problem on the lower-spec models.

Since the RTX 2060 and RTX 2070 Max-Q offer near-identical performance, the GS75 and GL63 trade blows in this matchup, staying within margin-of-error of each other. And since the laptops have 1080p displays, they end up being CPU limited. All three laptops still struggle to keep up with the full-sized cards, with the desktop RTX 2060 barely edging the GE75 at 1080p minimum but falling behind at 1080p maximum.

We also tested with DXR on, and surprisingly, all three laptops can keep Call of Duty at very playable framerates at both minimum and maximum quality. The GE75 generates a bit of a lead at 1080p max without DXR but seems to fall back once DXR is enabled.

Still, even if these laptops run Modern Warfare adequately, they take a huge hit compared to their full-sized brothers. This is the cost of operation with gaming laptops: higher price tags and lower performance for the sake of portability. If you're looking to fight some terrorists at a friend's house, great, but otherwise a gaming desktop and non-gaming laptop for on-the-go productivity tasks is more sensible.

Final thoughts

Desktop PC / motherboards / Notebooks

MSI MEG Z390 Godlike

MSI Z370 Gaming Pro Carbon AC

MSI MEG X570 Godlike

MSI X470 Gaming M7 AC

MSI Trident X 9SD-021US

MSI GE75 Raider 85G

MSI GS75 Stealth 203

MSI GL63 8SE-209

Nvidia GPUs

MSI RTX 2080 Ti Duke 11G OC

MSI RTX 2080 Super Gaming X Trio

MSI RTX 2080 Duke 8G OC

MSI RTX 2070 Super Gaming X Trio

MSI RTX 2070 Gaming Z 8G

MSI RTX 2060 Super Gaming X

MSI RTX 2060 Gaming Z 8G

MSI GTX 1660 Ti Gaming X 6G

MSI GTX 1660 Gaming X 6G

MSI GTX 1650 Gaming X 4G

AMD GPUs

MSI Radeon VII Gaming 16G

MSI Radeon RX 5700 XT

MSI Radeon RX 5700

MSI RX Vega 64 Air Boost 8G

MSI RX Vega 56 Air Boost 8G

MSI RX 590 Armor 8G OC

MSI RX 580 Gaming X 8G

MSI RX 570 Gaming X 4G

MSI RX 560 4G Aero ITX

As usual, a huge thank you to MSI for providing us the hardware for testing Call of Duty: Modern Warfare. Modern Warfare is a huge step in the right direction for the franchise, going back to the realism fans look for but still providing an interesting storyline, though it would be nice to not run into as many darn claymores in every single multiplayer match. As for the hardware requirements, the GPUs fall pretty much where you'd expect, but gamers with higher thread count CPUs, and Ryzen in particular, may be disappointed.

There's still hope for optimizations that could improve the situation, but considering these problems continue more than a month after release, fixing them is obviously not the top priority.

It's a busy time of year, with lots of new games along with the shopping madness of Black Friday and what is quickly becoming Cyber Month. We're basically done with 2019 now at least, so hopefully that means a bit of a breather. Or perhaps not, as 2020 is shaping up to be even more exciting in terms of hardware and games that push into new levels of realism. It will be interesting to see what RTX 3080 and AMD's RX 5900 (aka Navi 20) bring to the table.

Jarred's love of computers dates back to the dark ages when his dad brought home a DOS 2.3 PC and he left his C-64 behind. He eventually built his first custom PC in 1990 with a 286 12MHz, only to discover it was already woefully outdated when Wing Commander was released a few months later. He holds a BS in Computer Science from Brigham Young University and has been working as a tech journalist since 2004, writing for AnandTech, Maximum PC, and PC Gamer. From the first S3 Virge '3D decelerators' to today's GPUs, Jarred keeps up with all the latest graphics trends and is the one to ask about game performance.
